爬取四大名著之《三国演义》

productor

import requests

res = requests.get("https://www.shicimingju.com/book/sanguoyanyi.html")

with open('text.html',mode='wb') as fw:

for line in res.iter_content():

fw.write(line)

customer

from bs4 import BeautifulSoup

import requests

soup = BeautifulSoup(open('text.html'), 'lxml')

download_info = (

{

'title': li.text,

'link': 'https://www.shicimingju.com' + li.find('a').attrs.get('href')

}

for li in soup.find(class_='book-mulu').find_all(name='li')

)

for item in download_info:

article_soup = BeautifulSoup(requests.get(item.get('link', None)).text, 'lxml')

article_div = article_soup.find(class_='bookmark-list')

with open('sgyy.txt', mode='ab+') as fw:

title = article_div.find(name='h1').text

content = article_div.find(class_='chapter_content').text

fw.write((title + '

' + content + '

').encode('utf-8'))

效果图

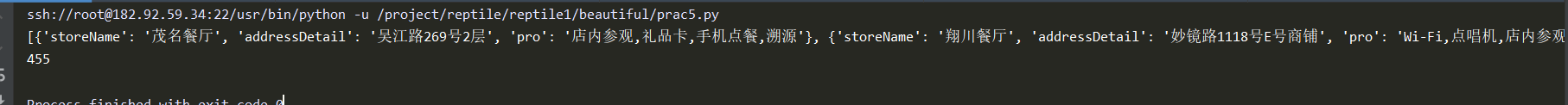

爬取上海市的肯德基门店信息

productor

import requests

res = requests.get("http://www.kfc.com.cn/kfccda/storelist/index.aspx")

with open('text2.html',mode='wb') as fw:

for line in res.iter_content():

fw.write(line)

customer

import requests

import json

header = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36',

'Referer': 'http://www.kfc.com.cn/kfccda/storelist/index.aspx',

}

res = requests.post(

"http://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx",

params={

'op': 'cname'

},

data={

'cname': '上海',

'pid': '',

'keyword':'',

'pageIndex': 1,

'pageSize': 500

},

headers=header

)

kfc_info = json.loads(res.text).get('Table1')

kfc_list = [

{

"storeName":kfc.get('storeName')+'餐厅',

"addressDetail":kfc.get("addressDetail"),

"pro":kfc.get("pro")

}

for kfc in kfc_info

]

print(kfc_list)

print(len(kfc_list)) #455