Two Kubernetes network plugins (Flannel and Calico) and their working modes

1. Flannel:

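Flannel has three working modes:

1. vxlan (tunnel scheme)

2. host-gw (routing scheme)

3. udp (encapsulates and decapsulates packets in user space; no longer used because of its poor performance)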

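vxlan mode:

In vxlan mode, flannel sets up a cni0 bridge and a flannel.1 tunnel endpoint on the node. The tunnel endpoint wraps each pod packet in an additional layer of encapsulation, and the encapsulated packet is then sent to the target node. Flannel also adds route entries for the other nodes' pod subnets to the local routing table (visible with the ip route command).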

[root@k8s-master1 ~]# ip route

default via 10.0.0.254 dev eth0

10.0.0.0/24 dev eth0 proto kernel scope link src 10.0.0.63

10.244.1.0/24 via 10.244.1.0 dev flannel.1 onlink

10.244.2.0/24 via 10.244.2.0 dev flannel.1 onlink

169.254.0.0/16 dev eth0 scope link metric 1002

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1
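To pick out only the routes that flannel installed, the ip route output above can be filtered on the flannel.1 device. The following is a minimal sketch: the function name flannel_routes is hypothetical, and it assumes the VXLAN interface keeps the name flannel.1 shown in the output above.

```shell
# Print the destination subnets of all routes that go through flannel.1.
# Reads `ip route` output on stdin.
# Assumption: the tunnel interface is named flannel.1, as in the output above.
flannel_routes() {
  awk '{
    for (i = 1; i < NF; i++)
      if ($i == "dev" && $(i + 1) == "flannel.1") print $1
  }'
}
```

Run as `ip route | flannel_routes`. On the master node above this would list 10.244.1.0/24 and 10.244.2.0/24, one pod subnet per remote node.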

1.1 Deploying and removing the Flannel network:

1. Install the flannel network:

wget https://www.chenleilei.net/soft/k8s/kube-flannel.yaml

kubectl apply -f kube-flannel.yaml

1.1 Verify the network:

1.1.1 Create an application

kubectl create deployment nginx --image=nginx

kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort

1.1.2 Check the pod:

[root@k8s-master1 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-f89759699-rcgh2 1/1 Running 0 52s 10.244.2.16 k8s-node2 <none> <none>

1.1.3 Test flannel network connectivity:

[root@k8s-master1 ~]# ping 10.244.2.16

PING 10.244.2.16 (10.244.2.16) 56(84) bytes of data.

64 bytes from 10.244.2.16: icmp_seq=1 ttl=63 time=0.865 ms

64 bytes from 10.244.2.16: icmp_seq=2 ttl=63 time=0.549 ms

1.1.4 Remove the flannel network:

[root@k8s-master1 ~]# ip route

default via 10.0.0.254 dev eth0

10.0.0.0/24 dev eth0 proto kernel scope link src 10.0.0.63

10.244.1.0/24 via 10.244.1.0 dev flannel.1 onlink # <--- flannel route

10.244.2.0/24 via 10.244.2.0 dev flannel.1 onlink # <--- flannel route

169.254.0.0/16 dev eth0 scope link metric 1002

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

[Method provided by the instructor: run on every server]

ip link delete cni0

ip link delete flannel.1

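After running these commands, check ip route to confirm that the flannel routes are gone. On a node that has not yet run any pod, the cni0 bridge typically does not exist, so ip link delete cni0 reports "Cannot find device"; this is harmless.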

[root@k8s-master1 ~]# ip link delete cni0

Cannot find device "cni0"

[root@k8s-master1 ~]# ip link delete flannel.1

[root@k8s-master1 ~]# ip route

default via 10.0.0.254 dev eth0

10.0.0.0/24 dev eth0 proto kernel scope link src 10.0.0.63

169.254.0.0/16 dev eth0 scope link metric 1002

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

1.1.5 Test

[root@k8s-master1 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-f89759699-rcgh2 1/1 Running 0 23m 10.244.2.16 k8s-node2 <none> <none>

[root@k8s-master1 ~]# ping 10.244.2.16

PING 10.244.2.16 (10.244.2.16) 56(84) bytes of data.

^C

--- 10.244.2.16 ping statistics ---

68 packets transmitted, 0 received, 100% packet loss, time 68199ms

At this point, with the flannel network removed, the nginx pod can no longer be reached.
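When this check is repeated on several nodes, it can help to reduce the ping summary to a single number. The following is a small sketch; the function name ping_loss is hypothetical, and it assumes the summary line has the iputils format shown above.

```shell
# Extract the packet-loss percentage from ping's summary line.
# Reads ping output on stdin and prints only the number, without the % sign.
# Assumption: the summary looks like "... 100% packet loss, time 68199ms".
ping_loss() {
  sed -n 's/.* \([0-9.]*\)% packet loss.*/\1/p'
}
```

For example, `ping -c 3 -W 1 10.244.2.16 | ping_loss` prints 100 while the pod network is down and 0 once connectivity is back.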

If a pod cannot be deleted while the network plugin is being removed, force the deletion with --grace-period=0 --force.

For example:

kubectl delete pod coredns-7ff77c879f-5cc29 -n kube-system --grace-period=0 --force

2. Deploying and removing the Calico network

Calico has two working modes:

1. ipip (tunnel scheme)

2. bgp (routing scheme)

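Note: public clouds may interfere with the routing scheme. Because the bgp mode writes pod routes into the routing table, which could affect the provider's existing network, some cloud providers prohibit it on their hosts.

Routing scheme: places some requirements on the existing network but gives the best performance. Packets are forwarded by plain routing, without an extra layer of encapsulation, so there is no encapsulation overhead. Prefer this scheme whenever the provider supports it. It requires the nodes to be reachable from one another at layer 2.

Tunnel scheme: places few requirements on the existing network. As long as the nodes can reach one another at layer 3, pods can generally communicate.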
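A quick way to judge whether the routing scheme is even a candidate is to check whether the node addresses sit in the same subnet. The sketch below is a heuristic only: sharing a subnet usually implies layer 2 reachability but does not guarantee it, and the function names ip_to_int and same_subnet are hypothetical.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( $1 * 16777216 + $2 * 65536 + $3 * 256 + $4 ))
)

# Succeed (exit status 0) if two addresses fall in the same subnet
# for the given prefix length, e.g. same_subnet 10.0.0.63 10.0.0.65 24.
# Assumption: same subnet is used here as a stand-in for layer 2 adjacency.
same_subnet() (
  a=$(ip_to_int "$1")
  b=$(ip_to_int "$2")
  bits=$(( 32 - $3 ))
  [ $(( a >> bits )) -eq $(( b >> bits )) ]
)
```

With the addresses used in these notes, `same_subnet 10.0.0.63 10.0.0.65 24` succeeds, so the routing scheme is worth considering; if the check fails for any pair of nodes, the tunnel scheme is the safer choice.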

Download the calico manifest:

Official address:

wget https://docs.projectcalico.org/manifests/calico.yaml

Author's personal mirror:

wget https://www.chenleilei.net/soft/k8s/calico.yaml

Note: the flannel network plugin must be removed before the calico network plugin is installed.

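# Remove the flannel network (delete the flannel resources first so that flanneld no longer manages the interfaces):

kubectl delete -f kube-flannel.yaml

ip link delete cni0

ip link delete flannel.1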

# After running these commands, check ip route to confirm that the flannel routes are gone.

[root@k8s-master1 ~]# ip link delete cni0

Cannot find device "cni0"

[root@k8s-master1 ~]# ip link delete flannel.1

[root@k8s-master1 ~]# ip route

default via 10.0.0.254 dev eth0

10.0.0.0/24 dev eth0 proto kernel scope link src 10.0.0.63

169.254.0.0/16 dev eth0 scope link metric 1002

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

Calico configuration:

1. Change the default pod network CIDR:

Find the following lines in calico.yaml:

# - name: CALICO_IPV4POOL_CIDR

# value: "192.168.0.0/16"

Uncomment them and set the value to the pod network CIDR that was used when Kubernetes was initialized:

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16"
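The same change can be scripted instead of edited by hand. The following is a minimal sketch: the function name set_calico_cidr is hypothetical, and it assumes the manifest still contains the commented-out default "192.168.0.0/16" exactly as shown above. It only strips the leading "# " so that the original YAML indentation is preserved.

```shell
# Uncomment CALICO_IPV4POOL_CIDR and replace the default pool with the CIDR in $1.
# Reads the manifest on stdin and writes the modified manifest to stdout.
# Assumption: the default value in the manifest is "192.168.0.0/16".
set_calico_cidr() {
  sed \
    -e 's|^\( *\)# \(- name: CALICO_IPV4POOL_CIDR\)|\1\2|' \
    -e 's|^\( *\)# \( *value: \)"192\.168\.0\.0/16"|\1\2"'"$1"'"|'
}
```

For example, `set_calico_cidr 10.244.0.0/16 < calico.yaml > calico-custom.yaml` writes a modified copy (calico-custom.yaml is an illustrative file name) and leaves the downloaded manifest untouched for comparison.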

2. Install the calico network plugin by applying the yaml file

[root@k8s-master1 ~]# kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

3. Remove the calico network plugin.

[root@k8s-master1 ~]# kubectl delete -f calico.yaml

Check:

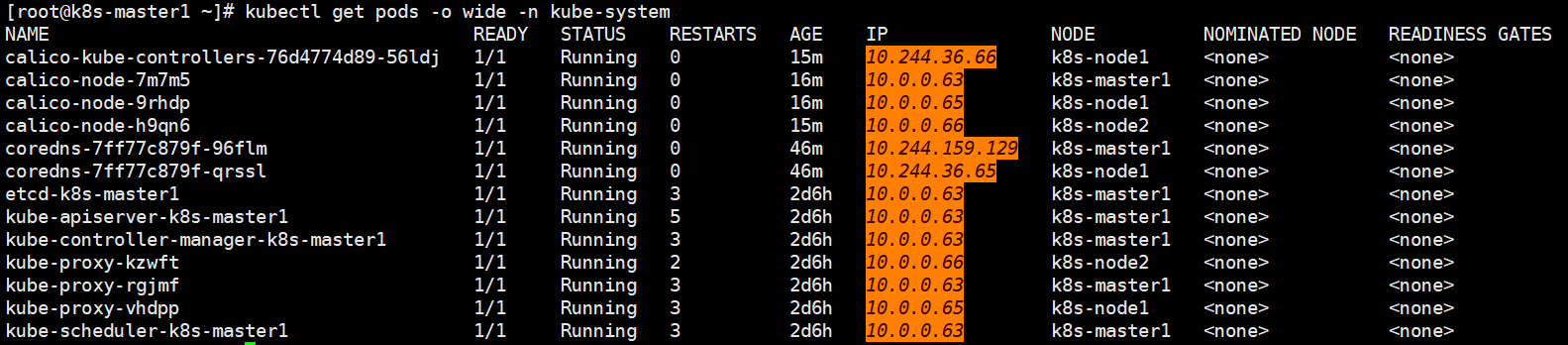

kubectl get pods -o wide -n kube-system
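On a busy cluster the kube-system listing can be long, so it may help to print only the pods that are not yet up. This is a rough sketch: the function name pods_not_running is hypothetical, and it looks only at the STATUS column, so a pod that is Running but not yet Ready is not reported.

```shell
# Print the names of pods whose STATUS is neither "Running" nor "Completed".
# Reads `kubectl get pods` output on stdin; the first line is the header.
# Assumption: STATUS is the third column, as in the default and -o wide layouts.
pods_not_running() {
  awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1 }'
}
```

Run as `kubectl get pods -n kube-system | pods_not_running`; empty output means every listed pod reports Running or Completed.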

2.1 Verification and log check:

Create an application:

kubectl create deployment nginx --image=nginx

kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort

Check the logs:

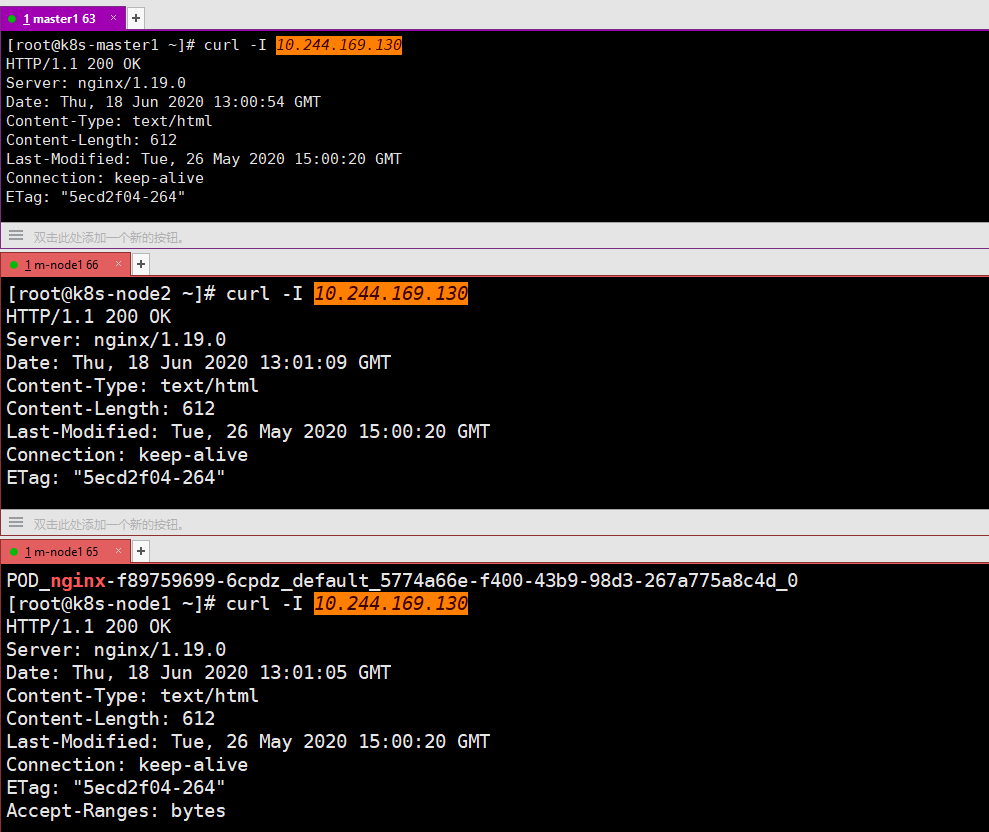

[root@k8s-master1 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-f89759699-qlckm 1/1 Running 0 95s 10.244.169.130 k8s-node2 <none> <none>

[root@k8s-master1 ~]# curl -I 10.244.169.130

HTTP/1.1 200 OK

Server: nginx/1.19.0

Date: Thu, 18 Jun 2020 12:41:58 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 26 May 2020 15:00:20 GMT

Connection: keep-alive

ETag: "5ecd2f04-264"

Accept-Ranges: bytes

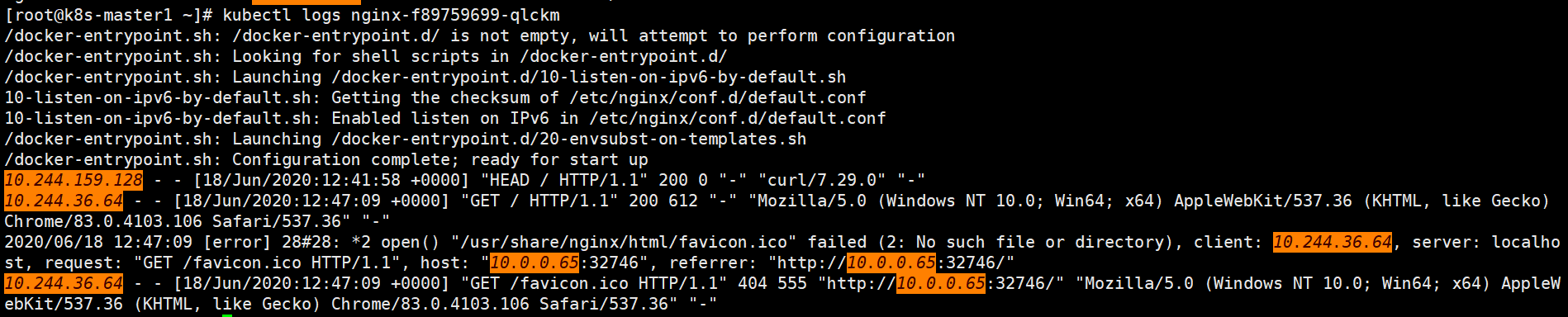

[root@k8s-master1 ~]# kubectl logs nginx-f89759699-qlckm

10.244.36.64 - - [18/Jun/2020:12:47:09 +0000] "GET /favicon.ico HTTP/1.1" 404 555 "http://10.0.0.65:32746/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.106 Safari/537.36" "-"

The pod answers requests without problems, which shows that the calico network was deployed successfully.