I. The Problem of Overfitting

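Underfitting, a good fit, and overfitting (the cost on the training set is close to 0, but the model generalizes poorly to new examples).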
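To make the "training cost close to 0, poor generalization" case concrete, here is a small Octave/MATLAB sketch on made-up data. The five points and the query point x = 6 are hypothetical. A degree-4 polynomial has as many parameters as there are examples, so it fits the training set exactly, yet it extrapolates far away from the trend that a straight line captures.

```octave
% Sketch: overfitting with polynomial fits (hypothetical toy data).
% Five noisy samples of a roughly linear trend.
x = (0:4)';
y = [0.1; 1.2; 1.9; 3.2; 3.9];
m = numel(y);

p1 = polyfit(x, y, 1);   % 2 parameters: a straight line
p4 = polyfit(x, y, 4);   % 5 parameters: passes through every training point

% Training cost J = 1/(2m) * sum of squared errors
J1 = sum((polyval(p1, x) - y).^2) / (2*m);
J4 = sum((polyval(p4, x) - y).^2) / (2*m);
fprintf('training cost, degree 1: %.4f\n', J1);
fprintf('training cost, degree 4: %.2e\n', J4);

% Prediction for an input outside the training data
xq = 6;
fprintf('prediction at x = %d: degree 1 -> %.2f, degree 4 -> %.2f\n', ...
        xq, polyval(p1, xq), polyval(p4, xq));
```

On this data the line has a training cost of 0.0076 and predicts 5.9 at x = 6, while the degree-4 fit has essentially zero training cost but predicts -12.3 there.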

When there are few training examples but many features, overfitting is likely. How can it be addressed?

1. Use an algorithm to automatically discard some of the features;

2. Regularization: keep all the features, but reduce the magnitude of θ.
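A minimal sketch of remedy 2, assuming the regularized normal equation for linear regression, θ = (XᵀX + λL)⁻¹Xᵀy with L = diag(0, 1, …, 1) so that θ0 is not penalized. The data and the λ values are hypothetical. All five polynomial features are kept; increasing λ only shrinks the size of θ1…θn.

```octave
% Sketch (hypothetical toy data): regularization keeps every feature but
% shrinks the parameters. theta(1) (= theta_0) is left unpenalized.
x = (0:4)';
y = [0.1; 1.2; 1.9; 3.2; 3.9];
X = [ones(size(x)), x, x.^2, x.^3, x.^4];   % degree-4 polynomial features
n = size(X, 2);
L = diag([0, ones(1, n - 1)]);              % zero on the theta_0 diagonal entry

lambdas = [0, 0.1, 1, 10];
normTheta = zeros(size(lambdas));
for k = 1:numel(lambdas)
  % Regularized normal equation, solved with backslash instead of inv()
  theta = (X' * X + lambdas(k) * L) \ (X' * y);
  normTheta(k) = norm(theta(2:end));        % size of the penalized parameters
  fprintf('lambda = %5.1f  ->  ||theta_1..n|| = %.4f\n', lambdas(k), normTheta(k));
end
```

The printed norms decrease as λ grows, which is the "reduce the magnitude of θ" effect; no feature is removed from X.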

II. Regularized Linear Regression

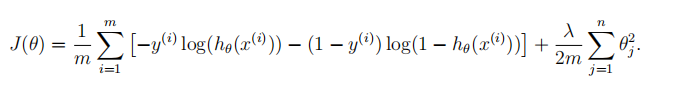
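The image is not reproduced as text. As a sketch, assuming the standard course formulation J(θ) = 1/(2m) [ Σ(hθ(x⁽ⁱ⁾) − y⁽ⁱ⁾)² + λ Σ θj² ] with the second sum over j = 1..n, the vectorized cost, gradient and a plain batch gradient-descent loop might look like this in Octave/MATLAB. The names costReg, gradReg and mask, the learning rate and the data are all hypothetical.

```octave
% Sketch of regularized linear regression (hypothetical names and data).
X = [ones(5, 1), (0:4)'];        % intercept column plus one feature
y = [0.1; 1.2; 1.9; 3.2; 3.9];
m = numel(y);
lambda = 1;

% 0 for theta(1) (= theta_0), 1 for every other parameter
mask = [0; ones(size(X, 2) - 1, 1)];

% Cost and gradient; the mask keeps theta_0 out of the penalty
costReg = @(theta) (sum((X*theta - y).^2) + lambda * sum((mask .* theta).^2)) / (2*m);
gradReg = @(theta) (X' * (X*theta - y) + lambda * (mask .* theta)) / m;

% Plain batch gradient descent
alpha = 0.1;                     % learning rate (hypothetical)
theta = zeros(size(X, 2), 1);
for iter = 1:2000
  theta = theta - alpha * gradReg(theta);
end
fprintf('J = %.6f, theta = [%.4f; %.4f]\n', costReg(theta), theta(1), theta(2));
```

Multiplying by mask zeroes the first component of the penalty in both the cost and the gradient, which is one way to respect the convention that θ0 is not regularized.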

III. Regularized Logistic Regression

Programming Assignment

1. plotData.m

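The version I wrote for the first part of the assignment could not produce the plot needed in this part, so I modified the code.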

    function plotData(X, y)
    %PLOTDATA Plots the data points X and y into a new figure
    %   PLOTDATA(x,y) plots the data points with + for the positive examples
    %   and o for the negative examples. X is assumed to be a Mx2 matrix.

    % Create New Figure
    figure; hold on;

    % ====================== YOUR CODE HERE ======================
    % Instructions: Plot the positive and negative examples on a
    %               2D plot, using the option 'k+' for the positive
    %               examples and 'ko' for the negative examples.
    %

    % Copy the negative (y == 0) and positive (y == 1) examples into
    % two separate matrices, one row at a time
    n0 = 1; n1 = 1;
    for i = 1:length(y)
        if y(i) == 0
            matrix0(n0,:) = X(i,:);
            n0 = n0 + 1;
        end
        if y(i) == 1
            matrix1(n1,:) = X(i,:);
            n1 = n1 + 1;
        end
    end

    % Negative examples: yellow-filled circles; positive examples: black crosses
    plot(matrix0(:,1), matrix0(:,2), 'ko', 'MarkerFaceColor', 'y', ...
         'MarkerSize', 7);
    plot(matrix1(:,1), matrix1(:,2), 'k+', 'LineWidth', 2, ...
         'MarkerSize', 7);

    % =========================================================================

    hold off;

    end

2. costFunctionReg.m

The cost function for regularized logistic regression

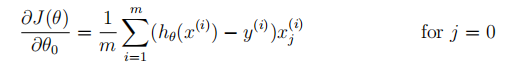

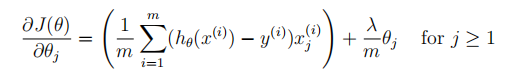

The gradient
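The two images are not available as text. For reference, the regularized logistic regression cost and gradient as they are usually stated in this course (and as implemented in costFunctionReg.m), with hθ(x) = g(θᵀx) and g the sigmoid function, are:

```latex
\begin{aligned}
J(\theta) &= \frac{1}{m}\sum_{i=1}^{m}\Big[-y^{(i)}\log h_\theta\big(x^{(i)}\big) - \big(1-y^{(i)}\big)\log\Big(1-h_\theta\big(x^{(i)}\big)\Big)\Big] + \frac{\lambda}{2m}\sum_{j=1}^{n}\theta_j^{2} \\
\frac{\partial J(\theta)}{\partial \theta_0} &= \frac{1}{m}\sum_{i=1}^{m}\Big(h_\theta\big(x^{(i)}\big) - y^{(i)}\Big)x_0^{(i)} \\
\frac{\partial J(\theta)}{\partial \theta_j} &= \frac{1}{m}\sum_{i=1}^{m}\Big(h_\theta\big(x^{(i)}\big) - y^{(i)}\Big)x_j^{(i)} + \frac{\lambda}{m}\theta_j, \qquad j \ge 1
\end{aligned}
```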

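Note that, by convention, the θ in the regularization term of the cost function does not include θ0. Because MATLAB indices start at 1, θ0 is stored in theta(1), so theta(1) is the element to leave out. The same applies to the gradient: grad(1) gets no λ term.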
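A small check of this convention, using hypothetical values for theta, lambda and m: the expression theta'*theta - theta(1).^2 used in costFunctionReg.m equals the sum of squares of theta(2:end), and the gradient's penalty term has a zero in its first position.

```octave
% Sketch (hypothetical values): theta(1) holds theta_0 and is not penalized.
theta = [5; 1; 2];
lambda = 1;
m = 10;

% Two equivalent ways to write the penalty in the cost
reg1 = lambda * (theta' * theta - theta(1).^2) / (2*m);  % form used in costFunctionReg.m
reg2 = lambda * sum(theta(2:end).^2) / (2*m);            % explicit slice over theta_1..theta_n

% Penalty part of the gradient: no lambda term for the theta_0 component
gradPenalty = (lambda / m) * [0; theta(2:end)];

fprintf('reg1 = %.4f, reg2 = %.4f\n', reg1, reg2);
disp(gradPenalty');
```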

    function [J, grad] = costFunctionReg(theta, X, y, lambda)
    %COSTFUNCTIONREG Compute cost and gradient for logistic regression with regularization
    %   J = COSTFUNCTIONREG(theta, X, y, lambda) computes the cost of using
    %   theta as the parameter for regularized logistic regression and the
    %   gradient of the cost w.r.t. to the parameters.

    % Initialize some useful values
    m = length(y); % number of training examples

    % You need to return the following variables correctly
    J = 0;
    grad = zeros(size(theta));

    % ====================== YOUR CODE HERE ======================
    % Instructions: Compute the cost of a particular choice of theta.
    %               You should set J to the cost.
    %               Compute the partial derivatives and set grad to the partial
    %               derivatives of the cost w.r.t. each parameter in theta

    h = sigmoid(X*theta);

    % Unregularized cost from costFunction.m (first part of the assignment)
    [J, grad] = costFunction(theta, X, y);

    % Add the penalty, leaving out theta(1) (= theta_0)
    J = J + lambda*(theta'*theta - theta(1).^2)/2/m;

    % Regularize every component, then overwrite grad(1) without the penalty
    grad = X'*(h-y)/m + lambda*theta/m;
    temp = (X(:,1))'*(h-y)/m;
    grad(1,1) = temp;

    % =============================================================

    end
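costFunctionReg.m relies on sigmoid.m and costFunction.m from the first part of the assignment. As a self-contained sketch (the sigmoid is inlined as an anonymous function; the data, the names costReg, gradReg and mask, and the finite-difference step are hypothetical), the same cost and gradient can be written with a mask for θ0 and checked against central finite differences:

```octave
% Self-contained sketch of the regularized logistic cost and gradient.
sigmoid = @(z) 1 ./ (1 + exp(-z));

% Hypothetical data: intercept column plus two features
X = [ones(4, 1), [0.5 1.0; -1.0 0.3; 0.2 -0.7; 1.5 1.1]];
y = [1; 0; 0; 1];
lambda = 1;
m = numel(y);
mask = [0; ones(size(X, 2) - 1, 1)];   % excludes theta(1) (= theta_0) from the penalty

costReg = @(t) -(y' * log(sigmoid(X*t)) + (1 - y)' * log(1 - sigmoid(X*t))) / m ...
               + lambda / (2*m) * sum((mask .* t).^2);
gradReg = @(t) X' * (sigmoid(X*t) - y) / m + (lambda / m) * (mask .* t);

% Compare the analytic gradient with central finite differences
theta = [0.1; -0.2; 0.3];
step = 1e-5;
numGrad = zeros(size(theta));
for j = 1:numel(theta)
  e = zeros(size(theta));
  e(j) = step;
  numGrad(j) = (costReg(theta + e) - costReg(theta - e)) / (2*step);
end
fprintf('max |analytic - numeric| = %.2e\n', max(abs(gradReg(theta) - numGrad)));
fprintf('J at theta = 0: %.6f\n', costReg(zeros(3, 1)));
```

At θ = 0 every prediction is 0.5, so the cost is log 2 ≈ 0.693 whatever the data, and the penalty contributes nothing.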