kylin构建报错,日志如下:

java.lang.RuntimeException: cannot get HiveTableMeta at org.apache.kylin.source.hive.HiveTable.<init>(HiveTable.java:50) at org.apache.kylin.source.hive.HiveSource.createReadableTable(HiveSource.java:65) at org.apache.kylin.source.SourceManager.createReadableTable(SourceManager.java:144) at org.apache.kylin.cube.CubeManager$DictionaryAssist.buildSnapshotTable(CubeManager.java:1129) at org.apache.kylin.cube.CubeManager.buildSnapshotTable(CubeManager.java:1046) at org.apache.kylin.cube.cli.DictionaryGeneratorCLI.processSegment(DictionaryGeneratorCLI.java:90) at org.apache.kylin.cube.cli.DictionaryGeneratorCLI.processSegment(DictionaryGeneratorCLI.java:49) at org.apache.kylin.engine.mr.steps.CreateDictionaryJob.run(CreateDictionaryJob.java:71) at org.apache.kylin.engine.mr.MRUtil.runMRJob(MRUtil.java:92) at org.apache.kylin.engine.mr.common.HadoopShellExecutable.doWork(HadoopShellExecutable.java:63) at org.apache.kylin.job.execution.AbstractExecutable.execute(AbstractExecutable.java:162) at org.apache.kylin.job.execution.DefaultChainedExecutable.doWork(DefaultChainedExecutable.java:69) at org.apache.kylin.job.execution.AbstractExecutable.execute(AbstractExecutable.java:162) at org.apache.kylin.job.impl.threadpool.DefaultScheduler$JobRunner.run(DefaultScheduler.java:307) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) at java.lang.Thread.run(Thread.java:745) Caused by: MetaException(message:javax.jdo.JDOUserException: The query returned more than one instance BUT either unique is set to true or only aggregates are to be returned, so should have returned one result maximum at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:636) at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:388) at org.datanucleus.api.jdo.JDOQuery.execute(JDOQuery.java:238) at org.apache.hadoop.hive.metastore.ObjectStore.getMTable(ObjectStore.java:1149) at org.apache.hadoop.hive.metastore.ObjectStore.getTable(ObjectStore.java:1000) at sun.reflect.GeneratedMethodAccessor2.invoke(Unknown Source) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:111) at com.sun.proxy.$Proxy17.getTable(Unknown Source) at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.get_table_core(HiveMetaStore.java:1743) at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.get_table(HiveMetaStore.java:1698) at sun.reflect.GeneratedMethodAccessor3.invoke(Unknown Source) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:138) at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:99) at com.sun.proxy.$Proxy19.get_table(Unknown Source) at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$get_table.getResult(ThriftHiveMetastore.java:9869) at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$get_table.getResult(ThriftHiveMetastore.java:9853) at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39) at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:110) at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:106) at java.security.AccessController.doPrivileged(Native Method) at javax.security.auth.Subject.doAs(Subject.java:422) at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657) at org.apache.hadoop.hive.metastore.TUGIBasedProcessor.process(TUGIBasedProcessor.java:118) at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) at java.lang.Thread.run(Thread.java:745) NestedThrowablesStackTrace: The query returned more than one instance BUT either unique is set to true or only aggregates are to be returned, so should have returned one result maximum org.datanucleus.store.query.QueryNotUniqueException: The query returned more than one instance BUT either unique is set to true or only aggregates are to be returned, so should have returned one result maximum at org.datanucleus.store.query.Query.executeQuery(Query.java:1879) at org.datanucleus.store.query.Query.executeWithArray(Query.java:1733) at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:365) at org.datanucleus.api.jdo.JDOQuery.execute(JDOQuery.java:238) at org.apache.hadoop.hive.metastore.ObjectStore.getMTable(ObjectStore.java:1149) at org.apache.hadoop.hive.metastore.ObjectStore.getTable(ObjectStore.java:1000) at sun.reflect.GeneratedMethodAccessor2.invoke(Unknown Source) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:111) at com.sun.proxy.$Proxy17.getTable(Unknown Source) at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.get_table_core(HiveMetaStore.java:1743) at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.get_table(HiveMetaStore.java:1698) at sun.reflect.GeneratedMethodAccessor3.invoke(Unknown Source) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:138) at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:99) at com.sun.proxy.$Proxy19.get_table(Unknown Source) at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$get_table.getResult(ThriftHiveMetastore.java:9869) at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$get_table.getResult(ThriftHiveMetastore.java:9853) at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39) at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:110) at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:106) at java.security.AccessController.doPrivileged(Native Method) at javax.security.auth.Subject.doAs(Subject.java:422) at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657) at org.apache.hadoop.hive.metastore.TUGIBasedProcessor.process(TUGIBasedProcessor.java:118) at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) at java.lang.Thread.run(Thread.java:745) ) at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$get_table_result$get_table_resultStandardScheme.read(ThriftHiveMetastore.java:45060) at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$get_table_result$get_table_resultStandardScheme.read(ThriftHiveMetastore.java:45037) at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$get_table_result.read(ThriftHiveMetastore.java:44968) at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:86) at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.recv_get_table(ThriftHiveMetastore.java:1282) at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.get_table(ThriftHiveMetastore.java:1268) at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.getTable(HiveMetaStoreClient.java:1266) at org.apache.kylin.source.hive.CLIHiveClient.getHiveTableMeta(CLIHiveClient.java:78) at org.apache.kylin.source.hive.HiveTable.<init>(HiveTable.java:48) ... 16 more

从日志上看报错:返回了多条记录?

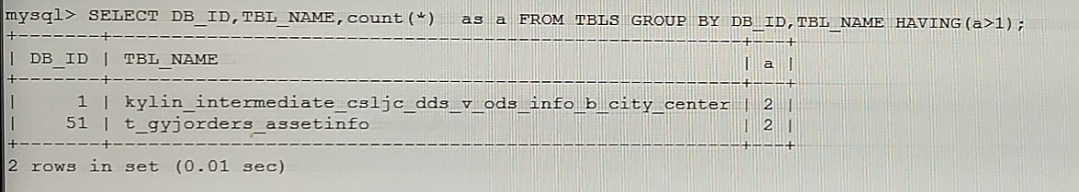

从hive的元数据查看有没有一个表对应多条记录,果真如此,发现kylin的一张表对应2条记录

SELECT DB_ID,TBL_NAME,count(*) as a FROM TBLS GROUP BY DB_ID,TBL_NAME HAVING(a>1)

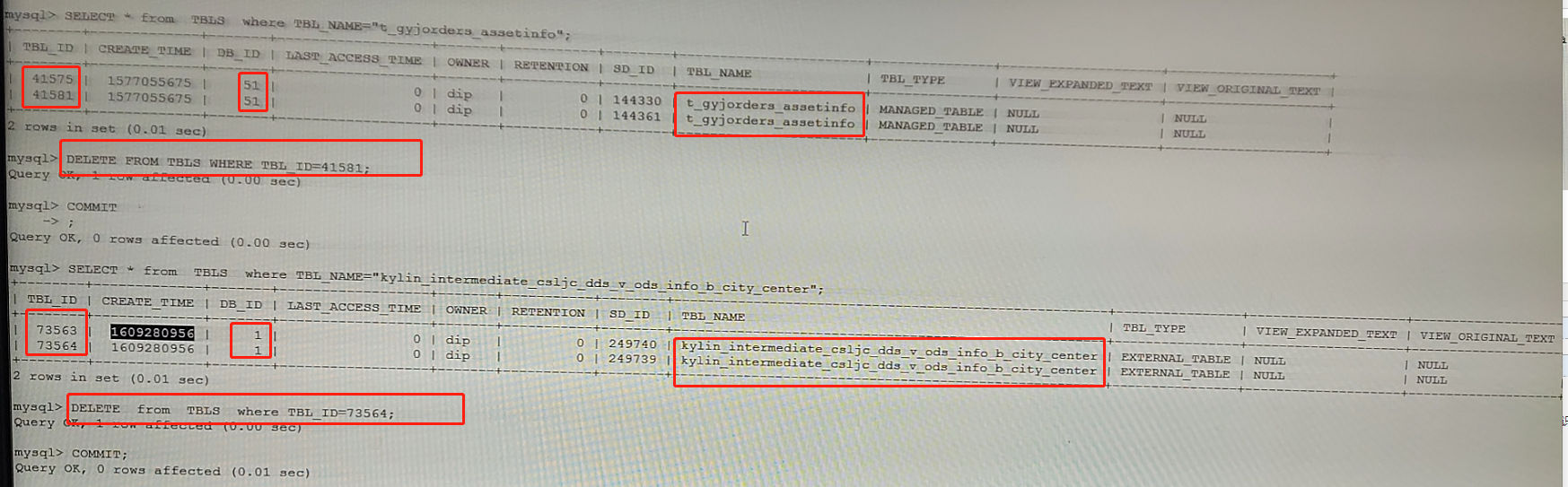

从hive中查看这2张表,发现这2张表均报错

从TBLS表中同一个DB_ID中重复的表记录删除一个(我们删除了TBL_ID较大的那个)

从hive中可以查询出数据,kylin恢复