I. Introduction to Nova

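Nova and Swift are the two oldest OpenStack components. Nova runs on two kinds of nodes: controller nodes and compute nodes. On a compute node, the nova-compute service creates virtual machines by calling KVM through libvirt. Nova's components talk to each other through a RabbitMQ message queue. The components and their functions are as follows: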

1.1: nova-api

nova-api accepts and responds to Compute API calls from end users. It is the entry point to Nova.

1.2: nova-scheduler

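In OpenStack, the nova-scheduler module decides which host (compute node) a virtual machine is created on. Scheduling a virtual machine onto a physical node takes two steps:

Filtering (filter): narrow the hosts down to those that are able to run the virtual machine.

Weighing (weight): rank the remaining hosts by weight and place the virtual machine on the host with the highest weight. By default, the weight is based on each host's available resources.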
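The two phases can be illustrated with a small shell sketch. It is a toy model, not nova-scheduler's code. The host list, its three-column layout and the function name `pick_host` are hypothetical. The filter checks only free RAM and vCPUs, and the weigher simply prefers the host with the most free RAM.

```bash
# Toy model of the scheduler's filter + weigh phases (hypothetical, NOT Nova's code).
# stdin: one host per line: "<host> <free_ram_mb> <free_vcpus>"
# $1 = requested RAM in MB, $2 = requested vCPUs
# Prints the chosen host, or nothing if no host passes the filter.
pick_host() {
  local req_ram=$1 req_vcpus=$2
  # Filter: keep only the hosts that can fit the request.
  # Weigh: sort the survivors by free RAM, largest first, and keep the top one.
  awk -v r="$req_ram" -v c="$req_vcpus" 'NF >= 3 && $2 >= r && $3 >= c' |
    sort -k2,2nr |
    head -n 1 |
    awk '{ print $1 }'
}

# Example:
#   printf 'compute1 2048 2\ncompute2 8192 4\ncompute3 16384 1\n' | pick_host 4096 2
# compute1 fails the RAM filter and compute3 fails the vCPU filter, so compute2 wins.
```

Nova's real filters and weighers are pluggable Python classes. The sketch only mirrors the filter-then-weigh order described above.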

II. Deploying the Nova controller nodes

Official documentation: https://docs.openstack.org/ocata/zh_CN/install-guide-rdo/nova-controller-install.html

1. Prepare the database

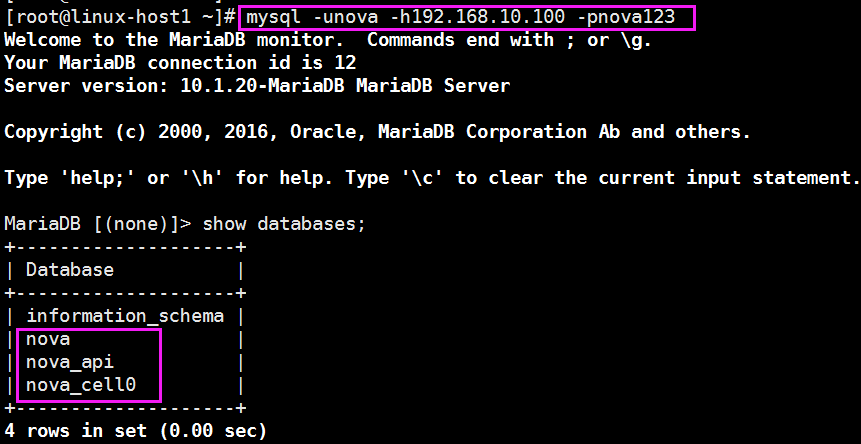

1. Using the database client, connect to the database server as the root user, and create the nova_api, nova and nova_cell0 databases:

[root@openstack-1 ~]# mysql -uroot -pcentos
MariaDB [(none)]> CREATE DATABASE nova_api;
MariaDB [(none)]> CREATE DATABASE nova;
MariaDB [(none)]> CREATE DATABASE nova_cell0;

2. Grant the nova user proper access to the databases:

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'nova123';
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'nova123';
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'nova123';
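If you prefer not to type the statements one by one, the minimal sketch below prints the same SQL for all three databases, ready to be piped into the mysql client. The function name `gen_nova_db_sql` is hypothetical. The `IF NOT EXISTS` clause is an addition that makes a re-run harmless.

```bash
# Hypothetical helper: prints the CREATE DATABASE and GRANT statements from steps 1 and 2.
# $1 = password for the 'nova' database user (nova123 in this guide).
gen_nova_db_sql() {
  local pass=$1 db
  for db in nova_api nova nova_cell0; do
    printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
    # '%%' makes printf emit a literal '%' (the "any host" wildcard in MariaDB).
    printf "GRANT ALL PRIVILEGES ON %s.* TO 'nova'@'%%' IDENTIFIED BY '%s';\n" "$db" "$pass"
  done
}

# Usage on controller 1:
#   gen_nova_db_sql nova123 | mysql -uroot -p
```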

3. On controller 1, verify that the nova user can access the databases.
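One way to run this check is sketched below. It assumes the database is reachable as openstack-vip.net, as in the nova.conf settings later in this guide, and uses the nova123 password from step 2. The helper `check_nova_dbs` is hypothetical. It only confirms that the three databases are visible to the nova user.

```bash
# Hypothetical helper: reads one database name per line on stdin and checks
# that the three Nova databases are all present.
check_nova_dbs() {
  local dbs db
  dbs=$(cat)
  for db in nova_api nova nova_cell0; do
    printf '%s\n' "$dbs" | grep -qx "$db" || { echo "missing database: $db" >&2; return 1; }
  done
  echo "all nova databases present"
}

# Usage (-N suppresses the column header):
#   mysql -unova -pnova123 -h openstack-vip.net -N -e 'SHOW DATABASES;' | check_nova_dbs
```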

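2. Create the nova user on controller 1

1. Source the admin credentials to gain access to admin-only CLI commands:

[root@openstack-1 ~]# . admin.sh    # load the admin credentials
[root@openstack-1 ~]# openstack user create --domain default --password-prompt nova    # create the nova user; its password is also nova

2. Add the admin role to the nova user:

# openstack role add --project service --user nova admin

3. Create the nova service entity:

# openstack service create --name nova --description "OpenStack Compute" compute

4. Create the public, internal and admin endpoints. In these commands, openstack-vip.net is a domain name that the hosts file resolves to the VIP. Change it to match your environment.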

$ openstack endpoint create --region RegionOne compute public http://openstack-vip.net:8774/v2.1
+--------------+-------------------------------------------+
| Field        | Value                                     |
+--------------+-------------------------------------------+
| enabled      | True                                      |
| id           | 3c1caa473bfe4390a11e7177894bcc7b          |
| interface    | public                                    |
| region       | RegionOne                                 |
| region_id    | RegionOne                                 |
| service_id   | 060d59eac51b4594815603d75a00aba2          |
| service_name | nova                                      |
| service_type | compute                                   |
| url          | http://controller:8774/v2.1               |
+--------------+-------------------------------------------+

$ openstack endpoint create --region RegionOne compute internal http://openstack-vip.net:8774/v2.1
+--------------+-------------------------------------------+
| Field        | Value                                     |
+--------------+-------------------------------------------+
| enabled      | True                                      |
| id           | e3c918de680746a586eac1f2d9bc10ab          |
| interface    | internal                                  |
| region       | RegionOne                                 |
| region_id    | RegionOne                                 |
| service_id   | 060d59eac51b4594815603d75a00aba2          |
| service_name | nova                                      |
| service_type | compute                                   |
| url          | http://controller:8774/v2.1               |
+--------------+-------------------------------------------+

$ openstack endpoint create --region RegionOne compute admin http://openstack-vip.net:8774/v2.1
+--------------+-------------------------------------------+
| Field        | Value                                     |
+--------------+-------------------------------------------+
| enabled      | True                                      |
| id           | 38f7af91666a47cfb97b4dc790b94424          |
| interface    | admin                                     |
| region       | RegionOne                                 |
| region_id    | RegionOne                                 |
| service_id   | 060d59eac51b4594815603d75a00aba2          |
| service_name | nova                                      |
| service_type | compute                                   |
| url          | http://controller:8774/v2.1               |
+--------------+-------------------------------------------+
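The three commands differ only in the interface name, so they can be wrapped in a loop. This is a sketch: the function name `create_endpoints` and the `DRY_RUN` variable are hypothetical, and the admin credentials must already be loaded.

```bash
# Hypothetical helper: creates the public, internal and admin endpoints for one service.
# $1 = service type (compute, placement), $2 = endpoint URL
# With DRY_RUN set to any non-empty value, the commands are printed instead of run.
create_endpoints() {
  local service=$1 url=$2 iface
  for iface in public internal admin; do
    ${DRY_RUN:+echo} openstack endpoint create --region RegionOne "$service" "$iface" "$url"
  done
}

# Usage (after ". admin.sh"):
#   create_endpoints compute   http://openstack-vip.net:8774/v2.1
#   create_endpoints placement http://openstack-vip.net:8778
```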

5. If an endpoint was created twice, delete the duplicate:

# openstack endpoint list          # look up the endpoint IDs first
# openstack endpoint delete ID     # delete the duplicate by its ID
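To spot duplicates without reading the whole table, the sketch below prints the ID of every endpoint that repeats a service/interface pair already seen. It assumes a single region (RegionOne). The function name is hypothetical. Check each printed ID before deleting it.

```bash
# Hypothetical helper: reads "ID Service-Name Interface" lines and prints the IDs of
# every endpoint after the first one for the same service/interface pair.
find_dup_endpoints() {
  # seen[...]++ is 0 (false) the first time a pair appears and non-zero afterwards.
  awk 'NF >= 3 && seen[$2 " " $3]++ { print $1 }'
}

# Usage:
#   openstack endpoint list -f value -c ID -c "Service Name" -c Interface | find_dup_endpoints
# Then inspect each candidate with "openstack endpoint show ID" before
# running "openstack endpoint delete ID".
```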

6. Create the placement user. Its password is also placement:

$ openstack user create --domain default --password-prompt placement
User Password:
Repeat User Password:
+---------------------+----------------------------------+
| Field               | Value                            |
+---------------------+----------------------------------+
| domain_id           | default                          |
| enabled             | True                             |
| id                  | fa742015a6494a949f67629884fc7ec8 |
| name                | placement                        |
| options             | {}                               |
| password_expires_at | None                             |
+---------------------+----------------------------------+

7. Add the admin role to the placement user in the service project:

$ openstack role add --project service --user placement admin

8. Create the Placement API entry in the service catalog:

$ openstack service create --name placement --description "Placement API" placement
+-------------+----------------------------------+
| Field       | Value                            |
+-------------+----------------------------------+
| description | Placement API                    |
| enabled     | True                             |
| id          | 2d1a27022e6e4185b86adac4444c495f |
| name        | placement                        |
| type        | placement                        |
+-------------+----------------------------------+

9. Create the Placement API service endpoints. In all three commands, replace controller with the domain that resolves to the VIP:

$ openstack endpoint create --region RegionOne placement public http://controller:8778
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 2b1b2637908b4137a9c2e0470487cbc0 |
| interface    | public                           |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | 2d1a27022e6e4185b86adac4444c495f |
| service_name | placement                        |
| service_type | placement                        |
| url          | http://controller:8778           |
+--------------+----------------------------------+

$ openstack endpoint create --region RegionOne placement internal http://controller:8778
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 02bcda9a150a4bd7993ff4879df971ab |
| interface    | internal                         |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | 2d1a27022e6e4185b86adac4444c495f |
| service_name | placement                        |
| service_type | placement                        |
| url          | http://controller:8778           |
+--------------+----------------------------------+

$ openstack endpoint create --region RegionOne placement admin http://controller:8778
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 3d71177b9e0f406f98cbff198d74b182 |
| interface    | admin                            |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | 2d1a27022e6e4185b86adac4444c495f |
| service_name | placement                        |
| service_type | placement                        |
| url          | http://controller:8778           |
+--------------+----------------------------------+

III. Configuring the HAProxy server

1. Edit the HAProxy configuration file (vim /etc/haproxy/haproxy.cfg). Add two listeners on new ports that forward the VIP to controller 1, with controller 2 as the backup:

listen openstack_nova_port_8774
    bind 192.168.7.248:8774
    mode tcp
    log global
    server 192.168.7.100 192.168.7.100:8774 check inter 3000 fall 3 rise 5
    server 192.168.7.101 192.168.7.101:8774 check inter 3000 fall 3 rise 5 backup

listen openstack_nova_port_8778
    bind 192.168.7.248:8778
    mode tcp
    log global
    server 192.168.7.100 192.168.7.100:8778 check inter 3000 fall 3 rise 5
    server 192.168.7.101 192.168.7.101:8778 check inter 3000 fall 3 rise 5 backup
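The listen blocks differ only in the port, so they can be generated instead of typed. A minimal sketch follows. The variable and function names are hypothetical, and the addresses are the VIP and the two controllers used in this guide. Running `haproxy -c -f` before the restart catches syntax errors.

```bash
# Hypothetical helper: prints one HAProxy "listen" block for the given port,
# with the first controller active and the second one as backup.
VIP=192.168.7.248
PRIMARY=192.168.7.100
BACKUP=192.168.7.101

gen_listen() {
  local port=$1
  cat <<EOF
listen openstack_nova_port_${port}
    bind ${VIP}:${port}
    mode tcp
    log global
    server ${PRIMARY} ${PRIMARY}:${port} check inter 3000 fall 3 rise 5
    server ${BACKUP} ${BACKUP}:${port} check inter 3000 fall 3 rise 5 backup
EOF
}

# Usage:
#   for p in 8774 8778; do gen_listen "$p"; done >> /etc/haproxy/haproxy.cfg
#   haproxy -c -f /etc/haproxy/haproxy.cfg && systemctl restart haproxy
```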

2. Restart the HAProxy service:

[root@mysql1 ~]# systemctl restart haproxy

IV. Installing the packages on all controller nodes

1. Install the packages:

# yum install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-placement-api

2. Edit the ``/etc/nova/nova.conf`` file and complete the following actions:

In the ``[DEFAULT]`` section, enable only the compute and metadata APIs:

[DEFAULT]
# ...
enabled_apis = osapi_compute,metadata

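3. In the ``[api_database]`` and ``[database]`` sections, configure database access:

[api_database]
# ...
connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

[database]
# ...
connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

Replace NOVA_DBPASS with the nova database password (nova123 in this guide). Replace controller with the domain name that the hosts file resolves to the VIP.

4. In the ``[DEFAULT]`` section, configure ``RabbitMQ`` message queue access:

[DEFAULT]
# ...
transport_url = rabbit://openstack:RABBIT_PASS@controller

Replace RABBIT_PASS with the password you chose for the openstack account in RabbitMQ.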

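5. Edit the nova configuration file to configure Identity service (keystone) access:

[api]
# ...
auth_strategy = keystone

[keystone_authtoken]
# ...
# replace controller with the domain that resolves to the VIP
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = nova
# the nova user's password
password = nova

6. In the ``[DEFAULT]`` section, enable support for the Networking service:

[DEFAULT]
# ...
use_neutron = True
# disable Nova's own firewall driver; Neutron handles security groups
firewall_driver = nova.virt.firewall.NoopFirewallDriver

7. In the ``[vnc]`` section, configure the VNC proxy to use the management interface IP address of the controller node:

[vnc]
enabled = true
# ...
# listen on the local IP address; 0.0.0.0 (all local addresses) also works
vncserver_listen = 192.168.7.100
vncserver_proxyclient_address = 192.168.7.100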

8. In the [glance] section, configure the location of the Image service API:

[glance]
# ...
# use the VIP domain name (openstack-vip.net)
api_servers = http://openstack-vip.net:9292

9. In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]
# ...
lock_path = /var/lib/nova/tmp

10. In the [placement] section, configure access to the Placement API:

[placement]
# ...
os_region_name = RegionOne
project_domain_name = Default
project_name = service
auth_type = password
user_domain_name = Default
auth_url = http://openstack-vip.net:35357/v3
username = placement
# the password set when the placement user was created
password = placement
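The official guide's examples use the placeholder host name controller in many places. The sketch below rewrites them in one pass. It assumes the values look exactly like the lines above, that is, URLs such as `//controller:` or `@controller`, and `memcached_servers = controller:`. The function name `use_vip_host` is hypothetical, and GNU sed is assumed for `-i -E`.

```bash
# Hypothetical helper: replaces the placeholder host "controller" with the VIP domain
# when it appears after "//", "@" or "= " and before ":", "/", whitespace or end of line.
# $1 = config file to edit in place, $2 = VIP domain name
use_vip_host() {
  local file=$1 vip_host=$2
  sed -i -E "s#(//|@|= *)controller([[:space:]:/]|\$)#\1${vip_host}\2#g" "$file"
}

# Usage:
#   cp /etc/nova/nova.conf /etc/nova/nova.conf.bak
#   use_vip_host /etc/nova/nova.conf openstack-vip.net
#   grep -n controller /etc/nova/nova.conf    # review anything left over
```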

11. Configure Apache to allow access to the Placement API. Edit /etc/httpd/conf.d/00-nova-placement-api.conf and add the following at the bottom:

<Directory /usr/bin>
   <IfVersion >= 2.4>
      Require all granted
   </IfVersion>
   <IfVersion < 2.4>
      Order allow,deny
      Allow from all
   </IfVersion>
</Directory>
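According to the official Ocata installation guide, this block works around a packaging bug that otherwise blocks access to the Placement API. Appending it twice leaves a duplicate block, so the sketch below only appends it when it is missing. The function name is hypothetical. `httpd -t` checks the syntax before the restart.

```bash
# Hypothetical helper: appends the <Directory /usr/bin> block only if it is not there yet.
# $1 = Apache config file to edit
add_placement_dir_block() {
  local conf=$1
  if grep -q '<Directory /usr/bin>' "$conf"; then
    echo "block already present in $conf"
    return 0
  fi
  cat >> "$conf" <<'EOF'
<Directory /usr/bin>
   <IfVersion >= 2.4>
      Require all granted
   </IfVersion>
   <IfVersion < 2.4>
      Order allow,deny
      Allow from all
   </IfVersion>
</Directory>
EOF
}

# Usage:
#   add_placement_dir_block /etc/httpd/conf.d/00-nova-placement-api.conf
#   httpd -t && systemctl restart httpd
```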

12. Restart the httpd service:

# systemctl restart httpd

13. Initialise the nova databases by running all four of the following commands:

# su -s /bin/sh -c "nova-manage api_db sync" nova
# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova
# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova
# su -s /bin/sh -c "nova-manage db sync" nova
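The four commands must run in this order, and there is little point in continuing after one fails. The sketch below stops at the first error. `nova_db_init` and `DRY_RUN` are hypothetical names. All controllers share the same databases, so run it once, on controller 1 only.

```bash
# Hypothetical helper: runs the four initialisation commands in order as the nova user,
# stopping at the first failure. With DRY_RUN set, the commands are only printed.
nova_db_init() {
  local cmd
  for cmd in "nova-manage api_db sync" \
             "nova-manage cell_v2 map_cell0" \
             "nova-manage cell_v2 create_cell --name=cell1 --verbose" \
             "nova-manage db sync"; do
    ${DRY_RUN:+echo} su -s /bin/sh -c "$cmd" nova || { echo "failed: $cmd" >&2; return 1; }
  done
}

# Usage on controller 1:
#   DRY_RUN=1 nova_db_init    # preview
#   nova_db_init
```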

14. Verify that cell0 and cell1 are registered correctly:

# nova-manage cell_v2 list_cells
+-------+--------------------------------------+
| Name  | UUID                                 |
+-------+--------------------------------------+
| cell1 | 109e1d4b-536a-40d0-83c6-5f121b82b650 |
| cell0 | 00000000-0000-0000-0000-000000000000 |
+-------+--------------------------------------+
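To check this in a script rather than by eye, the table can be parsed as sketched below. `check_cells` is a hypothetical helper. It only looks for rows named cell0 and cell1 in the output format shown above.

```bash
# Hypothetical helper: reads "nova-manage cell_v2 list_cells" output on stdin and
# checks that both the cell0 and cell1 rows are present.
check_cells() {
  local table cell
  table=$(cat)
  for cell in cell0 cell1; do
    # A row looks like "| cell1 | <uuid> |"; \| is a literal pipe in ERE.
    printf '%s\n' "$table" | grep -Eq "^\| *${cell} *\|" || { echo "missing $cell" >&2; return 1; }
  done
  echo "cell0 and cell1 are mapped"
}

# Usage:
#   nova-manage cell_v2 list_cells | check_cells
```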

15. Start the Nova services and enable them at boot:

# systemctl enable openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service
# systemctl start openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

On the HAProxy server, add a listener for the new port 6080 (the noVNC proxy).

Edit the HAProxy configuration file: vim /etc/haproxy/haproxy.cfg

listen openstack_nova_port_6080
    bind 192.168.7.248:6080
    mode tcp
    log global
    server 192.168.7.100 192.168.7.100:6080 check inter 3000 fall 3 rise 5
    server 192.168.7.101 192.168.7.101:6080 check inter 3000 fall 3 rise 5 backup

Restart the HAProxy service:

[root@mysql1 ~]# systemctl restart haproxy

You can then log in to the web UI to check the current connections:

192.168.7.106:15672 is the management web UI of the back-end RabbitMQ service.

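Back up the Nova configuration on the controller and sync it to the other controllers to make Nova highly available

1. Package the files under /etc/nova so that they can be copied to the other controller servers:

[root@openstack-1 ~]# cd /etc/nova
[root@openstack-1 nova]# tar zcvf nova-controller.tar.gz ./*

2. Copy the archive to the other controller hosts:

[root@openstack-1 ~]# scp /etc/nova/nova-controller.tar.gz 192.168.7.101:/etc/nova/

3. On the other controller, extract the archive:

[root@openstack-2 ~]# cd /etc/nova
[root@openstack-2 nova]# tar -xvf nova-controller.tar.gz

4. Edit /etc/nova/nova.conf and change the IP addresses in it to this host's own IP address:

[vnc]
enabled = true
# ...
# listen on the local IP address; 0.0.0.0 (all local addresses) also works
vncserver_listen = 192.168.7.101
vncserver_proxyclient_address = 192.168.7.101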
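Editing the copied file by hand is easy to forget on one of the nodes. The sketch below does it with sed, assuming GNU sed and one setting per line in nova.conf. `set_vnc_ip` is a hypothetical name. It rewrites the IPv4 address on the vncserver_listen and vncserver_proxyclient_address lines. A 0.0.0.0 listener, like the one in the compute-node configuration, is left untouched.

```bash
# Hypothetical helper: points the VNC settings of a copied nova.conf at this node's IP.
# Only addresses whose first octet is 1-9 are rewritten, so "0.0.0.0" is kept.
# $1 = config file to edit in place, $2 = this node's IP address
set_vnc_ip() {
  local file=$1 ip=$2
  sed -i -E "s#^(vncserver_listen|vncserver_proxyclient_address)( *= *)[1-9][0-9]*(\.[0-9]+){3}#\1\2${ip}#" "$file"
}

# Usage:
#   set_vnc_ip /etc/nova/nova.conf 192.168.7.101    # on controller 2
#   set_vnc_ip /etc/nova/nova.conf 192.168.7.104    # on the second compute node
#   grep -n '^vncserver' /etc/nova/nova.conf
```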

5. Configure Apache to allow access to the Placement API. Edit /etc/httpd/conf.d/00-nova-placement-api.conf and add the following at the bottom:

<Directory /usr/bin>
   <IfVersion >= 2.4>
      Require all granted
   </IfVersion>
   <IfVersion < 2.4>
      Order allow,deny
      Allow from all
   </IfVersion>
</Directory>

6. Start the httpd service and enable it at boot:

# systemctl start httpd
# systemctl enable httpd

7. Start the Nova services and enable them at boot:

# systemctl enable openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service
# systemctl start openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

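It is best to keep the restart command in a script stored in a dedicated directory:

[root@openstack-1 ~]# mkdir scripts    # a dedicated directory for scripts
[root@openstack-1 ~]# cd scripts
[root@openstack-1 scripts]# vim nova-restart.sh    # the Nova restart script

Contents of nova-restart.sh:

#!/bin/bash
systemctl restart openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

Running the script instead of typing the full command saves a lot of time in daily work:

# bash nova-restart.sh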
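A slightly more defensive variant of the script is sketched below. It restarts the services one by one and reports any that did not come back up. The variable `NOVA_SERVICES` and the function `restart_nova` are hypothetical. `systemctl is-active --quiet` and `journalctl -u` are standard systemd commands.

```bash
# Hypothetical variant of nova-restart.sh: restart each controller-side Nova service
# and report any that is not active afterwards. Returns non-zero if any failed.
NOVA_SERVICES="openstack-nova-api openstack-nova-consoleauth openstack-nova-scheduler openstack-nova-conductor openstack-nova-novncproxy"

restart_nova() {
  local svc rc=0
  for svc in $NOVA_SERVICES; do
    systemctl restart "$svc.service"
    if systemctl is-active --quiet "$svc.service"; then
      echo "$svc: active"
    else
      echo "$svc: NOT active, check: journalctl -u $svc.service" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
#   restart_nova
```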

8. Verify the Nova services. Every controller must have a unique hostname, otherwise errors occur.

[root@linux-host1 ~]# nova service-list
+----+------------------+-------------------------+----------+---------+-------+----------------------------+-----------------+
| Id | Binary           | Host                    | Zone     | Status  | State | Updated_at                 | Disabled Reason |
+----+------------------+-------------------------+----------+---------+-------+----------------------------+-----------------+
| 1  | nova-consoleauth | linux-host1.exmaple.com | internal | enabled | up    | 2016-10-28T07:51:45.000000 | -               |
| 2  | nova-scheduler   | linux-host1.exmaple.com | internal | enabled | up    | 2016-10-28T07:51:45.000000 | -               |
| 3  | nova-conductor   | linux-host1.exmaple.com | internal | enabled | up    | 2016-10-28T07:51:44.000000 | -               |
| 6  | nova-compute     | linux-host2.exmaple.com | nova     | enabled | up    | 2016-10-28T07:51:40.000000 | -               |
+----+------------------+-------------------------+----------+---------+-------+----------------------------+-----------------+
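With several controllers the list gets long, so a filter that prints only the services that are not up can help. This sketch reads `-f value` output from the openstack client. `report_down_services` is a hypothetical name. It does not detect duplicate hostnames; for that, compare the output of `hostname` on each node.

```bash
# Hypothetical helper: reads "Binary Host State" lines and prints every service whose
# state is not "up". Exits non-zero if at least one service is down.
report_down_services() {
  awk 'NF >= 3 && $3 != "up" { print "DOWN: " $1 " on " $2; bad = 1 } END { exit bad }'
}

# Usage (with admin credentials loaded):
#   openstack compute service list -f value -c Binary -c Host -c State | report_down_services
```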

V. Installing and deploying the Nova compute nodes

1. Configuration on the compute node server

1. Install the package:

# yum install openstack-nova-compute -y

Edit the local hosts file on the compute node so that the VIP domain name resolves:

# vim /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.7.248 openstack-vip.net
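The same line has to be present on every controller and compute node. The sketch below appends it only when it is missing. `ensure_host_entry` is a hypothetical helper. It does not correct an existing line that maps the name to the wrong address.

```bash
# Hypothetical helper: appends "<ip> <name>" to a hosts file unless a non-comment
# line already lists <name> as one of its host names.
# $1 = IP address, $2 = host name, $3 = hosts file (defaults to /etc/hosts)
ensure_host_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    echo "$name already present in $file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Usage on every controller and compute node:
#   ensure_host_entry 192.168.7.248 openstack-vip.net
#   getent hosts openstack-vip.net
```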

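2. Edit the ``/etc/nova/nova.conf`` file and complete the following actions:

In the ``[DEFAULT]`` section, enable only the compute and metadata APIs:

[DEFAULT]
# ...
enabled_apis = osapi_compute,metadata

3. In the ``[DEFAULT]`` section, configure ``RabbitMQ`` message queue access:

[DEFAULT]
# ...
transport_url = rabbit://openstack:RABBIT_PASS@controller

Replace RABBIT_PASS with the password you chose for the openstack account in RabbitMQ.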

4. Configure Identity service access for Nova:

[api]
# ...
auth_strategy = keystone

[keystone_authtoken]
# ...
# replace controller with the domain that the local hosts file resolves to the VIP
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = nova
# set to the nova user's password
password = nova

5. In the ``[DEFAULT]`` section, enable support for the Networking service:

[DEFAULT]
# ...
use_neutron = True
firewall_driver = nova.virt.firewall.NoopFirewallDriver

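6. In the ``[vnc]`` section, enable and configure remote console access:

[vnc]
# ...
enabled = True
vncserver_listen = 0.0.0.0
# change to this compute node's own IP address
vncserver_proxyclient_address = 192.168.7.103
# replace controller with the VIP domain from the local hosts file
novncproxy_base_url = http://controller:6080/vnc_auto.html

The server component listens on all IP addresses, while the proxy component listens only on the management interface IP address of the compute node. The base URL is the address at which a web browser can reach the remote consoles of instances on this compute node.

7. In the [glance] section, configure the location of the Image service API:

[glance]
# ...
# replace controller with the VIP domain name from the local hosts file
api_servers = http://controller:9292

8. In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]
# ...
lock_path = /var/lib/nova/tmp

9. In the [placement] section, configure the Placement API:

[placement]
# ...
os_region_name = RegionOne
project_domain_name = Default
project_name = service
auth_type = password
user_domain_name = Default
# replace controller with the domain that resolves to the VIP
auth_url = http://controller:35357/v3
username = placement
# the placement account password (placement in this guide)
password = PLACEMENT_PASS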

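10. Determine whether your compute node supports hardware acceleration for virtual machines:

$ egrep -c '(vmx|svm)' /proc/cpuinfo

11. If the command returns 1 or more, the CPU supports hardware acceleration and KVM can be used. KVM is the default; it runs on the CPU's virtualization extensions and starts virtual machines faster. If it returns 0, edit the [libvirt] section of /etc/nova/nova.conf to use QEMU instead:

[libvirt]
# ...
virt_type = qemu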
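The check and the decision can be combined as sketched below. `pick_virt_type` is a hypothetical helper. A count above zero only shows that the CPU advertises VT-x or AMD-V. On nested or restricted hosts, also confirm that /dev/kvm exists before choosing kvm.

```bash
# Hypothetical helper: prints "kvm" if the CPU flags include vmx (Intel VT-x) or
# svm (AMD-V), otherwise "qemu".
# $1 = cpuinfo file (defaults to /proc/cpuinfo)
pick_virt_type() {
  local cpuinfo=${1:-/proc/cpuinfo}
  if [ "$(grep -Ec '(vmx|svm)' "$cpuinfo")" -gt 0 ]; then
    echo kvm
  else
    echo qemu
  fi
}

# Usage:
#   pick_virt_type              # then set "virt_type = <result>" under [libvirt]
#   ls -l /dev/kvm              # should exist when the result is kvm
```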

12. Start the compute services and enable them at boot:

# systemctl enable libvirtd.service openstack-nova-compute.service
# systemctl start libvirtd.service openstack-nova-compute.service

Testing:

1. First, discover the compute hosts (on a controller):

# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova
Found 2 cell mappings.
Skipping cell0 since it does not contain hosts.
Getting compute nodes from cell 'cell1': ad5a5985-a719-4567-98d8-8d148aaae4bc
Found 1 computes in cell: ad5a5985-a719-4567-98d8-8d148aaae4bc
Checking host mapping for compute host 'compute': fe58ddc1-1d65-4f87-9456-bc040dc106b3
Creating host mapping for compute host 'compute': fe58ddc1-1d65-4f87-9456-bc040dc106b3

2. Then list the hypervisors. If the new node appears, it was added successfully:

$ openstack hypervisor list
+----+---------------------+-----------------+-----------+-------+
| ID | Hypervisor Hostname | Hypervisor Type | Host IP   | State |
+----+---------------------+-----------------+-----------+-------+
| 1  | compute1            | QEMU            | 10.0.0.31 | up    |
+----+---------------------+-----------------+-----------+-------+

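Compute node high availability

Configuration on the second compute node

1. First, install the package:

# yum install openstack-nova-compute

Edit the hosts file on the second compute node so that the VIP domain resolves to the VIP:

# vim /etc/hosts
192.168.7.248 openstack-vip.net

2. On the first, already configured compute node, package /etc/nova:

[root@computer-1 ~]# cd /etc/nova
[root@computer-1 nova]# tar -zcvf nova-computer.tar.gz ./*

3. Copy the archive from the first compute node to the second:

[root@computer-1 ~]# scp /etc/nova/nova-computer.tar.gz 192.168.7.104:/etc/nova/

4. Extract it on the second compute node:

# cd /etc/nova
# tar -xvf nova-computer.tar.gz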

5. Edit /etc/nova/nova.conf:

[vnc]
# ...
enabled = True
vncserver_listen = 0.0.0.0
# change to this compute node's own IP address
vncserver_proxyclient_address = 192.168.7.104
# replace controller with the VIP domain from the local hosts file
novncproxy_base_url = http://controller:6080/vnc_auto.html

6. Start the Nova compute services and enable them at boot:

# systemctl enable libvirtd.service openstack-nova-compute.service
# systemctl start libvirtd.service openstack-nova-compute.service

7. After Nova starts, check the logs for errors:

[root@openstack-1 ~]# tail /var/log/nova/*.log

8. Run the following on the controller. Whenever you add new compute nodes, you must run nova-manage cell_v2 discover_hosts on the controller node to register them. Alternatively, set a discovery interval (in seconds) in /etc/nova/nova.conf:

[scheduler]
discover_hosts_in_cells_interval = 300

Testing:

1. First, on the controller, discover the compute hosts:

# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova
Found 2 cell mappings.
Skipping cell0 since it does not contain hosts.
Getting compute nodes from cell 'cell1': ad5a5985-a719-4567-98d8-8d148aaae4bc
Found 1 computes in cell: ad5a5985-a719-4567-98d8-8d148aaae4bc
Checking host mapping for compute host 'compute': fe58ddc1-1d65-4f87-9456-bc040dc106b3
Creating host mapping for compute host 'compute': fe58ddc1-1d65-4f87-9456-bc040dc106b3

2. Then list the hypervisors. If the new node appears, it was added successfully:

$ openstack hypervisor list
+----+---------------------+-----------------+-----------+-------+
| ID | Hypervisor Hostname | Hypervisor Type | Host IP   | State |
+----+---------------------+-----------------+-----------+-------+
| 1  | compute1            | QEMU            | 10.0.0.31 | up    |
+----+---------------------+-----------------+-----------+-------+

3. On the controller, list the compute services:

$ openstack compute service list
+----+--------------------+------------+----------+---------+-------+----------------------------+
| Id | Binary             | Host       | Zone     | Status  | State | Updated At                 |
+----+--------------------+------------+----------+---------+-------+----------------------------+
| 1  | nova-consoleauth   | controller | internal | enabled | up    | 2016-02-09T23:11:15.000000 |
| 2  | nova-scheduler     | controller | internal | enabled | up    | 2016-02-09T23:11:15.000000 |
| 3  | nova-conductor     | controller | internal | enabled | up    | 2016-02-09T23:11:16.000000 |
| 4  | nova-compute       | compute1   | nova     | enabled | up    | 2016-02-09T23:11:20.000000 |
+----+--------------------+------------+----------+---------+-------+----------------------------+

4. On the controller, check the Nova status:

# nova-status upgrade check
+---------------------------+
| Upgrade Check Results     |
+---------------------------+
| Check: Cells v2           |
| Result: Success           |
| Details: None             |
+---------------------------+
| Check: Placement API      |
| Result: Success           |
| Details: None             |
+---------------------------+
| Check: Resource Providers |
| Result: Success           |
| Details: None             |
+---------------------------+
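When this check runs from a script, the table can be parsed instead of read. A sketch follows, assuming the layout shown above with one `Result:` line per check. `check_upgrade_status` is a hypothetical name.

```bash
# Hypothetical helper: reads "nova-status upgrade check" output on stdin and fails
# if any "Result:" line is not "Success", or if no Result lines are found at all.
check_upgrade_status() {
  awk '
    /Result:/ {
      total++
      if ($0 !~ /Result: Success/) { bad++; print "not successful:", $0 }
    }
    END {
      if (total == 0) { print "no Result: lines found"; exit 1 }
      exit (bad > 0)
    }'
}

# Usage on the controller:
#   nova-status upgrade check | check_upgrade_status
```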

5. On the controller, test with the openstack command:

$ openstack image list
+--------------------------------------+-------------+-------------+
| ID                                   | Name        | Status      |
+--------------------------------------+-------------+-------------+
| 9a76d9f9-9620-4f2e-8c69-6c5691fae163 | cirros      | active      |
+--------------------------------------+-------------+-------------+