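Under a high-concurrency big data workload, the Linux server reports the error fork: retry: Resource temporarily unavailable

The JVM also writes a crash log with a name like hs_err_pid74299.log: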

#

# There is insufficient memory for the Java Runtime Environment to continue.

# Cannot create GC thread. Out of system resources.

# Possible reasons:

# The system is out of physical RAM or swap space

# In 32 bit mode, the process size limit was hit

# Possible solutions:

# Reduce memory load on the system

# Increase physical memory or swap space

# Check if swap backing store is full

# Use 64 bit Java on a 64 bit OS

# Decrease Java heap size (-Xmx/-Xms)

# Decrease number of Java threads

# Decrease Java thread stack sizes (-Xss)

# Set larger code cache with -XX:ReservedCodeCacheSize=

# This output file may be truncated or incomplete.

#

# Out of Memory Error (gcTaskThread.cpp:46), pid=74299, tid=140016377341760

#

# JRE version: (7.0_71-b14) (build )

# Java VM: Java HotSpot(TM) 64-Bit Server VM (24.71-b01 mixed mode linux-amd64 )

# Failed to write core dump. Core dumps have been disabled. To enable core dumping, try "ulimit -c unlimited" before starting Java again

#
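The key line in the log above is "Cannot create GC thread. Out of system resources.", which points at thread creation failing rather than the Java heap being full. As a rough sketch, the following helper lists which hs_err files contain that kind of message. The function name find_thread_failures is made up for this example, and the search pattern is an assumption based only on the wording of the log shown here.

```shell
# find_thread_failures is a hypothetical helper name, not a JVM tool.
# Prints the names of the given hs_err files that contain a
# "Cannot create ... thread" message like the one in the log above.
find_thread_failures() {
    grep -l -E 'Cannot create .*thread' "$@" 2>/dev/null
}

# Example (by default the JVM writes hs_err files to its working directory):
# find_thread_failures ./hs_err_pid*.log
```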

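By default, the core file size on a Linux server is set to 0. That setting has to be raised for core dumps to be written, but changing it does not solve the problem.

The root cause is that the maximum number of open files and the maximum number of processes allowed for the users running the applications are set relatively low; the default is 4096.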

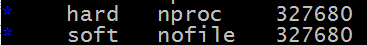
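To confirm that a user is really running into these limits, it helps to compare the limit with the number of tasks the user currently has. On Linux the nproc limit counts threads as well as processes, per real user ID, which is why a heavily threaded JVM can exhaust it. The sketch below reads this from /proc; the helper names show_limits and count_tasks_for_uid are illustrative, not standard commands.

```shell
# Helper names below are illustrative, not standard commands.

# Print the process/thread and open-file limits of a PID (default: this shell).
show_limits() {
    pid="${1:-$$}"
    grep -E '^Max (processes|open files)' "/proc/${pid}/limits"
}

# Count all tasks (processes plus threads) whose real UID matches $1.
# The nproc limit is charged against this per-user total.
count_tasks_for_uid() {
    uid="$1"
    for f in /proc/[0-9]*/status; do
        awk -v uid="$uid" '
            /^Uid:/     { u = $2 }   # second field is the real UID
            /^Threads:/ { t = $2 }
            END         { if (u == uid) print t }
        ' "$f" 2>/dev/null
    done | awk '{ s += $1 } END { print s + 0 }'
}

show_limits
count_tasks_for_uid "$(id -ru)"
```

Passing the PID of a running JVM to show_limits prints the limits that process actually started with, which can differ from what a fresh login shell reports.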

The following configuration needs to be changed:

/etc/security/limits.conf

* soft nofile 327680

* hard nofile 327680

hdfs soft nproc 131072

hdfs hard nproc 131072

mapred soft nproc 131072

mapred hard nproc 131072

hbase soft nproc 131072

hbase hard nproc 131072

zookeeper soft nproc 131072

zookeeper hard nproc 131072

hive soft nproc 131072

hive hard nproc 131072

root soft nproc 131072

root hard nproc 131072

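The host runs RHEL 7.4, where /etc/security/limits.d/20-nproc.conf sets a default soft nproc limit of 4096 for all users. The listing below shows that file renamed to a backup, so it no longer matches the *.conf pattern that pam_limits reads:

cat /etc/redhat-release

Red Hat Enterprise Linux Server release 7.4 (Maipo)

ll /etc/security/limits.d/

total 4

-rw-r--r-- 1 root root 228 Jul 20 16:08 20-nproc.conf_bak_20190720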
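Because pam_limits reads both /etc/security/limits.conf and the *.conf files under /etc/security/limits.d/, it can be useful to list every entry for one item across all of them and see where a value such as 4096 comes from. The following is a minimal sketch; list_limit_entries is a hypothetical helper, and it only prints matching lines without working out which entry takes effect.

```shell
# list_limit_entries is a hypothetical helper, not part of PAM.
# Usage: list_limit_entries ITEM FILE...
# Prints every non-comment line whose third field (the item) equals ITEM,
# prefixed with the file it came from.
list_limit_entries() {
    item="$1"
    shift
    awk -v item="$item" '$1 !~ /^#/ && $3 == item { print FILENAME ": " $0 }' "$@"
}

# Example:
# list_limit_entries nproc /etc/security/limits.conf /etc/security/limits.d/*.conf
```

Limits applied through pam_limits take effect for new login sessions, so processes that are already running keep their old values until they are restarted from a fresh session.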

With these changes, the problem was resolved.