⽐赛数据分为训练数据集和测试数据集。两个数据集都包括每栋房⼦的特征,如街道类型、建造年份、房顶类型、地下室状况等特征值。这些特征值有连续的数字、离散的标签甚⾄是缺失值“na”。只有训练数据集包括了每栋房⼦的价格,也就是标签。我们可以访问⽐赛⽹⻚,下载这些数据集。

一、导包

%matplotlib inline

import torch

import torch.nn as nn

import numpy as np

import pandas as pd

import sys

sys.path.append("..")

import d2lzh_pytorch as d2l

print(torch.__version__)

torch.set_default_tensor_type(torch.FloatTensor)

二、导入数据

# 导入数据

train_data = pd.read_csv('F:/CodeStore/DL_Pytorch/data/kaggle_house/train.csv')

test_data = pd.read_csv('F:/CodeStore/DL_Pytorch/data/kaggle_house/test.csv')

train_data.shape# 输出 (1460, 81)

test_data.shape# 输出 (1459, 80)

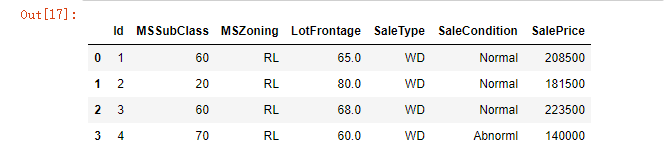

# 查看前4个样本的前4个特征、后2个特征和标签(SalePrice):

train_data.iloc[0:4, [0, 1, 2 , 3, -3, -2, -1]]

输出结果:

三、预处理数据

# 合并有效特征

all_features = pd.concat((train_data.iloc[:, 1:-1], test_data.iloc[:, 1:]))

# 预处理数据

numeric_features = all_features.dtypes[all_features.dtypes != 'object'].index

all_features[numeric_features] = all_features[numeric_features].apply(lambda x: (x - x.mean()) / (x.std()))

# 标准化后,每个特征的均值变为0,所以可以直接用0来替换缺失值

all_features = all_features.fillna(0)

# 将离散数值转成指示特征

# dummy_na=True将缺失值也当作合法的特征值并为其创建指示特征

all_features = pd.get_dummies(all_features, dummy_na=True)

all_features.shape

n_train = train_data.shape[0]

train_features = torch.tensor(all_features[:n_train].values, dtype=torch.float)

test_features = torch.tensor(train_data.SalePrice.values, dtype=torch.float).view(-1, 1)

四、训练模型

# 训练模型

loss = torch.nn.MSELoss()

def get_net(feature_num):

net = nn.Linear(feature_num, 1)

for param in net.parameters():

nn.init.normal_(param, mean=0, std=0.01)

return net

对数均⽅根误差的实现如下。

def log_rmse(net, features, labels):

with torch.no_grad():

# 将小于1的值设成1,使得取得对数时数值更稳定

clipped_preds = torch.max(net(features), torch.tensor(1.0))

rmse = torch.sqrt(2 * loss(clipped_preds.log(),labels.log()).mean())

return rmse.item()

def train(net, train_features, train_labels, test_features, test_labels, num_epochs, learning_rate, weight_decay, batch_size):

train_ls, test_ls = [],[]

dataset = torch.utils.data.DataLoder(dataset, batch_size, shuffle=True)

#这里使用了Adam算法

optimizer = torch.optim.Adam(params=net.parameters(), lr=learning_rate, weight_decay=weight_decay)

net = net.float()

for epoch in range(num_epochs):

for X, y in train_iter:

l = loss(net(x.float()), y.float())

optimazer.zero_grad()

l.backward()

optimazer.step()

train_ls.append(log_rmse(net, test_features, test_labels))

if test_labels is not None:

test_ls.append(log_rmse(net, test_features, test_labels))

return train_ls, test_ls

五、K折交叉验证

# K折交叉验证

def get_k_fold_data(k, i, x, y):

assert k > 1

fold_size = x.shape[0]

x_train, y_train = None, None

for j in range(k):

idx = slice(j * fold_size, (j+1) * fold_size)

x_part, y_part = x[idx, :],y[idx]

if j==i:

x_valid, y_valid = x_part, y_part

elif x_train is None:

x_train, y_train = x_part, y_part

else:

x_train = torch.cat((x_train, x_part), dim=0)

y_train = torch.cat((y_train, y_part), dim=0)

return x_train, y_train, x_valid, y_valid