Download links:

Version reference for the components used in the course

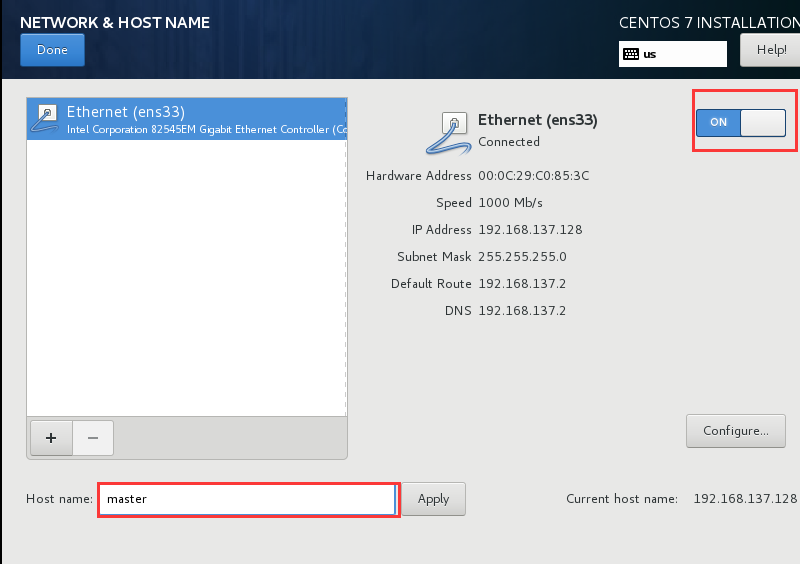

Install CentOS 7

To save resources, choose Minimal Install; install any other packages later as needed.

For simplicity, configure the network during installation.

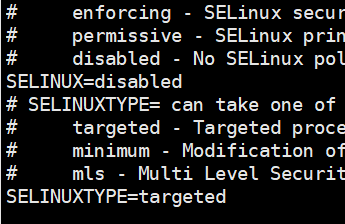

Configure IPv4 and disable IPv6.

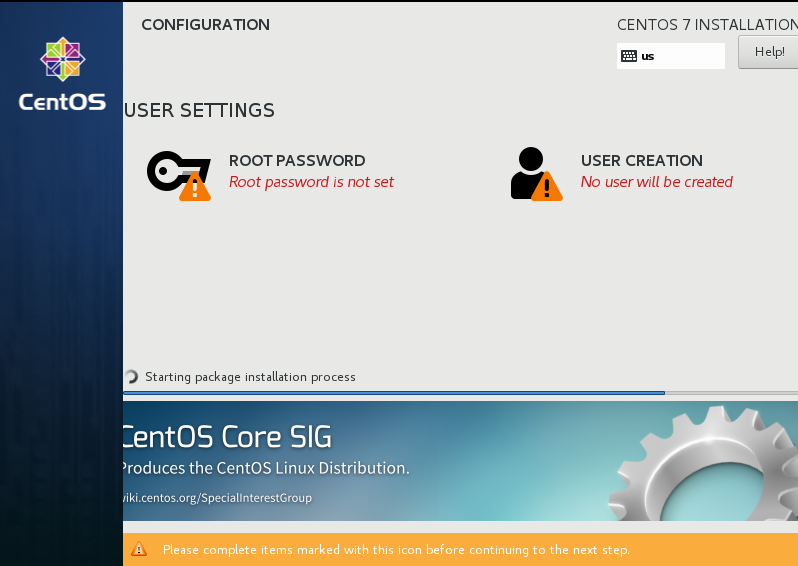

Click Begin Installation, then set the root password and create the main Hadoop user account (hdp in this guide).

When the installation finishes, shut the VM down and make two copies of the whole VM directory (for example, hadoop-master), named hadoop-slave1 and hadoop-slave2.

Power on all three VMs. When VMware Workstation asks whether the VM was moved or copied, choose "I Copied It".

#Disable the firewall (as root):

systemctl status firewalld

systemctl stop firewalld

systemctl disable firewalld

#Set the hostname and IP address on each of the three machines (as root):

[root@master ~]# hostnamectl set-hostname master

(On the other two machines, use slave1 and slave2 instead of master.)

[root@master ~]# hostnamectl status

Static hostname: master

Icon name: computer-vm

Chassis: vm

Machine ID: eeedf4adb2294e3c9ff14910b85233b7

Boot ID: 4510949614fe4d8fa164629cacef285a

Virtualization: vmware

Operating System: CentOS Linux 7 (Core)

CPE OS Name: cpe:/o:centos:centos:7

Kernel: Linux 3.10.0-862.el7.x86_64

Architecture: x86-64
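Because each node's name follows a fixed plan (192.168.137.21 is master, .22 is slave1, .23 is slave2, as in the hosts file later in this guide), the name can be looked up from the IP so the same command works on every node. This is a minimal sketch; `hostname_for_ip` is a hypothetical helper, not a system command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map this guide's static IPs to the planned hostnames.
hostname_for_ip() {
  case "$1" in
    192.168.137.21) echo master ;;
    192.168.137.22) echo slave1 ;;
    192.168.137.23) echo slave2 ;;
    *) echo "unknown IP: $1" >&2; return 1 ;;
  esac
}

# Usage on a node (as root), e.g. slave1:
#   hostnamectl set-hostname "$(hostname_for_ip 192.168.137.22)"
```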

vi /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE="Ethernet"

PROXY_METHOD="none"

BROWSER_ONLY="no"

BOOTPROTO="none"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="no"

IPV6INIT="no"

IPV6_AUTOCONF="yes"

IPV6_DEFROUTE="yes"

IPV6_FAILURE_FATAL="no"

IPV6_ADDR_GEN_MODE="stable-privacy"

NAME="ens33"

UUID="090924a5-2a39-48c7-8948-f4ed4f07e776"

DEVICE="ens33"

ONBOOT="yes"

IPADDR="192.168.137.21" # on slave1 and slave2, change the last octet to 22 and 23 respectively

PREFIX="24"

GATEWAY="192.168.137.1"

DNS1="10.0.0.1"
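The cloned slaves start with master's ifcfg file, so only the IPADDR line needs to change on each of them. A minimal sketch of doing that non-interactively with `sed`; `set_ipaddr` is a hypothetical helper name, and the file path and addresses are the ones used in this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the IPADDR line of an ifcfg file in place,
# leaving every other setting untouched.
set_ipaddr() {
  local file="$1" ip="$2"
  # Replace the whole IPADDR=... line, including any trailing comment.
  sed -i "s/^IPADDR=.*/IPADDR=\"${ip}\"/" "$file"
}

# Usage on slave1 (as root), then restart networking:
#   set_ipaddr /etc/sysconfig/network-scripts/ifcfg-ens33 192.168.137.22
#   systemctl restart network
```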

#Edit /etc/hosts on all three machines (as root)

vi /etc/hosts

192.168.137.21 master master.will.com

192.168.137.22 slave1 slave1.will.com

192.168.137.23 slave2 slave2.will.com

#The last column (the fully qualified name) is optional

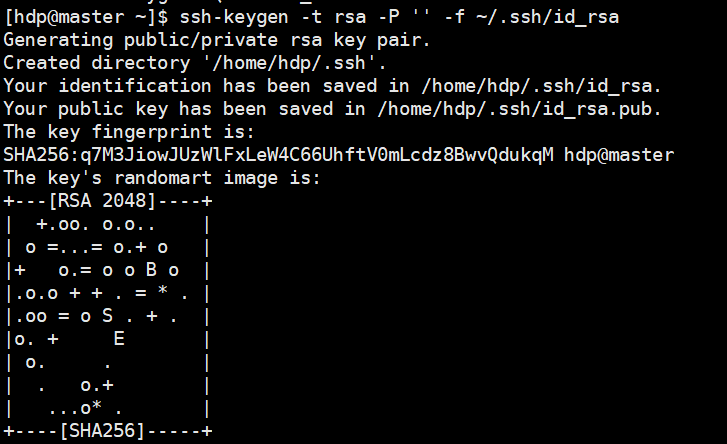
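The same three entries must exist on every node. A minimal sketch that appends them and skips any hostname already present, so running it twice does not duplicate lines; `add_cluster_hosts` is a hypothetical helper, and the entries are copied from the list above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the cluster entries to a hosts file
# (default /etc/hosts, which needs root), skipping names already present.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry name
  for entry in \
    "192.168.137.21 master master.will.com" \
    "192.168.137.22 slave1 slave1.will.com" \
    "192.168.137.23 slave2 slave2.will.com"; do
    name=${entry#* }     # drop the IP ...
    name=${name%% *}     # ... and the FQDN, keeping the short name
    # -w matches whole words, so "slave1" does not match "slave10"
    if ! grep -qw "$name" "$hosts_file"; then
      echo "$entry" >> "$hosts_file"
    fi
  done
}
```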

#Passwordless SSH login (as the hdp user)

Log in to each of the three machines as hdp and run:

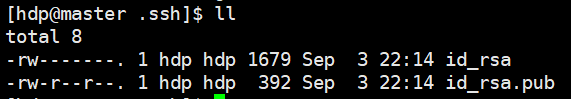

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

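Alternatively, run ssh-keygen and press Enter three times to accept the defaults.

This creates a .ssh directory in the hdp user's home directory containing two files: id_rsa and id_rsa.pub.

id_rsa is the private key, and its permissions should be 600. Never share this file and do not change its permissions.

id_rsa.pub is the public key. Once it is added to another machine's authorized_keys file, this machine can log in to that machine without a password.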

On master, in /home/hdp/.ssh, run:

cp id_rsa.pub authorized_keys

On slave1, in /home/hdp/.ssh, run:

scp id_rsa.pub master:/home/hdp/.ssh/id_rsa.pub.slave1

On slave2, in /home/hdp/.ssh, run:

scp id_rsa.pub master:/home/hdp/.ssh/id_rsa.pub.slave2

Then, on master, in /home/hdp/.ssh, run:

cat id_rsa.pub.slave1 >> authorized_keys

cat id_rsa.pub.slave2 >> authorized_keys

scp authorized_keys slave1:/home/hdp/.ssh/

scp authorized_keys slave2:/home/hdp/.ssh/
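The master-side merge above can be sketched as a single function. Like the `cp` step, it overwrites authorized_keys; it assumes the slaves' keys were copied in as id_rsa.pub.slave1, id_rsa.pub.slave2 and so on, and `build_authorized_keys` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rebuild authorized_keys from this host's public key
# plus every collected id_rsa.pub.<host> file in the same directory.
build_authorized_keys() {
  local ssh_dir="${1:-$HOME/.ssh}"
  # sort -u drops a key that appears in more than one file
  cat "$ssh_dir"/id_rsa.pub "$ssh_dir"/id_rsa.pub.* | sort -u > "$ssh_dir/authorized_keys"
}

# Usage on master as hdp, then push the result to the slaves:
#   build_authorized_keys
#   for h in slave1 slave2; do scp ~/.ssh/authorized_keys "$h":~/.ssh/; done
```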

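Finally, pay special attention to permissions on every machine: set the .ssh directory to 700 and the authorized_keys file to 644. sshd refuses key-based login when these (or the home directory) are writable by group or others, so passwordless login will fail.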
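The permission rules from this section (700 for .ssh, 644 for authorized_keys, 600 for the private key) can be applied in one step on each machine. A minimal sketch; `fix_ssh_perms` is a hypothetical helper name, and it defaults to the current user's ~/.ssh.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the permissions required for key-based SSH.
fix_ssh_perms() {
  local ssh_dir="${1:-$HOME/.ssh}"
  chmod 700 "$ssh_dir"
  chmod 644 "$ssh_dir/authorized_keys"
  # The private key, if present, must stay readable by its owner only
  if [ -f "$ssh_dir/id_rsa" ]; then
    chmod 600 "$ssh_dir/id_rsa"
  fi
}

# Usage: run as hdp on master, slave1 and slave2
#   fix_ssh_perms
```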

#Download and install the JDK

http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

Choose the build for your platform; this guide uses jdk-8u181-linux-x64.tar.gz.
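After unpacking the tarball, JAVA_HOME and PATH need to point at the JDK. A minimal sketch that writes those exports to a profile script; the install prefix /usr/local and the profile path /etc/profile.d/java.sh are assumptions, and `write_java_env` is a hypothetical helper name. The jdk-8u181 tarball unpacks into a jdk1.8.0_181 directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write JAVA_HOME and PATH exports to a profile script.
write_java_env() {
  local profile="$1" java_home="$2"
  # \$ keeps JAVA_HOME and PATH unexpanded in the generated file
  cat > "$profile" <<EOF
export JAVA_HOME=${java_home}
export PATH=\$JAVA_HOME/bin:\$PATH
EOF
}

# Usage (as root), assuming the tarball is in the current directory:
#   tar -xzf jdk-8u181-linux-x64.tar.gz -C /usr/local
#   write_java_env /etc/profile.d/java.sh /usr/local/jdk1.8.0_181
#   source /etc/profile.d/java.sh && java -version
```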

#Download and install the Hadoop package