1. Create the Scrapy project

In a command prompt (DOS window), run:

scrapy startproject xiaohuar

cd xiaohuar

2. Write items.py (essentially a template: the data to be scraped is defined here)

# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class XiaohuarItem(scrapy.Item):
    # define the fields for your item here like:
    # Name
    name = scrapy.Field()
    # School
    info = scrapy.Field()
    # Number of likes
    zan = scrapy.Field()
    # Image link
    image = scrapy.Field()

3. Create the spider file

In a command prompt, run:

scrapy genspider myspider www.xiaohuar.com

4. Write myspider.py (receives the response and processes the data)

# -*- coding: utf-8 -*-
import scrapy

from xiaohuar.items import XiaohuarItem


class MyspiderSpider(scrapy.Spider):
    name = 'myspider'
    allowed_domains = ['www.xiaohuar.com']
    url = "http://www.xiaohuar.com/list-1-"
    offset = 0
    start_urls = [url + str(offset) + ".html"]

    def parse(self, response):
        for each in response.xpath("//div[@class='item masonry_brick']"):
            item = XiaohuarItem()
            # Name
            item['name'] = each.xpath('.//span/text()').extract()[0]
            # School
            item['info'] = each.xpath('.//a/text()').extract()[0]
            # Likes
            item['zan'] = each.xpath('.//em/text()').extract()[0]
            # Image link: site-relative paths (starting with '/d') need the host prepended
            src = each.xpath('.//img/@src').extract()[0]
            if src.startswith('/d'):
                item['image'] = 'http://www.xiaohuar.com' + src
            else:
                item['image'] = src
            yield item

        # Request the next page until the last offset (43) has been crawled.
        # Once no further request is yielded, the spider finishes on its own.
        if self.offset < 43:
            self.offset += 1
            yield scrapy.Request(self.url + str(self.offset) + '.html', callback=self.parse)
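The paging and image-link logic in the spider can be tried without Scrapy. The following is only a rough sketch using the standard library: `page_urls` and `absolute_image_url` are hypothetical helper names, and the example `src` values are made up for illustration. It assumes the same URL prefix and last offset (43) as the spider.

```python
from urllib.parse import urljoin

BASE_URL = "http://www.xiaohuar.com/list-1-"  # same prefix as the spider's `url`
LAST_OFFSET = 43  # the spider stops requesting pages after this offset


def page_urls(base=BASE_URL, last_offset=LAST_OFFSET):
    """Return the listing-page URLs for offsets 0..last_offset inclusive."""
    return [f"{base}{offset}.html" for offset in range(last_offset + 1)]


def absolute_image_url(page_url, src):
    """Resolve an <img src> value against the page it was found on."""
    return urljoin(page_url, src)


urls = page_urls()
print(len(urls), urls[0], urls[-1])

# Hypothetical src values: one site-relative, one already absolute
relative = absolute_image_url(urls[0], "/d/file/example.jpg")
absolute = absolute_image_url(urls[0], "http://img.example.com/a.jpg")
print(relative)
print(absolute)
```

`urljoin` leaves absolute links untouched and prepends the host to site-relative ones, so it covers both branches of the `startswith('/d')` check. Inside a Scrapy callback, `response.urljoin(src)` does the same against the current page.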

5. Write pipelines.py (stores the data)

# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

import json
import os

import scrapy
# Read values from the project's settings file
from scrapy.utils.project import get_project_settings
# Scrapy's built-in pipeline for downloading images
from scrapy.pipelines.images import ImagesPipeline


# First class: save the text data
class XiaohuarPipeline(object):
    def __init__(self):
        # Raw string so the backslashes in the Windows path are kept literally
        self.filename = open(r'F:\xiaohuar\data.txt', 'wb')

    def process_item(self, item, spider):
        # One JSON object per line; ensure_ascii=False keeps Chinese text readable
        text = json.dumps(dict(item), ensure_ascii=False) + '\n'
        self.filename.write(text.encode('utf-8'))
        return item

    def close_spider(self, spider):
        self.filename.close()


# Second class: save the images
class ImagePipeline(ImagesPipeline):
    IMAGES_STORE = get_project_settings().get("IMAGES_STORE")

    # Take the image link and send a request for the image
    def get_media_requests(self, item, info):
        # Image link
        image_url = item["image"]
        # Image request
        yield scrapy.Request(image_url)

    def item_completed(self, result, item, info):
        # Paths of the successfully downloaded images, relative to IMAGES_STORE
        image_path = [x["path"] for ok, x in result if ok]
        # Rename the saved file after the item's name
        os.rename(self.IMAGES_STORE + image_path[0], self.IMAGES_STORE + item["name"] + ".jpg")
        # Path of the renamed image; this needs an imagePath field declared on
        # XiaohuarItem, otherwise the assignment raises KeyError
        item["imagePath"] = self.IMAGES_STORE + item["name"]
        return item
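The text pipeline writes one JSON object per line. Here is a minimal standalone sketch of that idea, without Scrapy: `JsonLinesWriter` is a hypothetical class name, the records are made-up sample data, and a temporary directory stands in for `F:\xiaohuar`.

```python
import json
import os
import tempfile


class JsonLinesWriter:
    """Hypothetical helper: append dict records to a file, one JSON object per line."""

    def __init__(self, path):
        # Text mode with an explicit encoding, so no manual .encode() is needed
        self.file = open(path, "w", encoding="utf-8")

    def write(self, record):
        # ensure_ascii=False keeps non-ASCII text (e.g. Chinese names) human-readable
        self.file.write(json.dumps(record, ensure_ascii=False) + "\n")

    def close(self):
        self.file.close()


# Temporary location instead of the F:\xiaohuar path used in the pipeline
path = os.path.join(tempfile.mkdtemp(), "data.txt")
writer = JsonLinesWriter(path)
writer.write({"name": "示例", "zan": "12"})  # made-up sample records
writer.write({"name": "demo", "zan": "3"})
writer.close()

# Each line can be parsed back independently
with open(path, encoding="utf-8") as f:
    records = [json.loads(line) for line in f]
print(records)
```

Because every line is a complete JSON document, a crawl that stops halfway still leaves a file that can be read line by line.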

6. Edit settings.py (set headers, pipelines, etc.)

robots.txt protocol

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

headers

DEFAULT_REQUEST_HEADERS = {
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36',
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    # 'Accept-Language': 'en',
}

pipelines

ITEM_PIPELINES = {
    'xiaohuar.pipelines.XiaohuarPipeline': 300,
    'xiaohuar.pipelines.ImagePipeline': 400,
}

IMAGES_STORE = "F:\\xiaohuar\\"
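Windows paths in Python string literals need care, because a backslash starts an escape sequence and a raw string cannot end with a single backslash. The snippet below is a small sketch of equivalent spellings for the store directory. It uses `ntpath` (the Windows flavour of `os.path`) so it behaves the same on any platform, and the variable names are illustrative only.

```python
import ntpath

# Three ways to spell the same directory in a Python literal
store_escaped = "F:\\xiaohuar\\"      # every backslash doubled
store_raw = r"F:\xiaohuar" + "\\"     # raw string plus the trailing separator
store_forward = "F:/xiaohuar/"        # forward slashes, normalized below

# Joining avoids having to track the trailing separator by hand
data_file = ntpath.join(store_escaped, "data.txt")

print(store_escaped == store_raw)
print(ntpath.normpath(store_forward) + "\\" == store_escaped)
print(data_file)
```

Since the image pipeline builds paths by plain string concatenation with `IMAGES_STORE`, the trailing separator matters there. Joining the parts with `os.path.join` would remove that dependency.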

7. Run the spider

In a command prompt, run:

scrapy crawl myspider

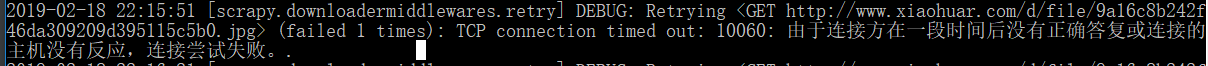

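Check the debug output:

KeyError: 'XiaohuarItem does not support field: imagePath'

Hmm. The image pipeline assigns item["imagePath"], but XiaohuarItem never declares an imagePath field.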
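The KeyError comes from the item class rejecting keys that were not declared as fields. The sketch below imitates that behaviour with a plain dict subclass. `StrictItem`, `DemoItem` and `DemoItemWithPath` are hypothetical stand-ins written for illustration, not Scrapy's actual implementation.

```python
class StrictItem(dict):
    """Hypothetical stand-in: a dict that only accepts declared field names."""

    fields = ()

    def __setitem__(self, key, value):
        if key not in self.fields:
            raise KeyError(f"{type(self).__name__} does not support field: {key}")
        super().__setitem__(key, value)


class DemoItem(StrictItem):
    # Same four fields as XiaohuarItem
    fields = ("name", "info", "zan", "image")


class DemoItemWithPath(StrictItem):
    # Declaring the extra field up front makes the assignment legal
    fields = DemoItem.fields + ("imagePath",)


item = DemoItem()
item["name"] = "demo"
try:
    item["imagePath"] = "F:\\xiaohuar\\demo"
    error = None
except KeyError as exc:
    error = exc.args[0]
print(error)

fixed = DemoItemWithPath()
fixed["imagePath"] = "F:\\xiaohuar\\demo"
print(fixed)
```

In the real project the equivalent change would be to add `imagePath = scrapy.Field()` to `XiaohuarItem` in items.py.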

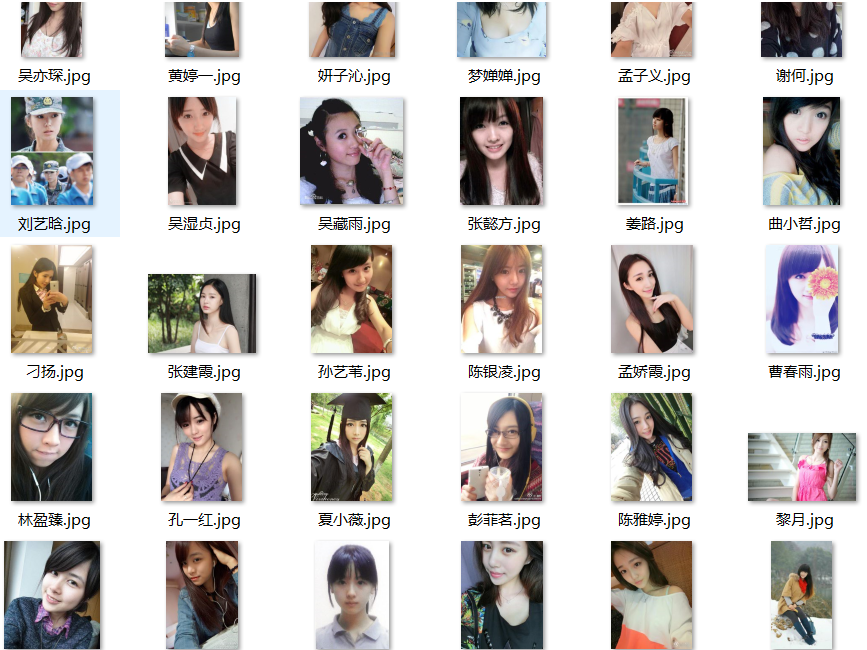

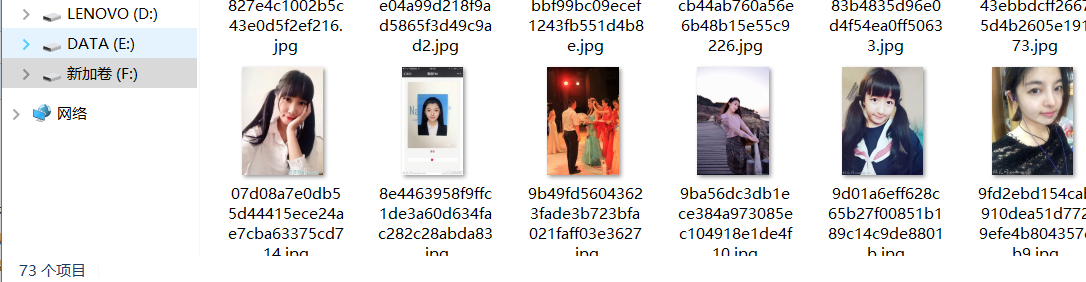

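Check the results:

Crawled and renamed successfully: 1047 - 2 (the count includes one full folder and one txt file)

Still in full, where renaming failed: 73 more

44 pages at 25 per page gives 44*25 = 1100, so everything should have been crawled. The extras come from my first run, where I set the offset limit to less than 44 and crawled one extra page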
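The counts can be checked with a few lines of arithmetic. This sketch assumes the figures are read as follows: 1047 entries in the output folder, two of which are the full folder and the txt file, plus 73 images left in full. The variable names are illustrative.

```python
PER_PAGE = 25

renamed = 1047 - 2        # entries in the output folder minus the folder and the txt file
left_in_full = 73         # images whose rename failed
downloaded = renamed + left_in_full

planned = 44 * PER_PAGE           # offsets 0..43
with_extra_page = 45 * PER_PAGE   # offsets 0..44, the earlier run with one extra page

print(renamed, downloaded, planned, with_extra_page)
```

Under that reading the total lies between the 44-page and 45-page figures, which fits the explanation of one extra page.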

As for the renames that failed at the end, my guess is that the server crashed
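Whatever the actual cause, the rename step can be made more tolerant. The sketch below guards against two possible failure modes that the post does not confirm: the downloaded file is missing, or the target name is already taken (on Windows, `os.rename` raises `FileExistsError` when the destination exists). `unique_target` and `rename_image` are hypothetical helpers, and the files are dummies in a temporary directory.

```python
import os
import tempfile


def unique_target(directory, name, ext=".jpg"):
    """Return a path in `directory` for `name` that does not exist yet."""
    candidate = os.path.join(directory, name + ext)
    counter = 1
    while os.path.exists(candidate):
        candidate = os.path.join(directory, f"{name}_{counter}{ext}")
        counter += 1
    return candidate


def rename_image(store, relative_path, name):
    """Move store/relative_path to store/<name>.jpg; return the new path or None."""
    source = os.path.join(store, relative_path)
    if not os.path.isfile(source):
        return None  # nothing was downloaded, so skip instead of raising
    target = unique_target(store, name)
    os.rename(source, target)
    return target


# Dummy layout: <store>/full/a.jpg and <store>/full/b.jpg
store = tempfile.mkdtemp()
os.mkdir(os.path.join(store, "full"))
for filename in ("a.jpg", "b.jpg"):
    with open(os.path.join(store, "full", filename), "wb") as f:
        f.write(b"fake image bytes")

first = rename_image(store, os.path.join("full", "a.jpg"), "demo")
second = rename_image(store, os.path.join("full", "b.jpg"), "demo")
missing = rename_image(store, os.path.join("full", "c.jpg"), "demo")
print(os.path.basename(first), os.path.basename(second), missing)
```

Two items that share a name then end up as demo.jpg and demo_1.jpg instead of the second rename failing.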

Anyway, the main goal this time was to try out scraping images, so as long as that worked, it's fine