HDFS (Hadoop Distributed File System)

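HDFS stands for Hadoop Distributed File System. It is a file system that lets files be shared across multiple hosts, so that many users on many machines can share files and storage space. Similar distributed file and storage systems include GFS, TFS, and S3.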

HDFS Shell

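HDFS can be operated from the command line with shell commands, much like working with files on Linux. The command format is as follows:

Run the hdfs script in the bin directory, followed by dfs, which indicates that the operation targets the distributed file system. This part of the command is fixed.

It is recommended to add the bin directory to PATH so that the command can be used from any location.

The address that follows the command, such as hdfs://bigdata01:9000, is the value of the fs.defaultFS property configured in core-site.xml; it is the address of HDFS.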

Common HDFS operations

List all commands supported by hdfs dfs

hdfs dfs

[root@bigdata01 ~]# hdfs dfs

Usage: hadoop fs [generic options]

[-appendToFile <localsrc> ... <dst>]

[-cat [-ignoreCrc] <src> ...]

[-checksum <src> ...]

[-chgrp [-R] GROUP PATH...]

[-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]

[-chown [-R] [OWNER][:[GROUP]] PATH...]

[-copyFromLocal [-f] [-p] [-l] [-d] [-t <thread count>] <localsrc> ... <dst>]

[-copyToLocal [-f] [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-count [-q] [-h] [-v] [-t [<storage type>]] [-u] [-x] [-e] <path> ...]

[-cp [-f] [-p | -p[topax]] [-d] <src> ... <dst>]

[-createSnapshot <snapshotDir> [<snapshotName>]]

[-deleteSnapshot <snapshotDir> <snapshotName>]

[-df [-h] [<path> ...]]

[-du [-s] [-h] [-v] [-x] <path> ...]

[-expunge]

[-find <path> ... <expression> ...]

[-get [-f] [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-getfacl [-R] <path>]

[-getfattr [-R] {-n name | -d} [-e en] <path>]

[-getmerge [-nl] [-skip-empty-file] <src> <localdst>]

[-head <file>]

[-help [cmd ...]]

[-ls [-C] [-d] [-h] [-q] [-R] [-t] [-S] [-r] [-u] [-e] [<path> ...]]

[-mkdir [-p] <path> ...]

[-moveFromLocal <localsrc> ... <dst>]

[-moveToLocal <src> <localdst>]

[-mv <src> ... <dst>]

[-put [-f] [-p] [-l] [-d] <localsrc> ... <dst>]

[-renameSnapshot <snapshotDir> <oldName> <newName>]

[-rm [-f] [-r|-R] [-skipTrash] [-safely] <src> ...]

[-rmdir [--ignore-fail-on-non-empty] <dir> ...]

[-setfacl [-R] [{-b|-k} {-m|-x <acl_spec>} <path>]|[--set <acl_spec> <path>]]

[-setfattr {-n name [-v value] | -x name} <path>]

[-setrep [-R] [-w] <rep> <path> ...]

[-stat [format] <path> ...]

[-tail [-f] <file>]

[-test -[defsz] <path>]

[-text [-ignoreCrc] <src> ...]

[-touch [-a] [-m] [-t TIMESTAMP ] [-c] <path> ...]

[-touchz <path> ...]

[-truncate [-w] <length> <path> ...]

[-usage [cmd ...]]

Generic options supported are:

-conf <configuration file> specify an application configuration file

-D <property=value> define a value for a given property

-fs <file:///|hdfs://namenode:port> specify default filesystem URL to use, overrides 'fs.defaultFS' property from configurations.

-jt <local|resourcemanager:port> specify a ResourceManager

-files <file1,...> specify a comma-separated list of files to be copied to the map reduce cluster

-libjars <jar1,...> specify a comma-separated list of jar files to be included in the classpath

-archives <archive1,...> specify a comma-separated list of archives to be unarchived on the compute machines

The general command line syntax is:

command [genericOptions] [commandOptions]

- -ls: list information about the specified path

List the contents of the HDFS root directory.

[root@bigdata01 ~]# hdfs dfs -ls /

Found 4 items

-rw-r--r-- 1 root supergroup 150569 2021-03-27 14:49 /LICENSE.txt

-rw-r--r-- 1 root supergroup 22125 2021-03-27 14:49 /NOTICE.txt

-rw-r--r-- 1 root supergroup 1361 2021-03-27 14:49 /README.txt

-rw-r--r-- 3 zhangshao supergroup 502 2021-03-27 15:39 /hadoop安装注意事项.sh

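The full form of the command is actually

hdfs dfs -ls hdfs://bigdata01:9000/. The short form works because, when hdfs runs, it locates the configuration files through HADOOP_HOME and reads the fs.defaultFS property from them automatically.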
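To make the relationship between the short path and the full address concrete, here is a small sketch that uses only the JDK's `java.net.URI`. It splits the address into its parts and shows how a bare path such as `/README.txt` combines with it. The class name `DefaultFsUri` and the helper `qualify` are made up for this illustration, and the code is not how Hadoop itself resolves paths; it only pictures the same idea.

```java
import java.net.URI;

// Illustrative only: DefaultFsUri and qualify are hypothetical names, not Hadoop APIs.
class DefaultFsUri {

    // Resolve a bare absolute path (e.g. "/README.txt") against a default file system address.
    static String qualify(String defaultFs, String path) {
        return URI.create(defaultFs).resolve(path).toString();
    }

    public static void main(String[] args) {
        // Assumed to match the fs.defaultFS value used throughout this article.
        String defaultFs = "hdfs://bigdata01:9000";
        URI uri = URI.create(defaultFs);

        System.out.println("scheme = " + uri.getScheme()); // file system type
        System.out.println("host   = " + uri.getHost());   // NameNode host
        System.out.println("port   = " + uri.getPort());   // NameNode port

        // The short paths used on the command line, expanded to their full form.
        System.out.println(qualify(defaultFs, "/"));
        System.out.println(qualify(defaultFs, "/README.txt"));
    }
}
```

Seen this way, `hdfs dfs -ls /` and the full-address form name the same location; the short form simply leaves the scheme, host, and port to the configuration.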

- -put: upload a file from the local file system

Upload README.txt from the local Hadoop root directory to the HDFS root directory

[root@bigdata01 ~]# hdfs dfs -put /data/soft/hadoop-3.2.0/README.txt /

Confirm the uploaded file

[root@bigdata01 ~]# hdfs dfs -ls -h /

Found 4 items

-rw-r--r-- 1 root supergroup 147.0 K 2021-03-27 14:49 /LICENSE.txt

-rw-r--r-- 1 root supergroup 21.6 K 2021-03-27 14:49 /NOTICE.txt

-rw-r--r-- 1 root supergroup 1.3 K 2021-03-28 18:07 /README.txt

-rw-r--r-- 3 zhangshao supergroup 502 2021-03-27 15:39 /hadoop安装注意事项.sh

- -cat: view the contents of an HDFS file

View the contents of README.txt

[root@bigdata01 ~]# hdfs dfs -cat /README.txt

For the latest information about Hadoop, please visit our website at:

http://hadoop.apache.org/

and our wiki, at:

http://wiki.apache.org/hadoop/

This distribution includes cryptographic software. The country in

which you currently reside may have restrictions on the import,

possession, use, and/or re-export to another country, of

encryption software. BEFORE using any encryption software, please

check your country's laws, regulations and policies concerning the

import, possession, or use, and re-export of encryption software, to

see if this is permitted. See <http://www.wassenaar.org/> for more

information.

The U.S. Government Department of Commerce, Bureau of Industry and

Security (BIS), has classified this software as Export Commodity

Control Number (ECCN) 5D002.C.1, which includes information security

software using or performing cryptographic functions with asymmetric

algorithms. The form and manner of this Apache Software Foundation

distribution makes it eligible for export under the License Exception

ENC Technology Software Unrestricted (TSU) exception (see the BIS

Export Administration Regulations, Section 740.13) for both object

code and source code.

The following provides more details on the included cryptographic

software:

Hadoop Core uses the SSL libraries from the Jetty project written

by mortbay.org.

- -get: download a file to the local file system

Download README.txt to the local machine

[root@bigdata01 hadoop-3.2.0]# hdfs dfs -get /README.txt

get: `README.txt': File exists

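Note: this command fails with a "File exists" error. It tries to download README.txt from HDFS into the current directory, but a file with that name is already there. Either switch to another directory or give the downloaded file a different name.

- -mkdir [-p]: create a directory

Use mkdir to create the test directory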

[root@bigdata01 hadoop-3.2.0]# hdfs dfs -mkdir /test

[root@bigdata01 hadoop-3.2.0]# hdfs dfs -ls -h /

Found 5 items

-rw-r--r-- 1 root supergroup 147.0 K 2021-03-27 14:49 /LICENSE.txt

-rw-r--r-- 1 root supergroup 21.6 K 2021-03-27 14:49 /NOTICE.txt

-rw-r--r-- 1 root supergroup 1.3 K 2021-03-28 18:07 /README.txt

-rw-r--r-- 3 zhangshao supergroup 502 2021-03-27 15:39 /hadoop安装注意事项.sh

drwxr-xr-x - root supergroup 0 2021-03-28 18:16 /test

To create nested directories in one step, add the -p option

[root@bigdata01 hadoop-3.2.0]# hdfs dfs -mkdir -p /abc/def

To list all directories recursively, add the -R option after ls

[root@bigdata01 hadoop-3.2.0]# hdfs dfs -ls -R /

-rw-r--r-- 1 root supergroup 150569 2021-03-27 14:49 /LICENSE.txt

-rw-r--r-- 1 root supergroup 22125 2021-03-27 14:49 /NOTICE.txt

-rw-r--r-- 1 root supergroup 1361 2021-03-28 18:07 /README.txt

drwxr-xr-x - root supergroup 0 2021-03-28 18:18 /abc

drwxr-xr-x - root supergroup 0 2021-03-28 18:18 /abc/def

-rw-r--r-- 3 zhangshao supergroup 502 2021-03-27 15:39 /hadoop安装注意事项.sh

drwxr-xr-x - root supergroup 0 2021-03-28 18:16 /test

- -rm [-r]: delete a file or directory

To delete a file or directory in HDFS, use the rm command.

Delete a file

[root@bigdata01 hadoop-3.2.0]# hdfs dfs -rm /README.txt

Deleted /README.txt

Delete the /abc/def directory

[root@bigdata01 hadoop-3.2.0]# hdfs dfs -rm -r /abc/def

Deleted /abc/def

HDFS shell command exercise

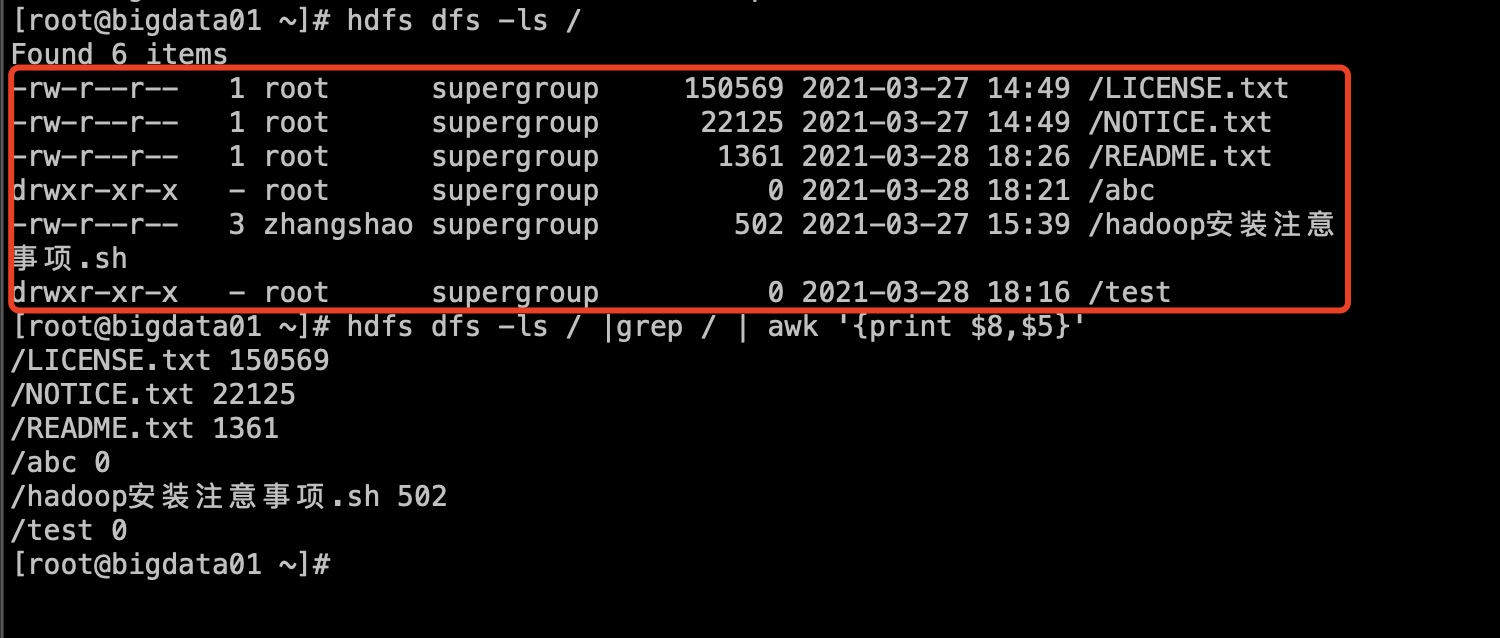

Task: count the files in HDFS and report the size of each one

First upload some files to HDFS: the .txt files from the Hadoop directory.

[root@bigdata01 hadoop-3.2.0]# hdfs dfs -put LICENSE.txt /

[root@bigdata01 hadoop-3.2.0]# hdfs dfs -put NOTICE.txt /

[root@bigdata01 hadoop-3.2.0]# hdfs dfs -put README.txt /

[root@bigdata01 hadoop-3.2.0]# hdfs dfs -ls -h /

Found 6 items

-rw-r--r-- 1 root supergroup 147.0 K 2021-03-27 14:49 /LICENSE.txt

-rw-r--r-- 1 root supergroup 21.6 K 2021-03-27 14:49 /NOTICE.txt

-rw-r--r-- 1 root supergroup 1.3 K 2021-03-28 18:26 /README.txt

drwxr-xr-x - root supergroup 0 2021-03-28 18:21 /abc

-rw-r--r-- 3 zhangshao supergroup 502 2021-03-27 15:39 /hadoop安装注意事项.sh

drwxr-xr-x - root supergroup 0 2021-03-28 18:16 /test

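Count the entries (files and directories) in the root directory. The grep / step drops the "Found 6 items" header line, which contains no slash.

[root@bigdata01 hadoop-3.2.0]# hdfs dfs -ls / |grep /|wc -l

6

Report the size of each entry in the root directory, printing its name and size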

[root@bigdata01 ~]# hdfs dfs -ls / |grep / | awk '{print $8,$5}'

/LICENSE.txt 150569

/NOTICE.txt 22125

/README.txt 1361

/abc 0

/hadoop安装注意事项.sh 502

/test 0

The fields $8 and $5 are used because the name is in column 8 and the size is in column 5.
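The same column logic can be sketched in Java, which may help if the listing is processed in a program rather than with awk. The sketch below assumes the eight-column layout shown in the listing above; `LsColumns` and `nameAndSize` are hypothetical names chosen for this example, and the sample lines are copied from the output in this article.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative only: LsColumns is a hypothetical helper, not part of Hadoop.
class LsColumns {

    // Turn one line of `hdfs dfs -ls` output into "path size", or return null
    // for lines that are not entries (such as the "Found N items" header).
    static String nameAndSize(String line) {
        // Limit the split to 8 fields so a path containing spaces stays in one piece.
        String[] cols = line.trim().split("\\s+", 8);
        if (cols.length < 8) {
            return null;
        }
        // awk columns are 1-based: $5 is cols[4] (size), $8 is cols[7] (path).
        return cols[7] + " " + cols[4];
    }

    public static void main(String[] args) {
        // Sample lines copied from the listing shown earlier in this article.
        String[] listing = {
            "Found 6 items",
            "-rw-r--r-- 1 root supergroup 150569 2021-03-27 14:49 /LICENSE.txt",
            "drwxr-xr-x - root supergroup 0 2021-03-28 18:21 /abc"
        };
        List<String> entries = new ArrayList<>();
        for (String line : listing) {
            String entry = nameAndSize(line);
            if (entry != null) {
                entries.add(entry);
            }
        }
        System.out.println("entries: " + entries.size());
        entries.forEach(System.out::println);
    }
}
```

Skipping lines with fewer than eight fields plays the role that `grep /` plays in the shell pipeline: it filters out the header so that only real entries are counted.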

Operating HDFS from Java

Create a Maven project and add the following dependencies to its pom file

pom.xml

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>3.2.0</version>

</dependency>

<!--log4j-->

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

<version>1.7.29</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>1.7.29</version>

</dependency>

Add a log4j.properties file under the resources directory

log4j.rootLogger=info,stdout

log4j.appender.stdout = org.apache.log4j.ConsoleAppender

log4j.appender.stdout.Target = System.out

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss,SSS} [%t] [%c] [%p] - %m%n

Write the code

package com.imooc.hdfs;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataOutputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IOUtils;

import java.io.FileInputStream;

/**

* File operations: upload, download, and delete files

* @author zhangshao

* @date 2021/3/27 3:15 PM

*/

public class HdfsOp {

public static void main(String[] args) throws Exception {

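//Create the configuration object

Configuration conf = new Configuration();

//Specify the HDFS address

conf.set("fs.defaultFS","hdfs://bigdata01:9000");

//Get the object used to operate HDFS

FileSystem fileSystem = FileSystem.get(conf);

//Upload a file

//Open an input stream on the local file

FileInputStream fis = new FileInputStream("/Users/zhangshao/Desktop/hadoop安装注意事项.sh");

//Open an output stream on the HDFS file system

FSDataOutputStream fos = fileSystem.create(new Path("/hadoop安装注意事项.sh"));

//Upload the file: the utility class copies the input stream to the output stream; passing true closes both streams afterwards

IOUtils.copyBytes(fis,fos,1024,true);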

}

}

Running the code fails with a permission denied error.

Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.AccessControlException): Permission denied: user=yehua, access=WRITE, inode="/":root:supergroup:drwxr-xr-x
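The error message carries enough information to see why the write was rejected: the root directory belongs to root:supergroup with mode drwxr-xr-x, and the request came from the local user yehua. The sketch below is a simplified model of a POSIX-style write check, written only to illustrate that reasoning. `PermissionCheck` and `canWrite` are hypothetical names, the model ignores the HDFS superuser, and it assumes yehua is not a member of supergroup.

```java
// Illustrative only: a simplified model of a POSIX-style write check.
// PermissionCheck and canWrite are hypothetical names, not Hadoop APIs.
class PermissionCheck {

    // mode is the 10-character string from the listing or error, e.g. "drwxr-xr-x".
    static boolean canWrite(String mode, String owner, String user, boolean userInGroup) {
        int writeIndex;
        if (user.equals(owner)) {
            writeIndex = 2;      // owner bits occupy positions 1-3
        } else if (userInGroup) {
            writeIndex = 5;      // group bits occupy positions 4-6
        } else {
            writeIndex = 8;      // other bits occupy positions 7-9
        }
        return mode.charAt(writeIndex) == 'w';
    }

    public static void main(String[] args) {
        String mode = "drwxr-xr-x";   // taken from inode="/":root:supergroup:drwxr-xr-x
        // Assumption: the local user yehua is not a member of supergroup.
        System.out.println("yehua can write: " + canWrite(mode, "root", "yehua", false));
        System.out.println("root can write:  " + canWrite(mode, "root", "root", false));
    }
}
```

Only the owner has the write bit on "/", so a client running as any other user is refused unless permission checking is turned off or the code runs as a user with write access.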

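There are two ways to fix this:

- Disable HDFS permission checking by setting dfs.permissions.enabled to false in hdfs-site.xml.

- Deploy the code to the Linux server and run it there.

To keep local testing convenient, use the first approach for now.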

#### STEPS

1. Stop the cluster

[root@bigdata01 ~]# stop-all.sh

Stopping namenodes on [bigdata01]

Last login: Wed Mar 31 22:56:17 CST 2021 on pts/0

Stopping datanodes

Last login: Wed Mar 31 23:09:26 CST 2021 on pts/0

Stopping secondary namenodes [bigdata01]

Last login: Wed Mar 31 23:09:27 CST 2021 on pts/0

Stopping nodemanagers

Last login: Wed Mar 31 23:09:30 CST 2021 on pts/0

Stopping resourcemanager

Last login: Wed Mar 31 23:09:32 CST 2021 on pts/0

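2. Edit the hdfs-site.xml configuration file

Note: the configuration file must be changed on every node in the cluster. Edit it on bigdata01 first, then sync it to the other two nodes.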

[root@bigdata01 hadoop-3.2.0]# vi etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>bigdata01:50090</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

</configuration>

3. Start the Hadoop cluster

[root@bigdata01 ~]# start-all.sh

Starting namenodes on [bigdata01]

Last login: Wed Mar 31 23:09:35 CST 2021 on pts/0

Starting datanodes

Last login: Wed Mar 31 23:19:08 CST 2021 on pts/0

Starting secondary namenodes [bigdata01]

Last login: Wed Mar 31 23:19:10 CST 2021 on pts/0

Starting resourcemanager

Last login: Wed Mar 31 23:19:15 CST 2021 on pts/0

Starting nodemanagers

Last login: Wed Mar 31 23:19:19 CST 2021 on pts/0

4. Run the code again; this time there is no error. Checking HDFS confirms that the file has been uploaded.

[root@bigdata01 ~]# hdfs dfs -cat /hadoop安装注意事项.sh

start-dfs.sh stop-dfs.sh

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

[root@bigdata01 sbin]# vi stop-dfs.sh

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_TARN_HOME,HADOOP_MAPRED_HOME</value>

Next, extract the common code into separate methods

package com.imooc.hdfs;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FSDataOutputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IOUtils;

import java.io.FileInputStream;

import java.io.FileOutputStream;

import java.io.IOException;

/**

* File operations: upload, download, and delete files

* @author zhangshao

* @date 2021/3/27 3:15 PM

*/

public class HdfsOp {

public static void main(String[] args) throws Exception {

//Create the configuration object

Configuration conf = new Configuration();

//Specify the HDFS address

conf.set("fs.defaultFS","hdfs://bigdata01:9000");

//Get the object used to operate HDFS

FileSystem fileSystem = FileSystem.get(conf);

// //Upload a file

// put(fileSystem);

//Download a file

get(fileSystem);

}

- Upload a file

/**

* Upload a file

* @param fileSystem

* @throws IOException

*/

private static void put(FileSystem fileSystem) throws IOException {

//Open an input stream on the local file

FileInputStream fis = new FileInputStream("/Users/zhangshao/Desktop/hadoop安装注意事项.sh");

//Open an output stream on the HDFS file system

FSDataOutputStream fos = fileSystem.create(new Path("/hadoop安装注意事项.sh"));

//Upload the file: the utility class copies the input stream to the output stream; passing true closes both streams afterwards

IOUtils.copyBytes(fis,fos,1024,true);

}

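- Download a file

/**

* Download a file

* @param fileSystem

* @throws IOException

*/

private static void get(FileSystem fileSystem) throws IOException {

//Open an input stream on the HDFS file

FSDataInputStream fis = fileSystem.open(new Path("/README.txt"));

//Open an output stream on the local file

FileOutputStream fos = new FileOutputStream("/Users/zhangshao/Desktop/README.txt");

//Download the file: copy the input stream to the output stream; passing true closes both streams afterwards

IOUtils.copyBytes(fis,fos,1024,true);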

}
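Both the upload and the download boil down to the same step: read from one stream through a small buffer and write to another, then close both. The sketch below shows that idea with plain `java.io` classes so it can run without a cluster. `StreamCopy` and `copyAndClose` are hypothetical names; this is a stand-in written for illustration, not the source of Hadoop's `IOUtils.copyBytes`, and the in-memory streams merely take the place of the HDFS and local file streams.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Illustrative only: StreamCopy is a hypothetical stand-in, not Hadoop's IOUtils.
class StreamCopy {

    // Copy everything from in to out through a fixed-size buffer, then close both.
    static long copyAndClose(InputStream in, OutputStream out, int bufferSize) throws IOException {
        long total = 0;
        // try-with-resources closes both streams even if the copy fails midway.
        try (InputStream source = in; OutputStream target = out) {
            byte[] buffer = new byte[bufferSize];
            int read;
            while ((read = source.read(buffer)) != -1) {
                target.write(buffer, 0, read);
                total += read;
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        // In-memory streams stand in for the HDFS input stream and the local file.
        byte[] data = "hello hdfs".getBytes(StandardCharsets.UTF_8);
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        long copied = copyAndClose(new ByteArrayInputStream(data), sink, 4);
        System.out.println("copied " + copied + " bytes: " + sink.toString("UTF-8"));
    }
}
```

Closing the streams matters in practice: an output stream that is never closed may leave the tail of the file unwritten, which is why the close flag on the copy call is worth setting.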

- Delete a file

/**

* Delete a file

* @param fileSystem

* @throws IOException

*/

private static void delete(FileSystem fileSystem) throws IOException {

//Delete a file; directories can be deleted as well

// To delete a directory recursively, set the second argument to true. When deleting a file or an empty directory, the second argument has no effect

boolean deleteResult = fileSystem.delete(new Path("/LICENSE.txt"), true);

if(deleteResult){

System.out.println("Delete succeeded");

}else {

System.out.println("Delete failed");

}

}

Verify on HDFS that LICENSE.txt has been deleted

[root@bigdata01 ~]# hdfs dfs -ls /

Found 5 items

-rw-r--r-- 1 root supergroup 22125 2021-03-27 14:49 /NOTICE.txt

-rw-r--r-- 1 root supergroup 1361 2021-03-28 18:26 /README.txt

drwxr-xr-x - root supergroup 0 2021-03-28 18:21 /abc

-rw-r--r-- 3 zhangshao supergroup 502 2021-03-27 15:39 /hadoop安装注意事项.sh

drwxr-xr-x - root supergroup 0 2021-03-28 18:16 /test