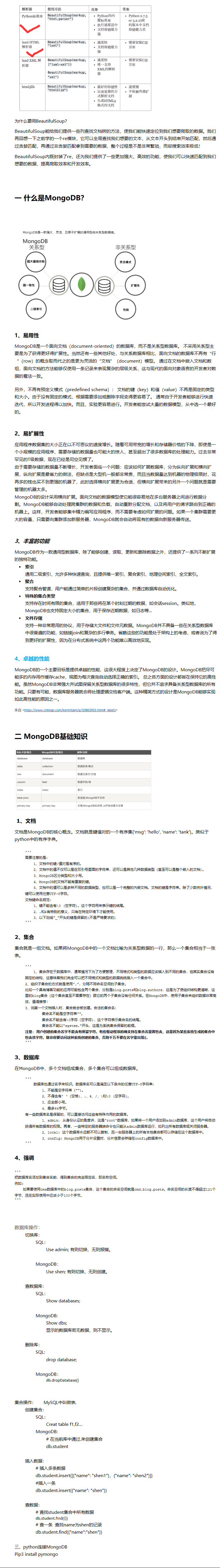

Class notes:

1. BeautifulSoup parsing library

2. MongoDB storage library

3. requests-html request library

BeautifulSoup

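1. What is bs4, and why use it?

bs4 (Beautiful Soup 4) is a library for parsing HTML and XML. It does not parse the markup with regular expressions itself. Instead it builds a tree using a parser such as Python's built-in html.parser or lxml, and gives you a convenient API for navigating and searching that tree. This saves you from writing fragile regex patterns by hand.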
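The small comparison below shows the kind of work bs4 saves over raw regular expressions. It assumes two hand-written links whose attributes use different orders and quote styles. It parses with the built-in html.parser, so nothing beyond beautifulsoup4 needs installing.

```python
import re

from bs4 import BeautifulSoup

html = (
    '<a href="http://example.com/elsie" class="sister">Elsie</a>'
    "<a class='sister' href='http://example.com/lacie'>Lacie</a>"
)

# Regex: the pattern only fits one attribute order and one quote style,
# so the second link (class first, single quotes) is silently missed
regex_links = re.findall(r'<a href="([^"]+)"', html)

# bs4: attributes are read from the parsed tree, so order and quotes do not matter
soup = BeautifulSoup(html, "html.parser")
bs4_links = [a["href"] for a in soup.find_all("a")]

print(regex_links)  # ['http://example.com/elsie']
print(bs4_links)    # ['http://example.com/elsie', 'http://example.com/lacie']
```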

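Homework:

1. Scrape Wandoujia (豌豆荚) for the screenshot image URLs in each app's introduction and for the user comments

2. Insert the data scraped from Wandoujia into MongoDB:

- Create a database named wandoujia

- Store the home page data in a collection named index

- Store the detail page data in a collection named detail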
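Below is one possible way to organise the storage part of the homework. It is only a sketch.

- `save_to_wandoujia` and `InMemoryCollection` are hypothetical helpers.
- The field names in the demo rows are made up.
- With a real MongoDB server, you would pass `MongoClient('localhost', 27017)['wandoujia']` as `db` instead of the in-memory stand-in.

```python
from collections import defaultdict


def save_to_wandoujia(db, index_rows, detail_rows):
    """Store home-page rows in db['index'] and detail-page rows in db['detail'].

    With PyMongo, pass db = MongoClient('localhost', 27017)['wandoujia'].
    """
    # PyMongo's insert_many() refuses an empty list, so skip empty batches
    if index_rows:
        db["index"].insert_many(index_rows)
    if detail_rows:
        db["detail"].insert_many(detail_rows)
    return len(index_rows), len(detail_rows)


class InMemoryCollection:
    """Hypothetical stand-in for a PyMongo Collection, for trying this offline."""

    def __init__(self):
        self.docs = []

    def insert_many(self, docs):
        self.docs.extend(docs)


# Offline demo: defaultdict creates a collection on first access,
# much like MongoDB creates one on the first insert
fake_db = defaultdict(InMemoryCollection)
counts = save_to_wandoujia(
    fake_db,
    [{"name": "demo-app", "down_num": "10K installs"}],  # hypothetical row
    [{"name": "demo-app", "love": "90%"}],               # hypothetical row
)
print(counts)  # (1, 1)
```

The collections are looked up by name (`db["index"]`, `db["detail"]`). As a result, the same function works unchanged on a real PyMongo `Database`.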

I. Parsing library: bs4

```python
'''
pip3 install beautifulsoup4  # install bs4
pip3 install lxml            # install the lxml parser
'''

html_doc = """
<html><head><title>The Dormouse's story</title></head>
<body>
<p class="sister"><b>$37</b></p>

<p class="story" id="p">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" >Elsie</a>,
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and
<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>;
and they lived at the bottom of a well.</p>

<p class="story">...</p>
"""
# Import BeautifulSoup from bs4
from bs4 import BeautifulSoup

# Instantiate BeautifulSoup to get a soup object
# Argument 1: the markup to parse
# Argument 2: the parser (html.parser, lxml, ...)
soup = BeautifulSoup(html_doc, 'lxml')

print(soup)
print('*' * 100)
print(type(soup))  # <class 'bs4.BeautifulSoup'>
# Pretty-print the document
html = soup.prettify()
print(html)
```
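The parser argument matters most for broken HTML, because different parsers repair the same snippet differently. This sketch is adapted from the "Differences between parsers" section of the Beautiful Soup documentation. The lxml branch only runs if lxml is installed.

```python
from bs4 import BeautifulSoup, FeatureNotFound

broken = "<a></p>"  # a stray </p> with no matching <p>

# html.parser ships with Python, so it works without installing anything
builtin = BeautifulSoup(broken, "html.parser")
print(builtin)  # <a></a>

# lxml is faster but must be installed separately (pip3 install lxml)
try:
    print(BeautifulSoup(broken, "lxml"))  # <html><body><a></a></body></html>
except FeatureNotFound:
    print("lxml is not installed")
```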

II. bs4: navigating the document tree

```python
html_doc = """<html><head><title>The Dormouse's story</title></head><body><p class="sister"><b>$37</b></p><p class="story" id="p">Once upon a time there were three little sisters; and their names were<p>shen</p><a href="http://example.com/elsie" class="sister" >Elsie</a>,<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>;and they lived at the bottom of a well.<hr></hr></p><p class="story">...</p>"""

from bs4 import BeautifulSoup
soup = BeautifulSoup(html_doc, 'lxml')

'''
1. Direct access
2. Get a tag's name
3. Get a tag's attributes
4. Get a tag's text
5. Nested selection
6. Children and descendants
7. Parent and ancestors
8. Siblings
'''

# 1. Direct access
# print(soup.p)  # the first p tag
# print(soup.a)  # the first a tag

# 2. Get a tag's name
# print(soup.head.name)  # the name of the head tag

# 3. Get a tag's attributes
# print(soup.a.attrs)  # all attributes of the first a tag
# print(soup.a.attrs['href'])  # the href attribute of the first a tag

# 4. Get a tag's text
# print(soup.p.text)  # $37

# 5. Nested selection
# print(soup.html.head)

# 6. Children and descendants
# print(soup.body.children)  # direct children of body, as an iterator: <list_iterator object at 0x000002738557A780>
# print(list(soup.body.children))  # convert to a list

# print(soup.body.descendants)  # all descendants, as a generator: <generator object descendants at 0x0000026693F27468>
# print(list(soup.body.descendants))

# 7. Parent and ancestors
# print(soup.p.parent)  # the parent of the first p tag
# print(soup.p.parents)  # all ancestors of the first p tag, as a generator: <generator object parents at 0x0000012F95BC7468>
# print(list(soup.p.parents))

# 8. Siblings
# The next sibling
# print(soup.p.next_sibling)
# All following siblings, as a generator
# print(soup.p.next_siblings)
# print(list(soup.p.next_siblings))

# The previous sibling
print(soup.a.previous_sibling)  # the previous sibling of the first a tag
# All preceding siblings of the first a tag
print(soup.a.previous_siblings)  # a generator
print(list(soup.a.previous_siblings))
```
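The sibling attributes have one pitfall. When the HTML contains line breaks between tags, as most real pages do, `.next_sibling` often returns the whitespace text node rather than the next tag. The `html_doc` above is a single line, which hides this.

The minimal sketch below uses a hypothetical two-paragraph snippet to show the difference. It also shows the `find_next_sibling()` alternative.

```python
from bs4 import BeautifulSoup

# Hypothetical snippet with newlines between the tags
html = "<body>\n<p id='a'>one</p>\n<p id='b'>two</p>\n</body>"
soup = BeautifulSoup(html, "html.parser")

first = soup.p
# next_sibling is the newline text node, not the next <p>
print(first.next_sibling == "\n")  # True

# find_next_sibling() skips text nodes and returns the next matching tag
second = first.find_next_sibling("p")
print(second)  # <p id="b">two</p>
```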

III. bs4: searching the document tree

```python
html_doc = """<html><head><title>The Dormouse's story</title></head><body><p class="sister"><b>$37</b></p><p class="story" id="p">Once upon a time there were three little sisters; and their names were<p>shen</p><a href="http://example.com/elsie" class="sister" >Elsie</a>,<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>;and they lived at the bottom of a well.<hr></hr></p><p class="story">...</p>"""
'''
Searching the document tree:
    find()      returns the first match
    find_all()  returns all matches

Filters for tags and attributes:

Tags:
    - String filter: exact match on a string
        name    match on the tag name
        attrs   match on attribute values
        string  match on the text (text= in older code)

    - Regex filter
        match with a pattern from the re module

    - List filter
        match any item in the list

    - bool filter
        True matches any tag that has the attribute

    - Function filter
        match on attributes a tag must have or must not have

Attributes:
    - class_
    - id
'''

import re

from bs4 import BeautifulSoup
soup = BeautifulSoup(html_doc, 'lxml')

# String filter
# name
# p_tag = soup.find(name='p')
# print(p_tag)  # the first tag whose name is p
# Find all tags whose name is p
# tag_s1 = soup.find_all(name='p')
# print(tag_s1)

# attrs
# Find the first node whose class is sister
# p = soup.find(attrs={"class": "sister"})
# print(p)
# Find all nodes whose class is sister
# tag_s2 = soup.find_all(attrs={"class": "sister"})
# print(tag_s2)

# string
# text = soup.find(string="$37")
# print(text)

# Combining filters
# Find the a tag whose id is link2 and whose text is Lacie
# a_tag = soup.find(name="a", attrs={"id": "link2"}, string="Lacie")
# print(a_tag)

# Regex filter: tag names containing "p"
# p_tag = soup.find(name=re.compile('p'))
# print(p_tag)

# List filter: p tags, a tags, and tags whose name matches "html"
# tags = soup.find_all(name=['p', 'a', re.compile('html')])
# print(tags)

# bool filter
# Find the p tag that has an id attribute
# p = soup.find(name='p', attrs={"id": True})
# print(p)

# Function filter
# Match a tags that have both an id and a class attribute
def have_id_class(tag):
    return tag.name == 'a' and tag.has_attr('id') and tag.has_attr('class')

tag = soup.find(name=have_id_class)
print(tag)  # the Lacie link (Elsie has no id)
```
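The docstring lists `class_` and `id` as attribute filters, but the examples never use them. The short sketch below shows both as keyword arguments. It assumes a trimmed copy of the same sample HTML, parsed with html.parser.

```python
from bs4 import BeautifulSoup

# A trimmed copy of the sample HTML used above
html_doc = (
    '<p class="sister"><b>$37</b></p>'
    '<a href="http://example.com/elsie" class="sister">Elsie</a>'
    '<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a>'
    '<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>'
)
soup = BeautifulSoup(html_doc, "html.parser")

# class_ has a trailing underscore because class is a Python keyword
sister_links = soup.find_all("a", class_="sister")
print([a.text for a in sister_links])  # ['Elsie', 'Lacie', 'Tillie']

# id works directly as a keyword argument
lacie = soup.find(id="link2")
print(lacie.text)  # Lacie

# Without a tag name, class_ matches any tag, including the first <p>
all_sisters = soup.find_all(class_="sister")
print(len(all_sisters))  # 4
```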

IV. Scraping app data from Wandoujia

```python
'''
Home page:
    icon URL, ...
    https://www.wandoujia.com/category/6001

    32 pages (page=1 to page=32 in main())
'''

import re

import requests
from bs4 import BeautifulSoup


# 1. Send a request
def get_page(url):
    response = requests.get(url, timeout=10)
    return response


# 2. Parse
# Parse a detail page
def parse_detail(text):
    soup = BeautifulSoup(text, 'lxml')
    # print(soup)

    # App name
    name = soup.find(name="span", attrs={"class": "title"}).text
    # print(name)

    # Positive rating
    love = soup.find(name='span', attrs={"class": "love"}).text
    # print(love)

    # Number of comments
    commit_num = soup.find(name='a', attrs={"class": "comment-open"}).text
    # print(commit_num)

    # Editor's review
    commit_content = soup.find(name='div', attrs={"class": "con"}).text
    # print(commit_content)

    # App download link
    download_url = soup.find(name='a', attrs={"class": "normal-dl-btn"}).attrs['href']
    # print(download_url)

    # To store the result in MongoDB (see the MongoDB section below), e.g.:
    # data = {'name': name}
    # table.insert_one(data)

    print(
        f'''
        ===============tank============
        App name: {name}
        Positive rating: {love}
        Comments: {commit_num}
        Editor's review: {commit_content}
        Download link: {download_url}
        '''
    )


# Parse the home page
def parse_index(data):
    soup = BeautifulSoup(data, 'lxml')

    # Get the li tags of all apps
    app_list = soup.find_all(name='li', attrs={"class": "card"})
    for app in app_list:
        print('*' * 1000)
        # print(app)
        # Icon URL: the data-original attribute of the first img tag
        img = app.find(name='img').attrs['data-original']
        print(img)

        # Download count: the text of the span whose class is install-count
        down_num = app.find(name='span', attrs={"class": "install-count"}).text
        print(down_num)

        # Size: the text of the span whose text contains digits + MB (\d+ means one or more digits).
        # Search within this card (app), not the whole page (soup).
        size = app.find(name='span', string=re.compile(r"\d+MB")).text
        print(size)

        # Detail page URL: the href attribute of the first a tag in the card
        detail_url = app.find(name='a').attrs['href']
        print(detail_url)

        # 3. Send a request to the app's detail page
        response = get_page(detail_url)
        # print(response.text)

        # 4. Parse the detail page
        parse_detail(response.text)


def main():
    for line in range(1, 33):
        url = f'https://www.wandoujia.com/wdjweb/api/category/more?catId=6001&subCatId=0&page={line}&ctoken=ql8VkarJqaE7VAYNAEe2JueZ'

        # 1. Send a request to the app list API
        response = get_page(url)
        # print(response.text)
        print('*' * 1000)
        # Deserialize the JSON response into a dict
        data = response.json()
        # Get the HTML of the app cards from the API response
        app_li = data['data']['content']
        # print(app_li)
        # 2. Parse the app cards
        parse_index(app_li)


if __name__ == '__main__':
    main()
```
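You can check the per-card lookups of `parse_index` without hitting the site by running them against a small, made-up snippet. `SAMPLE` and `parse_cards` are hypothetical and only mimic the fields the crawler reads; the real card markup is not reproduced here. The sketch also shows two details of the size lookup:

- why the pattern needs the backslash;
- why it searches through `app` rather than `soup`.

```python
import re

from bs4 import BeautifulSoup

# Hypothetical, simplified card markup for offline testing;
# the real Wandoujia cards contain more tags and attributes
SAMPLE = """
<ul>
  <li class="card"><img data-original="http://img.example/a.png">
    <span class="install-count">10K installs</span><span>12.5MB</span>
    <a href="http://detail.example/a">A</a></li>
  <li class="card"><img data-original="http://img.example/b.png">
    <span class="install-count">30K installs</span><span>48MB</span>
    <a href="http://detail.example/b">B</a></li>
</ul>
"""


def parse_cards(markup):
    """Return one dict per li.card, searching inside each card only."""
    soup = BeautifulSoup(markup, "html.parser")
    rows = []
    for app in soup.find_all("li", class_="card"):
        rows.append({
            "img": app.find("img")["data-original"],
            "down_num": app.find("span", class_="install-count").text,
            # app.find (not soup.find) keeps the size tied to this card
            "size": app.find("span", string=re.compile(r"\d+MB")).text,
            "detail_url": app.find("a")["href"],
        })
    return rows


rows = parse_cards(SAMPLE)
for row in rows:
    print(row["size"], row["detail_url"])
# 12.5MB http://detail.example/a
# 48MB http://detail.example/b

# Without the backslash, "d+MB" means "one or more letter d, then MB",
# so it matches none of the sizes and find() returns None
print(BeautifulSoup(SAMPLE, "html.parser").find("span", string=re.compile("d+MB")))  # None
```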

V. MongoDB storage library

```python
from pymongo import MongoClient

# 1. Connect to the MongoDB server
# Argument 1: host
# Argument 2: port
client = MongoClient('localhost', 27017)
print(client)

# 2. Use the shen_db database; MongoDB creates it when the first document is written
# print(client['shen_db'])

# 3. Use the people collection (also created on the first write)
# print(client['shen_db']['people'])

# 4. Insert data into shen_db
# Insert several documents with one call
data1 = {
    'name': 'shen',
    'age': 20,
    'sex': 'female'
}
data2 = {
    'name': 'lu',
    'age': 21,
    'sex': 'female'
}
data3 = {
    'name': 'liu',
    'age': 22,
    'sex': 'female'
}
client['shen_db']['people'].insert_many([data1, data2, data3])

# 5. Query data
# Query all documents
data_s = client['shen_db']['people'].find()
print(data_s)  # a Cursor object, not the documents themselves
# Iterate over the cursor to print every document
for data in data_s:
    print(data)

# Query a single document
data = client['shen_db']['people'].find_one()
print(data)

# Officially recommended methods (the old insert() was removed in PyMongo 4)
# Insert one document: insert_one
# client['shen_db']['people'].insert_one(doc)
# Insert several documents: insert_many
# client['shen_db']['people'].insert_many(docs)
```