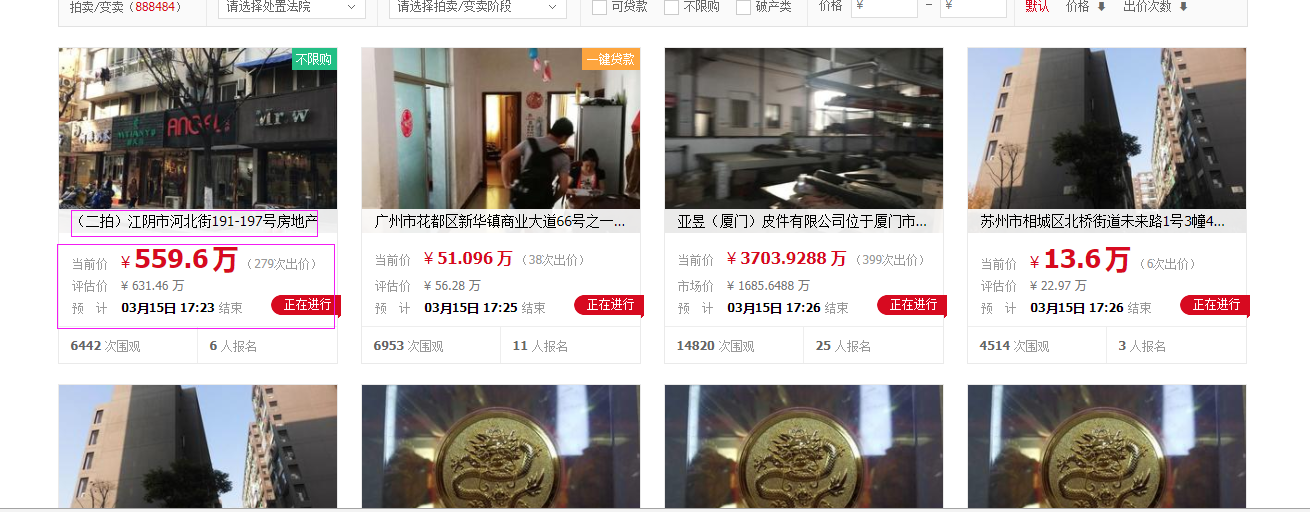

Scraping information from https://sf.taobao.com/item_list.htm (Taobao's judicial auction listing)

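If PhantomJS cannot load this HTTPS page, relax its SSL settings with either of these service arguments:

```python
driver = webdriver.PhantomJS(service_args=['--ssl-protocol=any'])
# or
driver = webdriver.PhantomJS(service_args=['--ignore-ssl-errors=true'])
```

Several arguments can be combined in one list. `service_args=['--load-images=false']` stops PhantomJS from downloading images, which speeds up page loads:

```python
cur_driver = webdriver.PhantomJS(service_args=['--ssl-protocol=any', '--load-images=false'])
```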
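The flags above are ordinary PhantomJS command-line options (`--ssl-protocol`, `--ignore-ssl-errors`, `--load-images`) that Selenium passes through `service_args`. As a minimal sketch, a small helper can assemble the list from keyword options so the combinations stay consistent. `build_service_args` is a hypothetical name, not part of Selenium or PhantomJS.

```python
def build_service_args(ssl_protocol="any", ignore_ssl_errors=False, load_images=True):
    """Assemble a PhantomJS service_args list.

    Hypothetical helper for illustration; only the flag strings are real
    PhantomJS options.
    """
    args = []
    if ssl_protocol:
        # Protocol PhantomJS should negotiate, e.g. 'any' or 'tlsv1'
        args.append("--ssl-protocol=" + ssl_protocol)
    if ignore_ssl_errors:
        # Accept certificates PhantomJS would otherwise reject
        args.append("--ignore-ssl-errors=true")
    if not load_images:
        # Skip image downloads to make page loads faster
        args.append("--load-images=false")
    return args


print(build_service_args(load_images=False))
# ['--ssl-protocol=any', '--load-images=false']
```

The result is passed unchanged to the driver, e.g. `webdriver.PhantomJS(service_args=build_service_args(load_images=False))`.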

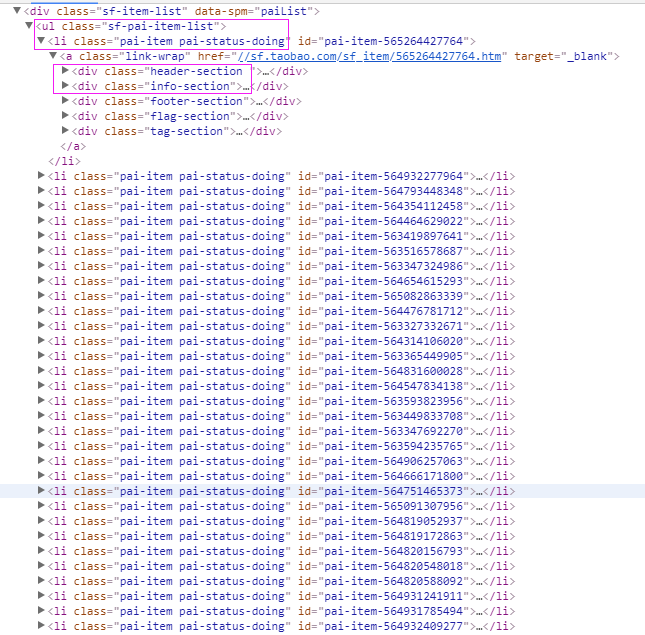

Scraping code

```python
# coding=utf-8
# Python 3 version. webdriver.PhantomJS only exists in Selenium 3.x (it was removed
# in Selenium 4), and the phantomjs executable must be on PATH.
# The original script also imported os, re, time, datetime, urllib, ActionChains,
# selenium.webdriver.support.ui and the author's own modules IniFile, LogFile and
# mongoDB; none of them are used here, so they are omitted.
from selenium import webdriver
from pyquery import PyQuery as pq


class taobao(object):
    def __init__(self):
        # --ssl-protocol=any lets PhantomJS negotiate a protocol the HTTPS site accepts
        self.driver = webdriver.PhantomJS(service_args=['--ssl-protocol=any'])
        self.driver.set_page_load_timeout(10)  # seconds
        self.driver.maximize_window()
        self.url = 'https://sf.taobao.com/item_list.htm'

    def scrapy_date(self):
        try:
            self.driver.get(self.url)
            # Take the DOM after JavaScript has rendered it and hand it to PyQuery
            selenium_html = self.driver.execute_script("return document.documentElement.outerHTML")
            doc = pq(selenium_html)
            # Listing items whose auction is currently in progress
            elements = doc('ul[class="sf-pai-item-list"]').find('li[class="pai-item pai-status-doing"]')
            for element in elements.items():
                priceinfo = element('div[class="info-section"]').find('p').text().strip()
                # Attribute selectors match exactly as written, including the trailing space
                title = element('div[class="header-section "]').find('p').text().strip()
                print(title)
                print(priceinfo)
                print('-' * 80)
        except Exception as e:
            print(e)
        finally:
            # Always shut down the PhantomJS process, even after an error
            self.driver.quit()


if __name__ == '__main__':
    obj = taobao()
    obj.scrapy_date()
```
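The parsing step can be checked offline, without PhantomJS or the live site. The sketch below swaps PyQuery for the standard library's `html.parser` (a different technique from the one the script uses) so it runs with no third-party packages. It assumes the markup implied by the selectors above, `li.pai-item.pai-status-doing` containing `div.header-section` and `div.info-section` with `<p>` text; the `SAMPLE` HTML, the `pai-status-done` class and the `PaiItemParser` name are hypothetical.

```python
from html.parser import HTMLParser


class PaiItemParser(HTMLParser):
    """Collect (title, price) pairs from in-progress auction <li> items.

    Assumes no nested <div> inside the header/info sections.
    """

    def __init__(self):
        super().__init__()
        self.items = []        # list of (title, price) tuples
        self._in_item = False  # inside an in-progress <li>?
        self._section = None   # 'title' or 'price' while inside a section div
        self._in_p = False     # inside a <p> within a section?
        self._current = None   # text fragments for the current item

    def handle_starttag(self, tag, attrs):
        # Compare class tokens, so extra classes or stray spaces do not matter
        classes = set((dict(attrs).get("class") or "").split())
        if tag == "li" and {"pai-item", "pai-status-doing"} <= classes:
            self._in_item = True
            self._current = {"title": [], "price": []}
        elif self._in_item and tag == "div":
            if "header-section" in classes:
                self._section = "title"
            elif "info-section" in classes:
                self._section = "price"
        elif self._in_item and self._section and tag == "p":
            self._in_p = True

    def handle_endtag(self, tag):
        if tag == "p":
            self._in_p = False
        elif tag == "div":
            self._section = None
        elif tag == "li" and self._in_item:
            self.items.append((" ".join(self._current["title"]),
                               " ".join(self._current["price"])))
            self._in_item = False
            self._current = None

    def handle_data(self, data):
        if self._in_p and self._section and data.strip():
            self._current[self._section].append(data.strip())


SAMPLE = """
<ul class="sf-pai-item-list">
  <li class="pai-item pai-status-doing">
    <div class="header-section "><p>Sample lot A</p></div>
    <div class="info-section"><p>Current price: 100</p></div>
  </li>
  <li class="pai-item pai-status-done">
    <div class="header-section "><p>Finished lot</p></div>
    <div class="info-section"><p>Final price: 50</p></div>
  </li>
</ul>
"""

parser = PaiItemParser()
parser.feed(SAMPLE)
for title, price in parser.items:
    print(title)
    print(price)
# Sample lot A
# Current price: 100
```

Matching class tokens instead of the exact attribute string is a deliberate choice: the script's `li[class="pai-item pai-status-doing"]` stops matching if the site adds or reorders a class. In PyQuery the equivalent token-based selector would be `li.pai-item.pai-status-doing`.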

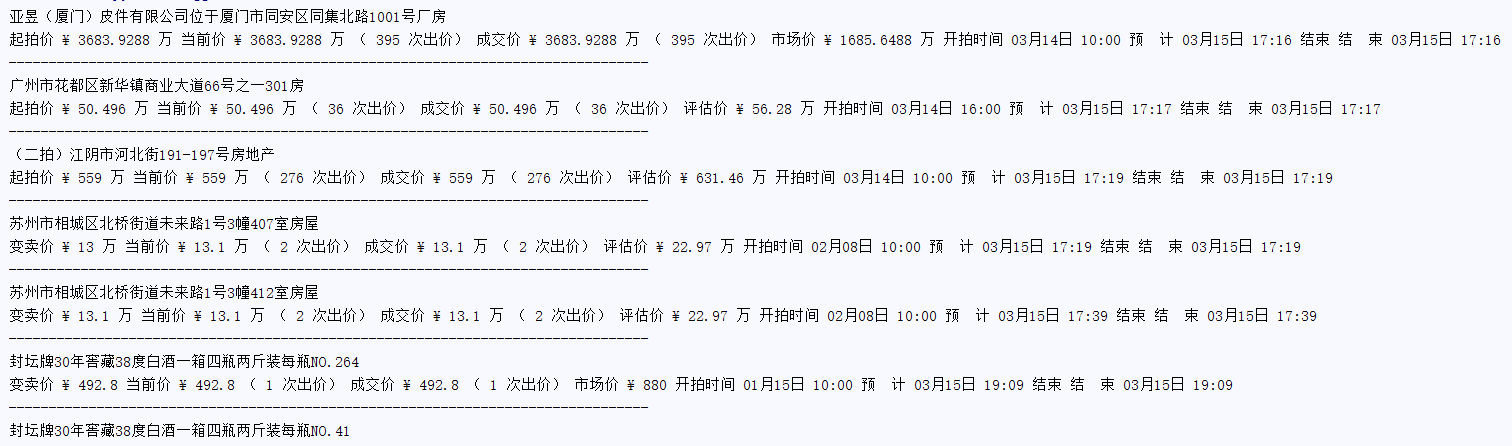

Scraping results