1.xpath解析(各个爬虫语言通用)

(1)环境安装

pip install lxml

(2)解析原理

- 获取页面原码数据

- 实例化etree对象,将页面原码数据加载到该对象中

- 调用该对象的xpath方法进行指定标签的定位(xparh函数必须结合着xpath表达式进行标签的定位和内容的捕获)

(3)xpath语法(返回值是一个列表)

## 一.数据解析 ### 1.xpath解析(各个爬虫语言通用) #### (1)环境安装 ``` pip install lxml ``` #### (2)解析原理 ``` - 获取页面原码数据 - 实例化etree对象,将页面原码数据加载到该对象中 - 调用该对象的xpath方法进行指定标签的定位(xparh函数必须结合着xpath表达式进行标签的定位和内容的捕获) ``` #### (3)xpath语法(返回值是一个列表) ``` 属性定位 / 相当于 > (在开头一定从根节点开始) // 相当于 ' ' @ 表示属性 例://div[@class="song"] 索引定位(索引从1开始) //ul/li[2] 逻辑运算 //a[@href='' and @class='du'] 和 //a[@href='' | @class='du'] 或 模糊匹配 //div[contains(@class,'ng')] //div[starts-with(@class,'ng')] 取文本 //div/text() 直系文本内容 //div//text() 非直系文本内容(返回列表) 取属性 //div/@href ``` #### (4)案例 ##### 案例一:58同城二手房数据爬取 ```python import requests from lxml import etree import os url='https://bj.58.com/changping/ershoufang/?utm_source=market&spm=u-2d2yxv86y3v43nkddh1.BDPCPZ_BT&PGTID=0d30000c-0000-1cc0-306c-511ad17612b3&ClickID=1' headers={ 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.92 Safari/537.36' } origin_data=requests.get(url=url,headers=headers).text tree=etree.HTML(origin_data) title_price_list=tree.xpath('//ul[@class="house-list-wrap"]/li/div[2]/h2/a/text() | //ul[@class="house-list-wrap"]/li/div[3]//text()') with open('./文件夹1/fangyuan.txt','w',encoding='utf-8') as f: for title_price in title_price_list: f.write(title_price) f.close() print("over") ``` ###### *注:区别解析的数据源是原码还是局部数据* ``` 原码数据 tree.HTML('//ul...') 局部数据 tree.HTML('./ul...') #以.开头 ``` ##### 测试xpath语法的正确性 ###### 方式一:xpath.crx(xpath插件) ``` 找到浏览器的 更多工具>拓展程序 开启开发者模式 将xpath.crx拖动到浏览器中 xpath插件启动快捷键:ctrl+shift+x 作用:用于测试xpath语法的正确性 ```  ###### 方式二:浏览器自带  ##### 案例二:4k网爬取图片 ``` import requests from lxml import etree import urllib headers={ 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.92 Safari/537.36' } page_num=int(input("请输入要爬取的页数:")) if page_num==1: url='http://pic.netbian.com/4kyingshi/index.html' origin_data=requests.get(url=url,headers=headers).text tree=etree.HTML(origin_data) a_list=tree.xpath('//ul[@class="clearfix"]/li/a') for a in a_list: name=a.xpath('./b/text()')[0] name=name.encode('iso-8859-1').decode('gbk') url='http://pic.netbian.com'+a.xpath('./img/@src')[0] picture=requests.get(url=url,headers=headers).content picture_name='./文件夹2/'+name+'.jpg' with open(picture_name,'wb') as f: f.write(picture) f.close() print('over!!!') else: for page in range(1,page_num+1): url='http://pic.netbian.com/4kyingshi/index_%d.html' % page origin_data=requests.get(url=url,headers=headers).text tree=etree.HTML(origin_data) a_list=tree.xpath('//ul[@class="clearfix"]/li/a') for a in a_list: name=a.xpath('./b/text()')[0] name=name.encode('iso-8859-1').decode('gbk') url='http://pic.netbian.com'+a.xpath('./img/@src')[0] picture=requests.get(url=url,headers=headers).content picture_name='./文件夹2/'+name+'.jpg' with open(picture_name,'wb') as f: f.write(picture) f.close() print('over!!!') ``` ###### 中文乱码问题 ``` 方式一: response.encoding='gbk' 方式二: name=name.encode('iso-8859-1').decode('utf-8') ``` ###### 数据来源问题 ``` etree.HTML() #处理网络数据 etree.parse() #处理本地数据 ``` ##### 案例3:爬取煎蛋网图片 ```python import requests from lxml import etree import urllib import base64 headers={ 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.92 Safari/537.36' } url='http://jandan.net/ooxx' origin_data=requests.get(url=url,headers=headers).text tree=etree.HTML(origin_data) span_list=tree.xpath('//span[@class="img-hash"]/text()') for span in span_list: src='http:'+base64.b64decode(span).decode("utf-8") picture_data=requests.get(url=src,headers=headers).content name='./文件夹3/'+src.split("/")[-1] with open(name,'wb') as f: f.write(picture_data) f.close() print('over!!!') ``` ###### ##反爬机制3:base64 在response返回数据中,图片的src都是相同的,每个图片都有一个span标签存储一串加密字符串,同时发现一个jandan_load_img函数,故猜测该加密字符串通过此函数可能得到图片地址.  全局搜索此函数  发现此函数中用到了jdtPGUg7oYxbEGFASovweZE267FFvm5aYz  全局搜索jdtPGUg7oYxbEGFASovweZE267FFvm5aYz  函数的最后用到了base64_decode  故断定该加密字符串用base64解密可得到图片地址 ##### 案例4:站长素材简历爬取 ```python import requests from lxml import etree import random headers={ 'Connection':'close', 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.92 Safari/537.36' } url='http://sc.chinaz.com/jianli/free.html' origin_data=requests.get(url=url,headers=headers).text tree=etree.HTML(origin_data) src_list=tree.xpath('//div[@id="main"]/div/div/a/@href') for src in src_list: filename='./文件夹4/'+src.split('/')[-1].split('.')[0]+'.rar' print(filename) down_page_data=requests.get(url=src,headers=headers).text tree=etree.HTML(down_page_data) down_list=tree.xpath('//div[@id="down"]/div[2]/ul/li/a/@href') res=random.choice(down_list) print(res) jianli=requests.get(url=res,headers=headers).content with open(filename,'wb') as f: f.write(jianli) f.close() print('over!!!') ``` ###### ##反爬机制4:Connection 经典错误 ``` HTTPConnectionPool(host:xx) Max retries exceeded with url ``` 原因 ``` 1.每次数据传输前客户端都要和服务端建立TCP连接,为了节省传输消耗,默认为keep-alive,即连接一次传输多次,然而如果连接迟迟不断开的话,链接池满后,则无法产生新的链接对象,导致请求无法发送 2.IP被封 3.请求频率太频繁 ``` 解决 ``` 1.设置请求头中Connection的值为close,每次成功后断开连接 2.更换请求IP 3.每次请求之间使用sleep进行请求间隔 ``` ##### 案例5:解析所有的城市名称 ```python import requests from lxml import etree headers={ 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.92 Safari/537.36' } url='https://www.aqistudy.cn/historydata/' origin_data=requests.get(url=url,headers=headers).text tree=etree.HTML(origin_data) hot_list=tree.xpath('//div[@class="row"]/div/div[1]/div/text() | //div[@class="row"]/div/div[1]/div[@class="bottom"]/ul[@class="unstyled"]/li/a/text()') with open('./文件夹1/city.txt','w',encoding='utf-8') as f: for hot in hot_list: f.write(hot.strip()) common_list=tree.xpath('//div[@class="row"]/div/div[2]/div[1]/text() | //div[@class="row"]/div/div[2]/div[2]/ul//text()') for common in common_list: f.write(common.strip()) f.close() print('over!!!') ``` ##### 案例6:图片懒加载,站长素材婚纱照 ```python import requests from lxml import etree headers={ 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.92 Safari/537.36' } url='http://sc.chinaz.com/tupian/hunsha.html' origin_data=requests.get(url=url,headers=headers).text tree=etree.HTML(origin_data) div_list=tree.xpath('//div[@id="container"]/div') for div in div_list: title=div.xpath('./p/a/text()')[0].encode('iso-8859-1').decode('utf-8') name='./文件夹1/'+title+'.jpg' photo_url=div.xpath('./div/a/@href')[0] origin_data=requests.get(url=photo_url,headers=headers).text tree=etree.HTML(origin_data) url_it=tree.xpath('//div[@class="imga"]/a/img/@src')[0] origin_data=requests.get(url=url_it,headers=headers).content with open(name,'wb') as f: f.write(origin_data) print('over!!!') ``` ###### ##反爬机制5:代理IP 使用 ```python import requests from lxml import etree import random proxie=[{'https':'116.197.134.153:80'},{'https':'103.224.100.43:8080'},{'https':'222.74.237.246:808'}] headers={ 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.92 Safari/537.36' } url='https://www.baidu.com/s?wd=ip' origin_data=requests.get(url=url,headers=headers,proxies=random.choice(proxie)).text with open('./ip.html','w',encoding='utf-8') as f: f.write(origin_data) print('over!!!') ``` 常用代理网站 ``` www.goubanjia.com 快代理 西祠代理 ``` 代理知识 ``` 透明:对方知道使用了代理,且知道真实IP 匿名:对方知道使用了代理,不知道真实IP 高匿:对方不知道使用了代理,更不知道真实IP ``` *注:代理IP的类型必须和请求url的协议头 保持一致* *https://www.55xia.com下载电影* *顺序:动态加载,url加密,element*

(4)案例

案例一:58同城二手房数据爬取

import requests from lxml import etree import os url='https://bj.58.com/changping/ershoufang/?utm_source=market&spm=u-2d2yxv86y3v43nkddh1.BDPCPZ_BT&PGTID=0d30000c-0000-1cc0-306c-511ad17612b3&ClickID=1' headers={ 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.92 Safari/537.36' } origin_data=requests.get(url=url,headers=headers).text tree=etree.HTML(origin_data) title_price_list=tree.xpath('//ul[@class="house-list-wrap"]/li/div[2]/h2/a/text() | //ul[@class="house-list-wrap"]/li/div[3]//text()') with open('./文件夹1/fangyuan.txt','w',encoding='utf-8') as f: for title_price in title_price_list: f.write(title_price) f.close() print("over")

注:区别解析的数据源是原码还是局部数据

原码数据 tree.HTML('//ul...') 局部数据 tree.HTML('./ul...') #以.开头

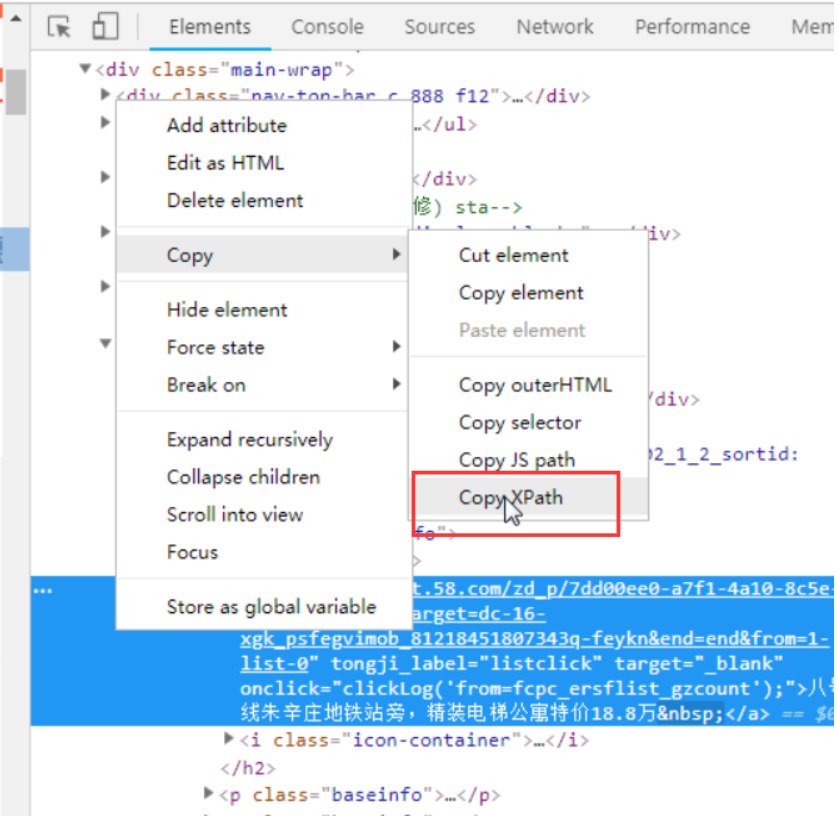

测试xpath语法的正确性

方式一:xpath.crx(xpath插件)

找到浏览器的 更多工具>拓展程序 开启开发者模式 将xpath.crx拖动到浏览器中 xpath插件启动快捷键:ctrl+shift+x 作用:用于测试xpath语法的正确性

方式二:浏览器自带

案例二:4k网爬取图片

import requests from lxml import etree import urllib headers={ 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.92 Safari/537.36' } page_num=int(input("请输入要爬取的页数:")) if page_num==1: url='http://pic.netbian.com/4kyingshi/index.html' origin_data=requests.get(url=url,headers=headers).text tree=etree.HTML(origin_data) a_list=tree.xpath('//ul[@class="clearfix"]/li/a') for a in a_list: name=a.xpath('./b/text()')[0] name=name.encode('iso-8859-1').decode('gbk') url='http://pic.netbian.com'+a.xpath('./img/@src')[0] picture=requests.get(url=url,headers=headers).content picture_name='./文件夹2/'+name+'.jpg' with open(picture_name,'wb') as f: f.write(picture) f.close() print('over!!!') else: for page in range(1,page_num+1): url='http://pic.netbian.com/4kyingshi/index_%d.html' % page origin_data=requests.get(url=url,headers=headers).text tree=etree.HTML(origin_data) a_list=tree.xpath('//ul[@class="clearfix"]/li/a') for a in a_list: name=a.xpath('./b/text()')[0] name=name.encode('iso-8859-1').decode('gbk') url='http://pic.netbian.com'+a.xpath('./img/@src')[0] picture=requests.get(url=url,headers=headers).content picture_name='./文件夹2/'+name+'.jpg' with open(picture_name,'wb') as f: f.write(picture) f.close() print('over!!!')

中文乱码问题

方式一: response.encoding='gbk' 方式二: name=name.encode('iso-8859-1').decode('utf-8')

数据来源问题

etree.HTML() #处理网络数据 etree.parse() #处理本地数据

案例3:爬取煎蛋网图片

import requests from lxml import etree import urllib import base64 headers={ 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.92 Safari/537.36' } url='http://jandan.net/ooxx' origin_data=requests.get(url=url,headers=headers).text tree=etree.HTML(origin_data) span_list=tree.xpath('//span[@class="img-hash"]/text()') for span in span_list: src='http:'+base64.b64decode(span).decode("utf-8") picture_data=requests.get(url=src,headers=headers).content name='./文件夹3/'+src.split("/")[-1] with open(name,'wb') as f: f.write(picture_data) f.close() print('over!!!')

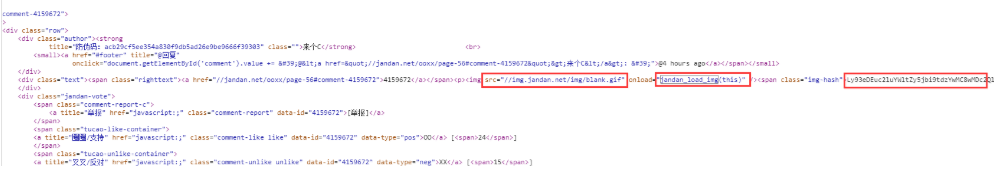

##反爬机制3:base64

在response返回数据中,图片的src都是相同的,每个图片都有一个span标签存储一串加密字符串,同时发现一个jandan_load_img函数,故猜测该加密字符串通过此函数可能得到图片地址.

全局搜索此函数

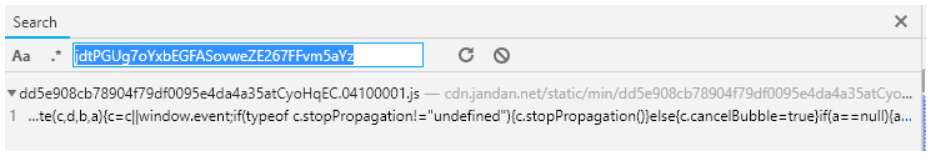

发现此函数中用到了jdtPGUg7oYxbEGFASovweZE267FFvm5aYz

全局搜索jdtPGUg7oYxbEGFASovweZE267FFvm5aYz

函数的最后用到了base64_decode

故断定该加密字符串用base64解密可得到图片地址

案例4:站长素材简历爬取

import requests from lxml import etree import random headers={ 'Connection':'close', 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.92 Safari/537.36' } url='http://sc.chinaz.com/jianli/free.html' origin_data=requests.get(url=url,headers=headers).text tree=etree.HTML(origin_data) src_list=tree.xpath('//div[@id="main"]/div/div/a/@href') for src in src_list: filename='./文件夹4/'+src.split('/')[-1].split('.')[0]+'.rar' print(filename) down_page_data=requests.get(url=src,headers=headers).text tree=etree.HTML(down_page_data) down_list=tree.xpath('//div[@id="down"]/div[2]/ul/li/a/@href') res=random.choice(down_list) print(res) jianli=requests.get(url=res,headers=headers).content with open(filename,'wb') as f: f.write(jianli) f.close() print('over!!!')

##反爬机制4:Connection

经典错误

HTTPConnectionPool(host:xx) Max retries exceeded with url

原因

1.每次数据传输前客户端都要和服务端建立TCP连接,为了节省传输消耗,默认为keep-alive,即连接一次传输多次,然而如果连接迟迟不断开的话,链接池满后,则无法产生新的链接对象,导致请求无法发送 2.IP被封 3.请求频率太频繁

解决

1.设置请求头中Connection的值为close,每次成功后断开连接 2.更换请求IP 3.每次请求之间使用sleep进行请求间隔

案例5:解析所有的城市名称

import requests from lxml import etree headers={ 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.92 Safari/537.36' } url='https://www.aqistudy.cn/historydata/' origin_data=requests.get(url=url,headers=headers).text tree=etree.HTML(origin_data) hot_list=tree.xpath('//div[@class="row"]/div/div[1]/div/text() | //div[@class="row"]/div/div[1]/div[@class="bottom"]/ul[@class="unstyled"]/li/a/text()') with open('./文件夹1/city.txt','w',encoding='utf-8') as f: for hot in hot_list: f.write(hot.strip()) common_list=tree.xpath('//div[@class="row"]/div/div[2]/div[1]/text() | //div[@class="row"]/div/div[2]/div[2]/ul//text()') for common in common_list: f.write(common.strip()) f.close() print('over!!!')

案例6:图片懒加载,站长素材婚纱照

import requests from lxml import etree headers={ 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.92 Safari/537.36' } url='http://sc.chinaz.com/tupian/hunsha.html' origin_data=requests.get(url=url,headers=headers).text tree=etree.HTML(origin_data) div_list=tree.xpath('//div[@id="container"]/div') for div in div_list: title=div.xpath('./p/a/text()')[0].encode('iso-8859-1').decode('utf-8') name='./文件夹1/'+title+'.jpg' photo_url=div.xpath('./div/a/@href')[0] origin_data=requests.get(url=photo_url,headers=headers).text tree=etree.HTML(origin_data) url_it=tree.xpath('//div[@class="imga"]/a/img/@src')[0] origin_data=requests.get(url=url_it,headers=headers).content with open(name,'wb') as f: f.write(origin_data) print('over!!!')

##反爬机制5:代理IP

使用

import requests from lxml import etree import random proxie=[{'https':'116.197.134.153:80'},{'https':'103.224.100.43:8080'},{'https':'222.74.237.246:808'}] headers={ 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.92 Safari/537.36' } url='https://www.baidu.com/s?wd=ip' origin_data=requests.get(url=url,headers=headers,proxies=random.choice(proxie)).text with open('./ip.html','w',encoding='utf-8') as f: f.write(origin_data) print('over!!!')

常用代理网站

www.goubanjia.com

快代理

西祠代理

代理知识

透明:对方知道使用了代理,且知道真实IP

匿名:对方知道使用了代理,不知道真实IP

高匿:对方不知道使用了代理,更不知道真实IP

注:代理IP的类型必须和请求url的协议头 保持一致

顺序:动态加载,url加密,element