一、方法1:with

2017年月度 各店铺 单客户平均消费(店铺消费/店铺unique客户数量)

环比:每个月和上一个月比

with tab as ( select date,store_id,avgCusPrice-lastAvgPrice priceDiff from ( select date,store_id,avgCusPrice, lag(avgCusPrice,1,0) over(partition by store_id order by date) lastAvgPrice from ( select totalprice/cusCount avgCusPrice,date,store_id from ( select count(distinct customer_name) cusCount,sum(price) totalprice,date,store_id from ( select customer_name,price ,from_unixtime(unix_timestamp(date,'yyyy-MM-dd'),'yyyy-MM') date,store_id from transaction_details )T group by date,store_id )T )T )T ) select t4.store_id, t4.priceDiff as diff4, t5.priceDiff as diff5, t6.priceDiff as diff6 from ( select store_id,priceDiff from tab where date = "2017-04" )t4 inner join tab t5 on t4.store_id=t5.store_id and t5.date="2017-05" inner join tab t6 on t4.store_id=t6.store_id and t6.date="2017-06"

二、方法2:case when

例1

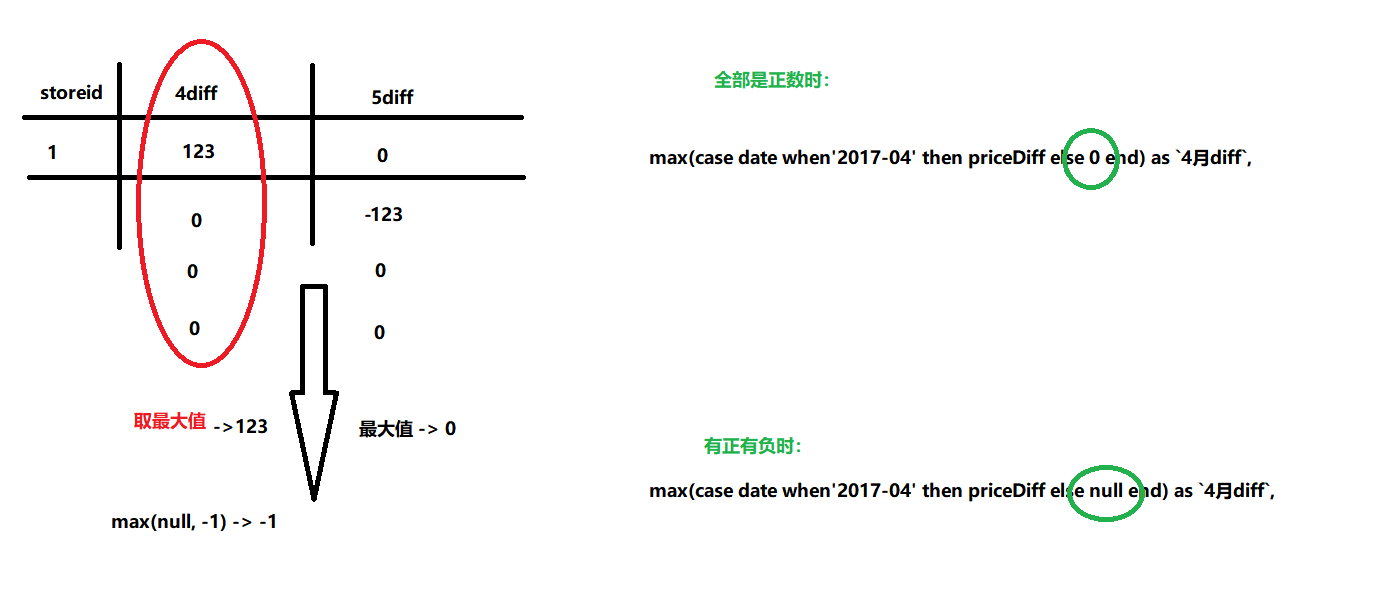

select store_id, max(case date when'2017-04' then priceDiff else null end) as `4月diff`, max(case date when'2017-05' then priceDiff else null end) as `5月diff`, max(case date when'2017-06' then priceDiff else null end) as `6月diff`, max(case date when'2017-07' then priceDiff else null end) as `7月diff` from ( select date,store_id,avgCusPrice-lastAvgPrice priceDiff from ( select date,store_id,avgCusPrice, lag(avgCusPrice,1,0) over(partition by store_id order by date) lastAvgPrice from ( select totalprice/cusCount avgCusPrice,date,store_id from ( select count(distinct customer_name) cusCount,sum(price) totalprice,date,store_id from ( select customer_name,price ,from_unixtime(unix_timestamp(date,'yyyy-MM-dd'),'yyyy-MM') date,store_id from transaction_details )T group by date,store_id )T )T )T )T group by store_id

例2

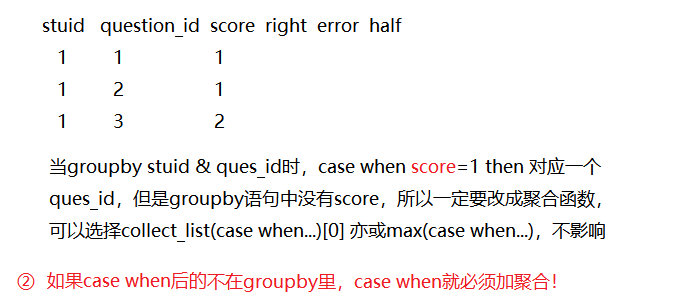

stu_id, question_id, score分为 1 0 0.5,对应 right,error,half。需要根据stu_id求他们每人right,error,half的题目集合

思路1

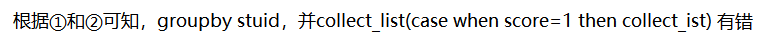

select student_id, concat_ws('',collect_list(t.right)) right, concat_ws('',collect_list(t.half)) half, concat_ws('',collect_list(t.error)) error from ( select student_id, case when score=1.0 then concat_ws(",",collect_list(question_id)) else null end right, case when score=0.5 then concat_ws(",",collect_list(question_id)) else null end half, case when score=0.0 then concat_ws(",",collect_list(question_id)) else null end error from ex_exam_record group by student_id,score ) t group by student_id;

思路2

select student_id, concat_ws(',',collect_list(`right`)) as `right`, concat_ws(',',collect_list(`half`)) as `half`, concat_ws(',',collect_list(`error`)) as `error` from ( select student_id, max(case score when 0 then question_id else null end) as `error`, max(case score when 1 then question_id else null end) as `right`, max(case score when 0.5 then question_id else null end) as `half` from ex_exam_record group by student_id,question_id )T group by student_id

select ddd.d_date, sum(case when datediff('2018-10-20',ddd.d_date)<=1 then 1 else 0 end) two_count from dw_sales_source.dwd_fact_sales_order dfo inner join dwd_dim_date ddd on dfo.date_sk = ddd.date_sk inner join dwd_dim_customer ddc on dfo.customer_sk = ddc.customer_sk inner join dwd_dim_product ddp on dfo.product_sk = ddp.product_sk where ddd.d_date>='2018-10-19' and ddd.d_date<='2018-10-20' group by ddd.d_date; select ddd.d_date, case when datediff('2018-10-20',ddd.d_date)<=1 then 1 else 0 end two_count # 近两天的order次数 from dw_sales_source.dwd_fact_sales_order dfo inner join dwd_dim_date ddd on dfo.date_sk = ddd.date_sk inner join dwd_dim_customer ddc on dfo.customer_sk = ddc.customer_sk inner join dwd_dim_product ddp on dfo.product_sk = ddp.product_sk where ddd.d_date>='2018-10-19' and ddd.d_date<='2018-10-20' group by ddd.d_date;

case when 后的d_date在group by 后面,“10-19 1”“10-19 1”分组加起来“10-19 出现次数”

思路3

只groupby stuid,是否就能够分类出对应的question_id集合?

答:不行

三、方法3:Lateral view explode

val friends = spark.read.format("csv").option("header","true").load("hdfs://192.168.56.111:9000/party/data/user_friends.csv")

方法1:lateral view explode之 hive

friends.createOrReplaceTempView("friends") spark.sql(""" select count(1) from ( select distinct user,friends_1 from friends LATERAL VIEW explode(split(friends,' '))friends as friends_1 where friends_1 is not null and friends_1 !="" // hive在split(空格)的时候会把两个空格中间视为"" )T """).show()

>>> 30386387

方法2:explode之 sparkSQL-API

friends.select($"user".alias("user_id"),explode(split($"friends"," ")).alias("friends_1")).filter("friends_1 is not null").distinct.count

>>> 30386387

结果都是: