On the effectiveness of the dark channel prior for single image dehazing by approximating with minimum volume ellipsoid

Kristofer B. Gibson, Truong Q. Nguyen

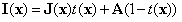

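For the haze imaging model $\mathbf{I}(x) = \mathbf{J}(x)\,t(x) + \mathbf{A}\,(1 - t(x))$, where $\mathbf{I}$ is the observed hazy image, $\mathbf{J}$ the haze-free image, $\mathbf{A}$ the airlight, and $t$ the transmission, principal component analysis (PCA) is used to analyze how the pixel values cluster into an ellipsoid.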

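Consider a window $\Omega$ of the hazy image that contains $N$ pixels in total. Inside this window there is a corresponding set of haze-free image vectors $\mathbf{J}_1, \dots, \mathbf{J}_N$ (all column vectors).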

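Let $\mathbf{J}$ be one of these 3×1 color vectors. Its 3×3 autocorrelation matrix, taken about the window mean, is:

$$\mathbf{R}_J = E\left[(\mathbf{J} - E[\mathbf{J}])(\mathbf{J} - E[\mathbf{J}])^{T}\right]$$

The K-L transform then gives:

$$\mathbf{R}_J = \mathbf{U}_J \boldsymbol{\Lambda}_J \mathbf{U}_J^{T}$$

The entries of the diagonal matrix $\boldsymbol{\Lambda}_J$ are the eigenvalues of $\mathbf{R}_J$ in decreasing order, that is, $\boldsymbol{\Lambda}_J = \mathrm{diag}(\lambda_1, \lambda_2, \lambda_3)$ with $\lambda_1 \ge \lambda_2 \ge \lambda_3$, where $\lambda_1$, $\lambda_2$, and $\lambda_3$ are the eigenvalues of the autocorrelation matrix $\mathbf{R}_J$, and the 3×3 matrix $\mathbf{U}_J$ is orthonormal.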
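The eigen-decomposition described above can be sketched numerically. This is a minimal illustration, not the authors' code: the window data is synthetic, the helper name `window_pca` is hypothetical, and the matrix is computed about the window mean, which is an assumption of this sketch.

```python
import numpy as np

def window_pca(pixels):
    """Eigen-decompose the 3x3 second-moment matrix (about the mean)
    of an (N, 3) array of window color vectors."""
    mean = pixels.mean(axis=0)
    centered = pixels - mean
    R = centered.T @ centered / pixels.shape[0]
    # eigh is meant for symmetric matrices and returns eigenvalues in ascending order
    eigvals, eigvecs = np.linalg.eigh(R)
    order = np.argsort(eigvals)[::-1]  # reorder to decreasing eigenvalues
    return mean, eigvals[order], eigvecs[:, order]

rng = np.random.default_rng(0)
# Hypothetical haze-free window: 15 x 15 = 225 RGB vectors,
# with a different spread along each color axis.
spread = np.array([0.20, 0.05, 0.02])
pixels = np.clip(0.4 + rng.normal(size=(225, 3)) * spread, 0.0, 1.0)

mean, lam, U = window_pca(pixels)
print("eigenvalues (decreasing):", lam)
```

The columns of `U` give the axis directions of the ellipsoid that the color cluster occupies, and the eigenvalues in `lam` measure its spread along each axis.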

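Assume the transmission inside the rectangular window $\Omega$ is a constant $t$. The airlight term $(1 - t)\mathbf{A}$ is then the same for every pixel in the window and cancels when the window mean is removed, so $\mathbf{I} - E[\mathbf{I}] = t\,(\mathbf{J} - E[\mathbf{J}])$. The autocorrelation matrix of the hazy pixel values is therefore:

$$\mathbf{R}_I = E\left[(\mathbf{I} - E[\mathbf{I}])(\mathbf{I} - E[\mathbf{I}])^{T}\right] = t^{2}\, E\left[(\mathbf{J} - E[\mathbf{J}])(\mathbf{J} - E[\mathbf{J}])^{T}\right] = t^{2}\,\mathbf{R}_J$$

So the autocorrelation matrix of the pixel values in the hazy window and that of the haze-free window differ only by a scale factor. The corresponding diagonal matrices $\boldsymbol{\Lambda}$ satisfy the same proportionality. Writing the decomposition of the haze-free matrix as $\mathbf{R}_J = \mathbf{U}_J \boldsymbol{\Lambda}_J \mathbf{U}_J^{T}$, the derivation is:

$$\mathbf{R}_I = t^{2}\,\mathbf{R}_J = t^{2}\,\mathbf{U}_J \boldsymbol{\Lambda}_J \mathbf{U}_J^{T} = \mathbf{U}_J \left(t^{2} \boldsymbol{\Lambda}_J\right) \mathbf{U}_J^{T}$$

It follows that $\boldsymbol{\Lambda}_I = t^{2}\,\boldsymbol{\Lambda}_J$ and $\mathbf{U}_I = \mathbf{U}_J$.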
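The proportionality between the hazy and haze-free matrices can be checked numerically. The sketch below uses synthetic data; the airlight `A`, the transmission `t`, and the helper `second_moment` are hypothetical choices for illustration, and the matrices are computed about the window mean.

```python
import numpy as np

rng = np.random.default_rng(1)
J = rng.uniform(0.0, 1.0, size=(225, 3))  # hypothetical haze-free window colors
A = np.array([0.90, 0.90, 0.95])          # hypothetical airlight color
t = 0.6                                   # transmission, constant over the window

# Haze imaging model applied to every pixel in the window
I = t * J + (1.0 - t) * A

def second_moment(x):
    """3x3 second-moment matrix of the rows of x, taken about their mean."""
    c = x - x.mean(axis=0)
    return c.T @ c / x.shape[0]

R_J = second_moment(J)
R_I = second_moment(I)

# Eigenvalues in decreasing order
lam_J = np.linalg.eigvalsh(R_J)[::-1]
lam_I = np.linalg.eigvalsh(R_I)[::-1]

matrix_scales = np.allclose(R_I, t**2 * R_J)
eigenvalues_scale = np.allclose(lam_I, t**2 * lam_J)
print(matrix_scales, eigenvalues_scale)
```

Under these assumptions haze shrinks the ellipsoid uniformly (each eigenvalue by $t^2$) without rotating it.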

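For the haze imaging model $\mathbf{I}(x) = \mathbf{J}(x)\,t(x) + \mathbf{A}\,(1 - t(x))$, the transmission is $t = e^{-\beta d}$, where $d$ is the scene depth and $\beta$ the scattering coefficient. As $d \to 0$, $t \to 1$; as $d \to \infty$, $t \to 0$. The transmission therefore takes values in the range $0 \le t \le 1$.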
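A short numerical sketch of the transmission range, assuming the usual exponential form $t = e^{-\beta d}$ with scattering coefficient $\beta > 0$ and scene depth $d \ge 0$. The function name `transmission` and the sample depths are illustrative only.

```python
import numpy as np

def transmission(depth, beta=1.0):
    """Transmission t = exp(-beta * depth), for depth >= 0 and beta > 0."""
    depth = np.asarray(depth, dtype=float)
    return np.exp(-beta * depth)

# Sample depths from the camera plane (d = 0) to a very distant point
depths = np.array([0.0, 0.5, 1.0, 5.0, 50.0])
t = transmission(depths, beta=1.0)
print(t)
```

The values start at 1 for zero depth and decrease monotonically toward 0 as the depth grows, so they stay within the stated range.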

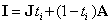

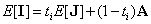

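Taking the expectation of both sides of the atmospheric scattering model gives the mean of the vectors inside the window:

$$E[\mathbf{I}] = t\,E[\mathbf{J}] + (1 - t)\,\mathbf{A}$$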

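For the mean of the clustered vectors: when $t = 1$, $E[\mathbf{I}] = E[\mathbf{J}]$; when $t = 0$, $E[\mathbf{I}] = \mathbf{A}$. So $E[\mathbf{I}]$ varies between $E[\mathbf{J}]$ and $\mathbf{A}$. This leads to the conclusion that, when the scene depth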