*Standing on the shoulders of giants, you can see further.*

Android 9.0 Native Looper Mechanism (Principles)

Android 9.0 Native Looper Mechanism (Applications)

Preface

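When analyzing native-layer code in the Android Framework, you will find that many places use Looper, an important helper class in the Android system, for inter-thread communication or to structure their processing logic. This article analyzes the Looper mechanism in depth to make its operating principles easier to understand.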

- First, some recommended reading:

https://blog.csdn.net/xiaosayidao/article/details/73992078

- In addition, understanding how Looper works requires an understanding of the epoll mechanism in Linux:

https://blog.csdn.net/xiajun07061225/article/details/9250579

- How eventfd is used in Linux:

https://blog.csdn.net/qq_28114615/article/details/97929524

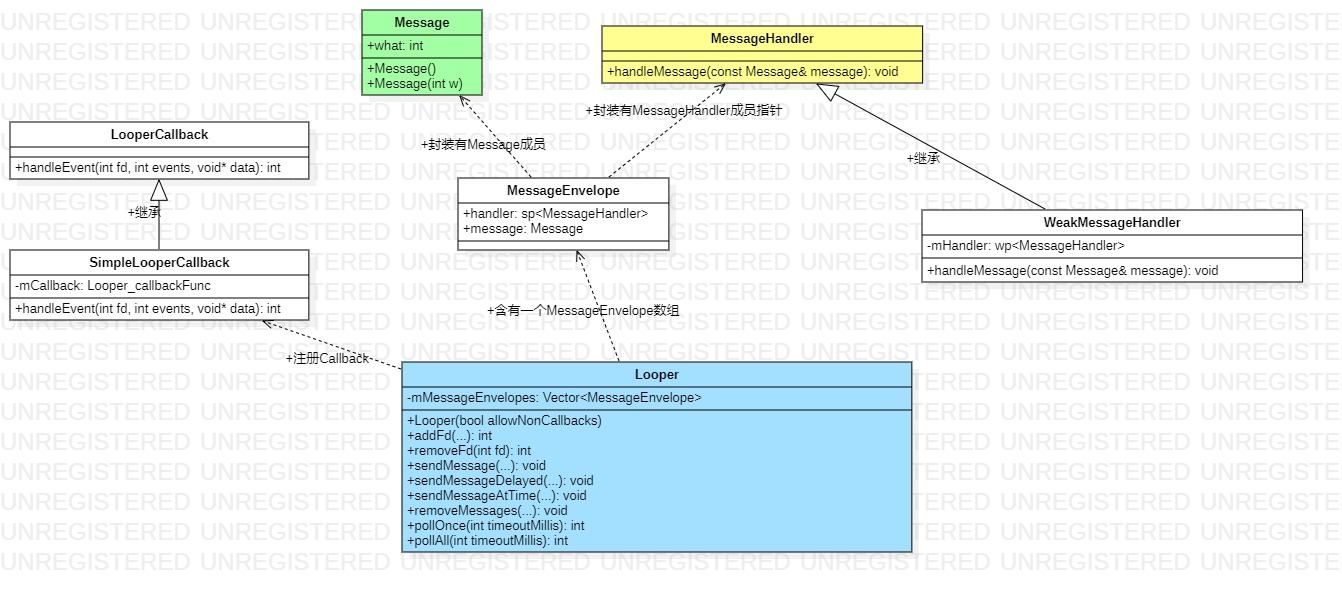

Basic Class Diagram

The native-layer message Looper of the Android Framework is mainly implemented in:

system/core/libutils/Looper.cpp

system/core/include/utils/Looper.h

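The basic class diagram is as follows:

Basic Objects

- Message: the carrier of a message. It represents an event, and its what field identifies which event it is. The source definition is: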

/**

* A message that can be posted to a Looper.

*/

struct Message {

Message() : what(0) { }

Message(int w) : what(w) { }

/* The message type. (interpretation is left up to the handler) */

int what;

};

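- MessageHandler/WeakMessageHandler: the message-handling interface (base class). Subclasses implement handleMessage to define how a particular Message is processed. WeakMessageHandler holds a weak pointer to a MessageHandler and forwards messages to it.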

/**

* Interface for a Looper message handler.

*

* The Looper holds a strong reference to the message handler whenever it has

* a message to deliver to it. Make sure to call Looper::removeMessages

* to remove any pending messages destined for the handler so that the handler

* can be destroyed.

*/

class MessageHandler : public virtual RefBase {

protected:

virtual ~MessageHandler();

public:

/**

* Handles a message.

*/

virtual void handleMessage(const Message& message) = 0;

};

/**

* A simple proxy that holds a weak reference to a message handler.

*/

class WeakMessageHandler : public MessageHandler {

protected:

virtual ~WeakMessageHandler();

public:

WeakMessageHandler(const wp<MessageHandler>& handler);

virtual void handleMessage(const Message& message);

private:

wp<MessageHandler> mHandler;

};
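A minimal standalone sketch of why WeakMessageHandler exists. It mirrors the two classes above, but as an assumption for portability it uses std::shared_ptr/std::weak_ptr in place of Android's sp<>/wp<> and RefBase, and it adds a hypothetical RecordingHandler. The proxy forwards a message only while the real handler is still alive.

```cpp
#include <cassert>
#include <memory>

// Simplified stand-in for android::Message.
struct Message {
    Message() : what(0) {}
    explicit Message(int w) : what(w) {}
    int what;  // interpretation is left up to the handler
};

// Simplified stand-in for android::MessageHandler (std::shared_ptr replaces sp<>).
class MessageHandler {
public:
    virtual ~MessageHandler() = default;
    virtual void handleMessage(const Message& message) = 0;
};

// Proxy holding only a weak reference, like WeakMessageHandler (std::weak_ptr replaces wp<>).
class WeakMessageHandler : public MessageHandler {
public:
    explicit WeakMessageHandler(const std::weak_ptr<MessageHandler>& handler)
        : mHandler(handler) {}

    void handleMessage(const Message& message) override {
        // Promote to a strong reference; silently drop the message if the target is gone.
        if (std::shared_ptr<MessageHandler> handler = mHandler.lock()) {
            handler->handleMessage(message);
        }
    }

private:
    std::weak_ptr<MessageHandler> mHandler;
};

// Hypothetical handler that records the last message type it received.
class RecordingHandler : public MessageHandler {
public:
    void handleMessage(const Message& message) override {
        lastWhat = message.what;
        ++count;
    }
    int lastWhat = -1;
    int count = 0;
};
```

With the real classes the effect is the same: the Looper keeps only the proxy alive while messages are queued, so the target handler can still be destroyed.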

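- LooperCallback/SimpleLooperCallback: callbacks invoked by the Looper. LooperCallback is the abstract base class; SimpleLooperCallback is a subclass that simply wraps a Looper_callbackFunc function pointer. The callback is set when an fd to be monitored is added with Looper::addFd().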

/**

* A looper callback.

*/

class LooperCallback : public virtual RefBase {

protected:

virtual ~LooperCallback();

public:

/**

* Handles a poll event for the given file descriptor.

* It is given the file descriptor it is associated with,

* a bitmask of the poll events that were triggered (typically EVENT_INPUT),

* and the data pointer that was originally supplied.

*

* Implementations should return 1 to continue receiving callbacks, or 0

* to have this file descriptor and callback unregistered from the looper.

*/

virtual int handleEvent(int fd, int events, void* data) = 0;

};

/**

* Wraps a Looper_callbackFunc function pointer.

*/

class SimpleLooperCallback : public LooperCallback {

protected:

virtual ~SimpleLooperCallback();

public:

SimpleLooperCallback(Looper_callbackFunc callback);

virtual int handleEvent(int fd, int events, void* data);

private:

Looper_callbackFunc mCallback;

};
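The contract of handleEvent() (return 1 to keep the registration, 0 to have it dropped) can be illustrated with a standalone sketch of SimpleLooperCallback. RefBase is omitted, and countingCallback is a hypothetical C-style callback that asks to be unregistered after its third event.

```cpp
#include <cassert>

// Same shape as the Looper_callbackFunc typedef used by SimpleLooperCallback.
typedef int (*Looper_callbackFunc)(int fd, int events, void* data);

// Simplified stand-in for android::LooperCallback (no RefBase).
class LooperCallback {
public:
    virtual ~LooperCallback() = default;
    // Return 1 to keep receiving callbacks, 0 to be unregistered.
    virtual int handleEvent(int fd, int events, void* data) = 0;
};

// Simplified stand-in for android::SimpleLooperCallback: adapts a plain function pointer.
class SimpleLooperCallback : public LooperCallback {
public:
    explicit SimpleLooperCallback(Looper_callbackFunc callback) : mCallback(callback) {}
    int handleEvent(int fd, int events, void* data) override {
        return mCallback(fd, events, data);
    }

private:
    Looper_callbackFunc mCallback;
};

// Hypothetical callback: counts invocations through `data` and
// returns 0 (unregister me) once it has seen three events.
static int countingCallback(int /*fd*/, int /*events*/, void* data) {
    int* counter = static_cast<int*>(data);
    ++*counter;
    return *counter < 3 ? 1 : 0;
}
```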

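- Looper: the core class. It maintains a message queue and a set of monitored fds. When the user calls pollOnce or pollAll, it checks whether any message needs to be processed (calling the corresponding handler's handleMessage) or whether any monitored fd has an event (invoking the corresponding callback).

Key Methods

- Create Looper: how a Looper is created

A Looper can be created in two ways:

1. Call the Looper constructor directly: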

Looper::Looper(bool allowNonCallbacks) :

mAllowNonCallbacks(allowNonCallbacks), mSendingMessage(false),

mPolling(false), mEpollFd(-1), mEpollRebuildRequired(false),

mNextRequestSeq(0), mResponseIndex(0), mNextMessageUptime(LLONG_MAX) {

mWakeEventFd = eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC);

LOG_ALWAYS_FATAL_IF(mWakeEventFd < 0, "Could not make wake event fd: %s",

strerror(errno));

AutoMutex _l(mLock);

rebuildEpollLocked();

}

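2. Call Looper's static function prepare(): if the current thread already has a Looper, that Looper is returned; only otherwise is a new Looper created and bound to the thread.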

sp<Looper> Looper::prepare(int opts) {

bool allowNonCallbacks = opts & PREPARE_ALLOW_NON_CALLBACKS;

sp<Looper> looper = Looper::getForThread();

if (looper == NULL) {

looper = new Looper(allowNonCallbacks);

Looper::setForThread(looper);

}

if (looper->getAllowNonCallbacks() != allowNonCallbacks) {

ALOGW("Looper already prepared for this thread with a different value for the "

"LOOPER_PREPARE_ALLOW_NON_CALLBACKS option.");

}

return looper;

}
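prepare() is essentially a per-thread lazy singleton. The sketch below reproduces its control flow in standard C++. As assumptions, it stores the per-thread Looper in a C++11 thread_local slot and uses std::shared_ptr instead of sp<>; the storage actually used by AOSP's getForThread()/setForThread() is not shown in this article and is not reproduced here.

```cpp
#include <cassert>
#include <cstdio>
#include <memory>

// Simplified stand-in for android::Looper: only what prepare() needs.
class Looper {
public:
    enum { PREPARE_ALLOW_NON_CALLBACKS = 1 << 0 };

    explicit Looper(bool allowNonCallbacks) : mAllowNonCallbacks(allowNonCallbacks) {}
    bool getAllowNonCallbacks() const { return mAllowNonCallbacks; }

    static std::shared_ptr<Looper> getForThread() { return threadSlot(); }
    static void setForThread(const std::shared_ptr<Looper>& looper) { threadSlot() = looper; }

    // Same control flow as Looper::prepare() above.
    static std::shared_ptr<Looper> prepare(int opts) {
        bool allowNonCallbacks = (opts & PREPARE_ALLOW_NON_CALLBACKS) != 0;
        std::shared_ptr<Looper> looper = getForThread();
        if (looper == nullptr) {
            looper = std::make_shared<Looper>(allowNonCallbacks);
            setForThread(looper);
        }
        if (looper->getAllowNonCallbacks() != allowNonCallbacks) {
            std::fprintf(stderr, "Looper already prepared for this thread with a different "
                                 "value for the PREPARE_ALLOW_NON_CALLBACKS option.\n");
        }
        return looper;
    }

private:
    // One slot per thread (assumption: thread_local instead of the real TLS mechanism).
    static std::shared_ptr<Looper>& threadSlot() {
        static thread_local std::shared_ptr<Looper> slot;
        return slot;
    }

    const bool mAllowNonCallbacks;
};
```

Note that a second prepare() with a different option does not create a new Looper; it only logs a warning and returns the existing one.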

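The Looper constructor does two main things:

- It calls eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC) to create mWakeEventFd, which is used to wake up epoll_wait().

- It calls rebuildEpollLocked() to create the epoll file descriptor and add mWakeEventFd to the epoll interest list (rebuildEpollLocked() also re-registers any fds already recorded in mRequests):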

void Looper::rebuildEpollLocked() {

// Close old epoll instance if we have one.

if (mEpollFd >= 0) {

#if DEBUG_CALLBACKS

ALOGD("%p ~ rebuildEpollLocked - rebuilding epoll set", this);

#endif

close(mEpollFd);

}

// Allocate the new epoll instance and register the wake pipe.

mEpollFd = epoll_create(EPOLL_SIZE_HINT);

LOG_ALWAYS_FATAL_IF(mEpollFd < 0, "Could not create epoll instance: %s", strerror(errno));

struct epoll_event eventItem;

memset(& eventItem, 0, sizeof(epoll_event)); // zero out unused members of data field union

eventItem.events = EPOLLIN;

eventItem.data.fd = mWakeEventFd;

int result = epoll_ctl(mEpollFd, EPOLL_CTL_ADD, mWakeEventFd, & eventItem);

LOG_ALWAYS_FATAL_IF(result != 0, "Could not add wake event fd to epoll instance: %s",

strerror(errno));

for (size_t i = 0; i < mRequests.size(); i++) {

const Request& request = mRequests.valueAt(i);

struct epoll_event eventItem;

request.initEventItem(&eventItem);

int epollResult = epoll_ctl(mEpollFd, EPOLL_CTL_ADD, request.fd, & eventItem);

if (epollResult < 0) {

ALOGE("Error adding epoll events for fd %d while rebuilding epoll set: %s",

request.fd, strerror(errno));

}

}

}

Note: the allowNonCallbacks parameter indicates whether addFd() may be called without providing a callback.
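The wake mechanism that the constructor and rebuildEpollLocked() set up can be tried in isolation on Linux. This sketch uses hypothetical names (WakeChannel, openWakeChannel, wakeChannel, waitOnce, closeWakeChannel). It creates the eventfd and the epoll instance, adds 1 to the eventfd counter to wake the poll (assumed to match what Looper::wake() does), and drains the counter the way awoken() is described to. As a deliberate substitution it calls epoll_create1(EPOLL_CLOEXEC) instead of epoll_create(EPOLL_SIZE_HINT).

```cpp
#include <cassert>
#include <cerrno>
#include <cstdint>
#include <sys/epoll.h>
#include <sys/eventfd.h>
#include <unistd.h>

// Hypothetical holder for the two fds the Looper constructor creates.
struct WakeChannel {
    int wakeEventFd = -1;
    int epollFd = -1;
};

// Create the eventfd and the epoll instance, then register the eventfd for EPOLLIN.
bool openWakeChannel(WakeChannel& ch) {
    ch.wakeEventFd = eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC);
    if (ch.wakeEventFd < 0) return false;
    ch.epollFd = epoll_create1(EPOLL_CLOEXEC);
    if (ch.epollFd < 0) return false;
    epoll_event eventItem{};  // value-initialized, like the memset in rebuildEpollLocked()
    eventItem.events = EPOLLIN;
    eventItem.data.fd = ch.wakeEventFd;
    return epoll_ctl(ch.epollFd, EPOLL_CTL_ADD, ch.wakeEventFd, &eventItem) == 0;
}

// Wake: add 1 to the eventfd counter so the fd becomes readable.
void wakeChannel(const WakeChannel& ch) {
    uint64_t inc = 1;
    ssize_t n;
    do {
        n = write(ch.wakeEventFd, &inc, sizeof(inc));
    } while (n == -1 && errno == EINTR);
}

// One bounded wait. If the wake fd fired, read it to reset the counter to 0.
// Returns the epoll_wait() result.
int waitOnce(const WakeChannel& ch, int timeoutMillis) {
    epoll_event items[8];
    int eventCount = epoll_wait(ch.epollFd, items, 8, timeoutMillis);
    for (int i = 0; i < eventCount; i++) {
        if (items[i].data.fd == ch.wakeEventFd && (items[i].events & EPOLLIN)) {
            uint64_t counter;
            ssize_t n = read(ch.wakeEventFd, &counter, sizeof(counter));
            (void)n;
        }
    }
    return eventCount;
}

void closeWakeChannel(WakeChannel& ch) {
    if (ch.epollFd >= 0) close(ch.epollFd);
    if (ch.wakeEventFd >= 0) close(ch.wakeEventFd);
    ch.epollFd = ch.wakeEventFd = -1;
}
```

Because epoll is level-triggered by default, the wake fd keeps reporting EPOLLIN until it is read, which is why draining it matters.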

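- Send Message: how messages are sent

Sending a message means inserting it into the message queue mMessageEnvelopes. The MessageEnvelope entries in mMessageEnvelopes are kept sorted by time: the smaller the index, the earlier the message is processed.

There are three functions for sending messages, and all of them are ultimately implemented by sendMessageAtTime():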

/**

* Enqueues a message to be processed by the specified handler.

*

* The handler must not be null.

* This method can be called on any thread.

*/

void sendMessage(const sp<MessageHandler>& handler, const Message& message);

/**

* Enqueues a message to be processed by the specified handler after all pending messages

* after the specified delay.

*

* The time delay is specified in uptime nanoseconds.

* The handler must not be null.

* This method can be called on any thread.

*/

void sendMessageDelayed(nsecs_t uptimeDelay, const sp<MessageHandler>& handler,

const Message& message);

/**

* Enqueues a message to be processed by the specified handler after all pending messages

* at the specified time.

*

* The time is specified in uptime nanoseconds.

* The handler must not be null.

* This method can be called on any thread.

*/

void sendMessageAtTime(nsecs_t uptime, const sp<MessageHandler>& handler,

const Message& message);

Here is the implementation of sendMessageAtTime():

void Looper::sendMessageAtTime(nsecs_t uptime, const sp<MessageHandler>& handler,

const Message& message) {

#if DEBUG_CALLBACKS

ALOGD("%p ~ sendMessageAtTime - uptime=%" PRId64 ", handler=%p, what=%d",

this, uptime, handler.get(), message.what);

#endif

size_t i = 0;

{ // acquire lock

AutoMutex _l(mLock);

size_t messageCount = mMessageEnvelopes.size();

while (i < messageCount && uptime >= mMessageEnvelopes.itemAt(i).uptime) {

i += 1;

}

MessageEnvelope messageEnvelope(uptime, handler, message);

mMessageEnvelopes.insertAt(messageEnvelope, i, 1);

// Optimization: If the Looper is currently sending a message, then we can skip

// the call to wake() because the next thing the Looper will do after processing

// messages is to decide when the next wakeup time should be. In fact, it does

// not even matter whether this code is running on the Looper thread.

if (mSendingMessage) {

return;

}

} // release lock

// Wake the poll loop only when we enqueue a new message at the head.

if (i == 0) {

wake();

}

}

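First, it walks mMessageEnvelopes to find the insertion point for uptime (after every message whose uptime is earlier than or equal to it), wraps the message in a MessageEnvelope, and inserts it at that position.

Then it decides whether to wake the Looper:

- If the Looper is currently dispatching messages (mSendingMessage is true), there is no need to wake it, because after it finishes processing messages it recomputes the next wakeup time for epoll_wait().

- If the message was inserted at the head of the queue, the Looper must be woken immediately. Otherwise no wake is needed, since an earlier message already determines the wakeup time.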
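The ordering and wake rules of sendMessageAtTime() reduce to two small functions. This sketch uses a hypothetical, trimmed Envelope that keeps only uptime and what. Because the scan also skips entries with an equal uptime, messages posted for the same time keep FIFO order.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical, trimmed MessageEnvelope.
struct Envelope {
    int64_t uptime;  // due time in uptime nanoseconds
    int what;
};

// Same linear scan as sendMessageAtTime(): skip every entry that is due at or
// before the new one, then insert. Returns the insert index.
size_t insertByUptime(std::vector<Envelope>& queue, const Envelope& envelope) {
    size_t i = 0;
    while (i < queue.size() && envelope.uptime >= queue[i].uptime) {
        i += 1;
    }
    queue.insert(queue.begin() + static_cast<std::ptrdiff_t>(i), envelope);
    return i;
}

// Wake decision: never while dispatching, otherwise only for a new head.
bool shouldWake(size_t insertIndex, bool sendingMessage) {
    return !sendingMessage && insertIndex == 0;
}
```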

Methods for removing messages, removeMessages(), are also provided:

/**

* Removes all messages for the specified handler from the queue.

*

* The handler must not be null.

* This method can be called on any thread.

*/

void removeMessages(const sp<MessageHandler>& handler);

/**

* Removes all messages of a particular type for the specified handler from the queue.

*

* The handler must not be null.

* This method can be called on any thread.

*/

void removeMessages(const sp<MessageHandler>& handler, int what);
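A sketch of the two removeMessages() overloads over a plain vector. Assumptions: the handler is identified by a raw pointer instead of sp<MessageHandler>, the functions return how many messages they removed (the real ones return void), and the standard erase-remove idiom is used, which keeps the remaining messages in their time order.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical envelope; the handler is identified by pointer only.
struct Envelope {
    int64_t uptime;
    const void* handler;
    int what;
};

// Remove every message destined for `handler`.
size_t removeMessages(std::vector<Envelope>& queue, const void* handler) {
    size_t before = queue.size();
    queue.erase(std::remove_if(queue.begin(), queue.end(),
                               [handler](const Envelope& e) { return e.handler == handler; }),
                queue.end());
    return before - queue.size();
}

// Remove only the messages of type `what` destined for `handler`.
size_t removeMessages(std::vector<Envelope>& queue, const void* handler, int what) {
    size_t before = queue.size();
    queue.erase(std::remove_if(queue.begin(), queue.end(),
                               [handler, what](const Envelope& e) {
                                   return e.handler == handler && e.what == what;
                               }),
                queue.end());
    return before - queue.size();
}
```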

- Add fd: adding a file descriptor

Adds a new file descriptor to be monitored for events and sets its callback. Two overloads are provided:

/**

* Adds a new file descriptor to be polled by the looper.

* If the same file descriptor was previously added, it is replaced.

*

* "fd" is the file descriptor to be added.

* "ident" is an identifier for this event, which is returned from pollOnce().

* The identifier must be >= 0, or POLL_CALLBACK if providing a non-NULL callback.

* "events" are the poll events to wake up on. Typically this is EVENT_INPUT.

* "callback" is the function to call when there is an event on the file descriptor.

* "data" is a private data pointer to supply to the callback.

*

* There are two main uses of this function:

*

* (1) If "callback" is non-NULL, then this function will be called when there is

* data on the file descriptor. It should execute any events it has pending,

* appropriately reading from the file descriptor. The 'ident' is ignored in this case.

*

* (2) If "callback" is NULL, the 'ident' will be returned by Looper_pollOnce

* when its file descriptor has data available, requiring the caller to take

* care of processing it.

*

* Returns 1 if the file descriptor was added, 0 if the arguments were invalid.

*

* This method can be called on any thread.

* This method may block briefly if it needs to wake the poll.

*

* The callback may either be specified as a bare function pointer or as a smart

* pointer callback object. The smart pointer should be preferred because it is

* easier to avoid races when the callback is removed from a different thread.

* See removeFd() for details.

*/

int addFd(int fd, int ident, int events, Looper_callbackFunc callback, void* data);

int addFd(int fd, int ident, int events, const sp<LooperCallback>& callback, void* data);

Here is the implementation:

int Looper::addFd(int fd, int ident, int events, const sp<LooperCallback>& callback, void* data) {

#if DEBUG_CALLBACKS

ALOGD("%p ~ addFd - fd=%d, ident=%d, events=0x%x, callback=%p, data=%p", this, fd, ident,

events, callback.get(), data);

#endif

if (!callback.get()) {

if (! mAllowNonCallbacks) {

ALOGE("Invalid attempt to set NULL callback but not allowed for this looper.");

return -1;

}

if (ident < 0) {

ALOGE("Invalid attempt to set NULL callback with ident < 0.");

return -1;

}

} else {

ident = POLL_CALLBACK;

}

{ // acquire lock

AutoMutex _l(mLock);

Request request;

request.fd = fd;

request.ident = ident;

request.events = events;

request.seq = mNextRequestSeq++;

request.callback = callback;

request.data = data;

if (mNextRequestSeq == -1) mNextRequestSeq = 0; // reserve sequence number -1

struct epoll_event eventItem;

request.initEventItem(&eventItem);

ssize_t requestIndex = mRequests.indexOfKey(fd);

if (requestIndex < 0) {

int epollResult = epoll_ctl(mEpollFd, EPOLL_CTL_ADD, fd, & eventItem);

if (epollResult < 0) {

ALOGE("Error adding epoll events for fd %d: %s", fd, strerror(errno));

return -1;

}

mRequests.add(fd, request);

} else {

int epollResult = epoll_ctl(mEpollFd, EPOLL_CTL_MOD, fd, & eventItem);

if (epollResult < 0) {

if (errno == ENOENT) {

// Tolerate ENOENT because it means that an older file descriptor was

// closed before its callback was unregistered and meanwhile a new

// file descriptor with the same number has been created and is now

// being registered for the first time. This error may occur naturally

// when a callback has the side-effect of closing the file descriptor

// before returning and unregistering itself. Callback sequence number

// checks further ensure that the race is benign.

//

// Unfortunately due to kernel limitations we need to rebuild the epoll

// set from scratch because it may contain an old file handle that we are

// now unable to remove since its file descriptor is no longer valid.

// No such problem would have occurred if we were using the poll system

// call instead, but that approach carries others disadvantages.

#if DEBUG_CALLBACKS

ALOGD("%p ~ addFd - EPOLL_CTL_MOD failed due to file descriptor "

"being recycled, falling back on EPOLL_CTL_ADD: %s",

this, strerror(errno));

#endif

epollResult = epoll_ctl(mEpollFd, EPOLL_CTL_ADD, fd, & eventItem);

if (epollResult < 0) {

ALOGE("Error modifying or adding epoll events for fd %d: %s",

fd, strerror(errno));

return -1;

}

scheduleEpollRebuildLocked();

} else {

ALOGE("Error modifying epoll events for fd %d: %s", fd, strerror(errno));

return -1;

}

}

mRequests.replaceValueAt(requestIndex, request);

}

} // release lock

return 1;

}

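It does three main things:

1. Wraps the fd to monitor, the events mask, the callback, and the extra data pointer into a Request object (ident is forced to POLL_CALLBACK when a callback is supplied, and the Request gets a fresh sequence number seq);

2. Calls epoll_ctl to start monitoring fd (EPOLL_CTL_ADD for a new fd, EPOLL_CTL_MOD for an fd that is already registered);

3. Adds the Request to mRequests (KeyedVector<int, Request> mRequests), replacing any previous Request for the same fd.

Note that although the header comment says addFd() returns 0 for invalid arguments, the implementation above returns -1 in those cases.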
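The argument checks and the mRequests bookkeeping of addFd() can be separated from epoll. In this sketch (hypothetical RequestTable), the Request is trimmed and the callback is reduced to a bool, std::map stands in for KeyedVector, and the epoll_ctl() calls are left out. The real code also skips the reserved sequence number -1, which this sketch does not model.

```cpp
#include <cassert>
#include <map>

// Same value as POLL_CALLBACK in the Looper result enum.
constexpr int POLL_CALLBACK = -2;

// Hypothetical, trimmed Request (the real one also holds the callback and data).
struct Request {
    int fd;
    int ident;
    int events;
    int seq;
    bool hasCallback;
};

class RequestTable {
public:
    explicit RequestTable(bool allowNonCallbacks) : mAllowNonCallbacks(allowNonCallbacks) {}

    // Returns 1 on success and -1 on invalid arguments, like the implementation above.
    int addFd(int fd, int ident, int events, bool hasCallback) {
        if (!hasCallback) {
            if (!mAllowNonCallbacks) return -1;  // NULL callback not allowed for this looper
            if (ident < 0) return -1;            // NULL callback requires ident >= 0
        } else {
            ident = POLL_CALLBACK;               // ident is ignored when a callback is given
        }
        Request request{fd, ident, events, mNextRequestSeq++, hasCallback};
        mRequests[fd] = request;                 // add, or replace the old entry for fd
        return 1;
    }

    const std::map<int, Request>& requests() const { return mRequests; }

private:
    bool mAllowNonCallbacks;
    int mNextRequestSeq = 0;
    std::map<int, Request> mRequests;  // stands in for KeyedVector<int, Request>
};
```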

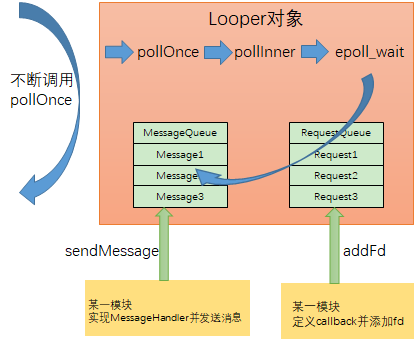

- Poll Looper: the polling methods

A Looper processes nothing until it is driven by polling. Looper provides the pollOnce() interface for this:

int Looper::pollOnce(int timeoutMillis, int* outFd, int* outEvents, void** outData);

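pollOnce() returns one of the following four result codes, or, for an fd that was added without a callback, that fd's ident (which is >= 0):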

enum {

/**

* Result from Looper_pollOnce() and Looper_pollAll():

* The poll was awoken using wake() before the timeout expired

* and no callbacks were executed and no other file descriptors were ready.

*/

POLL_WAKE = -1, // woken by wake() before the timeout; no callback ran and no fd was ready

/**

* Result from Looper_pollOnce() and Looper_pollAll():

* One or more callbacks were executed.

*/

POLL_CALLBACK = -2, // one or more callbacks were executed

/**

* Result from Looper_pollOnce() and Looper_pollAll():

* The timeout expired.

*/

POLL_TIMEOUT = -3, // the timeout expired

/**

* Result from Looper_pollOnce() and Looper_pollAll():

* An error occurred.

*/

POLL_ERROR = -4, // an error occurred

};

If the Looper has no events or messages to process, it blocks in epoll_wait() until an event arrives or the timeout expires.

Call chain: pollOnce() -> pollInner() -> epoll_wait()

struct epoll_event eventItems[EPOLL_MAX_EVENTS];

int eventCount = epoll_wait(mEpollFd, eventItems, EPOLL_MAX_EVENTS, timeoutMillis);

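epoll_wait() returns in three situations; its return value eventCount is the number of epoll events reported:

- On error: eventCount < 0;

- On timeout: eventCount == 0, meaning none of the monitored file descriptors had an event; processing proceeds directly to native message handling;

- When events occur on monitored file descriptors: eventCount > 0, and eventCount epoll events are reported.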
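How pollInner() turns the epoll_wait() return value into a preliminary result, written as a hypothetical helper resultForEpollWait(). It assumes, based on the AOSP pollInner() source rather than the excerpts in this article, that the result starts as POLL_WAKE and that an interrupted call (errno == EINTR) is not treated as an error.

```cpp
#include <cassert>
#include <cerrno>

// Result codes as declared in the Looper enum.
enum {
    POLL_WAKE = -1,
    POLL_CALLBACK = -2,
    POLL_TIMEOUT = -3,
    POLL_ERROR = -4,
};

// Hypothetical helper. `err` is the errno captured right after epoll_wait().
int resultForEpollWait(int eventCount, int err) {
    int result = POLL_WAKE;       // pollInner() starts from POLL_WAKE
    if (eventCount < 0) {
        if (err != EINTR) {
            result = POLL_ERROR;  // a real failure
        }                         // EINTR: interrupted by a signal, stay POLL_WAKE
    } else if (eventCount == 0) {
        result = POLL_TIMEOUT;    // nothing became ready before the timeout
    }
    // eventCount > 0 stays POLL_WAKE for now; dispatching messages or fd
    // callbacks later in pollInner() may change it to POLL_CALLBACK.
    return result;
}
```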

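After epoll_wait() returns, pollInner() first checks for events on the monitored file descriptors, wraps each event mask together with the corresponding Request into a Response object, and appends it to the mResponses queue (Vector<Response> mResponses):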

for (int i = 0; i < eventCount; i++) {

int fd = eventItems[i].data.fd;

uint32_t epollEvents = eventItems[i].events;

if (fd == mWakeEventFd) {

if (epollEvents & EPOLLIN) {

awoken();

} else {

ALOGW("Ignoring unexpected epoll events 0x%x on wake event fd.", epollEvents);

}

} else {

ssize_t requestIndex = mRequests.indexOfKey(fd);

if (requestIndex >= 0) {

int events = 0;

if (epollEvents & EPOLLIN) events |= EVENT_INPUT;

if (epollEvents & EPOLLOUT) events |= EVENT_OUTPUT;

if (epollEvents & EPOLLERR) events |= EVENT_ERROR;

if (epollEvents & EPOLLHUP) events |= EVENT_HANGUP;

pushResponse(events, mRequests.valueAt(requestIndex));

} else {

ALOGW("Ignoring unexpected epoll events 0x%x on fd %d that is "

"no longer registered.", epollEvents, fd);

}

}

}
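The loop above translates epoll flags into Looper EVENT_* flags; registration (Request::initEventItem(), whose body is not listed in this article) goes the other way. A sketch of both directions: the EVENT_* values restate the enum in utils/Looper.h and should be checked against the header for your Android version, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <sys/epoll.h>

// Looper event flags, restated so the sketch is self-contained.
enum {
    EVENT_INPUT = 1 << 0,
    EVENT_OUTPUT = 1 << 1,
    EVENT_ERROR = 1 << 2,
    EVENT_HANGUP = 1 << 3,
};

// Registration direction: only input/output are requested, because the kernel
// always reports EPOLLERR and EPOLLHUP whether or not they are asked for.
uint32_t toEpollEvents(int looperEvents) {
    uint32_t epollEvents = 0;
    if (looperEvents & EVENT_INPUT) epollEvents |= EPOLLIN;
    if (looperEvents & EVENT_OUTPUT) epollEvents |= EPOLLOUT;
    return epollEvents;
}

// Delivery direction, as in the pollInner() loop above.
int toLooperEvents(uint32_t epollEvents) {
    int events = 0;
    if (epollEvents & EPOLLIN) events |= EVENT_INPUT;
    if (epollEvents & EPOLLOUT) events |= EVENT_OUTPUT;
    if (epollEvents & EPOLLERR) events |= EVENT_ERROR;
    if (epollEvents & EPOLLHUP) events |= EVENT_HANGUP;
    return events;
}
```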

Next, it walks the message queue, checks whether any message is due, and calls the corresponding handler->handleMessage(message):

while (mMessageEnvelopes.size() != 0) {

nsecs_t now = systemTime(SYSTEM_TIME_MONOTONIC);

const MessageEnvelope& messageEnvelope = mMessageEnvelopes.itemAt(0);

if (messageEnvelope.uptime <= now) {

// Remove the envelope from the list.

// We keep a strong reference to the handler until the call to handleMessage

// finishes. Then we drop it so that the handler can be deleted *before*

// we reacquire our lock.

{ // obtain handler

sp<MessageHandler> handler = messageEnvelope.handler;

Message message = messageEnvelope.message;

mMessageEnvelopes.removeAt(0);

mSendingMessage = true;

mLock.unlock();

#if DEBUG_POLL_AND_WAKE || DEBUG_CALLBACKS

ALOGD("%p ~ pollOnce - sending message: handler=%p, what=%d",

this, handler.get(), message.what);

#endif

handler->handleMessage(message);

} // release handler

mLock.lock();

mSendingMessage = false;

result = POLL_CALLBACK;

} else {

// The last message left at the head of the queue determines the next wakeup time.

mNextMessageUptime = messageEnvelope.uptime;

break;

}

}
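The essential pattern in the loop above is: take the envelope off the queue, set mSendingMessage, call the handler with mLock released, then relock. A standalone sketch with std::mutex and a hypothetical MessageQueue; unlike the real loop, it takes `now` as a parameter instead of reading the monotonic clock on each iteration, so that the example is deterministic.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <functional>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical envelope: the handler is any callable taking `what`.
struct Envelope {
    int64_t uptime;
    std::function<void(int)> handler;
    int what;
};

struct MessageQueue {
    std::mutex lock;
    std::deque<Envelope> envelopes;  // sorted by uptime, head first
    bool sendingMessage = false;
    int64_t nextMessageUptime = INT64_MAX;

    // Dispatch every envelope due at `now`; returns how many were dispatched.
    int dispatchDue(int64_t now) {
        int dispatched = 0;
        std::unique_lock<std::mutex> guard(lock);
        nextMessageUptime = INT64_MAX;
        while (!envelopes.empty()) {
            if (envelopes.front().uptime <= now) {
                {
                    Envelope envelope = std::move(envelopes.front());
                    envelopes.pop_front();
                    sendingMessage = true;
                    guard.unlock();
                    envelope.handler(envelope.what);  // runs without the lock
                }  // handler released before relocking, as in the code above
                guard.lock();
                sendingMessage = false;
                ++dispatched;
            } else {
                // The head is not due yet: it determines the next wakeup time.
                nextMessageUptime = envelopes.front().uptime;
                break;
            }
        }
        return dispatched;
    }
};
```

Releasing the lock around the handler call is what lets a handler post new messages to the same Looper without deadlocking; and because mSendingMessage is true at that moment, sendMessageAtTime() skips the redundant wake().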

It then walks the mResponses queue in the same way and invokes the corresponding response.request.callback->handleEvent(fd, events, data):

// Invoke all response callbacks.

for (size_t i = 0; i < mResponses.size(); i++) {

Response& response = mResponses.editItemAt(i);

if (response.request.ident == POLL_CALLBACK) {

int fd = response.request.fd;

int events = response.events;

void* data = response.request.data;

#if DEBUG_POLL_AND_WAKE || DEBUG_CALLBACKS

ALOGD("%p ~ pollOnce - invoking fd event callback %p: fd=%d, events=0x%x, data=%p",

this, response.request.callback.get(), fd, events, data);

#endif

// Invoke the callback. Note that the file descriptor may be closed by

// the callback (and potentially even reused) before the function returns so

// we need to be a little careful when removing the file descriptor afterwards.

int callbackResult = response.request.callback->handleEvent(fd, events, data);

if (callbackResult == 0) {

removeFd(fd, response.request.seq);

}

// Clear the callback reference in the response structure promptly because we

// will not clear the response vector itself until the next poll.

response.request.callback.clear();

result = POLL_CALLBACK;

}

}

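Note: each message is removed from the message queue with mMessageEnvelopes.removeAt(0) before its handler is invoked.

An fd, by contrast, is not removed from mRequests after every callback: it is unregistered through removeFd(fd, response.request.seq) only when handleEvent() returns 0. If it returns 1, the fd stays registered and continues to be monitored.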
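A sketch of the response loop together with a sequence-checked removeFd(fd, seq), using hypothetical, trimmed Request/Response types and std::function in place of sp<LooperCallback>. The seq check assumes removeFd() ignores a stale sequence number, which is how the real code avoids removing an fd that was closed and re-added with the same number during the callback; -1 is assumed to mean "any sequence".

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <vector>

constexpr int POLL_CALLBACK = -2;

// Hypothetical, trimmed Request; callback returns 1 = keep, 0 = unregister.
struct Request {
    int fd;
    int ident;
    int seq;
    std::function<int(int fd, int events)> callback;
};

struct Response {
    int events;
    Request request;  // a copy, so the callback survives removal from the table
};

// Remove fd only if it is still the same registration (or seq == -1).
bool removeFd(std::map<int, Request>& requests, int fd, int seq) {
    auto it = requests.find(fd);
    if (it == requests.end()) return false;
    if (seq != -1 && it->second.seq != seq) return false;  // fd was re-added meanwhile
    requests.erase(it);
    return true;
}

// Invoke callbacks for POLL_CALLBACK responses; returns how many ran.
int invokeResponseCallbacks(std::vector<Response>& responses, std::map<int, Request>& requests) {
    int invoked = 0;
    for (Response& response : responses) {
        if (response.request.ident != POLL_CALLBACK) continue;  // left for pollOnce() to return
        int callbackResult = response.request.callback(response.request.fd, response.events);
        if (callbackResult == 0) {
            removeFd(requests, response.request.fd, response.request.seq);
        }
        response.request.callback = nullptr;  // drop the callback reference promptly
        ++invoked;
    }
    return invoked;
}
```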

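In summary, pollInner() works as follows:

1. Adjust the timeout based on when the next message is due. mNextMessageUptime is the time at which the earliest pending message in mMessageEnvelopes is due, so pollInner() compares the delay until mNextMessageUptime with the timeoutMillis passed in by the caller and uses the smaller of the two. This determines how long epoll_wait() may block before returning.

2. Call epoll_wait().

3. Handle the epoll events returned by epoll_wait(), checking which fd each event occurred on:

   - If it is mWakeEventFd, awoken() is called. awoken() simply reads the data out of the eventfd and processing continues; the only purpose of this fd is to make epoll_wait() return from blocking.

   - If it is an fd added through Looper::addFd(), the event is not handled immediately; it is first pushed to mResponses and handled later.

4. Process the Messages in mMessageEnvelopes. If the message at the head of the queue is not yet due, update mNextMessageUptime.

5. Process the events just pushed into mResponses. Only events whose ident is POLL_CALLBACK are handled here; the others are returned to the caller by pollOnce().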
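Step 1 can be written as a small function. The timeout rule below is assumed to mirror the AOSP pollInner() code. The real code delegates the nanosecond-to-millisecond conversion to a libutils helper; the hypothetical delayMillisRoundedUp() re-implements it under the assumption that it rounds up (so epoll_wait() never returns before the message is due) and treats delays too large for an int as "no limit" (-1).

```cpp
#include <cassert>
#include <climits>
#include <cstdint>

// Hypothetical helper: milliseconds from `now` until `deadline` (both in ns),
// rounded up. 0 if already due, -1 if the delay does not fit in an int.
int delayMillisRoundedUp(int64_t now, int64_t deadline) {
    if (deadline <= now) return 0;
    uint64_t delayNs = static_cast<uint64_t>(deadline - now);
    if (delayNs > static_cast<uint64_t>(INT_MAX - 1) * 1000000ULL) return -1;
    return static_cast<int>((delayNs + 999999ULL) / 1000000ULL);
}

// timeoutMillis < 0 means "wait forever", 0 means "do not block".
// nextMessageUptime == INT64_MAX means "no pending message".
int adjustTimeout(int timeoutMillis, int64_t now, int64_t nextMessageUptime) {
    if (timeoutMillis != 0 && nextMessageUptime != INT64_MAX) {
        int messageTimeoutMillis = delayMillisRoundedUp(now, nextMessageUptime);
        if (messageTimeoutMillis >= 0 &&
            (timeoutMillis < 0 || messageTimeoutMillis < timeoutMillis)) {
            timeoutMillis = messageTimeoutMillis;
        }
    }
    return timeoutMillis;
}
```

For example, with an infinite caller timeout and a message due in 5.5 ms, epoll_wait() would be allowed to block for 6 ms.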

Basic Operating Mechanism

A rough, abstract outline of the processing flow (not necessarily precise):

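- Create a Message, specify its MessageHandler, and call sendMessage() to hand the message to the Looper.

- The Looper creates a MessageEnvelope from the Message and the MessageHandler, then adds the MessageEnvelope to its message queue mMessageEnvelopes.

- Besides handling Messages, the native Looper can also monitor specified file descriptors.

- addFd() adds the fd to be monitored to the epoll interest list, wraps the supplied fd, ident, callback, and data into a Request object, and adds it to the Looper's mRequests.

- External logic (for example, a dedicated thread) repeatedly calls pollOnce() -> pollInner() -> epoll_wait(), blocking until an event occurs or the timeout expires.

- When an event occurs on a monitored fd, epoll_wait() returns the epoll event. The Looper finds the corresponding Request in mRequests, combines it with the returned event type (EPOLLIN, EPOLLOUT, ..., converted into Looper EVENT_* flags) into a Response object, and adds it to mResponses.

- The Looper then processes the due messages in mMessageEnvelopes, and after that iterates over mResponses and handles each Response.

- This cycle repeats.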
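To tie the pieces together, here is a toy, messages-only Looper for Linux (hypothetical MiniLooper: no addFd(), no mResponses, minimal error handling). It follows the flow above: sendMessageAtTime() inserts in time order and wakes only for a new head, and pollOnce() shrinks the timeout to the next message, blocks in epoll_wait(), drains the eventfd, and dispatches due messages without holding the lock. It is a teaching sketch, not the libutils implementation.

```cpp
#include <cassert>
#include <cerrno>
#include <cstdint>
#include <ctime>
#include <functional>
#include <mutex>
#include <utility>
#include <vector>
#include <sys/epoll.h>
#include <sys/eventfd.h>
#include <unistd.h>

// Toy Looper (hypothetical): messages only.
class MiniLooper {
public:
    enum { POLL_WAKE = -1, POLL_CALLBACK = -2, POLL_TIMEOUT = -3, POLL_ERROR = -4 };

    MiniLooper() {
        mWakeFd = eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC);
        mEpollFd = epoll_create1(EPOLL_CLOEXEC);
        epoll_event item{};
        item.events = EPOLLIN;
        item.data.fd = mWakeFd;
        epoll_ctl(mEpollFd, EPOLL_CTL_ADD, mWakeFd, &item);
    }
    ~MiniLooper() { close(mEpollFd); close(mWakeFd); }
    MiniLooper(const MiniLooper&) = delete;
    MiniLooper& operator=(const MiniLooper&) = delete;

    // Monotonic clock in nanoseconds.
    static int64_t now() {
        timespec ts;
        clock_gettime(CLOCK_MONOTONIC, &ts);
        return int64_t(ts.tv_sec) * 1000000000LL + ts.tv_nsec;
    }

    // Sorted insert; wake only when the new message becomes the head.
    void sendMessageAtTime(int64_t uptime, std::function<void()> handler) {
        size_t i = 0;
        {
            std::lock_guard<std::mutex> guard(mLock);
            while (i < mQueue.size() && uptime >= mQueue[i].uptime) i++;
            mQueue.insert(mQueue.begin() + i, Envelope{uptime, std::move(handler)});
            if (mSending) return;
        }
        if (i == 0) wake();
    }

    // Shrink the timeout, wait, drain the wake fd, dispatch due messages.
    int pollOnce(int timeoutMillis) {
        {
            std::lock_guard<std::mutex> guard(mLock);
            if (timeoutMillis != 0 && !mQueue.empty()) {
                int64_t delay = mQueue.front().uptime - now();
                int msgMillis = delay <= 0 ? 0 : int((delay + 999999) / 1000000);
                if (timeoutMillis < 0 || msgMillis < timeoutMillis) timeoutMillis = msgMillis;
            }
        }
        epoll_event items[4];
        int eventCount = epoll_wait(mEpollFd, items, 4, timeoutMillis);
        int result = POLL_WAKE;
        if (eventCount < 0 && errno != EINTR) result = POLL_ERROR;
        if (eventCount == 0) result = POLL_TIMEOUT;
        for (int k = 0; k < eventCount; k++) {
            if (items[k].data.fd == mWakeFd) {  // drain the counter
                uint64_t counter;
                ssize_t n = read(mWakeFd, &counter, sizeof(counter));
                (void)n;
            }
        }
        std::unique_lock<std::mutex> guard(mLock);
        while (!mQueue.empty() && mQueue.front().uptime <= now()) {
            std::function<void()> handler = std::move(mQueue.front().handler);
            mQueue.erase(mQueue.begin());
            mSending = true;
            guard.unlock();
            handler();  // called without the lock so it may post new messages
            guard.lock();
            mSending = false;
            result = POLL_CALLBACK;
        }
        return result;
    }

    void wake() {
        uint64_t inc = 1;
        ssize_t n;
        do { n = write(mWakeFd, &inc, sizeof(inc)); } while (n == -1 && errno == EINTR);
    }

private:
    struct Envelope { int64_t uptime; std::function<void()> handler; };
    int mWakeFd = -1;
    int mEpollFd = -1;
    std::mutex mLock;
    std::vector<Envelope> mQueue;  // sorted by uptime, head first
    bool mSending = false;
};
```

Posting a message for a future time makes the next pollOnce() return POLL_WAKE early, because of the wake; the caller simply polls again, and the following call blocks only until the message is due.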