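Background

GoogLeNet won the ImageNet competition in 2014. The architecture showed that using more convolutions and deeper layers can produce better results.

The Inception structure it introduced also became the basis for many later models.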

Structure

Put simply, features drawn from a richer range of scales help improve recognition accuracy.

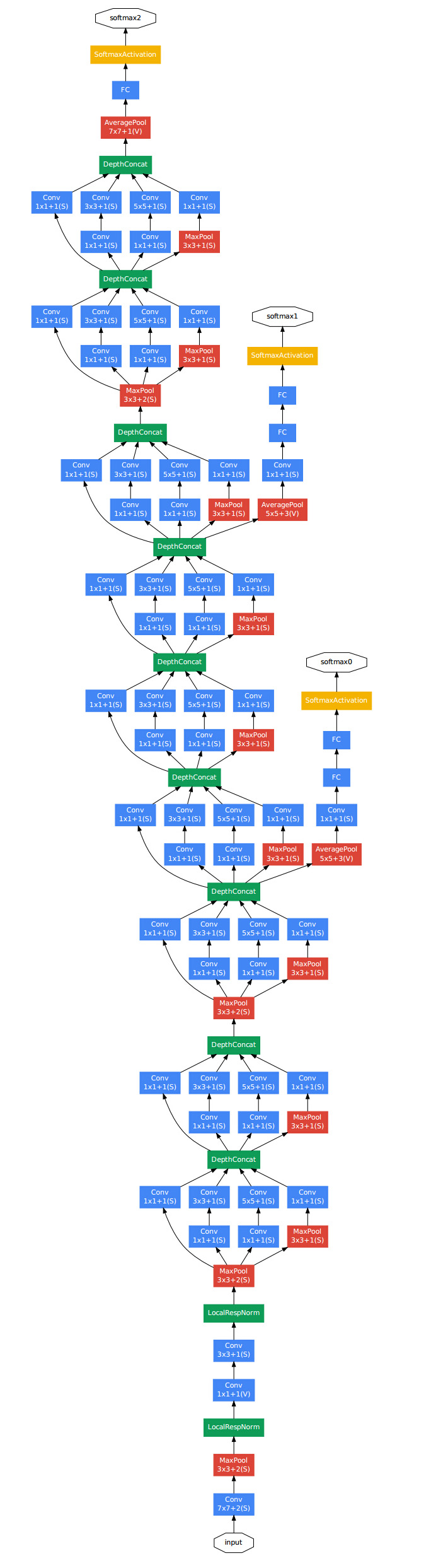

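The GoogLeNet structure is as follows:

Its building blocks are much the same as AlexNet's, except that several Inception modules sit in the middle.

An Inception module splits its input into four branches, applies convolutions of different sizes on those branches, and then stacks the resulting feature maps.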
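Stacking the four branches only works if every branch keeps the same spatial size. The sketch below checks this with the standard output-size formula, using the kernel and padding values that appear in the implementation later in this post. The helper names and the 28×28 input size are assumptions chosen for illustration.

```python
def conv_output_size(size, kernel, pad=0, stride=1):
    """Spatial output size of a convolution or pooling window (floor division)."""
    return (size + 2 * pad - kernel) // stride + 1


# (kernel, pad) of the size-determining layer in each branch; stride is 1 in all of them.
BRANCHES = {
    "1x1 conv": (1, 0),
    "3x3 conv": (3, 1),
    "5x5 conv": (5, 2),
    "3x3 pool": (3, 1),
}


def branch_output_sizes(size):
    """Output size of every branch for a square input of the given size."""
    return {name: conv_output_size(size, k, p) for name, (k, p) in BRANCHES.items()}


if __name__ == "__main__":
    # Assumed 28x28 input: every branch should report the same size.
    print(branch_output_sizes(28))
```

Because each padding equals (kernel - 1) / 2 and the stride is 1, all four outputs share one height and width, so they can be joined along the channel axis.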

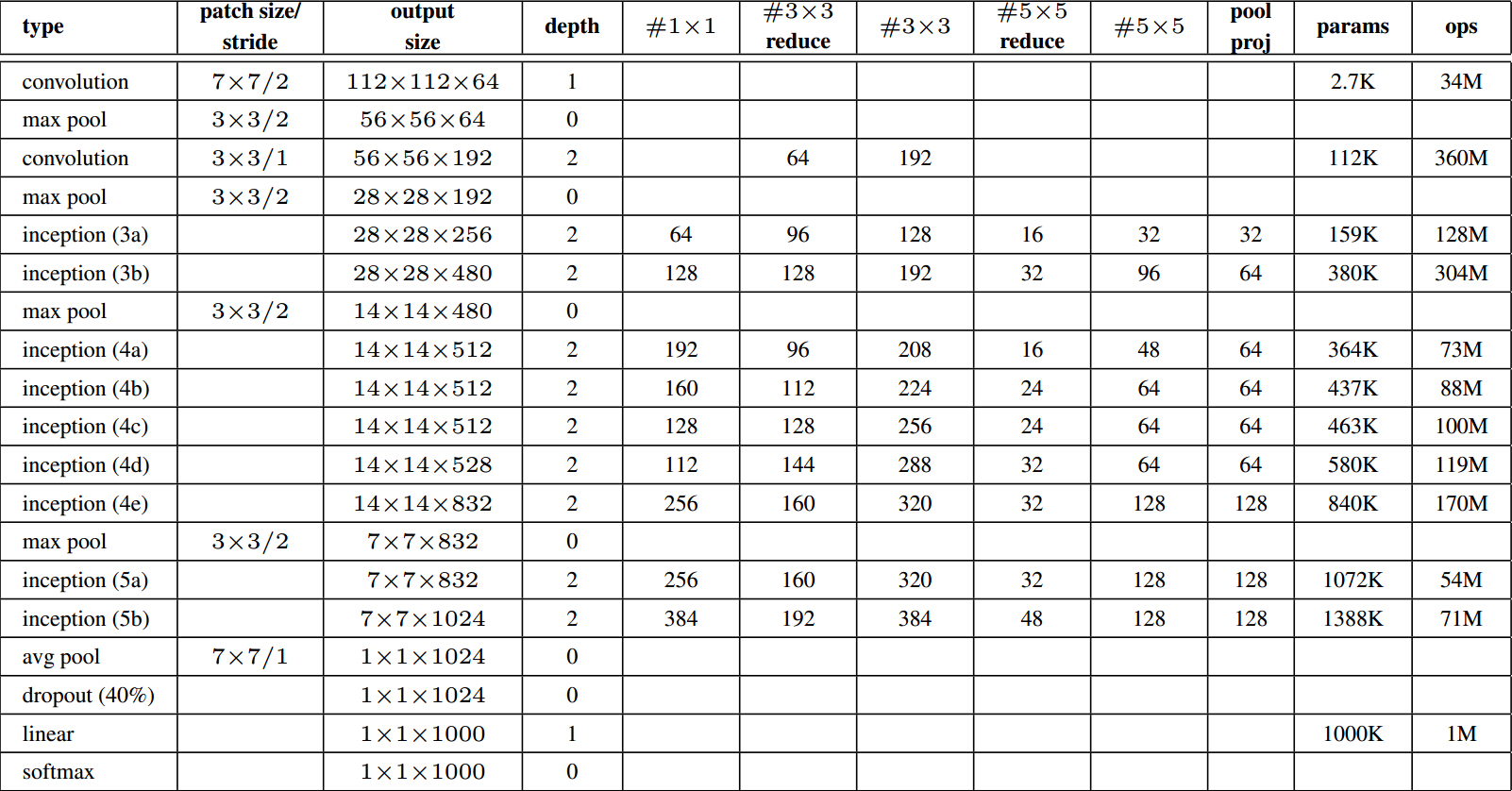

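The computational cost is shown in the figure: the total number of parameters is modest, but the number of operations is very large.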
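The gap between parameter count and operation count comes from weight sharing: a convolution reuses the same weights at every output position. A rough sketch of that arithmetic follows. The function name is hypothetical, biases are ignored, and the 56×56 output size is an assumed value rather than a number taken from this post; the 3×3 kernel with 64 input and 192 output channels mirrors conv3 in the implementation.

```python
def conv_cost(in_channels, out_channels, kernel, out_height, out_width):
    """Return (weights, multiply_adds) for one convolution layer, ignoring biases."""
    weights = kernel * kernel * in_channels * out_channels
    # Every weight is applied once per output position.
    multiply_adds = weights * out_height * out_width
    return weights, multiply_adds


if __name__ == "__main__":
    # 3x3 convolution, 64 -> 192 channels, on an assumed 56x56 output map.
    weights, macs = conv_cost(64, 192, 3, 56, 56)
    print("weights:", weights)
    print("multiply-adds:", macs)
    print("ratio:", macs // weights)
```

The ratio equals the number of output positions, which is why a layer with relatively few weights can still dominate the compute budget.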

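By computing the loss at several different layers and introducing the Inception structure, the design makes the network both deeper and wider while reducing the number of parameters.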
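One source of the parameter savings, judging from the `_reduce` layers in the implementation in this post, is a 1×1 convolution placed in front of the larger kernels. The sketch below compares weight counts for a 5×5 branch with and without that reduction, using the in3a numbers (192 input channels, 16 reduce channels, 32 output channels). The helper names are hypothetical and biases are ignored.

```python
def conv_weights(in_channels, out_channels, kernel):
    """Number of weights in a kernel x kernel convolution, ignoring biases."""
    return kernel * kernel * in_channels * out_channels


def reduced_conv_weights(in_channels, reduce_channels, out_channels, kernel):
    """Weights of a 1x1 reduction followed by a kernel x kernel convolution."""
    return (conv_weights(in_channels, reduce_channels, 1)
            + conv_weights(reduce_channels, out_channels, kernel))


if __name__ == "__main__":
    direct = conv_weights(192, 32, 5)
    reduced = reduced_conv_weights(192, 16, 32, 5)
    print("direct 5x5:", direct)
    print("1x1 reduce + 5x5:", reduced)
```

With these numbers the reduced branch needs roughly a tenth of the weights of a direct 5×5 convolution.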

Implementation

The implementation omits softmax0 and softmax1 from the structure diagram.

    import mxnet as mx


    def ConvFactory(data, num_filter, kernel, stride=(1, 1), pad=(0, 0), name=None, suffix=''):
        # Convolution followed by a ReLU activation.
        conv = mx.symbol.Convolution(data=data, num_filter=num_filter, kernel=kernel,
                                     stride=stride, pad=pad, name='conv_%s%s' % (name, suffix))
        act = mx.symbol.Activation(data=conv, act_type='relu', name='relu_%s%s' % (name, suffix))
        return act


    def InceptionFactory(data, num_1x1, num_3x3red, num_3x3, num_d5x5red, num_d5x5, pool, proj, name):
        # 1x1
        c1x1 = ConvFactory(data=data, num_filter=num_1x1, kernel=(1, 1), name=('%s_1x1' % name))
        # 3x3 reduce + 3x3
        c3x3r = ConvFactory(data=data, num_filter=num_3x3red, kernel=(1, 1),
                            name=('%s_3x3' % name), suffix='_reduce')
        c3x3 = ConvFactory(data=c3x3r, num_filter=num_3x3, kernel=(3, 3), pad=(1, 1),
                           name=('%s_3x3' % name))
        # 5x5 reduce + 5x5
        cd5x5r = ConvFactory(data=data, num_filter=num_d5x5red, kernel=(1, 1),
                             name=('%s_5x5' % name), suffix='_reduce')
        cd5x5 = ConvFactory(data=cd5x5r, num_filter=num_d5x5, kernel=(5, 5), pad=(2, 2),
                            name=('%s_5x5' % name))
        # pool + proj
        pooling = mx.symbol.Pooling(data=data, kernel=(3, 3), stride=(1, 1), pad=(1, 1),
                                    pool_type=pool, name=('%s_pool_%s_pool' % (pool, name)))
        cproj = ConvFactory(data=pooling, num_filter=proj, kernel=(1, 1), name=('%s_proj' % name))
        # concat: stack the four branches along the channel axis
        concat = mx.symbol.Concat(*[c1x1, c3x3, cd5x5, cproj], name='ch_concat_%s_chconcat' % name)
        return concat


    def get_symbol(num_classes=1000, **kwargs):
        data = mx.sym.Variable("data")
        # Stem: plain convolution and pooling layers
        conv1 = ConvFactory(data, 64, kernel=(7, 7), stride=(2, 2), pad=(3, 3), name="conv1")
        pool1 = mx.sym.Pooling(conv1, kernel=(3, 3), stride=(2, 2), pool_type="max")
        conv2 = ConvFactory(pool1, 64, kernel=(1, 1), stride=(1, 1), name="conv2")
        conv3 = ConvFactory(conv2, 192, kernel=(3, 3), stride=(1, 1), pad=(1, 1), name="conv3")
        pool3 = mx.sym.Pooling(conv3, kernel=(3, 3), stride=(2, 2), pool_type="max")
        # Inception stage 3
        in3a = InceptionFactory(pool3, 64, 96, 128, 16, 32, "max", 32, name="in3a")
        in3b = InceptionFactory(in3a, 128, 128, 192, 32, 96, "max", 64, name="in3b")
        pool4 = mx.sym.Pooling(in3b, kernel=(3, 3), stride=(2, 2), pool_type="max")
        # Inception stage 4
        in4a = InceptionFactory(pool4, 192, 96, 208, 16, 48, "max", 64, name="in4a")
        in4b = InceptionFactory(in4a, 160, 112, 224, 24, 64, "max", 64, name="in4b")
        in4c = InceptionFactory(in4b, 128, 128, 256, 24, 64, "max", 64, name="in4c")
        in4d = InceptionFactory(in4c, 112, 144, 288, 32, 64, "max", 64, name="in4d")
        in4e = InceptionFactory(in4d, 256, 160, 320, 32, 128, "max", 128, name="in4e")
        pool5 = mx.sym.Pooling(in4e, kernel=(3, 3), stride=(2, 2), pool_type="max")
        # Inception stage 5
        in5a = InceptionFactory(pool5, 256, 160, 320, 32, 128, "max", 128, name="in5a")
        in5b = InceptionFactory(in5a, 384, 192, 384, 48, 128, "max", 128, name="in5b")
        # Classifier head: average pooling, flatten, fully connected, softmax
        pool6 = mx.sym.Pooling(in5b, kernel=(7, 7), stride=(1, 1), pool_type="avg")
        flatten = mx.sym.Flatten(data=pool6)
        fc1 = mx.sym.FullyConnected(data=flatten, num_hidden=num_classes)
        softmax = mx.symbol.SoftmaxOutput(data=fc1, name='softmax')
        return softmax
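Since the code above leaves out softmax0 and softmax1, it may help to see how losses computed at several layers are usually combined during training. The sketch below is a plain weighted sum. The function name is hypothetical, and the default weight of 0.3 is the value reported in the GoogLeNet paper, not something stated in this post, so treat it as an assumption.

```python
def total_loss(main_loss, aux_losses, aux_weight=0.3):
    """Combine the main loss with down-weighted auxiliary losses.

    aux_weight=0.3 is an assumption taken from the GoogLeNet paper.
    """
    return main_loss + aux_weight * sum(aux_losses)


if __name__ == "__main__":
    # Illustrative loss values only; they are not measurements.
    print(total_loss(1.0, [2.0, 3.0]))
    # With no auxiliary heads, as in the code above, only the main loss remains.
    print(total_loss(1.0, []))
```

The auxiliary terms matter only during training; a network without them, like the one above, is trained on the final softmax alone.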

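The concat operation works as shown in the example that follows. In the first Inception module (in3a), c1x1, c3x3, cd5x5, and cproj have shapes 1×64×28×28, 1×128×28×28, 1×32×28×28, and 1×32×28×28, and concatenating them gives 1×256×28×28.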

    import mxnet.ndarray as nd

    x = nd.array([[1, 1], [2, 2]])
    y = nd.array([[3, 3], [4, 4], [5, 5]])
    z = nd.array([[6, 6], [7, 7], [8, 8]])

    # Concatenate along axis 0: the arrays are stacked vertically.
    a1 = nd.concat(x, y, z, dim=0)
    # Concatenate along axis 1: the arrays are placed side by side.
    # dim defaults to 1, and all other dimensions must match.
    a2 = nd.concat(y, z, dim=1)

    print(a1.asnumpy())
    print(a2.asnumpy())

    """
    [[ 1.  1.]
     [ 2.  2.]
     [ 3.  3.]
     [ 4.  4.]
     [ 5.  5.]
     [ 6.  6.]
     [ 7.  7.]
     [ 8.  8.]]
    [[ 3.  3.  6.  6.]
     [ 4.  4.  7.  7.]
     [ 5.  5.  8.  8.]]
    """
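The channel arithmetic for the first Inception module can be checked without MXNet. The sketch below computes only the resulting shape of a concatenation and enforces the rule that every dimension other than `dim` must match. `concat_shape` is a hypothetical helper written for this illustration, not an MXNet function.

```python
def concat_shape(shapes, dim=1):
    """Shape produced by concatenating arrays with the given shapes along `dim`."""
    first = shapes[0]
    for shape in shapes[1:]:
        if len(shape) != len(first):
            raise ValueError("all inputs must have the same number of dimensions")
        for axis, (a, b) in enumerate(zip(first, shape)):
            # Only the concatenation axis is allowed to differ.
            if axis != dim and a != b:
                raise ValueError("dimension %d differs: %d vs %d" % (axis, a, b))
    out = list(first)
    out[dim] = sum(shape[dim] for shape in shapes)
    return tuple(out)


if __name__ == "__main__":
    # Shapes of c1x1, c3x3, cd5x5, and cproj quoted in the text.
    branch_shapes = [(1, 64, 28, 28), (1, 128, 28, 28), (1, 32, 28, 28), (1, 32, 28, 28)]
    print(concat_shape(branch_shapes, dim=1))
```

Only the channel dimension grows, from 64 + 128 + 32 + 32 to 256, while the batch size and the spatial size stay unchanged.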