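This exercise uses the WebMagic framework to build a small, simple demo: a crawler for one Sina blog that walks its article-list pages, follows the link to each post, and saves every post's title, content, and date to files.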

```java
package com.mieba.spiader;

import us.codecraft.webmagic.Page;
import us.codecraft.webmagic.Site;
import us.codecraft.webmagic.Spider;
import us.codecraft.webmagic.pipeline.FilePipeline;
import us.codecraft.webmagic.processor.PageProcessor;

public class SinaPageProcessor implements PageProcessor
{
    // Article-list pages of the blog (regex backslashes are doubled inside Java string literals)
    public static final String URL_LIST = "http://blog\\.sina\\.com\\.cn/s/articlelist_1487828712_0_\\d+\\.html";

    // Individual blog posts
    public static final String URL_POST = "http://blog\\.sina\\.com\\.cn/s/blog_\\w+\\.html";

    // Crawl settings: domain, 3 retries, 3000 ms pause between requests, desktop-browser User-Agent
    private Site site = Site.me().setDomain("blog.sina.com.cn").setRetryTimes(3).setSleepTime(3000).setUserAgent(
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_2) AppleWebKit/537.31 (KHTML, like Gecko) Chrome/26.0.1410.65 Safari/537.31");

    @Override
    public void process(Page page)
    {
        // List page
        if (page.getUrl().regex(URL_LIST).match())
        {
            // Discover follow-up URLs to crawl: post links inside the article list...
            page.addTargetRequests(page.getHtml().xpath("//div[@class='articleList']").links().regex(URL_POST).all());
            // ...and links to the other list pages
            page.addTargetRequests(page.getHtml().links().regex(URL_LIST).all());

            // Article page
        } else
        {
            // Define how to extract the page's information and save it
            page.putField("title", page.getHtml().xpath("//div[@class='articalTitle']/h2"));
            page.putField("content", page.getHtml().xpath("//div[@id='articlebody']//div[@class='articalContent']"));
            // The date is wrapped in parentheses; the regex keeps only the text inside them
            page.putField("date", page.getHtml().xpath("//div[@id='articlebody']//span[@class='time SG_txtc']").regex("\\((.*)\\)"));
        }
    }

    @Override
    public Site getSite()
    {
        return site;
    }

    public static void main(String[] args)
    {
        Spider.create(new SinaPageProcessor())
                // Start crawling from "http://blog.sina.com.cn/s/articlelist_1487828712_0_1.html"
                .addUrl("http://blog.sina.com.cn/s/articlelist_1487828712_0_1.html")
                // Save the results as files (backslashes escaped in the Windows path)
                .addPipeline(new FilePipeline("E:\\webmagic\\"))
                // Crawl with 5 threads
                .thread(5)
                // Start the spider
                .run();
    }
}
```
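One way to sanity-check the three regular expressions before launching a crawl is to run them with plain `java.util.regex`, outside WebMagic. The sketch below is a hypothetical helper: `UrlPatternDemo` and its methods are not part of WebMagic or the original demo, the post URL in `main` uses a made-up post ID, and it assumes (without checking WebMagic's source) that `Matcher.find()` approximates how the `regex(...)` selector matches. Every regex backslash is written twice because Java string literals treat `\` as an escape character.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper (not part of WebMagic): replays the crawler's regexes offline.
public class UrlPatternDemo
{
    // Same patterns as SinaPageProcessor; note the doubled backslashes.
    static final Pattern URL_LIST = Pattern.compile("http://blog\\.sina\\.com\\.cn/s/articlelist_1487828712_0_\\d+\\.html");
    static final Pattern URL_POST = Pattern.compile("http://blog\\.sina\\.com\\.cn/s/blog_\\w+\\.html");
    static final Pattern DATE = Pattern.compile("\\((.*)\\)");

    // True if the list-page pattern occurs in the URL (find-style matching, an assumption).
    public static boolean isListPage(String url)
    {
        return URL_LIST.matcher(url).find();
    }

    // True if the post-page pattern occurs in the URL.
    public static boolean isPostPage(String url)
    {
        return URL_POST.matcher(url).find();
    }

    // Returns the text inside the outermost parentheses, or null if there are none.
    public static String extractDate(String text)
    {
        Matcher m = DATE.matcher(text);
        return m.find() ? m.group(1) : null;
    }

    public static void main(String[] args)
    {
        String list = "http://blog.sina.com.cn/s/articlelist_1487828712_0_1.html";
        // Hypothetical post ID, for illustration only
        String post = "http://blog.sina.com.cn/s/blog_58ae76e80100abcd.html";

        System.out.println(isListPage(list)); // true
        System.out.println(isPostPage(list)); // false
        System.out.println(isPostPage(post)); // true
        System.out.println(extractDate("(2013-04-21 10:30:00)")); // 2013-04-21 10:30:00
    }
}
```

Because the dots are escaped, a look-alike host such as `blogXsina.com.cn` is rejected; an unescaped `.` would match any character.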

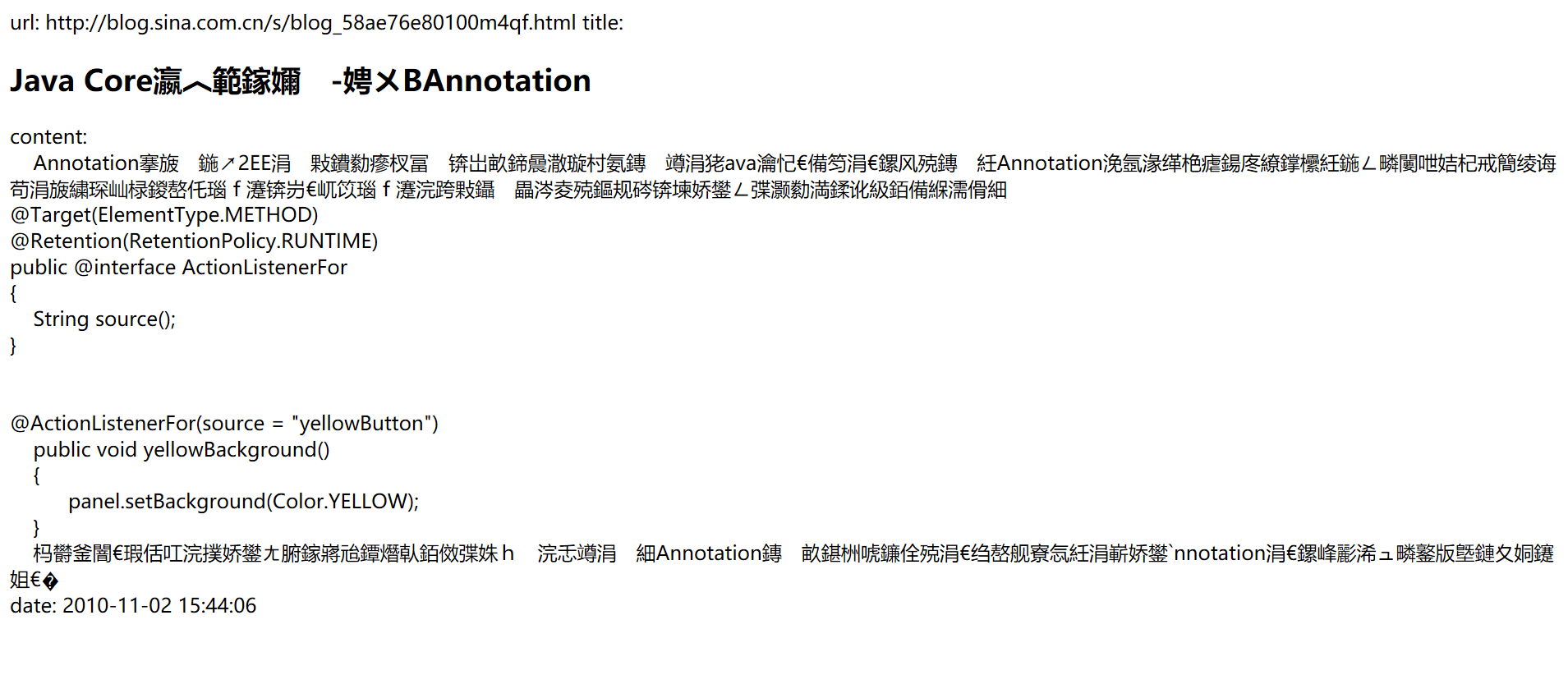

Screenshot of the run

The crawled web pages