0 简介

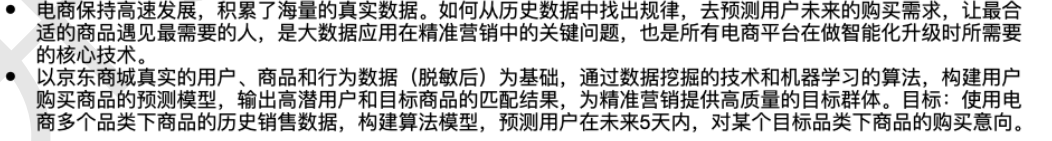

0.1 主题

0.2 目标

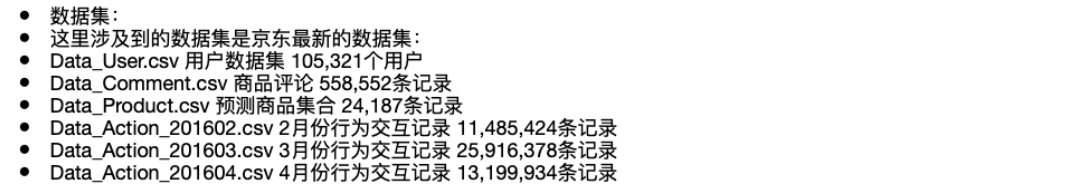

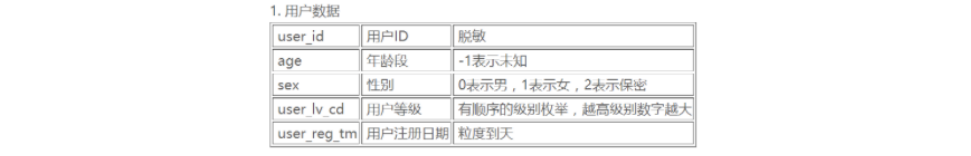

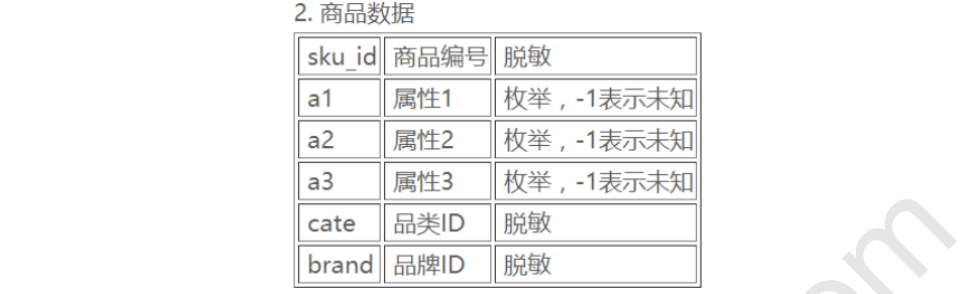

1. 数据集验证

1.1 检查Data_User中的用户和Data_Action中的用户是否一致

%matplotlib inline import numpy as np import pandas as pd import warnings warnings.filterwarnings('ignore') #忽视

# test sample df1 = pd.DataFrame({'Sku':['a','a','e','c'],'Action':[1,1,2,3]}) df2 = pd.DataFrame({'Sku':['a','b','f']}) df3 = pd.DataFrame({'Sku':['a','b','d']}) df4 = pd.DataFrame({'Sku':['a','b','c','d']}) print (pd.merge(df2,df1)) #print (pd.merge(df1,df2)) #print (pd.merge(df3,df1)) #print (pd.merge(df4,df1)) #print (pd.merge(df1,df3))

Sku Action 0 a 1 1 a 1

def user_action_check(): df_user = pd.read_csv('data/Data_User.csv',encoding='gbk') # 读入数据 df_sku = df_user.loc[:,'user_id'].to_frame() # series将数组转换为DataFrame格式 df_month2 = pd.read_csv('data/Data_Action_201602.csv',encoding='gbk') print ('Is action of Feb. from User file? ', len(df_month2) == len(pd.merge(df_sku,df_month2))) df_month3 = pd.read_csv('data/Data_Action_201603.csv',encoding='gbk') print ('Is action of Mar. from User file? ', len(df_month3) == len(pd.merge(df_sku,df_month3))) df_month4 = pd.read_csv('data/Data_Action_201604.csv',encoding='gbk') print ('Is action of Apr. from User file? ', len(df_month4) == len(pd.merge(df_sku,df_month4))) user_action_check() # 2、3、4月份的数据是否来自User文件

Is action of Feb. from User file? True Is action of Mar. from User file? True Is action of Apr. from User file? True

1.2 检查是否有重复记录

- 查看各个数据文件中完全重复的记录,可能解释是重复数据是有意义的,比如用户同时购买多件商品,同时添加多个数量的商品到购物车等

def deduplicate(filepath, filename, newpath): df_file = pd.read_csv(filepath,encoding='gbk') # 读入数据 before = df_file.shape[0] # 样本的行号/长度 df_file.drop_duplicates(inplace=True) # 去重复值 after = df_file.shape[0] # 再查看有多少样本数/长度 n_dup = before-after # 前后样本数的差值 print ('No. of duplicate records for ' + filename + ' is: ' + str(n_dup)) if n_dup != 0: df_file.to_csv(newpath, index=None) else: print ('no duplicate records in ' + filename)

# deduplicate('data/Data_Action_201602.csv', 'Feb. action', 'data/Data_Action_201602_dedup.csv') deduplicate('data/Data_Action_201603.csv', 'Mar. action', 'data/Data_Action_201603_dedup.csv') deduplicate('data/Data_Action_201604.csv', 'Feb. action', 'data/Data_Action_201604_dedup.csv') deduplicate('data/Data_Comment.csv', 'Comment', 'data/Data_Comment_dedup.csv') deduplicate('data/Data_Product.csv', 'Product', 'data/Data_Product_dedup.csv') deduplicate('data/Data_User.csv', 'User', 'data/Data_User_dedup.csv') # 第一行重复数据有7085038,说明同一个商品买了多个 # 第二行重复数据有3672710 # 第三行重复数据为0

No. of duplicate records for Mar. action is: 7085038 No. of duplicate records for Feb. action is: 3672710 No. of duplicate records for Comment is: 0 no duplicate records in Comment No. of duplicate records for Product is: 0 no duplicate records in Product No. of duplicate records for User is: 0 no duplicate records in User

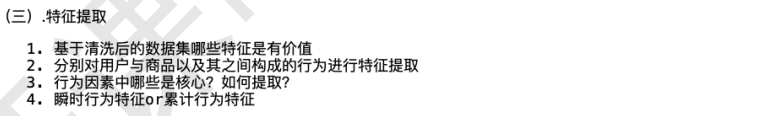

df_month2 = pd.read_csv('data/Data_Action_201602.csv',encoding='gbk') IsDuplicated = df_month2.duplicated() # 检查重复值 df_d=df_month2[IsDuplicated] df_d.groupby('type').count() #发现重复数据大多数都是由于浏览(1),或者点击(6)产生

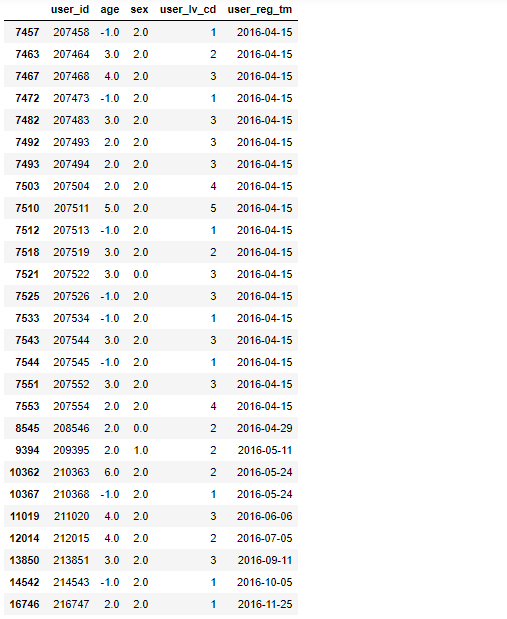

1.3 检查是否存在注册时间在2016年-4月-15号之后的用户

import pandas as pd df_user = pd.read_csv('data/Data_User.csv',encoding='gbk') df_user['user_reg_tm']=pd.to_datetime(df_user['user_reg_tm']) # 找到用户注册时间这一列 df_user.loc[df_user.user_reg_tm >= '2016-4-15'] #由于注册时间是系统错误造成,如果行为数据中没有在4月15号之后的数据的话,那么说明这些用户还是正常用户,并不需要删除。

df_month = pd.read_csv('data/Data_Action_201604.csv') df_month['time'] = pd.to_datetime(df_month['time']) df_month.loc[df_month.time >= '2016-4-16'] # 结论:说明用户没有异常操作数据,所以这一批用户不删除

1.4 行为数据中的user_id为浮点型,进行INT类型转换

- 因为2、3、4月份的数据集中用USERID,因此要转换为INT类型

import pandas as pd df_month = pd.read_csv('data/Data_Action_201602.csv',encoding='gbk') df_month['user_id'] = df_month['user_id'].apply(lambda x:int(x)) print (df_month['user_id'].dtype) df_month.to_csv('data/Data_Action_201602.csv',index=None) df_month = pd.read_csv('data/Data_Action_201603.csv',encoding='gbk') df_month['user_id'] = df_month['user_id'].apply(lambda x:int(x)) print (df_month['user_id'].dtype) df_month.to_csv('data/Data_Action_201603.csv',index=None) df_month = pd.read_csv('data/Data_Action_201604.csv',encoding='gbk') df_month['user_id'] = df_month['user_id'].apply(lambda x:int(x)) print (df_month['user_id'].dtype) df_month.to_csv('data/Data_Action_201604.csv',index=None)

int64 int64 int64

1.5 年龄区间的处理

- 把年龄映射成值

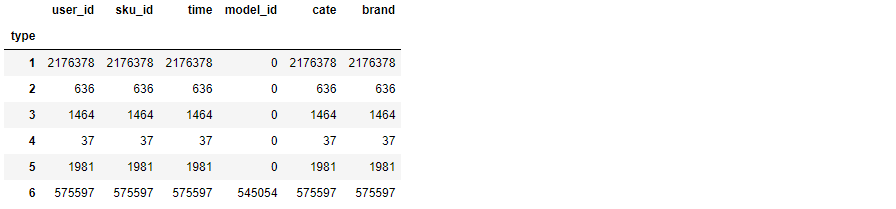

import pandas as pd df_user = pd.read_csv('data/Data_User.csv',encoding='gbk') def tranAge(x): if x == u'15岁以下': x='1' elif x==u'16-25岁': x='2' elif x==u'26-35岁': x='3' elif x==u'36-45岁': x='4' elif x==u'46-55岁': x='5' elif x==u'56岁以上': x='6' return x df_user['age']=df_user['age'].apply(tranAge) print (df_user.groupby(df_user['age']).count()) # 有14412个没有透露性别,在年龄值为3时候最多,属于”26—35岁“ df_user.to_csv('data/Data_User.csv',index=None)

user_id sex user_lv_cd user_reg_tm age -1.0 14412 14412 14412 14412 1.0 7 7 7 7 2.0 8797 8797 8797 8797 3.0 46570 46570 46570 46570 4.0 30336 30336 30336 30336 5.0 3325 3325 3325 3325 6.0 1871 1871 1871 1871

user_table

- user_table特征包括:

- user_id(用户id),age(年龄),sex(性别),

- user_lv_cd(用户级别),browse_num(浏览数),

- addcart_num(加购数),delcart_num(删购数),

- buy_num(购买数),favor_num(收藏数),

- click_num(点击数),buy_addcart_ratio(购买转化率),

- buy_browse_ratio(购买浏览转化率),

- buy_click_ratio(购买点击转化率),

- buy_favor_ratio(购买收藏转化率)

item_table特征包括:

- sku_id(商品id),attr1,attr2,

- attr3,cate,brand,browse_num,

- addcart_num,delcart_num,

- buy_num,favor_num,click_num,

- buy_addcart_ratio,buy_browse_ratio,

- buy_click_ratio,buy_favor_ratio,

- comment_num(评论数),

- has_bad_comment(是否有差评),

- bad_comment_rate(差评率)

1.6 构建User_table

#重定义文件名 ACTION_201602_FILE = "data/Data_Action_201602.csv" ACTION_201603_FILE = "data/Data_Action_201603.csv" ACTION_201604_FILE = "data/Data_Action_201604.csv" COMMENT_FILE = "data/Data_Comment.csv" PRODUCT_FILE = "data/Data_Product.csv" USER_FILE = "data/Data_User.csv" USER_TABLE_FILE = "data/User_table.csv" ITEM_TABLE_FILE = "data/Item_table.csv"

# 导入相关包 import pandas as pd import numpy as np from collections import Counter

# 功能函数: 对每一个user分组的数据进行统计 def add_type_count(group): behavior_type = group.type.astype(int) # 统计用户行为类别 type_cnt = Counter(behavior_type) # 1: 浏览 2: 加购 3: 删除 # 4: 购买 5: 收藏 6: 点击 group['browse_num'] = type_cnt[1] group['addcart_num'] = type_cnt[2] group['delcart_num'] = type_cnt[3] group['buy_num'] = type_cnt[4] group['favor_num'] = type_cnt[5] group['click_num'] = type_cnt[6] return group[['user_id', 'browse_num', 'addcart_num', 'delcart_num', 'buy_num', 'favor_num', 'click_num']]

#对action数据进行统计 #因为由于用户行为数据量较大,一次性读入可能造成内存错误(Memory Error) #因而使用pandas的分块(chunk)读取.根据自己调节chunk_size大小 def get_from_action_data(fname, chunk_size=50000): reader = pd.read_csv(fname, header=0, iterator=True,encoding='gbk') chunks = [] loop = True while loop: try: # 只读取user_id和type两个字段 chunk = reader.get_chunk(chunk_size)[["user_id", "type"]] chunks.append(chunk) except StopIteration: # 读完了就停止 loop = False print("Iteration is stopped") # 将块拼接为pandas dataframe格式 df_ac = pd.concat(chunks, ignore_index=True) # 按user_id分组,对每一组进行统计,as_index 表示无索引形式返回数据 df_ac = df_ac.groupby(['user_id'], as_index=False).apply(add_type_count) # 将重复的行丢弃 df_ac = df_ac.drop_duplicates('user_id') return df_ac

# 将各个action数据的统计量进行聚合 def merge_action_data(): df_ac = [] df_ac.append(get_from_action_data(fname=ACTION_201602_FILE)) df_ac.append(get_from_action_data(fname=ACTION_201603_FILE)) df_ac.append(get_from_action_data(fname=ACTION_201604_FILE)) df_ac = pd.concat(df_ac, ignore_index=True) # 用户在不同action表中统计量求和 df_ac = df_ac.groupby(['user_id'], as_index=False).sum() # 构造转化率字段 df_ac['buy_addcart_ratio'] = df_ac['buy_num'] / df_ac['addcart_num'] # 加了多少次购物车才买,购买率 df_ac['buy_browse_ratio'] = df_ac['buy_num'] / df_ac['browse_num'] # 浏览了多少次才买 df_ac['buy_click_ratio'] = df_ac['buy_num'] / df_ac['click_num'] # 点击了多少次才买 df_ac['buy_favor_ratio'] = df_ac['buy_num'] / df_ac['favor_num'] # 喜欢了多少个才买 # 将大于1的转化率字段置为1(100%),确保数据没有问题 df_ac.ix[df_ac['buy_addcart_ratio'] > 1., 'buy_addcart_ratio'] = 1. df_ac.ix[df_ac['buy_browse_ratio'] > 1., 'buy_browse_ratio'] = 1. df_ac.ix[df_ac['buy_click_ratio'] > 1., 'buy_click_ratio'] = 1. df_ac.ix[df_ac['buy_favor_ratio'] > 1., 'buy_favor_ratio'] = 1. return df_ac

# 从Data_User表中抽取需要的字段 def get_from_jdata_user(): df_usr = pd.read_csv(USER_FILE, header=0) df_usr = df_usr[["user_id", "age", "sex", "user_lv_cd"]] return df_usr

# 执行目的是得到大表 user_base = get_from_jdata_user() user_behavior = merge_action_data()

Iteration is stopped Iteration is stopped Iteration is stopped

# 连接成一张表,类似于SQL的左连接(left join) user_behavior = pd.merge(user_base, user_behavior, on=['user_id'], how='left') # 保存中间结果为user_table.csv user_behavior.to_csv(USER_TABLE_FILE, index=False)

user_table = pd.read_csv(USER_TABLE_FILE)

user_table.head()

1.7 构建Item_table

- 跟上面一样

#定义文件名 ACTION_201602_FILE = "data/Data_Action_201602.csv" ACTION_201603_FILE = "data/Data_Action_201603.csv" ACTION_201604_FILE = "data/Data_Action_201604.csv" COMMENT_FILE = "data/Data_Comment.csv" PRODUCT_FILE = "data/Data_Product.csv" USER_FILE = "data/Data_User.csv" USER_TABLE_FILE = "data/User_table.csv" ITEM_TABLE_FILE = "data/Item_table.csv"

# 导入相关包 import pandas as pd import numpy as np from collections import Counter

# 读取Product中商品 def get_from_jdata_product(): df_item = pd.read_csv(PRODUCT_FILE, header=0,encoding='gbk') return df_item

# 对每一个商品分组进行统计 def add_type_count(group): behavior_type = group.type.astype(int) type_cnt = Counter(behavior_type) group['browse_num'] = type_cnt[1] group['addcart_num'] = type_cnt[2] group['delcart_num'] = type_cnt[3] group['buy_num'] = type_cnt[4] group['favor_num'] = type_cnt[5] group['click_num'] = type_cnt[6] return group[['sku_id', 'browse_num', 'addcart_num', 'delcart_num', 'buy_num', 'favor_num', 'click_num']]

#对action中的数据进行统计 def get_from_action_data(fname, chunk_size=50000): reader = pd.read_csv(fname, header=0, iterator=True) chunks = [] loop = True while loop: try: chunk = reader.get_chunk(chunk_size)[["sku_id", "type"]] chunks.append(chunk) except StopIteration: loop = False print("Iteration is stopped") df_ac = pd.concat(chunks, ignore_index=True) df_ac = df_ac.groupby(['sku_id'], as_index=False).apply(add_type_count) # Select unique row df_ac = df_ac.drop_duplicates('sku_id') return df_ac

# 获取评论中的商品数据,如果存在某一个商品有两个日期的评论,我们取最晚的那一个 def get_from_jdata_comment(): df_cmt = pd.read_csv(COMMENT_FILE, header=0) df_cmt['dt'] = pd.to_datetime(df_cmt['dt']) # find latest comment index idx = df_cmt.groupby(['sku_id'])['dt'].transform(max) == df_cmt['dt'] df_cmt = df_cmt[idx] return df_cmt[['sku_id', 'comment_num', 'has_bad_comment', 'bad_comment_rate']]

def merge_action_data(): df_ac = [] df_ac.append(get_from_action_data(fname=ACTION_201602_FILE)) df_ac.append(get_from_action_data(fname=ACTION_201603_FILE)) df_ac.append(get_from_action_data(fname=ACTION_201604_FILE)) df_ac = pd.concat(df_ac, ignore_index=True) df_ac = df_ac.groupby(['sku_id'], as_index=False).sum() df_ac['buy_addcart_ratio'] = df_ac['buy_num'] / df_ac['addcart_num'] df_ac['buy_browse_ratio'] = df_ac['buy_num'] / df_ac['browse_num'] df_ac['buy_click_ratio'] = df_ac['buy_num'] / df_ac['click_num'] df_ac['buy_favor_ratio'] = df_ac['buy_num'] / df_ac['favor_num'] df_ac.ix[df_ac['buy_addcart_ratio'] > 1., 'buy_addcart_ratio'] = 1. df_ac.ix[df_ac['buy_browse_ratio'] > 1., 'buy_browse_ratio'] = 1. df_ac.ix[df_ac['buy_click_ratio'] > 1., 'buy_click_ratio'] = 1. df_ac.ix[df_ac['buy_favor_ratio'] > 1., 'buy_favor_ratio'] = 1. return df_ac

item_base = get_from_jdata_product() item_behavior = merge_action_data() item_comment = get_from_jdata_comment() # SQL: left join item_behavior = pd.merge( item_base, item_behavior, on=['sku_id'], how='left') item_behavior = pd.merge( item_behavior, item_comment, on=['sku_id'], how='left') item_behavior.to_csv(ITEM_TABLE_FILE, index=False)

Iteration is stopped Iteration is stopped Iteration is stopped

item_table = pd.read_csv(ITEM_TABLE_FILE)

item_table.head()

1.8 用户清洗

import pandas as pd df_user = pd.read_csv('data/User_table.csv',header=0) pd.options.display.float_format = '{:,.3f}'.format #输出格式设置,保留三位小数 df_user.describe() #第一行中根据User_id统计发现有105321个用户,发现有几个用户没有age,sex字段, #而且根据浏览、加购、删购、购买等记录却只有105180条记录,说明存在用户无任何交互记录,因此可以删除上述用户。

#删除少数的3行的年龄 df_user[df_user['age'].isnull()]

#删除无交互记录的用户 df_naction = df_user[(df_user['browse_num'].isnull()) & (df_user['addcart_num'].isnull()) & (df_user['delcart_num'].isnull()) & (df_user['buy_num'].isnull()) & (df_user['favor_num'].isnull()) & (df_user['click_num'].isnull())] df_user.drop(df_naction.index,axis=0,inplace=True) print (len(df_user))

105180

#统计无购买记录的用户 df_bzero = df_user[df_user['buy_num']==0] #输出购买数为0的总记录数 print (len(df_bzero))

75695

#删除无购买记录的用户 df_user = df_user[df_user['buy_num']!=0]

#浏览购买转换比和点击购买转换比小于0.0005的用户为惰性用户 # 删除爬虫及惰性用户 bindex = df_user[df_user['buy_browse_ratio']<0.0005].index print (len(bindex)) df_user.drop(bindex,axis=0,inplace=True)

90

# 点击购买转换比和点击购买转换比小于0.0005的用户为惰性用户 # 删除爬虫及惰性用户 cindex = df_user[df_user['buy_click_ratio']<0.0005].index print (len(cindex)) df_user.drop(cindex,axis=0,inplace=True)

323

df_user.describe()