Components to deploy

- kube-apiserver

- kube-controller-manager

- kube-scheduler

Deployment steps:

- Write the configuration file

- Manage the component with systemd

- Start the service

Installing the kube-apiserver component

Preparation:

Download the package: we install version 1.18, the latest stable release at the time of writing.

```shell
wget https://dl.k8s.io/v1.18.0/kubernetes-server-linux-amd64.tar.gz
```

Deployment scripts:

vim apiserver.sh

```shell
#!/bin/bash
# $1: IP address of this master node; $2: comma-separated etcd endpoints
MASTER_ADDRESS=$1
ETCD_SERVERS=$2

# All Kubernetes paths point at /opt/k8s/kubernetes, where the certificates
# and token.csv are copied later
cat <<EOF >/opt/k8s/kubernetes/cfg/kube-apiserver
KUBE_APISERVER_OPTS="--logtostderr=true \
--v=4 \
--etcd-servers=${ETCD_SERVERS} \
--bind-address=${MASTER_ADDRESS} \
--secure-port=6443 \
--advertise-address=${MASTER_ADDRESS} \
--allow-privileged=true \
--service-cluster-ip-range=10.0.0.0/24 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--kubelet-https=true \
--enable-bootstrap-token-auth \
--token-auth-file=/opt/k8s/kubernetes/cfg/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/opt/k8s/kubernetes/ssl/server.pem \
--tls-private-key-file=/opt/k8s/kubernetes/ssl/server-key.pem \
--client-ca-file=/opt/k8s/kubernetes/ssl/ca.pem \
--service-account-key-file=/opt/k8s/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/opt/etcd/ssl/ca.pem \
--etcd-certfile=/opt/etcd/ssl/server.pem \
--etcd-keyfile=/opt/etcd/ssl/server-key.pem"
EOF

# \$ keeps $KUBE_APISERVER_OPTS literal in the unit file so systemd expands it
cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/k8s/kubernetes/cfg/kube-apiserver
ExecStart=/opt/k8s/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-apiserver
systemctl restart kube-apiserver
```

vim controller-manager.sh

```shell
#!/bin/bash
# $1: address of kube-apiserver's insecure port 8080 (127.0.0.1 on the master itself)
MASTER_ADDRESS=$1

cat <<EOF >/opt/k8s/kubernetes/cfg/kube-controller-manager
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \
--v=4 \
--master=${MASTER_ADDRESS}:8080 \
--leader-elect=true \
--address=127.0.0.1 \
--service-cluster-ip-range=10.0.0.0/24 \
--cluster-name=kubernetes \
--cluster-signing-cert-file=/opt/k8s/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/opt/k8s/kubernetes/ssl/ca-key.pem \
--root-ca-file=/opt/k8s/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/opt/k8s/kubernetes/ssl/ca-key.pem \
--experimental-cluster-signing-duration=87600h0m0s"
EOF

# \$ keeps $KUBE_CONTROLLER_MANAGER_OPTS literal in the unit file so systemd expands it
cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/k8s/kubernetes/cfg/kube-controller-manager
ExecStart=/opt/k8s/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-controller-manager
systemctl restart kube-controller-manager
```

vim scheduler.sh

```shell
#!/bin/bash
# $1: address of kube-apiserver's insecure port 8080 (127.0.0.1 on the master itself)
MASTER_ADDRESS=$1

cat <<EOF >/opt/k8s/kubernetes/cfg/kube-scheduler
KUBE_SCHEDULER_OPTS="--logtostderr=true \
--v=4 \
--master=${MASTER_ADDRESS}:8080 \
--leader-elect"
EOF

# \$ keeps $KUBE_SCHEDULER_OPTS literal in the unit file so systemd expands it
cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/k8s/kubernetes/cfg/kube-scheduler
ExecStart=/opt/k8s/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-scheduler
systemctl restart kube-scheduler
```

Deploying the master node:

Create the installation directories

```shell
mkdir -p /opt/k8s/kubernetes/{bin,cfg,ssl,logs}
```

Extract the k8s tarball and copy the executables into the bin directory

```shell
[root@k8s-master01 k8s]# tar -zxvf kubernetes-server-linux-amd64.tar.gz
[root@k8s-master01 soft]# cd kubernetes/server/bin/
[root@k8s-master01 bin]# cp -r kube-apiserver kube-controller-manager kube-scheduler /opt/k8s/kubernetes/bin/
```

Run the apiserver.sh script

As the script shows, $1 is the IP address of the master node and $2 is the list of etcd cluster endpoints.

```shell
[root@k8s-master01 k8s-cert]# sh apiserver.sh 172.16.204.133 https://172.16.204.133:2379,https://172.16.204.134:2379,https://172.16.204.135:2379
```
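The second argument is just a comma-separated list of etcd client URLs. If you would rather not type it by hand, a small sketch like the following can build it. `build_etcd_servers` is a hypothetical helper, and it assumes every etcd member serves clients over HTTPS on port 2379, as in the command above.

```shell
#!/bin/bash
# Hypothetical helper: join etcd member IPs into the comma-separated
# https://IP:2379 list that apiserver.sh expects as its second argument.
# Assumption: every member serves clients over TLS on port 2379.
build_etcd_servers() {
  local port=2379 out="" ip
  for ip in "$@"; do
    # ${out:+,} adds a comma only once out is non-empty
    out+="${out:+,}https://${ip}:${port}"
  done
  printf '%s\n' "$out"
}

# Usage:
# sh apiserver.sh 172.16.204.133 \
#   "$(build_etcd_servers 172.16.204.133 172.16.204.134 172.16.204.135)"
```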

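Looking at the kube-apiserver configuration file, we can see that the kube-apiserver certificates and the token.csv file are still missing, so they need to be created next.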

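1. Create the apiserver certificates with a script. Only the IP addresses in server-csr.json need to be changed to your own; leave everything else unchanged. The hosts list must contain every address clients use to reach the API server, so if clients will connect to this master directly at 172.16.204.133 (the address passed to apiserver.sh above), add that address as well.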

```shell
mkdir -p /opt/k8s/k8s-cert/
```

vim k8s-cert.sh

```shell
cat > ca-config.json <<EOF
{
  "signing": {
    "default": { "expiry": "87600h" },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": ["signing", "key encipherment", "server auth", "client auth"]
      }
    }
  }
}
EOF

cat > ca-csr.json <<EOF
{
  "CN": "kubernetes",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "L": "Beijing", "ST": "Beijing", "O": "k8s", "OU": "System" }
  ]
}
EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------
# 172.16.204.134, 172.16.204.136, 172.16.204.137 and 172.16.204.138 are the IP
# addresses of the cluster's master and LB (load balancer) nodes; you can add a
# few spare addresses as well. 10.0.0.1 is the first address of the
# --service-cluster-ip-range (10.0.0.0/24), used by the in-cluster kubernetes Service.
cat > server-csr.json <<EOF
{
  "CN": "kubernetes",
  "hosts": [
    "10.0.0.1",
    "127.0.0.1",
    "172.16.204.134",
    "172.16.204.136",
    "172.16.204.137",
    "172.16.204.138",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System" }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

#-----------------------
cat > admin-csr.json <<EOF
{
  "CN": "admin",
  "hosts": [],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "system:masters", "OU": "System" }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

#-----------------------
cat > kube-proxy-csr.json <<EOF
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System" }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
```

Run the certificate script and copy the certificates into the Kubernetes ssl directory

```shell
[root@k8s-master01 k8s-cert]# sh k8s-cert.sh
[root@k8s-master01 k8s-cert]# cp -r ca.pem server.pem server-key.pem ca-key.pem /opt/k8s/kubernetes/ssl/
```
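Because an address missing from server-csr.json only shows up later as a TLS error, it may be worth checking the file right after running the script. The sketch below uses a hypothetical helper, `check_csr_hosts`, that does a plain quoted-string match against the file rather than real JSON parsing. If it reports a missing address, add it to the hosts list and rerun the `cfssl gencert ... server-csr.json` step.

```shell
#!/bin/bash
# Hypothetical helper: check that each given address appears (as a quoted
# string) in server-csr.json. This is a plain text match, not a JSON parser.
# Prints every missing address and returns non-zero if any is missing.
check_csr_hosts() {
  local csr=$1 rc=0 addr
  shift
  for addr in "$@"; do
    if ! grep -qF "\"${addr}\"" "$csr"; then
      echo "not in hosts: ${addr}"
      rc=1
    fi
  done
  return "$rc"
}

# Usage, in /opt/k8s/k8s-cert after running k8s-cert.sh:
# check_csr_hosts server-csr.json 10.0.0.1 127.0.0.1 172.16.204.133
```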

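2. Generate the token file. You can make up the token string yourself as long as it has the same length (32 hexadecimal characters), or generate one with the command given in the official documentation.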

```shell
[root@k8s-master01 k8s-cert]# BOOTSTRAP_TOKEN=0fb61c46f8991b718eb38d27b605b008
[root@k8s-master01 k8s-cert]# cat > token.csv <<EOF
> ${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
> EOF
[root@k8s-master01 k8s-cert]# cat token.csv
0fb61c46f8991b718eb38d27b605b008,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
```
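Instead of making up a token, you can generate a random one. The sketch below uses the `head -c 16 /dev/urandom | od -An -t x | tr -d ' '` pipeline shown in the Kubernetes TLS bootstrapping documentation (here also stripping the trailing newline) to produce 32 hex characters, then writes token.csv in the same format as above. The function names `gen_bootstrap_token` and `write_token_csv` are hypothetical.

```shell
#!/bin/bash
# Print 32 random lowercase hex characters: 16 random bytes dumped by od as
# four 32-bit hex words, with the spaces and newline removed.
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Hypothetical helper: write <dir>/token.csv as token,user,uid,"group"
# and print the generated token so it can be reused later.
write_token_csv() {
  local dir=$1 token
  token=$(gen_bootstrap_token)
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token" > "${dir}/token.csv"
  printf '%s\n' "$token"
}

# Usage:
# BOOTSTRAP_TOKEN=$(write_token_csv /opt/k8s/k8s-cert)
```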

Copy the token file into the Kubernetes configuration directory

```shell
[root@k8s-master01 k8s-cert]# cp -r token.csv /opt/k8s/kubernetes/cfg/
```
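Before starting the service, a quick check that every file the configuration refers to actually exists can save a round of troubleshooting. This is a minimal sketch: `check_cfg_files` is a hypothetical helper, and it assumes file paths are passed through options whose names end in `file` (such as `--client-ca-file=` or `--etcd-cafile=`), as in the scripts above.

```shell
#!/bin/bash
# Hypothetical helper: extract every --<name>file=<path> option from a
# component config file (e.g. /opt/k8s/kubernetes/cfg/kube-apiserver) and
# report each path that does not exist. Returns non-zero if any is missing.
check_cfg_files() {
  local cfg=$1 missing=0 path
  while read -r path; do
    if [ ! -e "$path" ]; then
      echo "missing: $path"
      missing=1
    fi
  done < <(grep -oE -- '--[a-z-]+file=[^ "]+' "$cfg" | cut -d= -f2-)
  return "$missing"
}

# Usage:
# check_cfg_files /opt/k8s/kubernetes/cfg/kube-apiserver && systemctl restart kube-apiserver
```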

Start kube-apiserver

```shell
[root@k8s-master01 k8s-cert]# systemctl restart kube-apiserver
```

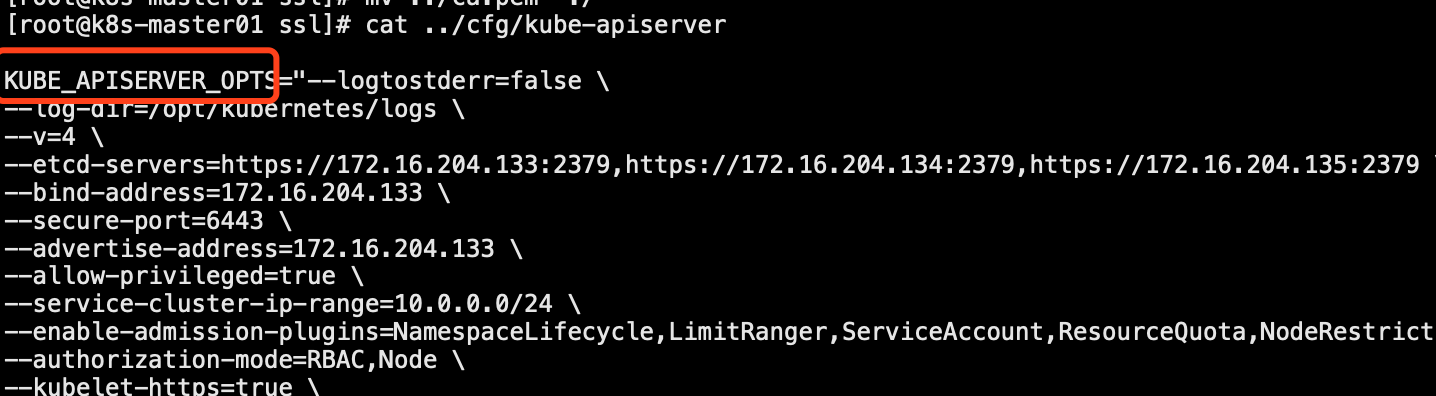

Key point: how to troubleshoot a service that fails to start

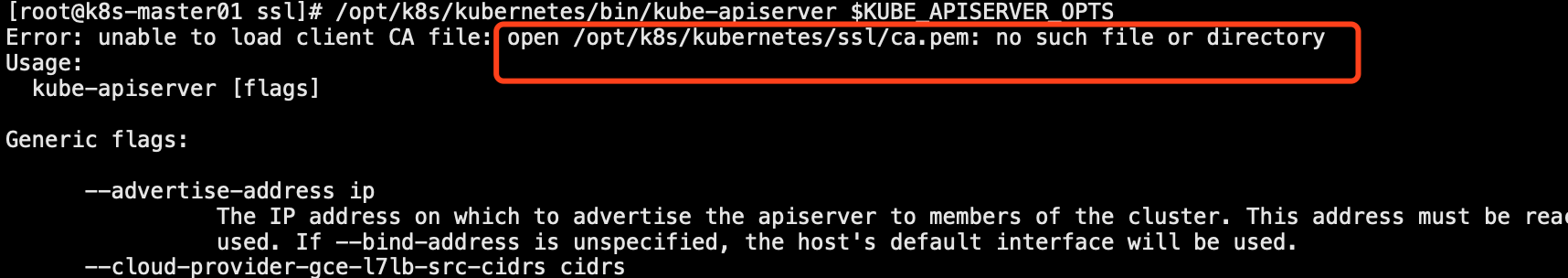

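```shell
# 1. Look for the startup failure in the logs, usually in /var/log/messages
# 2. Start the binary by hand with the same options to see the error in real time
# Example with kube-apiserver, after netstat showed that its port was not listening:
[root@k8s-master01 ssl]# source /opt/k8s/kubernetes/cfg/kube-apiserver
# The variable name KUBE_APISERVER_OPTS can be confirmed in the config file
[root@k8s-master01 ssl]# /opt/k8s/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS
```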

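The log is quite clear: the ca.pem certificate is missing, which explains why kube-apiserver failed to start. The same method works for troubleshooting the other k8s services.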
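The manual steps above can be wrapped in a small function so the same check works for any component. This is a sketch: `run_in_foreground` is a hypothetical name, and it relies on bash indirect expansion (`${!var}`) to read the options variable named in the config file.

```shell
#!/bin/bash
# Hypothetical helper: source a component's EnvironmentFile, look up the
# options variable by name, and run the binary in the foreground so any
# startup error is printed straight to the terminal.
run_in_foreground() {
  local cfg=$1 var=$2 bin=$3
  # shellcheck source=/dev/null
  . "$cfg"
  # Unquoted on purpose: the option string is split into separate
  # arguments, the same way systemd splits $VAR in ExecStart.
  # shellcheck disable=SC2086
  "$bin" ${!var}
}

# Usage (kube-controller-manager as an example):
# run_in_foreground /opt/k8s/kubernetes/cfg/kube-controller-manager \
#   KUBE_CONTROLLER_MANAGER_OPTS /opt/k8s/kubernetes/bin/kube-controller-manager
```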

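Installing controller-manager: all k8s components are installed in almost the same way: write the configuration file, write the systemd unit, and start the service.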
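Since the three scripts differ only in the component name, the options, and the variable name, the shared pattern can be factored out. The sketch below is one hypothetical way to do it (`write_component` is not part of any tool). It only writes the two files and leaves `systemctl` to the caller, and it takes the directories as parameters so it can be tried in a scratch directory first.

```shell
#!/bin/bash
# Hypothetical helper: write <cfg_dir>/<name> containing VAR="opts" and a
# systemd unit <unit_dir>/<name>.service that runs <bin_dir>/<name> $VAR.
write_component() {
  local name=$1 var=$2 opts=$3 cfg_dir=$4 unit_dir=$5 bin_dir=$6
  printf '%s="%s"\n' "$var" "$opts" > "${cfg_dir}/${name}"
  # The heredoc is unquoted so ${name} and the directories expand here,
  # while \$ leaves a literal $ before the variable name for systemd.
  cat > "${unit_dir}/${name}.service" <<EOF
[Unit]
Description=Kubernetes ${name}
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-${cfg_dir}/${name}
ExecStart=${bin_dir}/${name} \$${var}
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

# Usage:
# write_component kube-scheduler KUBE_SCHEDULER_OPTS \
#   "--logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect" \
#   /opt/k8s/kubernetes/cfg /usr/lib/systemd/system /opt/k8s/kubernetes/bin
# systemctl daemon-reload && systemctl enable --now kube-scheduler
```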

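vim controller-manager.sh (the content is the same controller-manager.sh script shown in the deployment scripts section above, including the systemctl commands at the end)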


Start controller-manager

```shell
[root@k8s-master01 master]# sh controller-manager.sh 127.0.0.1
```

Deploy the scheduler

vim scheduler.sh (the content is the same scheduler.sh script shown in the deployment scripts section above)


Start the scheduler

```shell
[root@k8s-master01 master]# sh scheduler.sh 127.0.0.1
```

Configure kubectl, the tool for managing the k8s cluster

Copy the cluster management tool kubectl to /usr/bin so it is easy to use

```shell
cp /opt/k8s/soft/kubernetes/server/bin/kubectl /usr/bin/
```

Check the cluster status with kubectl

All the services on the master node are reported as healthy:

```shell
[root@k8s-master01 bin]# kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
etcd-2               Healthy   {"health":"true"}
```
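When scripting the deployment, a pass/fail check can be handier than reading the table. A minimal sketch, assuming the `kubectl get cs --no-headers` output has the component name in the first column and STATUS in the second, as shown above; `unhealthy_components` is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: read `kubectl get cs --no-headers` output on stdin,
# print the name of every component whose STATUS column is not "Healthy",
# and exit non-zero if at least one such component was found.
unhealthy_components() {
  awk '$2 != "Healthy" { print $1; bad = 1 } END { exit bad }'
}

# Usage:
# kubectl get cs --no-headers | unhealthy_components && echo "master components healthy"
```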

If kubectl reports the following error:

```shell
[root@k8s-master01 bin]# kubectl get cs
error: no configuration has been provided, try setting KUBERNETES_MASTER environment variable
```

Solution:

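The error shows that an environment variable is missing. Add `export KUBERNETES_MASTER="127.0.0.1:8080"` to /etc/profile, then run `source /etc/profile` (or log in again) so the current shell picks it up.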
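Appending to /etc/profile by hand is easy to repeat by accident when rerunning setup steps. A small sketch that adds the line only if it is not already present; `append_once` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: append a line to a file only if that exact line
# is not already there (the file is created if it does not exist).
append_once() {
  local line=$1 file=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage:
# append_once 'export KUBERNETES_MASTER="127.0.0.1:8080"' /etc/profile
# source /etc/profile
```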

If kubectl reports the cluster components as healthy, the master node is fully deployed. Next, continue with the deployment and configuration of the worker nodes.