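Linux cgroup theory: https://www.cnblogs.com/menkeyi/p/10941843.html

Linux cgroups (short for Linux Control Groups) are a Linux kernel feature for limiting, controlling, and isolating the resources of a group of processes.

Container resource limits are implemented on top of Linux cgroups.

Docker version: 1.13.1 (check with docker info)

1. Linux cgroups in practice

[crmop@localhost ~]$ mount -t cgroup ## list the cgroup mounts; each subdirectory (cpuset for CPU cores, cpu, memory) is a resource type that cgroups can limit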

cgroup on /sys/fs/cgroup/systemd type cgroup (rw,nosuid,nodev,noexec,relatime,xattr,release_agent=/usr/lib/systemd/systemd-cgroups-agent,name=systemd)

cgroup on /sys/fs/cgroup/devices type cgroup (rw,nosuid,nodev,noexec,relatime,devices)

cgroup on /sys/fs/cgroup/cpuset type cgroup (rw,nosuid,nodev,noexec,relatime,cpuset)

cgroup on /sys/fs/cgroup/hugetlb type cgroup (rw,nosuid,nodev,noexec,relatime,hugetlb)

cgroup on /sys/fs/cgroup/net_cls,net_prio type cgroup (rw,nosuid,nodev,noexec,relatime,net_cls,net_prio)

cgroup on /sys/fs/cgroup/blkio type cgroup (rw,nosuid,nodev,noexec,relatime,blkio)

cgroup on /sys/fs/cgroup/memory type cgroup (rw,nosuid,nodev,noexec,relatime,memory)

cgroup on /sys/fs/cgroup/perf_event type cgroup (rw,nosuid,nodev,noexec,relatime,perf_event)

cgroup on /sys/fs/cgroup/freezer type cgroup (rw,nosuid,nodev,noexec,relatime,freezer)

cgroup on /sys/fs/cgroup/cpu,cpuacct type cgroup (rw,nosuid,nodev,noexec,relatime,cpu,cpuacct)

cgroup on /sys/fs/cgroup/pids type cgroup (rw,nosuid,nodev,noexec,relatime,pids)

cgroup on /sys/fs/cgroup/rdma type cgroup (rw,nosuid,nodev,noexec,relatime,rdma)

    [root@localhost ~]# cd /sys/fs/cgroup
    [root@localhost cgroup]# ls
    ## each subdirectory (cpuset for CPU cores, cpu, memory, blkio for block-device I/O) is a resource type that cgroups can limit
    blkio  cpuacct  cpuset  freezer  memory  net_cls,net_prio  perf_event  rdma
    cpu  cpu,cpuacct  devices  hugetlb  net_cls  net_prio  pids  systemd
    [root@localhost cgroup]# cd cpu
    ## the cpu subsystem exposes the settings that can be limited, e.g. cfs_period_us combined with cfs_quota_us:
    ## the group receives cfs_quota_us of CPU time in every window of cfs_period_us, i.e. CPU usage is capped at cfs_quota_us/cfs_period_us*100%
    [root@localhost cpu]# ls
    cgroup.clone_children  cpuacct.usage  cpuacct.usage_percpu_user  cpu.cfs_quota_us  cpu.stat
    cgroup.procs  cpuacct.usage_all  cpuacct.usage_sys  cpu.rt_period_us  notify_on_release
    cgroup.sane_behavior  cpuacct.usage_percpu  cpuacct.usage_user  cpu.rt_runtime_us  release_agent
    cpuacct.stat  cpuacct.usage_percpu_sys  cpu.cfs_period_us  cpu.shares  tasks
    [root@localhost cpu]# ll
    total 0
    -rw-r--r-- 1 root root 0 Aug 15 00:55 cgroup.clone_children
    -rw-r--r-- 1 root root 0 Jun 4 10:18 cgroup.procs
    -r--r--r-- 1 root root 0 Aug 15 00:55 cgroup.sane_behavior
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpuacct.stat
    -rw-r--r-- 1 root root 0 Aug 15 00:55 cpuacct.usage
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpuacct.usage_all
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpuacct.usage_percpu
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpuacct.usage_percpu_sys
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpuacct.usage_percpu_user
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpuacct.usage_sys
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpuacct.usage_user
    -rw-r--r-- 1 root root 0 Aug 15 00:55 cpu.cfs_period_us
    -rw-r--r-- 1 root root 0 Aug 15 00:55 cpu.cfs_quota_us
    -rw-r--r-- 1 root root 0 Aug 15 00:55 cpu.rt_period_us
    -rw-r--r-- 1 root root 0 Aug 15 00:55 cpu.rt_runtime_us
    -rw-r--r-- 1 root root 0 Aug 15 00:55 cpu.shares
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpu.stat
    -rw-r--r-- 1 root root 0 Aug 15 00:55 notify_on_release
    -rw-r--r-- 1 root root 0 Aug 15 00:55 release_agent
    -rw-r--r-- 1 root root 0 Aug 15 00:55 tasks
    [root@localhost cpu]# mkdir container
    ## this directory is called a "control group"; the kernel automatically populates it with the resource-limit files of the subsystem
    ## to delete it, first run yum -y install libcgroup, then cgdelete cpu:container
    [root@localhost cpu]# ll
    total 0
    -rw-r--r-- 1 root root 0 Aug 15 00:55 cgroup.clone_children
    -rw-r--r-- 1 root root 0 Jun 4 10:18 cgroup.procs
    -r--r--r-- 1 root root 0 Aug 15 00:55 cgroup.sane_behavior
    drwxr-xr-x 2 root root 0 Aug 15 00:56 container
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpuacct.stat
    -rw-r--r-- 1 root root 0 Aug 15 00:55 cpuacct.usage
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpuacct.usage_all
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpuacct.usage_percpu
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpuacct.usage_percpu_sys
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpuacct.usage_percpu_user
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpuacct.usage_sys
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpuacct.usage_user
    -rw-r--r-- 1 root root 0 Aug 15 00:55 cpu.cfs_period_us
    -rw-r--r-- 1 root root 0 Aug 15 00:55 cpu.cfs_quota_us
    -rw-r--r-- 1 root root 0 Aug 15 00:55 cpu.rt_period_us
    -rw-r--r-- 1 root root 0 Aug 15 00:55 cpu.rt_runtime_us
    -rw-r--r-- 1 root root 0 Aug 15 00:55 cpu.shares
    -r--r--r-- 1 root root 0 Aug 15 00:55 cpu.stat
    -rw-r--r-- 1 root root 0 Aug 15 00:55 notify_on_release
    -rw-r--r-- 1 root root 0 Aug 15 00:55 release_agent
    -rw-r--r-- 1 root root 0 Aug 15 00:55 tasks
    [root@localhost cpu]# cd container/
    [root@localhost container]# ls
    cgroup.clone_children  cpuacct.usage  cpuacct.usage_percpu_sys  cpuacct.usage_user  cpu.rt_period_us  cpu.stat
    cgroup.procs  cpuacct.usage_all  cpuacct.usage_percpu_user  cpu.cfs_period_us  cpu.rt_runtime_us  notify_on_release
    cpuacct.stat  cpuacct.usage_percpu  cpuacct.usage_sys  cpu.cfs_quota_us  cpu.shares  tasks
    [root@localhost container]# cat cpu.cfs_period_us
    100000
    [root@localhost container]# cat cpu.cfs_quota_us    ## -1 means no limit
    -1
    [root@localhost container]# cat tasks
    [root@localhost container]# top
    top - 00:58:19 up 2 days, 7:05, 2 users, load average: 0.01, 0.03, 0.00
    Tasks: 187 total, 1 running, 133 sleeping, 0 stopped, 0 zombie
    %Cpu(s): 0.0 us, 0.3 sy, 0.0 ni, 99.7 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st
    KiB Mem : 977804 total, 149268 free, 608916 used, 219620 buff/cache
    KiB Swap: 1048572 total, 1031848 free, 16724 used. 178692 avail Mem
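The quota/period rule noted in the session above is easy to check with a few lines of shell. The sketch below is illustrative only: the function names cpu_limit_percent and cgroup_cpu_limit are made up for this note, and it assumes the cgroup v1 file names shown in the listing.

```shell
#!/bin/sh
# Print the CPU ceiling implied by a CFS quota/period pair,
# as a percentage of one CPU. A negative quota (-1) means "no limit".
cpu_limit_percent() {
    quota=$1
    period=$2
    if [ "$quota" -lt 0 ]; then
        echo "unlimited"
    else
        # quota / period * 100, multiplying first so integer division
        # does not truncate the result to zero
        echo $(( quota * 100 / period ))
    fi
}

# Hypothetical helper: read both values from a control group directory.
cgroup_cpu_limit() {
    dir=$1
    cpu_limit_percent "$(cat "$dir/cpu.cfs_quota_us")" \
                      "$(cat "$dir/cpu.cfs_period_us")"
}
```

For example, cgroup_cpu_limit /sys/fs/cgroup/cpu/container prints unlimited while the quota is still -1. A result above 100 means the group may use more than one full CPU.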

Start a busy loop with no limit applied; it saturates the CPU.

    [root@localhost container]# while : ; do : ; done &
    [1] 35789
    [root@localhost container]# top
    top - 00:59:04 up 2 days, 7:05, 2 users, load average: 0.16, 0.06, 0.01
    Tasks: 187 total, 2 running, 132 sleeping, 0 stopped, 0 zombie
    %Cpu(s):100.0 us, 0.0 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st
    KiB Mem : 977804 total, 151268 free, 606924 used, 219612 buff/cache
    KiB Swap: 1048572 total, 1031848 free, 16724 used. 180692 avail Mem
    PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND
    35789 root 20 0 116376 1328 0 R 99.3 0.1 0:13.47 bash

Apply the resource limit.

    [root@localhost container]# pwd
    /sys/fs/cgroup/cpu/container
    [root@localhost container]# cat cpu.cfs_period_us
    100000
    [root@localhost container]# cat cpu.cfs_quota_us
    -1
    [root@localhost container]# echo 20000 > cpu.cfs_quota_us    ## i.e. cap CPU usage at 20%
    [root@localhost container]# cat cpu.cfs_quota_us
    20000
    [root@localhost container]# cat tasks
    [root@localhost container]# echo 35798 > tasks    ## write the PID of the process into the tasks file of the container control group
    [root@localhost container]# cat tasks
    35798
    [root@localhost container]# top
    top - 01:04:12 up 2 days, 7:11, 2 users, load average: 0.80, 0.46, 0.19
    Tasks: 189 total, 2 running, 132 sleeping, 0 stopped, 0 zombie
    %Cpu(s): 12.9 us, 0.4 sy, 0.0 ni, 86.7 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st
    KiB Mem : 977804 total, 149876 free, 608284 used, 219644 buff/cache
    KiB Swap: 1048572 total, 1031848 free, 16724 used. 179316 avail Mem
    PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND
    35798 root 20 0 116376 1328 0 R 19.9 0.1 2:16.42 bash
    1157 gdm 20 0 2975120 186892 26480 S 1.0 19.1 3:46.17 gnome-shell
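The manual steps in this section (create the group, set the quota, write the PID) can be wrapped in one function. This is a minimal sketch, not a standard tool: limit_pid_cpu and the CGROUP_CPU_ROOT variable are names invented here, and the period is assumed to be the 100000 microseconds shown by cat cpu.cfs_period_us.

```shell
#!/bin/sh
# Hypothetical helper: cap PID at PERCENT of one CPU using a cgroup v1 "cpu" group.
# CGROUP_CPU_ROOT defaults to the mount point used in this note; point it at a
# scratch directory to rehearse the file writes without touching real cgroups.
limit_pid_cpu() {
    group=$1
    pid=$2
    percent=$3
    root=${CGROUP_CPU_ROOT:-/sys/fs/cgroup/cpu}
    period=100000                            # CFS period in microseconds
    quota=$(( period * percent / 100 ))      # e.g. 20% -> 20000

    mkdir -p "$root/$group" || return 1
    echo "$period" > "$root/$group/cpu.cfs_period_us" || return 1
    echo "$quota"  > "$root/$group/cpu.cfs_quota_us"  || return 1
    # "tasks" moves a single thread, which is enough for a single-threaded
    # shell loop; cgroup.procs would move every thread of the process.
    echo "$pid"    > "$root/$group/tasks" || return 1
}
```

Run as root, limit_pid_cpu container 35798 20 would repeat the session above in one call.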

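2. Docker in practice

Start a container: run busybox with Docker.

-i keeps standard input open, so the container accepts what we type.

-t allocates a pseudo-terminal for us to type into.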

    [root@localhost container]# docker run -it --cpu-period=100000 --cpu-quota=20000 --name my-busybox1 docker.io/busybox /bin/sh
    / # top
    Mem: 841296K used, 136508K free, 18500K shrd, 0K buff, 151804K cached
    CPU: 0.0% usr 10.0% sys 0.0% nic 90.0% idle 0.0% io 0.0% irq 0.0% sirq
    Load average: 0.00 0.00 0.02 2/365 6
    PID PPID USER STAT VSZ %VSZ CPU %CPU COMMAND
    1 0 root S 1308 0.1 0 0.0 /bin/sh
    6 1 root R 1304 0.1 0 0.0 top
    Mem: 842244K used, 135560K free, 18500K shrd, 0K buff, 151892K cached
    CPU: 1.2% usr 0.6% sys 0.0% nic 97.5% idle 0.6% io 0.0% irq 0.0% sirq
    Load average: 0.00 0.00 0.02 3/365 6
    PID PPID USER STAT VSZ %VSZ CPU %CPU COMMAND
    1 0 root S 1308 0.1 0 0.0 /bin/sh
    6 1 root R 1304 0.1 0 0.0 top
    Mem: 842276K used, 135528K free, 18500K shrd, 0K buff, 151892K cached
    CPU: 0.4% usr 0.4% sys 0.0% nic 99.1% idle 0.0% io 0.0% irq 0.0% sirq
    Load average: 0.00 0.00 0.02 2/365 6
    PID PPID USER STAT VSZ %VSZ CPU %CPU COMMAND
    1 0 root S 1308 0.1 0 0.0 /bin/sh
    6 1 root R 1304 0.1 0 0.0 top
    / # ps -ef
    PID USER TIME COMMAND
    1 root 0:00 /bin/sh
    7 root 0:00 ps -ef
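Docker 1.13 and later also accept --cpus, which expresses the same limit as a fractional CPU count instead of a quota/period pair. The sketch below shows the conversion between the two forms; quota_for_cpus is a name made up for this note, and the default period of 100000 microseconds is taken from the run command above.

```shell
#!/bin/sh
# Convert a fractional CPU count (the form accepted by --cpus) into a
# CFS quota in microseconds for a given period (default 100000).
quota_for_cpus() {
    cpus=$1
    period=${2:-100000}
    # awk handles the floating-point multiply; %.0f rounds to an integer
    awk -v c="$cpus" -v p="$period" 'BEGIN { printf "%.0f\n", c * p }'
}
```

Under that assumption, --cpus=0.2 should correspond to the --cpu-period=100000 --cpu-quota=20000 pair used in the run command above, since quota_for_cpus 0.2 yields 20000.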

After exiting, restart the stopped container.

docker ps lists the running containers; docker ps -a also includes the stopped ones.

    [root@localhost container]# docker ps -a    ## list all containers, including stopped ones
    CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
    9b8c4b4cce0d docker.io/busybox "/bin/sh" About an hour ago Exited (130) 6 minutes ago my-busybox1
    [root@localhost container]# docker start 9b8c4b4cce0d    ## start 9b8c4b4cce0d
    [root@localhost container]#

Enter the container, start a busy loop, and check CPU usage from inside the container.

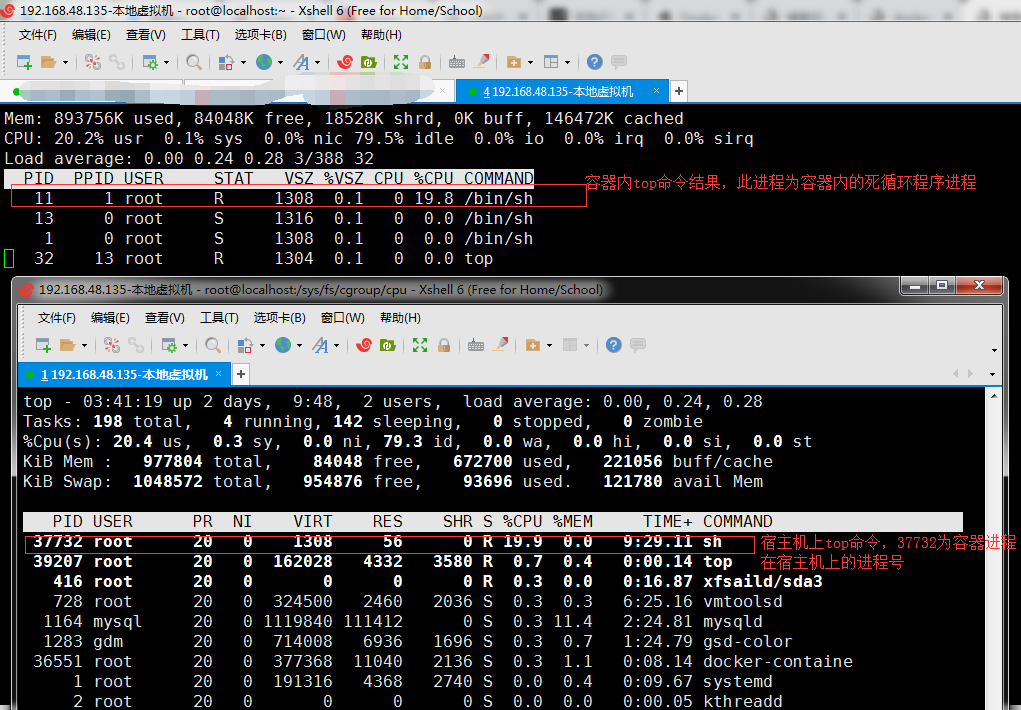

    [root@localhost cpu]# docker exec -it 9b8c4b4cce0d /bin/sh
    / # while : ; do : ; done &
    / # top
    Mem: 869356K used, 108448K free, 18536K shrd, 0K buff, 167576K cached
    CPU: 20.0% usr 0.0% sys 0.0% nic 80.0% idle 0.0% io 0.0% irq 0.0% sirq
    Load average: 0.03 0.02 0.00 3/374 12
    PID PPID USER STAT VSZ %VSZ CPU %CPU COMMAND
    11 6 root R 1308 0.1 0 20.0 /bin/sh
    6 0 root S 1312 0.1 0 0.0 /bin/sh
    1 0 root S 1308 0.1 0 0.0 /bin/sh
    12 6 root R 1304 0.1 0 0.0 top

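Checking from outside the container gives the same figures, which shows that top inside the container reports the host's resources. This confirms that a container only mounts its own file tree and still runs on the host operating system's kernel.

Host PID 37732 is the busy loop started inside the container, which shows that a container is essentially just a special process.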

    [root@localhost ~]# docker ps
    CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
    9b8c4b4cce0d docker.io/busybox "/bin/sh" About an hour ago Up 8 minutes my-busybox1
    [root@localhost ~]# top
    top - 02:56:52 up 2 days, 9:03, 2 users, load average: 0.04, 0.02, 0.00
    Tasks: 197 total, 5 running, 141 sleeping, 0 stopped, 0 zombie
    %Cpu(s): 18.8 us, 0.3 sy, 0.0 ni, 80.9 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st
    KiB Mem : 977804 total, 84432 free, 683024 used, 210348 buff/cache
    KiB Swap: 1048572 total, 999976 free, 48596 used. 133088 avail Mem
    PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND
    37732 root 20 0 1308 60 4 R 19.9 0.0 0:36.01 sh
    1164 mysql 20 0 1119840 156260 0 S 0.3 16.0 2:22.78 mysqld

On the host, the cgroup hierarchy contains the matching control group, /sys/fs/cgroup/cpu/system.slice/docker-9b8c4b4cce0d64476ec3370dc1c0a264054a86eb52440d63ccdaee1a18562b62.scope, while inside the container the limited process shows up as PID 11.

    [root@localhost cpu]# docker ps    ## list the containers running on the host
    CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
    9b8c4b4cce0d docker.io/busybox "/bin/sh" About an hour ago Up 40 minutes my-busybox1
    [root@localhost cpu]# docker container top 9b8c4b4cce0d
    ## 37609 is the shell started by docker run; 37732 is the busy loop, whose parent (PPID) is 37609; the other processes were started by docker exec into the same running container
    ## i.e. one running container can be entered over several connections (processes 37609 and 38159) without creating a new container
    UID PID PPID C STIME TTY TIME CMD
    root 37609 37593 0 02:47 pts/0 00:00:00 /bin/sh
    root 37732 37609 19 02:53 ? 00:06:59 /bin/sh
    root 38159 38142 0 03:09 pts/4 00:00:00 /bin/sh

    [root@localhost cpu]# top
    top - 03:17:02 up 2 days, 9:23, 2 users, load average: 0.41, 0.32, 0.21
    Tasks: 198 total, 2 running, 143 sleeping, 0 stopped, 0 zombie
    %Cpu(s): 16.7 us, 5.6 sy, 0.0 ni, 72.2 id, 5.6 wa, 0.0 hi, 0.0 si, 0.0 st
    KiB Mem : 977804 total, 83188 free, 686168 used, 208448 buff/cache
    KiB Swap: 1048572 total, 972028 free, 76544 used. 106920 avail Mem
    PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND
    37732 root 20 0 1308 56 0 R 12.5 0.0 4:37.74 sh
    38908 root 20 0 162028 4580 3864 R 6.2 0.5 0:00.02 top
    1 root 20 0 191316 3152 1524 S 0.0 0.3 0:09.62 systemd
    2 root 20 0 0 0 0 S 0.0 0.0 0:00.05 kthreadd
    3 root 0 -20 0 0 0 I 0.0 0.0 0:00.00 rcu_gp
    4 root 0 -20 0 0 0 I 0.0 0.0 0:00.00 rcu_par_gp
    6 root 0 -20 0 0 0 I 0.0 0.0 0:00.00 kworker/0:0H-kb
    8 root 0 -20 0 0 0 I 0.0 0.0 0:00.00 mm_percpu_wq
    9 root 20 0 0 0 0 S 0.0 0.0 0:08.29 ksoftirqd/0
    10 root 20 0 0 0 0 I 0.0 0.0 0:06.56 rcu_sched
    11 root rt 0 0 0 0 S 0.0 0.0 0:01.99 migration/0
    13 root 20 0 0 0 0 S 0.0 0.0 0:00.00 cpuhp/0
    14 root 20 0 0 0 0 S 0.0 0.0 0:00.00 kdevtmpfs
    15 root 0 -20 0 0 0 I 0.0 0.0 0:00.00 netns
    16 root 20 0 0 0 0 S 0.0 0.0 0:00.03 kauditd
    17 root 20 0 0 0 0 S 0.0 0.0 0:00.20 khungtaskd
    18 root 20 0 0 0 0 S 0.0 0.0 0:00.00 oom_reaper
    19 root 0 -20 0 0 0 I 0.0 0.0 0:00.00 writeback
    20 root 20 0 0 0 0 S 0.0 0.0 0:00.59 kcompactd0
    21 root 25 5 0 0 0 S 0.0 0.0 0:00.00 ksmd
    22 root 39 19 0 0 0 S 0.0 0.0 0:04.96 khugepaged
    112 root 0 -20 0 0 0 I 0.0 0.0 0:00.00 kintegrityd

[root@localhost cpu]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9b8c4b4cce0d docker.io/busybox "/bin/sh" About an hour ago Up 22 minutes my-busybox1

[root@localhost cpu]# cd /sys/fs/cgroup/

[root@localhost cgroup]# ls

blkio cpuacct cpuset freezer memory net_cls,net_prio perf_event rdma cpu cpu,cpuacct devices hugetlb net_cls

net_prio pids systemd

[root@localhost cgroup]# cd cpu

[root@localhost cpu]# find . -name tasks|xargs grep 37609 ## on the host, 37609 is the process started by docker run, i.e. the container's own main process; this locates its control group

./system.slice/docker-9b8c4b4cce0d64476ec3370dc1c0a264054a86eb52440d63ccdaee1a18562b62.scope/tasks:37609

[root@localhost cpu]# cd system.slice/docker-9b8c4b4cce0d64476ec3370dc1c0a264054a86eb52440d63ccdaee1a18562b62.scope
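Searching every tasks file works, but the kernel also records each process's control groups in /proc/<pid>/cgroup, one line per hierarchy in the form id:controllers:path. The following sketch reads that file directly; cgroup_path_of is a name chosen for this note, and the optional third argument (a saved copy of the file) is only there so the function can be tried without a live process.

```shell
#!/bin/sh
# Print the cgroup path of PID for one cgroup v1 controller (e.g. cpu).
# Usage: cgroup_path_of PID CONTROLLER [FILE]
cgroup_path_of() {
    pid=$1
    controller=$2
    file=${3:-/proc/$pid/cgroup}
    awk -F: -v want="$controller" '
        {
            # field 2 is a comma-separated controller list such as "cpu,cpuacct"
            n = split($2, names, ",")
            for (i = 1; i <= n; i++)
                if (names[i] == want) {
                    sub(/^[^:]*:[^:]*:/, "")   # drop "id:controllers:" and keep the path
                    print
                    exit
                }
        }' "$file"
}
```

On the host in this note, cgroup_path_of 37609 cpu should print the same system.slice/docker-....scope path that the find command located, relative to /sys/fs/cgroup/cpu.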

Enter the container.

    [root@localhost ~]# docker exec -it 9b8c4b4cce0d /bin/sh    ## enter the container
    / # cd /sys/fs/cgroup/
    /sys/fs/cgroup # ls
    blkio  cpuacct  freezer  net_cls  perf_event  systemd
    cpu  cpuset  hugetlb  net_cls,net_prio  pids
    cpu,cpuacct  devices  memory  net_prio  rdma
    /sys/fs/cgroup # cd cpu
    /sys/fs/cgroup/cpu,cpuacct # ls
    cgroup.clone_children  cpu.rt_runtime_us  cpuacct.usage_all  cpuacct.usage_user
    cgroup.procs  cpu.shares  cpuacct.usage_percpu  notify_on_release
    cpu.cfs_period_us  cpu.stat  cpuacct.usage_percpu_sys  tasks
    cpu.cfs_quota_us  cpuacct.stat  cpuacct.usage_percpu_user
    cpu.rt_period_us  cpuacct.usage  cpuacct.usage_sys
    /sys/fs/cgroup/cpu,cpuacct # ll
    /bin/sh: ll: not found
    /sys/fs/cgroup/cpu,cpuacct # ls -l
    total 0
    -rw-r--r-- 1 root root 0 Aug 15 10:10 cgroup.clone_children
    -rw-r--r-- 1 root root 0 Aug 15 10:09 cgroup.procs
    -rw-r--r-- 1 root root 0 Aug 15 09:47 cpu.cfs_period_us
    -rw-r--r-- 1 root root 0 Aug 15 09:47 cpu.cfs_quota_us
    -rw-r--r-- 1 root root 0 Aug 15 10:10 cpu.rt_period_us
    -rw-r--r-- 1 root root 0 Aug 15 10:10 cpu.rt_runtime_us
    -rw-r--r-- 1 root root 0 Aug 15 09:47 cpu.shares
    -r--r--r-- 1 root root 0 Aug 15 10:10 cpu.stat
    -r--r--r-- 1 root root 0 Aug 15 10:10 cpuacct.stat
    -rw-r--r-- 1 root root 0 Aug 15 10:10 cpuacct.usage
    -r--r--r-- 1 root root 0 Aug 15 10:10 cpuacct.usage_all
    -r--r--r-- 1 root root 0 Aug 15 10:10 cpuacct.usage_percpu
    -r--r--r-- 1 root root 0 Aug 15 10:10 cpuacct.usage_percpu_sys
    -r--r--r-- 1 root root 0 Aug 15 10:10 cpuacct.usage_percpu_user
    -r--r--r-- 1 root root 0 Aug 15 10:10 cpuacct.usage_sys
    -r--r--r-- 1 root root 0 Aug 15 10:10 cpuacct.usage_user
    -rw-r--r-- 1 root root 0 Aug 15 10:10 notify_on_release
    -rw-r--r-- 1 root root 0 Aug 15 10:10 tasks
    /sys/fs/cgroup/cpu,cpuacct # cat tasks
    1
    11
    13
    24
    /sys/fs/cgroup/cpu,cpuacct # top
    Mem: 897488K used, 80316K free, 18616K shrd, 0K buff, 181188K cached
    CPU: 27.2% usr 0.0% sys 0.0% nic 72.7% idle 0.0% io 0.0% irq 0.0% sirq
    Load average: 0.40 0.34 0.18 4/389 25
    PID PPID USER STAT VSZ %VSZ CPU %CPU COMMAND
    11 1 root R 1308 0.1 0 27.2 /bin/sh
    13 0 root S 1316 0.1 0 0.0 /bin/sh
    1 0 root S 1308 0.1 0 0.0 /bin/sh
    25 13 root R 1304 0.1 0 0.0 top
    /sys/fs/cgroup/cpu,cpuacct # cat cpu.cfs_quota_us    ## this is the limit passed on the docker run command line
    20000
    /sys/fs/cgroup/cpu,cpuacct # cat cpu.cfs_period_us
    100000
    /sys/fs/cgroup/cpu,cpuacct # cat tasks    ## PID 11, the busy loop running inside the container, is in the limited group
    1
    11
    13
    29
    /sys/fs/cgroup/cpu,cpuacct #

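Comparing top inside and outside the container shows that top inside Docker displays the host's resource figures. It reads the host's /proc information, not the container's usage relative to its limit (measured against its quota, the container's CPU would read 100%). The cause is Docker's incomplete isolation; lxcfs is one way to address it, see https://www.jianshu.com/p/c99611bffe6f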
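Since top reads host-wide figures, the control group's own accounting is a more direct measure of what the container consumed. In cgroup v1, cpuacct.usage holds the group's cumulative CPU time in nanoseconds, so two samples taken a known interval apart give a usage percentage. The sketch below assumes that file; the function names are made up for this note.

```shell
#!/bin/sh
# Percentage of one CPU used between two cpuacct.usage readings.
# (end - start) ns / (interval_s * 1e9 ns) * 100
#   == (end - start) / (interval_s * 10000000)
cpu_percent_between() {
    start_ns=$1
    end_ns=$2
    interval_s=$3
    echo $(( (end_ns - start_ns) / (interval_s * 10000000) ))
}

# Hypothetical helper: sample a control group directory over INTERVAL seconds.
sample_cgroup_cpu() {
    dir=$1
    interval_s=${2:-5}
    before=$(cat "$dir/cpuacct.usage")
    sleep "$interval_s"
    after=$(cat "$dir/cpuacct.usage")
    cpu_percent_between "$before" "$after" "$interval_s"
}
```

Pointed at the container's docker-....scope directory on the host, sample_cgroup_cpu should report a value close to the 20% quota while the busy loop is running.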

Inspect the container's processes on the host. A Docker container process = containerd + runc (namespaces and cgroups).

    [root@localhost ~]# docker ps
    CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
    9b8c4b4cce0d docker.io/busybox "/bin/sh" 20 hours ago Up 19 hours my-busybox1
    [root@localhost ~]# docker top my-busybox1
    UID PID PPID C STIME TTY TIME CMD
    root 37609 37593 0 19:45 pts/0 00:00:00 /bin/sh    ## container PID 1: the host PID of the process started by docker run; the single-process model expects only this process to exist, i.e. do not run more than one application in a container
    root 37732 37609 19 19:51 ? 00:27:58 /bin/sh    ## container PID 11: the process running the busy loop (the caption in the image above is wrong)
    root 38159 38142 0 20:07 pts/4 00:00:00 /bin/sh    ## container PID 13
    root 39206 38159 0 20:38 pts/4 00:00:01 top    ## container PID 32
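The host-to-container PID mapping annotated above does not have to be worked out by hand. On Linux 4.1 and later, /proc/<pid>/status carries an NSpid line listing the process's PID in each nested PID namespace, outermost first. A small sketch, with container_pid_of as a made-up name and an optional second argument for reading a saved copy of the status file:

```shell
#!/bin/sh
# Print the PID a host process has inside its innermost PID namespace.
# Usage: container_pid_of HOST_PID [STATUS_FILE]
container_pid_of() {
    host_pid=$1
    status=${2:-/proc/$host_pid/status}
    # The NSpid line reads "NSpid: <host pid> <pid in nested namespace> ...";
    # the last field is the PID seen inside the container.
    awk '$1 == "NSpid:" { print $NF }' "$status"
}
```

If the kernel provides the field, container_pid_of 37732 on the host would be expected to print 11, matching the annotation.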

[root@localhost cpu]# ps -ef|grep 37609 ## trace the process ancestry

root 37609 1657 0 22:46 pts/1 00:00:00 /bin/sh

[root@localhost cpu]# ps -ef|grep 1657

root 1657 1111 0 22:46 ? 00:00:00 /usr/bin/docker-containerd-shim-current 9b8c4b4cce0d64476ec3370dc1c0a264054a86eb52440d63ccdaee1a18562b62 /var/run/docker/libcontainerd/9b8c4b4cce0d64476ec3370dc1c0a264054a86eb52440d63ccdaee1a18562b62 /usr/libexec/docker/docker-runc-current

[root@localhost cpu]# ps -ef|grep 1111

root 1111 1055 0 22:44 ? 00:00:03 /usr/bin/docker-containerd-current -l unix:///var/run/docker/libcontainerd/docker-containerd.sock --metrics-interval=0 --start-timeout 2m --state-dir /var/run/docker/libcontainerd/containerd --shim docker-containerd-shim --runtime docker-runc --runtime-args --systemd-cgroup=true

[root@localhost cpu]# ps -ef|grep 1055

root 1055 1 0 22:44 ? 00:00:05 /usr/bin/dockerd-current --add-runtime docker-runc=/usr/libexec/docker/docker-runc-current --default-runtime=docker-runc --exec-opt native.cgroupdriver=systemd --userland-proxy-path=/usr/libexec/docker/docker-proxy-current --init-path=/usr/libexec/docker/docker-init-current --seccomp-profile=/etc/docker/seccomp.json --selinux-enabled --log-driver=journald --signature-verification=false --storage-driver overlay2

[root@localhost cpu]# ps -ef|grep 1

root 1 0 0 22:44 ? 00:00:02 /usr/lib/systemd/systemd --switched-root --system --deserialize 22
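The chain traced above with repeated ps -ef | grep calls (container shell, containerd-shim, containerd, dockerd, systemd) can be walked in one loop by following the PPid field in /proc/<pid>/status. This is only a sketch: ancestry and the PROC_ROOT override are names invented for this note.

```shell
#!/bin/sh
# Walk the parent chain of a PID up to PID 1, printing "pid name" per hop.
# PROC_ROOT can be pointed at a copy of /proc for experimentation.
ancestry() {
    pid=$1
    proc=${PROC_ROOT:-/proc}
    while [ "${pid:-0}" -ge 1 ] && [ -r "$proc/$pid/status" ]; do
        name=$(awk '$1 == "Name:" { print $2 }' "$proc/$pid/status")
        echo "$pid $name"
        # PPid is 0 for PID 1, which ends the loop
        pid=$(awk '$1 == "PPid:" { print $2 }' "$proc/$pid/status")
    done
}
```

Calling ancestry 37609 on the host should list the same processes as the manual trace, one per line, ending at systemd.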

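3. Conclusion:

Docker enforces its resource limits through the cgroup that the container's processes belong to, the same control group that is visible from inside the container. Because the container shares the host's kernel, the limit acts directly on the host; nothing is stacked or converted along the way.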