org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version. Underlying cause: java.sql.SQLException : Access denied for user 'root'@'centos35' (using password: YES)

上面是问题,下面是相应的解决方法,很明显是mysql访问出现问题:

grant all privileges on *.* to 'root' @'centos35' identified by '123456'

hive分布式启动进程运行时间过长后,发现启动jps服务出现以下问题:

Error occurred during initialization of VM java.lang.OutOfMemoryError: unable to create new native thread

内存不够了。

echo 1 > /proc/sys/vm/drop_caches

hive操作:

主要从csv中导入数据库,代码如下

创建表:

create external table if not exists batch_task(task_name STRING,inst_num INT,job_name STRING,task_type STRING,status STRING,start_time INT,end_time INT,plan_cpu INT,plan_mem INT) ROW format delimited fields terminated by ',' STORED AS TEXTFILE;

导入数据:这里不加local说明是在hdfs上存储的文件

load data inpath '/clusterdata/batch_task.csv' into table batch_task

查询数据:

select * from batch_task limit 10;

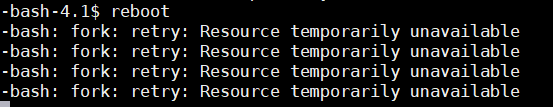

发现某个进程还是啥一直在占用,导致连重启都不行了:

exec sudo reboot

文件清理:

du -h --max-depth=1 /home/zc/ 看路径下文件的磁盘占用

df -hl

整个文件系统的磁盘占用

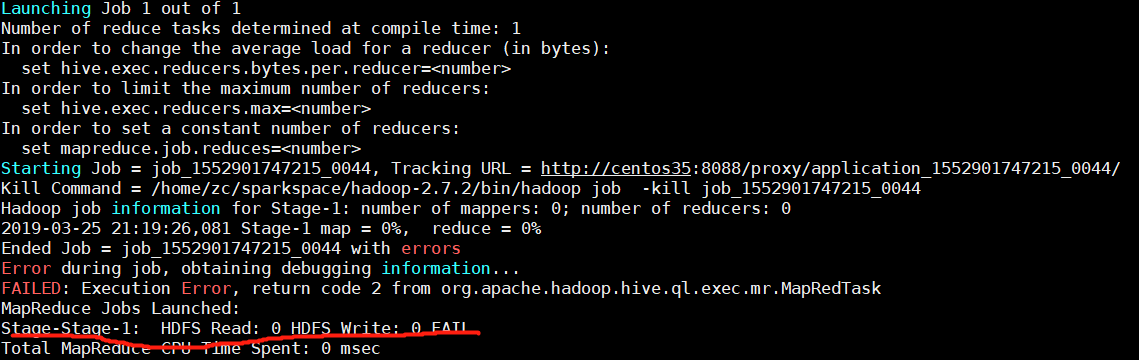

hive查询变大的时候,会提交job,但是会发现job运行总是失败,

最终看网上的解决方法也没能解决,但知道我看到hadoop集群中存在大量的unhealthy node....

数据上传到hdfs

hdfs dfs -put ak.csv /data

hive分布式启动:

主节点

hive --service metastore &

从节点

hive

hive groupby

出现问题

Expression Not In Group By Key这篇解释的很清楚

由于每一组的group by出来的值都是一个集合,因此在hive中使用collect_set(),

select task_name,job_name,collect_set(machine_id)[0],collect_set(cpu_avg)[0],collect_set(cpu_max)[0],collect_set(mem_avg)[0],collect_set(mem_max)[0] from batch_instance group by task_name,job_name

hive查询结果的保存方式,这篇讲的很好

注意:collect_set()显示的是去重后的集合,collect_list()显示的是单纯满足需求的列转行!!

存储到文件中

hive> insert overwrite directory "/home/zc/dzx"

> row format delimited fields terminated by ","

> select user, login_time from user_login;

存储在表中

hive> create table query_result

> as

> select user, login_time from user_login;

查询hive表中某一列不包含某个字符

select * from task where task_name not like 'task'

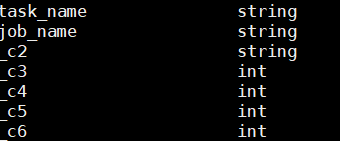

这里需要注意:group by后会出现自动命名列的情况,比如出现

这时候需要重新命名这些列名

alter table batch_ins change `_c2` machine_id String;

导出hive中数据到csv

hive -e "set hive.cli.print.header=true; select * from data_table" | sed 's/[ ]/,/g' > a.csv

hive处理空值:

select * from dag_small where cpu_avg is not null and cpu_max is not null and mem_avg is not null and mem_max is not null;

hive双表联合

create table user as select pv.pageid, u.age FROM page_view pv JOIN user u ON (pv.userid = u.userid);

左连接

select * from A left outer join B on xxx;