References:

- Deploying a kubernetes cluster (1): https://github.com/opsnull/follow-me-install-kubernetes-cluster

- Deploying a kubernetes cluster (2): https://blog.frognew.com/2017/04/install-ha-kubernetes-1.6-cluster.html

- Building High-Availability Clusters: https://kubernetes.io/docs/admin/high-availability/building/

- How high availability works: http://blog.csdn.net/horsefoot/article/details/52247277

- TLS bootstrapping: https://kubernetes.io/docs/admin/kubelet-tls-bootstrapping/

- The TLS bootstrap process: https://jimmysong.io/posts/kubernetes-tls-bootstrapping/

- kubernetes: https://github.com/kubernetes/kubernetes

- etcd: https://github.com/coreos/etcd

- flanneld: https://github.com/coreos/flannel

- cfssl: https://github.com/cloudflare/cfssl

- Manage TLS Certificates in a Cluster: https://kubernetes.io/docs/tasks/tls/managing-tls-in-a-cluster/

I. Environment

1. Components

| Component | Version | Remark |
|---|---|---|
| centos | 7.4 | |
| kubernetes | v1.9.2 | |
| etcd | v3.3.0 | |
| flanneld | v0.10.0 | vxlan network |
| docker | 1.12.6 | deployed in advance |
| cfssl | 1.2.0 | CA certificates and keys |

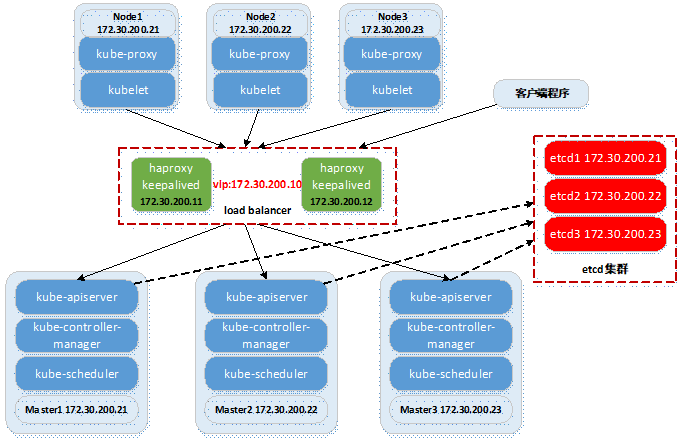

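2. Topology (logical)

- kube-master runs the service components kube-apiserver, kube-controller-manager, and kube-scheduler;

- kube-node runs the service components kubelet and kube-proxy;

- the data store is an etcd cluster;

- to save resources, kube-master, kube-node, and etcd are co-located on the same hosts;

- the front end uses haproxy + keepalived for high availability.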

3. Overall plan

| Host | Role | IP | Service | Remark |
|---|---|---|---|---|
| kubenode1 | kube-master kube-node etcd-node | 172.30.200.21 | kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy etcd | |
| kubenode2 | kube-master kube-node etcd-node | 172.30.200.22 | kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy etcd | |
| kubenode3 | kube-master kube-node etcd-node | 172.30.200.23 | kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy etcd | |
| ipvs01 | ha | 172.30.200.11 vip:172.30.200.10 | haproxy keepalived | |
| ipvs02 | ha | 172.30.200.12 vip:172.30.200.10 | haproxy keepalived | |

II. Deploy the front-end HA

ipvs01 is used as the example; adjust accordingly for ipvs02.

1. Configure haproxy

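    # For full installation and configuration, see and adapt: http://www.cnblogs.com/netonline/p/7593762.html
    # Only the frontend and backend parts of the configuration are shown here:

    # frontend; the name is arbitrary
    frontend kube-api-https_frontend
        # Listening port. Binding to a wildcard address (the "bind *:80" form) is recommended;
        # otherwise HA breaks, because the service is unreachable once the VIP moves to another machine.
        # Set the port to match kube-apiserver.
        bind 0.0.0.0:6443
        # Plain TCP port forwarding only
        mode tcp
        # Hand requests over to the default_backend group
        default_backend kube-api-https_backend

    # backend configuration
    backend kube-api-https_backend
        # Load-balancing method: roundrobin, i.e. weighted round-robin scheduling;
        # recommended when the servers have roughly equal capacity.
        balance roundrobin
        mode tcp
        # Persistent connections based on source address
        stick-table type ip size 200k expire 30m
        stick on src
        # Backend servers: maxconn 1024 is the maximum number of connections for the server,
        # cookie 1 sets the server id to 1, weight is the server weight
        # (default 1, maximum 256, 0 excludes the server from load balancing);
        # check inter 1500 is the health-check interval in milliseconds,
        # rise 2 marks the server up after 2 successful checks, fall 3 marks it down after 3 failed checks.
        server kubenode1 172.30.200.21:6443 maxconn 1024 cookie 1 weight 3 check inter 1500 rise 2 fall 3
        server kubenode2 172.30.200.22:6443 maxconn 1024 cookie 2 weight 3 check inter 1500 rise 2 fall 3
        server kubenode3 172.30.200.23:6443 maxconn 1024 cookie 3 weight 3 check inter 1500 rise 2 fall 3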
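Before starting haproxy, it can help to run its built-in syntax check against the edited file. The sketch below is not part of the original procedure: the helper name `check_haproxy_cfg` and the path `/etc/haproxy/haproxy.cfg` are assumptions, so substitute the path your installation uses. It relies only on haproxy's `-c` (check mode) and `-f` (configuration file) options.

```shell
#!/bin/sh
# Assumed config location; the original post does not state the path.
HAPROXY_CFG="${HAPROXY_CFG:-/etc/haproxy/haproxy.cfg}"

# Hypothetical helper: run haproxy in check mode against the given file.
# Returns 2 if haproxy is not installed, otherwise haproxy's own exit status.
check_haproxy_cfg() {
    if ! command -v haproxy >/dev/null 2>&1; then
        echo "haproxy not found in PATH" >&2
        return 2
    fi
    haproxy -c -f "$1"
}

if check_haproxy_cfg "$HAPROXY_CFG"; then
    echo "configuration OK: $HAPROXY_CFG"
else
    echo "configuration check did not pass: $HAPROXY_CFG" >&2
fi
```

Running the check on both ipvs01 and ipvs02 after each edit catches typos before a restart takes the front end down.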

2. Configure keepalived

keepalived.conf

    # For full installation and configuration, see and adapt: http://www.cnblogs.com/netonline/p/7598744.html
    # The configuration used here:
    [root@ipvs01 ]# cat /usr/local/keepalived/etc/keepalived/keepalived.conf
    ! Configuration File for keepalived

    global_defs {
        notification_email {
            root@localhost.local
        }
        notification_email_from root@localhost.local
        smtp_server 172.30.200.11
        smtp_connect_timeout 30
        router_id HAproxy_DEVEL
    }

    vrrp_script chk_haproxy {
        script "/usr/local/keepalived/etc/chk_haproxy.sh"
        interval 2
        weight 2
        rise 1
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        virtual_router_id 51
        priority 101
        advert_int 1
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 987654
        }
        virtual_ipaddress {
            172.30.200.10
        }
        track_script {
            chk_haproxy
        }
    }

Health-check script

    [root@ipvs01 ]# cat /usr/local/keepalived/etc/chk_haproxy.sh
    #!/bin/bash
    # 2018-02-05 v0.1
    # Exit 0 while at least one haproxy process is running, 1 otherwise
    if [ $(ps -C haproxy --no-header | wc -l) -ne 0 ]; then
        exit 0
    else
        # /etc/rc.d/init.d/keepalived restart
        exit 1
    fi
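Once keepalived is running on both machines, exactly one of them should carry the VIP at any time. The following is a minimal sketch for checking which one, assuming the `ip` tool from iproute2 is available. The helper name `holds_vip` is hypothetical; the VIP and interface are taken from the keepalived.conf above.

```shell
#!/bin/sh
VIP="172.30.200.10"   # virtual_ipaddress from keepalived.conf
IFACE="eth0"          # interface from vrrp_instance VI_1

# Hypothetical helper: reads `ip -o -4 addr show` output on stdin and
# succeeds if the given IPv4 address is configured.
holds_vip() {
    grep -qF "inet $1/"
}

if command -v ip >/dev/null 2>&1; then
    if ip -o -4 addr show dev "$IFACE" 2>/dev/null | holds_vip "$VIP"; then
        echo "this node currently holds $VIP"
    else
        echo "this node does not hold $VIP"
    fi
fi
```

Running it on ipvs01 and ipvs02 before and after stopping haproxy on the current holder is one way to observe how the pair reacts.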

3. Configure iptables

    # Open selected ports; only tcp 6443, which haproxy uses to front kube-apiserver, is needed here.
    # The other ports may stay closed.
    [root@ipvs01 ~]# vim /etc/sysconfig/iptables
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 80 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 443 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 514 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 1080 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 6443 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 8080 -j ACCEPT
    # VRRP advertisements use multicast
    -A INPUT -m pkttype --pkt-type multicast -j ACCEPT

    [root@ipvs01 ~]# service iptables restart
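A quick way to confirm the edit took is to look for the accept rule in the rules file. This sketch only inspects `/etc/sysconfig/iptables` as text, not the live kernel ruleset, and the helper name `port_allowed` is hypothetical.

```shell
#!/bin/sh
RULES_FILE="${RULES_FILE:-/etc/sysconfig/iptables}"

# Hypothetical helper: succeeds if FILE ($1) contains an INPUT rule
# that accepts the given destination PORT ($2).
port_allowed() {
    grep -Eq -e "^-A INPUT .*--dport $2 -j ACCEPT" "$1" 2>/dev/null
}

if port_allowed "$RULES_FILE" 6443; then
    echo "tcp 6443 is accepted in $RULES_FILE"
else
    echo "no accept rule for tcp 6443 found in $RULES_FILE"
fi
```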

III. Set cluster environment variables

kubenode1 is used as the example; make minor adjustments for kubenode2 and kubenode3.

1. Cluster environment variables

    # The environment variables below are used heavily when configuring the startup items of each module;
    # variables make them easier to reference.
    # Placing the file under /etc/profile.d/ loads the variables automatically for each login shell.
    [root@kubenode1 ~]# cd /etc/profile.d/
    [root@kubenode1 profile.d]# touch kubernetes_variable.sh
    [root@kubenode1 profile.d]# vim kubernetes_variable.sh
    # Service CIDR: not routable before deployment; after deployment, reachable inside the cluster via IP:Port.
    # It is recommended to define the Service and Pod CIDRs from ranges the hosts do not use.
    export SERVICE_CIDR="169.169.0.0/16"

    # Pod CIDR (Cluster CIDR): not routable before deployment; routable after deployment (guaranteed by flanneld)
    export CLUSTER_CIDR="10.254.0.0/16"

    # Service port range (NodePort Range)
    export NODE_PORT_RANGE="10000-60000"

    # etcd cluster endpoint list
    export ETCD_ENDPOINTS="https://172.30.200.21:2379,https://172.30.200.22:2379,https://172.30.200.23:2379"

    # Prefix of flanneld's network configuration in etcd
    export FLANNEL_ETCD_PREFIX="/kubernetes/network"

    # kubernetes service IP (normally the first IP in SERVICE_CIDR)
    export CLUSTER_KUBERNETES_SVC_IP="169.169.0.1"

    # Cluster DNS service IP (pre-allocated from SERVICE_CIDR)
    export CLUSTER_DNS_SVC_IP="169.169.0.11"

    # Cluster DNS domain; note the trailing "."
    export CLUSTER_DNS_DOMAIN="cluster.local."

    # Token used for TLS Bootstrapping; it can be generated with:
    # head -c 16 /dev/urandom | od -An -t x | tr -d ' '
    export BOOTSTRAP_TOKEN="962283d223c76bd7b6f806936de64a23"
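The comments above require the kubernetes service IP and the DNS service IP to come from SERVICE_CIDR. The sketch below checks that with plain shell arithmetic; the helper names `ip_to_int` and `ip_in_cidr` are hypothetical, while the values are copied from kubernetes_variable.sh. It assumes well-formed dotted-quad IPv4 input and does no validation.

```shell
#!/bin/sh
# Values copied from kubernetes_variable.sh above.
SERVICE_CIDR="169.169.0.0/16"
CLUSTER_KUBERNETES_SVC_IP="169.169.0.1"
CLUSTER_DNS_SVC_IP="169.169.0.11"

# Hypothetical helper: convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: succeed if IP ($1) lies inside CIDR ($2).
ip_in_cidr() {
    ip_int=$(ip_to_int "$1")
    net_int=$(ip_to_int "${2%/*}")   # network part before the slash
    prefix=${2#*/}                   # prefix length after the slash
    mask=$(( (0xFFFFFFFF << (32 - $prefix)) & 0xFFFFFFFF ))
    [ $(( ip_int & mask )) -eq $(( net_int & mask )) ]
}

for ip in "$CLUSTER_KUBERNETES_SVC_IP" "$CLUSTER_DNS_SVC_IP"; do
    if ip_in_cidr "$ip" "$SERVICE_CIDR"; then
        echo "$ip is inside $SERVICE_CIDR"
    else
        echo "$ip is OUTSIDE $SERVICE_CIDR"
    fi
done
```

The same helper can be pointed at CLUSTER_CIDR and the host addresses to confirm that the chosen ranges do not collide with the 172.30.200.x hosts.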

2. PATH environment variable for the kubernetes-related executables

    # The related services are all placed under their own /usr/local/xxx directories;
    # once the variable is set, it is loaded automatically at login.
    [root@kubenode1 ~]# cd /etc/profile.d/
    [root@kubenode1 profile.d]# touch kubernetes_path.sh
    [root@kubenode1 profile.d]# vim kubernetes_path.sh
    export PATH=$PATH:/usr/local/cfssl:/usr/local/etcd:/usr/local/kubernetes/bin:/usr/local/flannel

    # Reload /etc/profile, or reboot the server, for the variables to take effect
    [root@kubenode1 profile.d]# source /etc/profile
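After reloading the profile, a short loop can report which binaries the shell can resolve. This is only a sketch: the helper name `missing_commands` is hypothetical, and the binary list assumes each tool's standard executable name sits directly in the directories added to PATH, which will only hold once the later installation steps are done.

```shell
#!/bin/sh
# Hypothetical helper: print each given command that cannot be found on PATH.
missing_commands() {
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
    done
}

# Assumed binary names; adjust to whatever is actually installed.
missing=$(missing_commands cfssl cfssljson etcd etcdctl \
    kube-apiserver kube-controller-manager kube-scheduler \
    kubelet kube-proxy kubectl flanneld)

if [ -z "$missing" ]; then
    echo "all expected binaries resolve on PATH"
else
    echo "not found on PATH:"
    echo "$missing"
fi
```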