DPDK version: 19.02

Initialization:

```c
/* Launch threads, called at application init(). */
int
rte_eal_init(int argc, char **argv)
{
	...
	/* rte_eal_cpu_init() ->
	 *     eal_cpu_core_id()
	 *     eal_cpu_socket_id()
	 * Reads /sys/devices/system/[cpu|node] and sets
	 * lcore_config[].core_role/core_id/socket_id.
	 */
	if (rte_eal_cpu_init() < 0) {
		rte_eal_init_alert("Cannot detect lcores.");
		rte_errno = ENOTSUP;
		return -1;
	}

	/* eal_parse_args() ->
	 *     eal_parse_common_option() ->
	 *         eal_parse_coremask()
	 *         eal_parse_master_lcore()
	 *         eal_parse_lcores()
	 *     eal_adjust_config()
	 * Parses the -c, --master-lcore and --lcores options.
	 * eal_parse_lcores() determines which logical CPUs are usable.
	 * If no master lcore was given, eal_adjust_config() sets
	 * rte_config.master_lcore to the first enabled lcore.
	 */
	fctret = eal_parse_args(argc, argv);
	if (fctret < 0) {
		rte_eal_init_alert("Invalid 'command line' arguments.");
		rte_errno = EINVAL;
		rte_atomic32_clear(&run_once);
		return -1;
	}
	...
	/* Initialize memory (hugepages) */
	if (rte_eal_memory_init() < 0) {
		rte_eal_init_alert("Cannot init memory\n");
		rte_errno = ENOMEM;
		return -1;
	}
	...
	/* eal_thread_init_master() ->
	 *     eal_thread_set_affinity()
	 * Makes the calling thread the MASTER lcore;
	 * eal_thread_set_affinity() pins it to its logical CPU.
	 */
	eal_thread_init_master(rte_config.master_lcore);
	...
	/* rte_bus_scan() ->
	 *     rte_pci_scan() ->
	 *         pci_scan_one() ->
	 *             pci_parse_sysfs_resource()
	 *             rte_pci_add_device()
	 * Walks rte_bus_list and calls each bus's scan(); for PCI that is
	 * rte_pci_scan(), which walks /sys/bus/pci/devices, allocates a
	 * struct rte_pci_device for each DBSF (domain:bus:slot.function),
	 * parses that device's sysfs resource file line by line into
	 * dev->mem_resource[i], and inserts dev into rte_pci_bus.device_list.
	 */
	if (rte_bus_scan()) {
		rte_eal_init_alert("Cannot scan the buses for devices\n");
		rte_errno = ENODEV;
		return -1;
	}

	/* pthread_create() ->
	 *     eal_thread_loop() ->
	 *         eal_thread_set_affinity()
	 * Creates one thread per SLAVE lcore running eal_thread_loop();
	 * eal_thread_set_affinity() pins each SLAVE lcore to its logical CPU.
	 */
	RTE_LCORE_FOREACH_SLAVE(i) {
		/*
		 * create communication pipes between master thread
		 * and children (MASTER <-> SLAVE lcore messaging)
		 */
		if (pipe(lcore_config[i].pipe_master2slave) < 0)
			rte_panic("Cannot create pipe\n");
		if (pipe(lcore_config[i].pipe_slave2master) < 0)
			rte_panic("Cannot create pipe\n");

		lcore_config[i].state = WAIT;	/* SLAVE lcore starts in WAIT */

		/* create a thread for each lcore */
		ret = pthread_create(&lcore_config[i].thread_id, NULL,
				     eal_thread_loop, NULL);
		...
	}

	/*
	 * Launch a dummy function on all slave lcores, so that master lcore
	 * knows they are all ready when this function returns.
	 */
	rte_eal_mp_remote_launch(sync_func, NULL, SKIP_MASTER);
	rte_eal_mp_wait_lcore();
	...
	/* Probe all the buses and devices/drivers on them */
	/* rte_bus_probe() ->
	 *     rte_pci_probe() ->
	 *         pci_probe_all_drivers() ->
	 *             rte_pci_probe_one_driver() ->
	 *                 rte_pci_match()
	 *                 rte_pci_map_device() ->
	 *                     pci_uio_map_resource()   (UIO case)
	 *                 eth_ixgbe_pci_probe()
	 * Walks rte_bus_list and calls each bus's probe(); for PCI that is
	 * rte_pci_probe(). rte_pci_probe() walks rte_pci_bus.device_list and
	 * pci_probe_all_drivers() walks rte_pci_bus.driver_list to match each
	 * device with a driver. On a match the BARs are mapped and the
	 * driver's probe() is called; for ixgbe that is eth_ixgbe_pci_probe().
	 */
	if (rte_bus_probe()) {
		rte_eal_init_alert("Cannot probe devices\n");
		rte_errno = ENOTSUP;
		return -1;
	}
	...
}
```
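The MASTER/SLAVE handshake above (one pair of pipes per lcore, worker threads that block waiting for work, and a dummy function launched on every worker) can be modeled in plain POSIX C. This is a simplified, hypothetical sketch, not DPDK's eal_thread_loop(): the names worker_t, worker_loop, remote_launch, wait_worker, stop_worker and double_job are made up, CPU affinity and lcore roles are omitted, and unlike DPDK (which acknowledges the command before running it and reports completion through lcore_config[].state) this sketch sends the job's return value back over the slave-to-master pipe, so waiting is just a blocking read.

```c
#include <assert.h>
#include <pthread.h>
#include <unistd.h>

typedef int (*job_fn)(void *arg);

/* A job travels through the pipe by value; f == NULL means "exit". */
struct job {
	job_fn f;
	void *arg;
};

/* Hypothetical per-worker state, loosely modeled on lcore_config[i]. */
typedef struct {
	int m2s[2];	/* master -> slave pipe, like pipe_master2slave */
	int s2m[2];	/* slave -> master pipe, like pipe_slave2master */
	pthread_t tid;
} worker_t;

/* Worker thread body: a stand-in for eal_thread_loop(). */
static void *worker_loop(void *p)
{
	worker_t *w = p;
	struct job j;
	int ret;

	/* Block until the master sends a job, run it, report the result. */
	while (read(w->m2s[0], &j, sizeof(j)) == (ssize_t)sizeof(j) && j.f != NULL) {
		ret = j.f(j.arg);
		if (write(w->s2m[1], &ret, sizeof(ret)) != (ssize_t)sizeof(ret))
			break;
	}
	return NULL;
}

/* Create both pipes first, then the thread (same order as rte_eal_init). */
static int start_worker(worker_t *w)
{
	if (pipe(w->m2s) < 0 || pipe(w->s2m) < 0)
		return -1;
	return pthread_create(&w->tid, NULL, worker_loop, w) == 0 ? 0 : -1;
}

/* Master side, in the spirit of rte_eal_remote_launch(). */
static int remote_launch(worker_t *w, job_fn f, void *arg)
{
	struct job j = { f, arg };

	assert(f != NULL);
	return write(w->m2s[1], &j, sizeof(j)) == (ssize_t)sizeof(j) ? 0 : -1;
}

/* Master side, in the spirit of rte_eal_wait_lcore(): fetch the result. */
static int wait_worker(worker_t *w, int *result)
{
	return read(w->s2m[0], result, sizeof(*result)) ==
	       (ssize_t)sizeof(*result) ? 0 : -1;
}

/* Send the "exit" job, join the thread and close the pipes. */
static int stop_worker(worker_t *w)
{
	struct job j = { NULL, NULL };

	if (write(w->m2s[1], &j, sizeof(j)) != (ssize_t)sizeof(j))
		return -1;
	if (pthread_join(w->tid, NULL) != 0)
		return -1;
	close(w->m2s[0]);
	close(w->m2s[1]);
	close(w->s2m[0]);
	close(w->s2m[1]);
	return 0;
}

/* Example job; DPDK's sync_func() does nothing, this one returns a value. */
static int double_job(void *arg)
{
	return 2 * *(const int *)arg;
}
```

Passing the job through the pipe by value (rather than writing it into shared fields first) keeps the hand-off between the two threads simple; writes this small to a pipe are atomic.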

DPDK 16.11 has no bus abstraction layer: PCI devices are initialized directly via rte_eal_init -> rte_eal_pci_init.

In other words, DPDK 16.11 only handled PCI as a bus type. By DPDK 17.11 a bus abstraction had been introduced, and buses are initialized in rte_bus_scan():

```c
/* Scan all the buses for registered devices */
int
rte_bus_scan(void)
{
	int ret;
	struct rte_bus *bus = NULL;

	TAILQ_FOREACH(bus, &rte_bus_list, next) {
		ret = bus->scan();
		if (ret)
			RTE_LOG(ERR, EAL, "Scan for (%s) bus failed.\n",
				bus->name);
	}

	return 0;
}
```

This function calls the scan function of every bus registered on rte_bus_list. Buses are registered with rte_bus_register(), and the RTE_REGISTER_BUS macro is a wrapper around rte_bus_register().
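The register-then-scan pattern can be reproduced in a few lines of standalone C. This is a hypothetical toy (toy_bus, toy_bus_register, TOY_REGISTER_BUS and toy_bus_scan are made-up names), assuming GCC or Clang for `__attribute__((constructor))` and glibc's `<sys/queue.h>` for the TAILQ macros. It shows the two properties described above: registration happens in constructors before `main()`, and the scan loop logs a failing bus but keeps going and still returns 0.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <sys/queue.h>

/* Hypothetical, trimmed-down stand-in for struct rte_bus. */
struct toy_bus {
	TAILQ_ENTRY(toy_bus) next;
	const char *name;
	int (*scan)(void);
};

TAILQ_HEAD(toy_bus_list, toy_bus);
static struct toy_bus_list toy_bus_list =
	TAILQ_HEAD_INITIALIZER(toy_bus_list);

/* Mirrors the checks and tail insert done by rte_bus_register(). */
static void toy_bus_register(struct toy_bus *bus)
{
	assert(bus != NULL && bus->name != NULL && strlen(bus->name) > 0);
	assert(bus->scan != NULL);
	TAILQ_INSERT_TAIL(&toy_bus_list, bus, next);
}

/* Like RTE_REGISTER_BUS: a constructor that runs before main(). */
#define TOY_REGISTER_BUS(nm, bus)					\
	static void __attribute__((constructor)) businit_##nm(void)	\
	{								\
		(bus).name = #nm;					\
		toy_bus_register(&(bus));				\
	}

static int scanned;	/* number of scan() calls made */
static int pci_scan(void) { scanned++; return 0; }
static int vdev_scan(void) { scanned++; return -1; }	/* simulated failure */

static struct toy_bus pci_bus = { .scan = pci_scan };
static struct toy_bus vdev_bus = { .scan = vdev_scan };
TOY_REGISTER_BUS(pci, pci_bus)
TOY_REGISTER_BUS(vdev, vdev_bus)

/* Like rte_bus_scan(): call every scan(), log failures, keep going. */
static int toy_bus_scan(void)
{
	struct toy_bus *bus;

	TAILQ_FOREACH(bus, &toy_bus_list, next) {
		if (bus->scan() != 0)
			fprintf(stderr, "Scan for (%s) bus failed.\n", bus->name);
	}
	return 0;
}
```

Calling `toy_bus_scan()` from `main()` visits both buses, prints one error line for "vdev", and still returns 0. The order of constructors with equal priority is not something to rely on, which is why DPDK uses explicit priorities in RTE_INIT_PRIO.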

Important structures

rte_bus_list

```c
struct rte_bus {
	TAILQ_ENTRY(rte_bus) next;	/**< Next bus object in linked list */
	const char *name;		/**< Name of the bus */
	rte_bus_scan_t scan;		/**< Scan for devices attached to bus */
	rte_bus_probe_t probe;		/**< Probe devices on bus */
	rte_bus_find_device_t find_device; /**< Find a device on the bus */
	rte_bus_plug_t plug;		/**< Probe single device for drivers */
	rte_bus_unplug_t unplug;	/**< Remove single device from driver */
	rte_bus_parse_t parse;		/**< Parse a device name */
	struct rte_bus_conf conf;	/**< Bus configuration */
};

TAILQ_HEAD(rte_bus_list, rte_bus);

/* from <sys/queue.h> */
#define TAILQ_HEAD(name, type)						\
struct name {								\
	struct type *tqh_first;	/* first element */			\
	struct type **tqh_last;	/* addr of last next element */		\
}

/* Definition of rte_bus_list */
struct rte_bus_list rte_bus_list =
	TAILQ_HEAD_INITIALIZER(rte_bus_list);
```
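The `tqh_last` field is what makes TAILQ_INSERT_TAIL O(1): it stores the address of the last `next` pointer in the list (or of `tqh_first` when the list is empty), so appending just patches that pointer. The following hand-rolled, single-type version is a hypothetical illustration of the same idea (node, node_list, list_init, list_insert_tail and list_remove are made-up names), not a copy of `<sys/queue.h>`.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical hand-rolled equivalent of TAILQ_ENTRY for one type. */
struct node {
	int value;
	struct node *next;	/* like tqe_next */
	struct node **prev;	/* like tqe_prev: address of previous next ptr */
};

/* Hypothetical equivalent of TAILQ_HEAD. */
struct node_list {
	struct node *first;	/* like tqh_first */
	struct node **last;	/* like tqh_last: address of last next ptr */
};

static void list_init(struct node_list *h)
{
	h->first = NULL;
	h->last = &h->first;	/* empty list: the "last next pointer" is first */
}

/* O(1) tail insert, same idea as TAILQ_INSERT_TAIL. */
static void list_insert_tail(struct node_list *h, struct node *n)
{
	assert(h != NULL && n != NULL);
	n->next = NULL;
	n->prev = h->last;
	*h->last = n;		/* patch whichever pointer currently ends the list */
	h->last = &n->next;
}

/* O(1) removal without walking the list, same idea as TAILQ_REMOVE. */
static void list_remove(struct node_list *h, struct node *n)
{
	if (n->next != NULL)
		n->next->prev = n->prev;
	else
		h->last = n->prev;	/* removing the tail: move tqh_last back */
	*n->prev = n->next;
}
```

Because each element also stores the address of the pointer that points to it, removal never has to special-case the head of the list.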

Registering the PCI bus

Insert rte_pci_bus into the rte_bus_list linked list:

```c
struct rte_pci_bus {
	struct rte_bus bus;			/**< Inherit the generic class */
	struct rte_pci_device_list device_list;	/**< List of PCI devices */
	struct rte_pci_driver_list driver_list;	/**< List of PCI drivers */
};

/* Definition of rte_pci_bus */
struct rte_pci_bus rte_pci_bus = {
	.bus = {
		.scan = rte_pci_scan,
		.probe = rte_pci_probe,
		.find_device = pci_find_device,
		.plug = pci_plug,
		.unplug = pci_unplug,
		.parse = pci_parse,
	},
	.device_list = TAILQ_HEAD_INITIALIZER(rte_pci_bus.device_list),
	.driver_list = TAILQ_HEAD_INITIALIZER(rte_pci_bus.driver_list),
};

RTE_REGISTER_BUS(pci, rte_pci_bus.bus);

#define RTE_REGISTER_BUS(nm, bus) \
RTE_INIT_PRIO(businitfn_ ##nm, 101); /* GCC constructor, runs before main() */ \
static void businitfn_ ##nm(void) \
{ \
	(bus).name = RTE_STR(nm); \
	rte_bus_register(&bus); \
}

void
rte_bus_register(struct rte_bus *bus)
{
	RTE_VERIFY(bus);
	RTE_VERIFY(bus->name && strlen(bus->name));
	/* A bus should mandatorily have the scan implemented */
	RTE_VERIFY(bus->scan);
	RTE_VERIFY(bus->probe);
	RTE_VERIFY(bus->find_device);
	/* Buses supporting driver plug also require unplug. */
	RTE_VERIFY(!bus->plug || bus->unplug);

	/* insert rte_pci_bus.bus into the rte_bus_list linked list */
	TAILQ_INSERT_TAIL(&rte_bus_list, bus, next);
	RTE_LOG(DEBUG, EAL, "Registered [%s] bus.\n", bus->name);
}
```
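`struct rte_pci_bus` "inherits" from `struct rte_bus` by embedding it, and only `&rte_pci_bus.bus` goes onto rte_bus_list. PCI-specific code gets back to the outer structure with the classic container_of idiom (DPDK ships a `container_of` macro in rte_common.h). The sketch below uses hypothetical cut-down types (struct bus, struct pci_bus with an nb_devices counter standing in for device_list) and a local CONTAINER_OF macro for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical cut-down versions of struct rte_bus / struct rte_pci_bus. */
struct bus {
	const char *name;
};

struct pci_bus {
	struct bus bus;		/* "Inherit the generic class" */
	int nb_devices;		/* bus-specific state (stands in for device_list) */
};

/* Classic container_of: recover the outer struct from a member pointer. */
#define CONTAINER_OF(ptr, type, member) \
	((type *)((char *)(ptr) - offsetof(type, member)))

/* Generic code only holds a struct bus *; PCI code converts back. */
static int pci_bus_device_count(struct bus *b)
{
	struct pci_bus *pb;

	assert(b != NULL);
	pb = CONTAINER_OF(b, struct pci_bus, bus);
	return pb->nb_devices;
}
```

Since `bus` is the first member, a plain cast would happen to work here too; container_of keeps working even if the embedded member is moved.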

Device initialization flow:

PCI devices are initialized along the path rte_eal_init -> rte_bus_scan -> rte_pci_scan, and the matching drivers are loaded along the path rte_eal_init -> rte_bus_probe -> rte_pci_probe.

Registering a PCI driver

Insert rte_ixgbe_pmd into the rte_pci_bus.driver_list linked list:

```c
struct rte_pci_driver {
	TAILQ_ENTRY(rte_pci_driver) next;	/**< Next in list. */
	struct rte_driver driver;		/**< Inherit core driver. */
	struct rte_pci_bus *bus;		/**< PCI bus reference. */
	pci_probe_t *probe;			/**< Device Probe function. */
	pci_remove_t *remove;			/**< Device Remove function. */
	const struct rte_pci_id *id_table;	/**< ID table, NULL terminated. */
	uint32_t drv_flags;			/**< Flags controlling handling of device. */
};

/* Definition of rte_ixgbe_pmd */
static struct rte_pci_driver rte_ixgbe_pmd = {
	.id_table = pci_id_ixgbe_map,
	.drv_flags = RTE_PCI_DRV_NEED_MAPPING | RTE_PCI_DRV_INTR_LSC,
	.probe = eth_ixgbe_pci_probe,
	.remove = eth_ixgbe_pci_remove,
};

RTE_PMD_REGISTER_PCI(net_ixgbe, rte_ixgbe_pmd);

#define RTE_PMD_REGISTER_PCI(nm, pci_drv) \
RTE_INIT(pciinitfn_ ##nm); /* GCC constructor, runs before main() */ \
static void pciinitfn_ ##nm(void) \
{ \
	(pci_drv).driver.name = RTE_STR(nm); \
	rte_pci_register(&pci_drv); \
} \
RTE_PMD_EXPORT_NAME(nm, __COUNTER__)

void
rte_pci_register(struct rte_pci_driver *driver)
{
	/* insert rte_ixgbe_pmd into the rte_pci_bus.driver_list linked list */
	TAILQ_INSERT_TAIL(&rte_pci_bus.driver_list, driver, next);
	driver->bus = &rte_pci_bus;
}
```
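During probe, rte_pci_match() decides whether a device belongs to a driver by walking the driver's id_table until an entry with vendor_id 0 terminates it, with PCI_ANY_ID (0xffff) acting as a wildcard. The following is a hypothetical, simplified version that only compares vendor and device IDs (the real struct rte_pci_id also carries subsystem and class fields); pci_id, pci_id_match and ANY_ID are made-up names. The test uses 8086:10fb, an Intel 82599 SFP+ device ID, as an example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define ANY_ID 0xffff	/* hypothetical wildcard, same value as PCI_ANY_ID */

/* Hypothetical simplified PCI ID: vendor and device only. */
struct pci_id {
	uint16_t vendor_id;
	uint16_t device_id;
};

/* Return 1 if dev matches an entry of a table terminated by vendor_id == 0. */
static int pci_id_match(const struct pci_id *table, const struct pci_id *dev)
{
	const struct pci_id *id;

	assert(table != NULL && dev != NULL);
	for (id = table; id->vendor_id != 0; id++) {
		if (id->vendor_id != dev->vendor_id && id->vendor_id != ANY_ID)
			continue;
		if (id->device_id != dev->device_id && id->device_id != ANY_ID)
			continue;
		return 1;
	}
	return 0;
}
```

The zero terminator is why driver ID tables such as pci_id_ixgbe_map end with an all-zero entry.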

eth_ixgbe_dev_init()

```c
static int
eth_ixgbe_dev_init(struct rte_eth_dev *eth_dev)
{
	...
	eth_dev->dev_ops = &ixgbe_eth_dev_ops;	/* register the ixgbe_eth_dev_ops table */
	eth_dev->rx_pkt_burst = &ixgbe_recv_pkts;	/* burst receive function */
	eth_dev->tx_pkt_burst = &ixgbe_xmit_pkts;	/* burst transmit function */
	eth_dev->tx_pkt_prepare = &ixgbe_prep_pkts;
	...
	hw->device_id = pci_dev->id.device_id;	/* device_id */
	hw->vendor_id = pci_dev->id.vendor_id;	/* vendor_id */
	hw->hw_addr = (void *)pci_dev->mem_resource[0].addr;	/* virtual address of the BAR obtained via mmap() */
	...
	/* ixgbe_init_shared_code() ->
	 *     ixgbe_set_mac_type()
	 *     ixgbe_init_ops_82599()
	 * ixgbe_set_mac_type() sets hw->mac.type from vendor_id and device_id;
	 * for the 82599 it is ixgbe_mac_82599EB. The function matching
	 * hw->mac.type then fills hw->mac.ops; for the 82599 that is
	 * ixgbe_init_ops_82599().
	 */
	diag = ixgbe_init_shared_code(hw);
	...
	/* ixgbe_init_hw() ->
	 *     ixgbe_call_func() ->
	 *         ixgbe_init_hw_generic() ->
	 *             ixgbe_reset_hw_82599() ->
	 *                 ixgbe_get_mac_addr_generic()
	 * Reads the NIC's MAC address.
	 */
	diag = ixgbe_init_hw(hw);
	...
	/* copy the NIC's MAC address into eth_dev->data->mac_addrs */
	ether_addr_copy((struct ether_addr *) hw->mac.perm_addr,
			&eth_dev->data->mac_addrs[0]);
	...
}

static const struct eth_dev_ops ixgbe_eth_dev_ops = {
	.dev_configure = ixgbe_dev_configure,
	.dev_start = ixgbe_dev_start,
	...
	.rx_queue_setup = ixgbe_dev_rx_queue_setup,
	...
	.tx_queue_setup = ixgbe_dev_tx_queue_setup,
	...
};
```

eth_ixgbe_pci_probe()

```c
static int eth_ixgbe_pci_probe(struct rte_pci_driver *pci_drv __rte_unused,
	struct rte_pci_device *pci_dev)
{
	return rte_eth_dev_pci_generic_probe(pci_dev,
		sizeof(struct ixgbe_adapter), eth_ixgbe_dev_init);
}

static inline int
rte_eth_dev_pci_generic_probe(struct rte_pci_device *pci_dev,
	size_t private_data_size, eth_dev_pci_callback_t dev_init)
{
	...
	eth_dev = rte_eth_dev_pci_allocate(pci_dev, private_data_size);
	...
	ret = dev_init(eth_dev);	/* eth_ixgbe_dev_init() for ixgbe */
	...
}

static inline struct rte_eth_dev *
rte_eth_dev_pci_allocate(struct rte_pci_device *dev, size_t private_data_size)
{
	...
	/* rte_eth_dev_allocate() ->
	 *     rte_eth_dev_find_free_port()
	 *     rte_eth_dev_data_alloc()
	 *     eth_dev_get()
	 */
	eth_dev = rte_eth_dev_allocate(name);
	...
	/* allocate the private data; struct ixgbe_adapter for ixgbe */
	eth_dev->data->dev_private = rte_zmalloc_socket(name,
		private_data_size, RTE_CACHE_LINE_SIZE,
		dev->device.numa_node);
	...
}

struct rte_eth_dev *
rte_eth_dev_allocate(const char *name)
{
	...
	/* walk the rte_eth_devices array to find a free port */
	port_id = rte_eth_dev_find_free_port();
	...
	/* allocate the rte_eth_dev_data array */
	rte_eth_dev_data_alloc();
	...
	/* set the state of the device for port_id to RTE_ETH_DEV_ATTACHED */
	eth_dev = eth_dev_get(port_id);
	...
}
```
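The port allocation step boils down to a linear search for an unused slot in a fixed-size device array, followed by a zeroed allocation of driver-private data. The sketch below is hypothetical: MAX_PORTS stands in for RTE_MAX_ETHPORTS, struct eth_port for struct rte_eth_dev, and calloc() for rte_zmalloc_socket() (which additionally honors the NUMA node and cache-line alignment). Like rte_eth_dev_find_free_port(), find_free_port() returns the array size when no slot is free.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define MAX_PORTS 4	/* hypothetical stand-in for RTE_MAX_ETHPORTS */

enum port_state { PORT_UNUSED = 0, PORT_ATTACHED };

/* Hypothetical stand-in for struct rte_eth_dev. */
struct eth_port {
	enum port_state state;
	void *dev_private;	/* driver private data, e.g. struct ixgbe_adapter */
};

static struct eth_port ports[MAX_PORTS];	/* like rte_eth_devices[] */

/* First unused slot, or MAX_PORTS if all are taken. */
static uint16_t find_free_port(void)
{
	uint16_t i;

	for (i = 0; i < MAX_PORTS; i++)
		if (ports[i].state == PORT_UNUSED)
			return i;
	return MAX_PORTS;
}

/* Allocate a port plus zeroed private data; returns the port id or -1. */
static int port_allocate(size_t private_size)
{
	uint16_t id = find_free_port();

	assert(private_size > 0);
	if (id == MAX_PORTS)
		return -1;
	ports[id].dev_private = calloc(1, private_size);	/* rte_zmalloc_socket() in DPDK */
	if (ports[id].dev_private == NULL)
		return -1;
	ports[id].state = PORT_ATTACHED;
	return id;
}
```

Port IDs are therefore just indexes into the device array, which is what lets rte_eth_rx_burst() find a device with a single array lookup.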

ixgbe_recv_pkts()

Descriptor write-back on receive:

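1. The NIC uses DMA to write the frame from its Rx FIFO into the mbuf of the corresponding descriptor in the Rx ring, then sets the descriptor's DD bit to 1.

2. After the driver takes the mbuf, it clears the descriptor's DD bit to 0 and updates RDT.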
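The reason the driver can clear DD simply by writing the descriptor is that an advanced Rx descriptor is a 16-byte union: the "read" format the driver writes (packet and header buffer addresses) overlaps the "write-back" format the NIC fills in, and `hdr_addr` occupies the same bytes as `wb.upper.status_error`. The types below are a hypothetical simplification of union ixgbe_adv_rx_desc (the lower write-back half is flattened to one 64-bit field), and the rte_cpu_to_le_* conversions are omitted.

```c
#include <assert.h>
#include <stdint.h>

#define RXD_STAT_DD 0x1u	/* DD is bit 0 of status_error */

/* Hypothetical simplification of union ixgbe_adv_rx_desc. */
union rx_desc {
	struct {
		uint64_t pkt_addr;	/* buffer address, written by the driver */
		uint64_t hdr_addr;	/* header buffer address */
	} read;
	struct {
		uint64_t lower;		/* RSS hash, packet type, ... (flattened) */
		struct {
			uint32_t status_error;	/* overlaps read.hdr_addr */
			uint16_t length;
			uint16_t vlan;
		} upper;
	} wb;
};

_Static_assert(sizeof(union rx_desc) == 16, "descriptor must be 16 bytes");

/* What the NIC does after DMA-ing a frame: write back length, set DD. */
static void nic_write_back(union rx_desc *d, uint16_t len)
{
	d->wb.upper.length = len;
	d->wb.upper.status_error = RXD_STAT_DD;
}

static int desc_done(const union rx_desc *d)
{
	return (d->wb.upper.status_error & RXD_STAT_DD) != 0;
}

/* What the driver does on refill: hdr_addr = 0 also wipes status_error. */
static void driver_refill(union rx_desc *d, uint64_t new_buf_addr)
{
	assert(new_buf_addr != 0);
	d->read.hdr_addr = 0;
	d->read.pkt_addr = new_buf_addr;
}
```

This is the same trick as the `rxdp->read.hdr_addr = 0;` line in ixgbe_recv_pkts(): one store both prepares the descriptor for the NIC and makes it look "not done" to the driver.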

```c
uint16_t
ixgbe_recv_pkts(void *rx_queue, struct rte_mbuf **rx_pkts,
		uint16_t nb_pkts)
{
	...
	nb_rx = 0;
	nb_hold = 0;
	rxq = rx_queue;
	rx_id = rxq->rx_tail;	/* equivalent to next_to_clean in the ixgbe kernel driver */
	rx_ring = rxq->rx_ring;
	sw_ring = rxq->sw_ring;
	...
	while (nb_rx < nb_pkts) {
		...
		/* pointer to the desc at rx_tail */
		rxdp = &rx_ring[rx_id];
		/* stop if the DD bit written back by the NIC is 0 */
		staterr = rxdp->wb.upper.status_error;
		if (!(staterr & rte_cpu_to_le_32(IXGBE_RXDADV_STAT_DD)))
			break;
		/* local copy of the desc at rx_tail */
		rxd = *rxdp;
		...
		/* allocate a new mbuf */
		nmb = rte_mbuf_raw_alloc(rxq->mb_pool);
		...
		nb_hold++;		/* count mbufs taken from the ring */
		rxe = &sw_ring[rx_id];	/* entry holding the old mbuf */
		rx_id++;		/* index of the next desc; the ring wraps around */
		if (rx_id == rxq->nb_rx_desc)
			rx_id = 0;
		...
		rte_ixgbe_prefetch(sw_ring[rx_id].mbuf);	/* prefetch the next mbuf */
		...
		if ((rx_id & 0x3) == 0) {
			rte_ixgbe_prefetch(&rx_ring[rx_id]);
			rte_ixgbe_prefetch(&sw_ring[rx_id]);
		}
		...
		rxm = rxe->mbuf;	/* rxm: the old mbuf */
		rxe->mbuf = nmb;	/* the sw_ring entry now holds the new mbuf */
		dma_addr =
			rte_cpu_to_le_64(rte_mbuf_data_dma_addr_default(nmb));	/* bus address of the new mbuf */
		rxdp->read.hdr_addr = 0;	/* clears DD (overlaps wb.upper.status_error); the NIC reads this desc later */
		rxdp->read.pkt_addr = dma_addr;	/* new mbuf's bus address; the NIC reads this desc later */
		...
		pkt_len = (uint16_t) (rte_le_to_cpu_16(rxd.wb.upper.length) -
				      rxq->crc_len);	/* packet length */
		rxm->data_off = RTE_PKTMBUF_HEADROOM;
		rte_packet_prefetch((char *)rxm->buf_addr + rxm->data_off);
		rxm->nb_segs = 1;
		rxm->next = NULL;
		rxm->pkt_len = pkt_len;
		rxm->data_len = pkt_len;
		rxm->port = rxq->port_id;
		...
		if (likely(pkt_flags & PKT_RX_RSS_HASH))	/* RSS */
			rxm->hash.rss = rte_le_to_cpu_32(
					rxd.wb.lower.hi_dword.rss);
		else if (pkt_flags & PKT_RX_FDIR) {	/* FDIR */
			rxm->hash.fdir.hash = rte_le_to_cpu_16(
					rxd.wb.lower.hi_dword.csum_ip.csum) &
					IXGBE_ATR_HASH_MASK;
			rxm->hash.fdir.id = rte_le_to_cpu_16(
					rxd.wb.lower.hi_dword.csum_ip.ip_id);
		}
		...
		rx_pkts[nb_rx++] = rxm;	/* return the old mbuf in rx_pkts */
	}
	rxq->rx_tail = rx_id;	/* rx_tail points to the next desc */
	...
	nb_hold = (uint16_t) (nb_hold + rxq->nb_rx_hold);
	/* if more descriptors are held than rx_free_thresh (default 32), update RDT */
	if (nb_hold > rxq->rx_free_thresh) {
		...
		rx_id = (uint16_t) ((rx_id == 0) ?
				     (rxq->nb_rx_desc - 1) : (rx_id - 1));
		IXGBE_PCI_REG_WRITE(rxq->rdt_reg_addr, rx_id);	/* write rx_id to RDT */
		nb_hold = 0;	/* reset nb_hold */
	}
	rxq->nb_rx_hold = nb_hold;	/* save nb_rx_hold for the next call */
	return nb_rx;
}
```
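The end of ixgbe_recv_pkts() batches RDT updates: it only writes the tail register once more than rx_free_thresh descriptors have been refilled, and it writes the index one behind rx_id, wrapping to nb_rx_desc - 1 when rx_id is 0. The driver's own comment explains this as keeping RDT from ever equaling RDH, which the hardware would interpret as a full ring. The helper below is a hypothetical extraction of just that arithmetic (rx_update_tail is a made-up name); the MMIO write itself is left to the caller.

```c
#include <assert.h>
#include <stdint.h>

/* Decide whether to write RDT and to what value.
 * rx_id:       next descriptor to clean (rxq->rx_tail after the loop)
 * nb_hold:     descriptors refilled but not yet handed back (in/out)
 * Returns 1 and sets *rdt when the register should be written. */
static int rx_update_tail(uint16_t rx_id, uint16_t nb_desc, uint16_t *nb_hold,
			  uint16_t free_thresh, uint16_t *rdt)
{
	assert(nb_desc > 0 && rx_id < nb_desc);
	if (*nb_hold <= free_thresh)
		return 0;	/* keep accumulating, save an MMIO write */
	/* one behind rx_id, wrapping around the ring */
	*rdt = (rx_id == 0) ? (uint16_t)(nb_desc - 1) : (uint16_t)(rx_id - 1);
	*nb_hold = 0;
	return 1;
}
```

With the default threshold of 32, a burst loop that receives a few packets per call touches the register only every few dozen packets, which is much of the point of the batching.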

ixgbe_xmit_pkts()

```c
uint16_t
ixgbe_xmit_pkts(void *tx_queue, struct rte_mbuf **tx_pkts,
		uint16_t nb_pkts)
{
	...
	txq = tx_queue;
	sw_ring = txq->sw_ring;
	txr = txq->tx_ring;
	tx_id = txq->tx_tail;	/* equivalent to next_to_use in the ixgbe kernel driver */
	txe = &sw_ring[tx_id];	/* sw_ring entry at tx_tail */
	txp = NULL;
	...
	/* if free descriptors fall below tx_free_thresh (default 32),
	 * reclaim descriptors the NIC has finished with */
	if (txq->nb_tx_free < txq->tx_free_thresh)
		ixgbe_xmit_cleanup(txq);
	...
	/* TX loop */
	for (nb_tx = 0; nb_tx < nb_pkts; nb_tx++) {
		...
		tx_pkt = *tx_pkts++;		/* mbuf to send */
		pkt_len = tx_pkt->pkt_len;	/* its length */
		...
		/* descs needed: one per segment, plus one if a new context desc is required */
		nb_used = (uint16_t)(tx_pkt->nb_segs + new_ctx);
		...
		tx_last = (uint16_t) (tx_id + nb_used - 1);	/* index of the packet's last desc */
		...
		if (tx_last >= txq->nb_tx_desc)	/* the ring wraps around */
			tx_last = (uint16_t) (tx_last - txq->nb_tx_desc);
		...
		if (nb_used > txq->nb_tx_free) {
			...
			if (ixgbe_xmit_cleanup(txq) != 0) {
				/* Could not clean any descriptors */
				if (nb_tx == 0)		/* nothing queued yet: return 0 */
					return 0;
				goto end_of_tx;		/* some packets queued: stop and update the queue */
			}
			...
		}
		...
		/* a packet may have several segments; m_seg starts at the first */
		m_seg = tx_pkt;
		do {
			txd = &txr[tx_id];		/* current desc */
			txn = &sw_ring[txe->next_id];	/* next entry */
			...
			txe->mbuf = m_seg;		/* attach m_seg to txe */
			...
			slen = m_seg->data_len;		/* segment length */
			buf_dma_addr = rte_mbuf_data_dma_addr(m_seg);	/* segment bus address */
			txd->read.buffer_addr =
				rte_cpu_to_le_64(buf_dma_addr);	/* bus address into the desc */
			txd->read.cmd_type_len =
				rte_cpu_to_le_32(cmd_type_len | slen);	/* length into the desc */
			...
			txe->last_id = tx_last;		/* last_id: the packet's last desc */
			tx_id = txe->next_id;		/* tx_id: next desc */
			txe = txn;			/* txe: next entry */
			m_seg = m_seg->next;		/* next segment */
		} while (m_seg != NULL);
		...
		/* last segment of this packet */
		cmd_type_len |= IXGBE_TXD_CMD_EOP;
		txq->nb_tx_used = (uint16_t)(txq->nb_tx_used + nb_used);	/* update nb_tx_used */
		txq->nb_tx_free = (uint16_t)(txq->nb_tx_free - nb_used);	/* update nb_tx_free */
		...
		/* if descs used since the last RS reach tx_rs_thresh (default 32), set RS */
		if (txq->nb_tx_used >= txq->tx_rs_thresh) {
			...
			cmd_type_len |= IXGBE_TXD_CMD_RS;
			...
			txp = NULL;	/* NULL: RS has been set */
		} else
			txp = txd;	/* non-NULL: RS not set yet */
		...
		txd->read.cmd_type_len |= rte_cpu_to_le_32(cmd_type_len);
	}
	...
end_of_tx:
	/* last segment of the last packet in the burst */
	...
	if (txp != NULL)	/* RS not set yet: set it now */
		txp->read.cmd_type_len |= rte_cpu_to_le_32(IXGBE_TXD_CMD_RS);
	...
	IXGBE_PCI_REG_WRITE_RELAXED(txq->tdt_reg_addr, tx_id);	/* write tx_id to TDT */
	txq->tx_tail = tx_id;	/* tx_tail points to the next desc */
	...
	return nb_tx;
}
```
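Two pieces of bookkeeping in ixgbe_xmit_pkts() are easy to check in isolation: computing the ring index of a packet's last descriptor with wrap-around, and deciding when to set RS (Report Status), which asks the NIC to write back completion for that descriptor. Setting RS only every tx_rs_thresh descriptors reduces write-back traffic. The helpers below are hypothetical (tx_last_index and tx_account are made-up names), and they assume the driver resets nb_tx_used to 0 whenever it sets RS, which happens in the part of the RS branch elided above.

```c
#include <assert.h>
#include <stdint.h>

/* Index of the last descriptor a packet occupies, wrapping around the ring. */
static uint16_t tx_last_index(uint16_t tx_id, uint16_t nb_used, uint16_t nb_desc)
{
	uint16_t last = (uint16_t)(tx_id + nb_used - 1);

	assert(nb_used > 0 && nb_used <= nb_desc && tx_id < nb_desc);
	if (last >= nb_desc)
		last = (uint16_t)(last - nb_desc);
	return last;
}

/* Account for one packet's descriptors; return 1 if its last desc gets RS. */
static int tx_account(uint16_t *nb_used_total, uint16_t *nb_free,
		      uint16_t nb_used, uint16_t rs_thresh)
{
	assert(nb_used <= *nb_free);
	*nb_used_total = (uint16_t)(*nb_used_total + nb_used);
	*nb_free = (uint16_t)(*nb_free - nb_used);
	if (*nb_used_total >= rs_thresh) {
		*nb_used_total = 0;	/* assumed reset once RS is set */
		return 1;
	}
	return 0;
}
```

Packets that end between RS points are covered by the `txp` fallback in end_of_tx, which sets RS on the last descriptor of the burst.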

rte_eth_rx/tx_burst()

```c
static inline uint16_t
rte_eth_rx_burst(uint16_t port_id, uint16_t queue_id,
		 struct rte_mbuf **rx_pkts, const uint16_t nb_pkts)
{
	/* device for port_id */
	struct rte_eth_dev *dev = &rte_eth_devices[port_id];
	...
	/* for ixgbe: ixgbe_recv_pkts(), as set in eth_ixgbe_dev_init();
	 * ixgbe_set_rx_function() may select a bulk-alloc or vector variant */
	int16_t nb_rx = (*dev->rx_pkt_burst)(dev->data->rx_queues[queue_id],
			rx_pkts, nb_pkts);
	...
}

static inline uint16_t
rte_eth_tx_burst(uint16_t port_id, uint16_t queue_id,
		 struct rte_mbuf **tx_pkts, uint16_t nb_pkts)
{
	/* device for port_id */
	struct rte_eth_dev *dev = &rte_eth_devices[port_id];
	...
	/* for ixgbe: ixgbe_xmit_pkts(); ixgbe_set_tx_function() may select
	 * a simple or vector variant */
	return (*dev->tx_pkt_burst)(dev->data->tx_queues[queue_id], tx_pkts, nb_pkts);
}
```
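rte_eth_rx_burst() is only a thin dispatcher: index the global device array by port, then call through the function pointer the driver installed at init time. The sketch below reproduces that shape with hypothetical types (struct eth_dev, devices[], eth_rx_burst, and a fake driver fake_recv_pkts whose "queue" is just a counter of waiting packets). The asserts stand in for the checks DPDK performs only when ethdev debugging is enabled.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_PORTS 2	/* hypothetical */

typedef uint16_t (*rx_burst_t)(void *rxq, void **pkts, uint16_t nb_pkts);

/* Hypothetical cut-down struct rte_eth_dev: burst pointer plus one queue. */
struct eth_dev {
	rx_burst_t rx_pkt_burst;	/* set by the driver, e.g. ixgbe_recv_pkts */
	void *rx_queues[1];
};

static struct eth_dev devices[MAX_PORTS];	/* like rte_eth_devices[] */

/* Same shape as rte_eth_rx_burst(): array lookup, then indirect call. */
static inline uint16_t eth_rx_burst(uint16_t port_id, uint16_t queue_id,
				    void **pkts, uint16_t nb_pkts)
{
	struct eth_dev *dev;

	assert(port_id < MAX_PORTS && queue_id < 1);
	dev = &devices[port_id];
	assert(dev->rx_pkt_burst != NULL);
	return dev->rx_pkt_burst(dev->rx_queues[queue_id], pkts, nb_pkts);
}

/* Fake driver burst function: returns up to nb_pkts waiting "packets". */
static uint16_t fake_recv_pkts(void *rxq, void **pkts, uint16_t nb_pkts)
{
	uint16_t *waiting = rxq;
	uint16_t n = 0;

	while (n < nb_pkts && *waiting > 0) {
		pkts[n++] = NULL;	/* a real PMD stores struct rte_mbuf * here */
		(*waiting)--;
	}
	return n;
}
```

Because the call is indirect, the same application code works for every PMD, and a driver can swap in a faster burst function (as ixgbe does when it picks a vector path) without the application noticing.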

1. Fetching packets from descriptors and returning them to the application

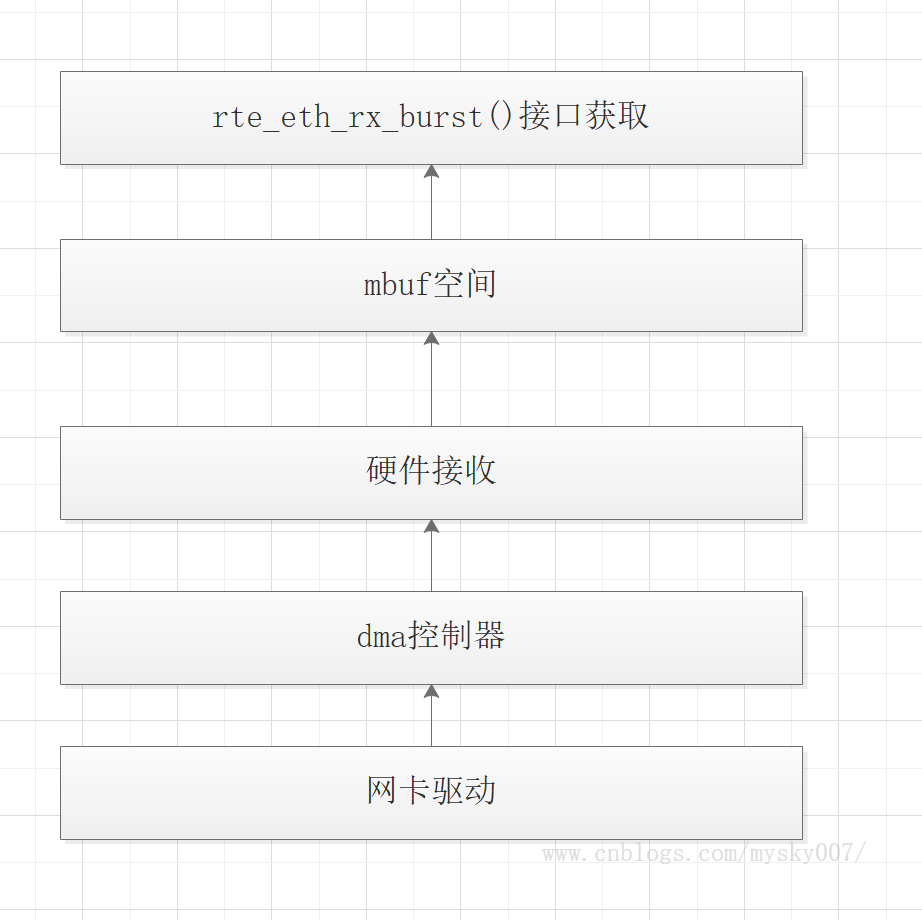

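Starting from the position where the application last stopped receiving, the receive function locates the next descriptor in the descriptor ring. It then checks whether that descriptor's status_error field has the DD (Descriptor Done) bit set. The DMA controller sets DD after it has stored a received packet in the mbuf the descriptor points to, so a set DD bit means the packet is already in the mbuf. Once the packet has been taken, the receive path must clear DD again; this happens when the descriptor is refilled (step 2 below).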

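Finding the descriptor also finds the mbuf. The driver then fills in the mbuf structure from the information in the descriptor, such as the packet length, VLAN ID and RSS hash, stores the mbuf in the array passed in by the application, and returns it, so the application now has the packet.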

```c
uint16_t
eth_igb_recv_pkts(void *rx_queue, struct rte_mbuf **rx_pkts, uint16_t nb_pkts)
{
	/* ... local declarations and queue setup omitted ... */
	while (nb_rx < nb_pkts) {
		/* descriptor at the position where the application last stopped */
		rxdp = &rx_ring[rx_id];
		staterr = rxdp->wb.upper.status_error;
		/* DD not set: the NIC has not put a packet here yet, so stop */
		if (!(staterr & rte_cpu_to_le_32(E1000_RXD_STAT_DD)))
			break;
		rxd = *rxdp;	/* local copy of the written-back descriptor */
		/* the same index in the software ring gives the mbuf */
		rxe = &sw_ring[rx_id];
		rx_id++;
		if (rx_id == rxq->nb_rx_desc)	/* the ring wraps around */
			rx_id = 0;
		rxm = rxe->mbuf;
		/* fill in the mbuf from the descriptor */
		pkt_len = (uint16_t) (rte_le_to_cpu_16(rxd.wb.upper.length) - rxq->crc_len);
		rxm->data_off = RTE_PKTMBUF_HEADROOM;
		rxm->nb_segs = 1;
		rxm->pkt_len = pkt_len;
		rxm->data_len = pkt_len;
		rxm->port = rxq->port_id;
		rxm->hash.rss = rxd.wb.lower.hi_dword.rss;
		rxm->vlan_tci = rte_le_to_cpu_16(rxd.wb.upper.vlan);
		/* hand the mbuf to the application */
		rx_pkts[nb_rx++] = rxm;
	}
	/* ... */
	return nb_rx;
}
```

2. Allocating a new mbuf from the mempool and handing it to the DMA controller

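After the receive path takes an mbuf from the software ring, it allocates a new mbuf from the mempool and writes the new mbuf's address into the descriptor, which effectively tells the DMA controller to store the next received packet in this new mbuf. This is a sleight-of-hand swap: the old mbuf goes up to the application and a fresh one quietly takes its place in the ring. The descriptor is the intermediary between the mbuf and the DMA controller. How does the DMA controller know where the descriptor ring is? As covered in the previous article, the ring's address is written into NIC registers, and the DMA controller reads those registers to find it.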

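Note that writing the mbuf address into the descriptor also clears the DD bit. This matters when the ring wraps around to this descriptor again: DD will only be set once the NIC has actually written a new packet into the new mbuf, so the driver cannot mistake a stale descriptor for a fresh one. The NIC itself learns that the descriptor is available again when the driver advances RDT.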

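```c
uint16_t
eth_igb_recv_pkts(void *rx_queue, struct rte_mbuf **rx_pkts, uint16_t nb_pkts)
{
	/* ... */
	while (nb_rx < nb_pkts) {
		/* ... */
		/* allocate a new mbuf */
		nmb = rte_rxmbuf_alloc(rxq->mb_pool);
		/* the old mbuf was taken by the application; put the new one in its
		 * software-ring slot so the NIC can store the next packet in it */
		rxe->mbuf = nmb;
		dma_addr = rte_cpu_to_le_64(RTE_MBUF_DATA_DMA_ADDR_DEFAULT(nmb));
		/* writing the mbuf address into the descriptor tells the DMA controller where it is */
		rxdp->read.hdr_addr = dma_addr;	/* clears DD: hdr_addr overlaps wb.upper.status_error and the
						 * address's bit 0 is 0 (the ixgbe code above writes 0 instead) */
		rxdp->read.pkt_addr = dma_addr;
		/* ... */
	}
	/* ... */
}
```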
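Steps 1 and 2 together form one loop: harvest descriptors whose DD bit is set, hand the old buffer to the caller, put a freshly allocated buffer into the same slot, clear DD, and advance with wrap-around. The model below is a hypothetical simulation (RING_SIZE, struct desc, struct rxq, rxq_init and rxq_recv are made-up names; malloc() stands in for allocating an mbuf from the mempool, and the test plays the NIC by setting DD bits by hand).

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define RING_SIZE 4	/* hypothetical; real rings hold hundreds of descriptors */

/* Hypothetical model: a descriptor holds a buffer pointer and a DD flag;
 * sw_ring[] remembers which buffer each slot owns (like rxe->mbuf). */
struct desc { void *buf; int dd; };
struct rxq {
	struct desc ring[RING_SIZE];
	void *sw_ring[RING_SIZE];
	uint16_t rx_tail;	/* next descriptor to check */
};

static void rxq_init(struct rxq *q)
{
	for (int i = 0; i < RING_SIZE; i++) {
		q->sw_ring[i] = malloc(64);	/* stands in for an mbuf */
		assert(q->sw_ring[i] != NULL);
		q->ring[i].buf = q->sw_ring[i];
		q->ring[i].dd = 0;
	}
	q->rx_tail = 0;
}

/* Harvest completed descriptors and refill each slot with a fresh buffer. */
static uint16_t rxq_recv(struct rxq *q, void **pkts, uint16_t nb_pkts)
{
	uint16_t nb_rx = 0, id = q->rx_tail;

	while (nb_rx < nb_pkts && q->ring[id].dd) {
		void *fresh = malloc(64);	/* like rte_mbuf_raw_alloc() */
		if (fresh == NULL)
			break;			/* leave the packet for a later call */
		pkts[nb_rx++] = q->sw_ring[id];	/* old buffer goes to the caller */
		q->sw_ring[id] = fresh;		/* new buffer takes its slot */
		q->ring[id].buf = fresh;	/* tell the "DMA engine" about it */
		q->ring[id].dd = 0;		/* clear DD: slot is not done anymore */
		id = (uint16_t)((id + 1) % RING_SIZE);
	}
	q->rx_tail = id;
	return nb_rx;
}
```

If allocation fails, the sketch stops without consuming the descriptor, so the packet is still there on the next call; the ring never loses a buffer, which is the invariant the swap is designed to keep.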