0.目录

1.参考

2.问题定位

不间断空格的unicode表示为 uxa0',超出gbk编码范围?

3.如何处理

.extract_first().replace(u'xa0', u' ').strip().encode('utf-8','replace')

1.参考

Beautiful Soup and Unicode Problems

详细解释

unicodedata.normalize('NFKD',string) 实际作用???

Scrapy : Select tag with non-breaking space with xpath

>>> selector.xpath(u'''

... //p[normalize-space()]

... [not(contains(normalize-space(), "u00a0"))]normalize-space() 实际作用???

In [244]: sel.css('.content') Out[244]: [<Selector xpath=u"descendant-or-self::*[@class and contains(concat(' ', normalize-space(@class), ' '), ' content ')]" data=u'<p class="content text-

s.replace(u'xa0', u'').encode('utf-8')

2.问题定位

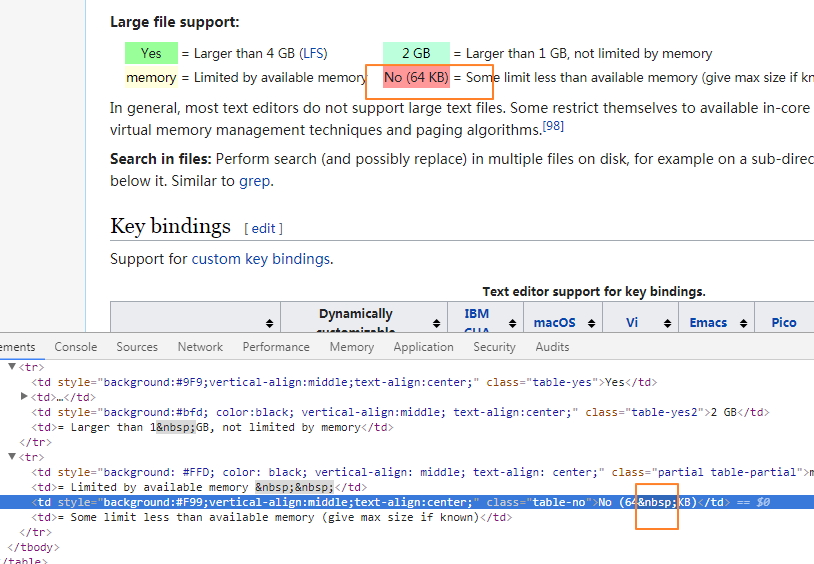

https://en.wikipedia.org/wiki/Comparison_of_text_editors

定位元素显示为 &npsp;

网页源代码表示为

<tr> <td style="background: #FFD; color: black; vertical-align: middle; text-align: center;" class="partial table-partial">memory</td> <td>= Limited by available memory   </td> <td style="background:#F99;vertical-align:middle;text-align:center;" class="table-no">No (64 KB)</td> <td>= Some limit less than available memory (give max size if known)</td> </tr> </table>

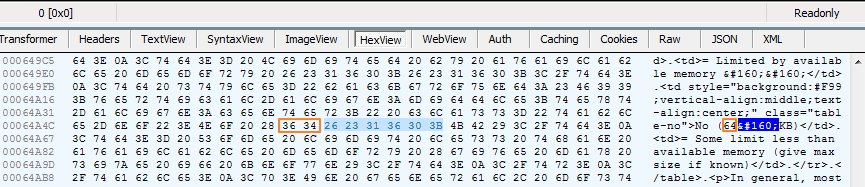

实际传输Hex为:

不间断空格的unicode表示为 uxa0',保存的时候编码 utf-8 则是 'xc2xa0'

In [211]: for tr in response.xpath('//table[8]/tr[2]'): ...: print [u''.join(i.xpath('.//text()').extract()) for i in tr.xpath('./*')] ...: [u'memory', u'= Limited by available memory xa0xa0', u'No (64xa0KB)', u'= Some limit less than available memory (give max size if known)'] In [212]: u'No (64xa0KB)'.encode('utf-8') Out[212]: 'No (64xc2xa0KB)' In [213]: u'No (64xa0KB)'.encode('utf-8').decode('utf-8') Out[213]: u'No (64xa0KB)'

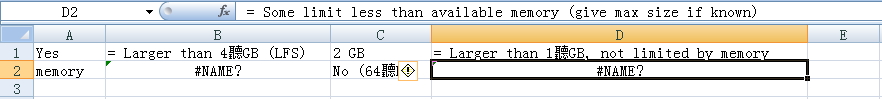

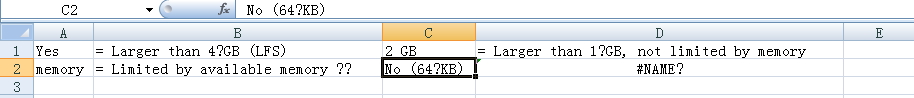

保存 csv 直接使用 excel 打开会有乱码(默认ANSI gbk 打开???,u'xa0' 超出 gbk 能够编码范围???),使用记事本或notepad++能够自动以 utf-8 正常打开。

使用记事本打开csv文件,另存为 ANSI 编码,之后 excel 正常打开。超出 gbk 编码范围的替换为'?'

3.如何处理

.extract_first().replace(u'xa0', u' ').strip().encode('utf-8','replace')