WebMagic documentation (Chinese): http://webmagic.io/docs/zh/

WebMagic video tutorial: https://www.bilibili.com/video/BV1cE411u7RA

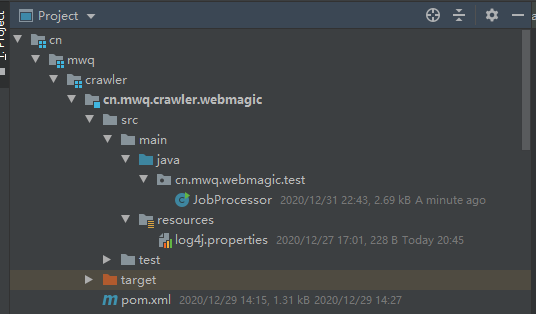

1. Maven project

log4j.properties

```properties
log4j.rootLogger=INFO,A1
log4j.appender.A1=org.apache.log4j.ConsoleAppender
log4j.appender.A1.layout=org.apache.log4j.PatternLayout
log4j.appender.A1.layout.ConversionPattern=%-d{yyyy-MM-dd HH:mm:ss,SSS} [%t] [%c]-[%p] %m%n
```

pom.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>cn.mwq</groupId>
    <artifactId>cn.mwq.crawler.webmagic</artifactId>
    <version>1.0-SNAPSHOT</version>

    <dependencies>
        <!-- https://mvnrepository.com/artifact/us.codecraft/webmagic-core -->
        <dependency>
            <groupId>us.codecraft</groupId>
            <artifactId>webmagic-core</artifactId>
            <version>0.7.4</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/us.codecraft/webmagic-extension -->
        <dependency>
            <groupId>us.codecraft</groupId>
            <artifactId>webmagic-extension</artifactId>
            <version>0.7.4</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.slf4j/slf4j-log4j12 -->
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
            <version>1.7.25</version>
        </dependency>
        <!-- Guava: provides the BloomFilter behind BloomFilterDuplicateRemover (used in JobProcessor) -->
        <dependency>
            <groupId>com.google.guava</groupId>
            <artifactId>guava</artifactId>
            <version>16.0</version>
        </dependency>
    </dependencies>
</project>
```

JobProcessor.java

```java
package cn.mwq.webmagic.test;

import us.codecraft.webmagic.Page;
import us.codecraft.webmagic.Site;
import us.codecraft.webmagic.Spider;
import us.codecraft.webmagic.processor.PageProcessor;
import us.codecraft.webmagic.scheduler.BloomFilterDuplicateRemover;
import us.codecraft.webmagic.scheduler.QueueScheduler;

public class JobProcessor implements PageProcessor {

    // Parse the page
    @Override
    public void process(Page page) {
        // Parse the page and put the results into ResultItems
        // CSS selector
        // page.putField("div", page.getHtml().css("div.mt h2").all());
        // XPath
        // page.putField("ul", page.getHtml().xpath("//ul[@id='navitems-group1']/li/a"));
        // Regular expression (超市 = "supermarket", matched against the page text)
        // page.putField("div3", page.getHtml().css("div#navitems-2014 a").regex(".*超市.*").all());

        // Result-handling API: all() returns every match, get()/toString() return the first one
        // page.putField("div4", page.getHtml().css("div#navitems-2014 a").regex(".*超市.*").get());
        // page.putField("div5", page.getHtml().css("div#navitems-2014 a").regex(".*超市.*").toString());

        // Extract links
        // page.addTargetRequests(page.getHtml().css("div.dtyw").links().all());
        // page.putField("url", page.getHtml().css("div.inside h2").all());

        // When the same request is added several times, the page is downloaded only once
        page.addTargetRequest("http://jundui.caigou2003.com/liluntansuo/4579143.html");
        page.addTargetRequest("http://jundui.caigou2003.com/liluntansuo/4579143.html");
        page.addTargetRequest("http://jundui.caigou2003.com/liluntansuo/4579143.html");
    }

    private Site site = Site.me()
            .setRetryTimes(3)          // number of retries
            .setSleepTime(5000)        // pause between requests, in ms
            .setTimeOut(10000)         // request timeout, in ms
            .setRetrySleepTime(3000)   // interval between retries, in ms
            .setCharset("utf-8")
            .setUserAgent(
                    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_2) AppleWebKit/537.31 (KHTML, like Gecko) Chrome/26.0.1410.65 Safari/537.31");

    @Override
    public Site getSite() {
        return this.site;
    }

    public static void main(String[] args) {
        // Spider.create(new JobProcessor())
        //         .addUrl("https://www.jd.com/allSort.aspx")
        //         .run(); // run the crawler
        Spider.create(new JobProcessor())
                .addUrl("http://jundui.caigou2003.com/")
                // Needs: import us.codecraft.webmagic.pipeline.FilePipeline;
                // .addPipeline(new FilePipeline("C:\\Users\\82789\\Desktop\\pipfile"))
                .setScheduler(new QueueScheduler()
                        .setDuplicateRemover(new BloomFilterDuplicateRemover(10000000)))
                .thread(2)
                .run(); // run the crawler
    }
}
```
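The three identical `addTargetRequest` calls in `JobProcessor` produce a single download because the scheduler checks every URL against a duplicate remover before queueing it. The sketch below illustrates that idea in plain JDK Java. It assumes a simple Bloom filter with double hashing. `SimpleBloomDuplicateRemover` and `DedupQueue` are hypothetical names and are not WebMagic classes. WebMagic's own `BloomFilterDuplicateRemover` is, as far as I know, built on Guava's `BloomFilter`, and the real `Scheduler` API differs.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayDeque;
import java.util.BitSet;
import java.util.Deque;

// Hypothetical, JDK-only sketch; NOT WebMagic's BloomFilterDuplicateRemover.
class SimpleBloomDuplicateRemover {
    private final BitSet bits;
    private final int numBits;
    private final int numHashes;

    SimpleBloomDuplicateRemover(int expectedInsertions, double falsePositiveRate) {
        if (expectedInsertions <= 0 || falsePositiveRate <= 0 || falsePositiveRate >= 1) {
            throw new IllegalArgumentException("need n > 0 and 0 < p < 1");
        }
        double ln2 = Math.log(2);
        // Standard sizing: m = -n * ln(p) / (ln 2)^2 bits, k = (m / n) * ln 2 hash functions
        this.numBits = (int) Math.ceil(-expectedInsertions * Math.log(falsePositiveRate) / (ln2 * ln2));
        this.numHashes = Math.max(1, (int) Math.round((double) numBits / expectedInsertions * ln2));
        this.bits = new BitSet(numBits);
    }

    // Returns true if the URL was (probably) seen before; otherwise records it and returns false.
    boolean isDuplicate(String url) {
        int h1 = url.hashCode();
        int h2 = fnv1a(url.getBytes(StandardCharsets.UTF_8));
        boolean allSet = true;
        for (int i = 0; i < numHashes; i++) {
            // Double hashing: derive the i-th bit position from two base hashes
            int index = Math.floorMod(h1 + i * h2, numBits);
            if (!bits.get(index)) {
                allSet = false;
                bits.set(index);
            }
        }
        return allSet;
    }

    // 32-bit FNV-1a hash over the UTF-8 bytes
    private static int fnv1a(byte[] data) {
        int hash = 0x811C9DC5;
        for (byte b : data) {
            hash ^= (b & 0xFF);
            hash *= 0x01000193;
        }
        return hash;
    }
}

// Hypothetical FIFO queue that drops URLs the remover has already seen
class DedupQueue {
    private final Deque<String> queue = new ArrayDeque<>();
    private final SimpleBloomDuplicateRemover remover;

    DedupQueue(SimpleBloomDuplicateRemover remover) {
        this.remover = remover;
    }

    void push(String url) {
        if (!remover.isDuplicate(url)) {
            queue.addLast(url);
        }
    }

    String poll() {
        return queue.pollFirst();
    }

    int size() {
        return queue.size();
    }
}

class BloomDedupDemo {
    public static void main(String[] args) {
        DedupQueue queue = new DedupQueue(new SimpleBloomDuplicateRemover(10_000, 0.01));
        String url = "http://jundui.caigou2003.com/liluntansuo/4579143.html";
        queue.push(url);
        queue.push(url);
        queue.push(url);
        System.out.println(queue.size()); // prints 1
    }
}
```

The Bloom filter trades exactness for memory. A URL that was already seen is never reported as new. However, a new URL can occasionally be reported as seen and skipped. The `10000000` passed to `BloomFilterDuplicateRemover` in `main` is the expected number of insertions that WebMagic uses to size its filter.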