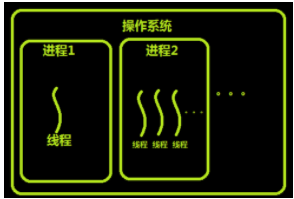

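A process is the smallest unit of resource allocation; a thread is the smallest unit of CPU scheduling.

The relationship between processes and threads is shown in the figure above.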

Starting multiple threads

    from threading import Thread
    import time, os

    def fun(a, b):
        n = a + b
        print(n, os.getpid())
        time.sleep(1)

    for i in range(10):  # multithreading
        t = Thread(target=fun, args=(i, 5))
        t.start()
        print(i, os.getpid())
    # Every line prints the same process ID (PID): all the threads live in one process.

Comparing multiprocessing and multithreading

    from threading import Thread
    from multiprocessing import Process
    import time

    def fun(n):
        n + 1

    if __name__ == '__main__':
        # Start and join 100 threads.
        start = time.time()
        t_lst = []
        for i in range(100):
            t = Thread(target=fun, args=(i,))
            t.start()
            t_lst.append(t)
        for t in t_lst:
            t.join()
        t1 = time.time() - start

        # Start and join 100 processes.
        start = time.time()
        t_lst = []
        for i in range(100):
            t = Process(target=fun, args=(i,))
            t.start()
            t_lst.append(t)
        for t in t_lst:
            t.join()
        t2 = time.time() - start

        print(t1, t2)
        # Sample output: 0.015152931213378906 3.884697437286377
        # For a trivial task like this, starting threads is far cheaper than starting processes.

Daemon threads

    import time
    from threading import Thread

    def func1():
        while 1:
            print('*' * 8)
            time.sleep(1)

    def func2():
        print('in func2')
        time.sleep(5)

    t = Thread(target=func1)
    t.daemon = True  # daemon thread
    t.start()
    t2 = Thread(target=func2)
    t2.start()

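Daemon thread: after the main thread's code has finished, the daemon thread keeps running until all other (non-daemon) child threads have finished, and only then does it end. In the example above, func1 keeps printing for about 5 seconds, until func2 returns.

(Daemon process, by contrast: it ends as soon as the main process's code has finished executing and does not wait for the other child processes.)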
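The behaviour described above can be checked with a small sketch. The function names `heartbeat` and `worker` are made up for illustration; the point is only that a daemon thread is still alive after a non-daemon thread has been joined, and is stopped only when the interpreter exits.

```python
import threading
import time

def heartbeat():
    # Hypothetical background task: loops forever, so it stops only at process exit.
    while True:
        time.sleep(0.05)

def worker():
    # Ordinary (non-daemon) thread: the interpreter waits for it before exiting.
    time.sleep(0.2)

daemon_t = threading.Thread(target=heartbeat, daemon=True)
worker_t = threading.Thread(target=worker)
daemon_t.start()
worker_t.start()

worker_t.join()
# The worker is done, but the daemon thread is still running at this point.
print(daemon_t.daemon, daemon_t.is_alive(), worker_t.is_alive())
```

Passing `daemon=True` to the constructor is equivalent to setting `t.daemon = True` before `start()`.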

Condition

    from threading import Thread, Condition

    def func(con, i):
        con.acquire()
        con.wait()  # block until a key is handed out
        print('in loop %s' % i)
        con.release()

    con = Condition()
    for i in range(10):
        Thread(target=func, args=(con, i)).start()
    while 1:
        num = int(input('>>>'))
        con.acquire()
        con.notify(num)  # hand out num keys (e.g. 3 lets three threads run); used keys are not returned
        con.release()
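One caveat with a bare `con.wait()` is that a notification sent before a thread starts waiting is lost. A minimal sketch of a common remedy, assuming a shared flag `ready` and a list `woken` (both names introduced here for illustration): `wait_for` re-checks a predicate, and `notify_all` wakes every waiter.

```python
import threading

cond = threading.Condition()
ready = False   # shared state, only changed while holding cond
woken = []      # records which waiters got through

def waiter(i):
    with cond:                          # acquire/release via context manager
        cond.wait_for(lambda: ready)    # returns at once if ready is already True
        woken.append(i)

threads = [threading.Thread(target=waiter, args=(i,)) for i in range(5)]
for t in threads:
    t.start()

with cond:
    ready = True
    cond.notify_all()   # wake every thread currently waiting

for t in threads:
    t.join()
print(sorted(woken))
```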

Timer

    from threading import Timer
    import time

    def func():
        print('time sync')

    while 1:
        Timer(2, func).start()  # the thread starts immediately; func runs 2 s later
        time.sleep(2)

A Timer calls its function only once the interval has elapsed, but the timer thread itself starts immediately.
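Since the timer thread is already running while it waits, it can still be cancelled during the interval. A short sketch, with the list `fired` and the tags being illustrative names only:

```python
from threading import Timer

fired = []

def func(tag):
    fired.append(tag)

t1 = Timer(0.1, func, args=('kept',))        # calls func('kept') after about 0.1 s
t2 = Timer(0.1, func, args=('cancelled',))
t1.start()
t2.start()
t2.cancel()   # cancelled during the wait, so func is never called for t2

t1.join()     # Timer is a Thread subclass, so join() works
t2.join()
print(fired)
```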

Thread pool

    import time
    from concurrent.futures import ThreadPoolExecutor

    def func(n):
        time.sleep(1)
        print(n)
        return n * n

    tpool = ThreadPoolExecutor(max_workers=5)
    t_lst = []
    for i in range(10):
        t = tpool.submit(func, i)  # returns a Future
        t_lst.append(t)
    tpool.shutdown()  # wait for all submitted tasks to finish
    print('main thread')
    for t in t_lst:
        print('***', t.result())

The thread pool ThreadPoolExecutor is used the same way as the process pool ProcessPoolExecutor; both are imported from concurrent.futures.
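A more compact variant of the same idea, as a sketch: the `with` statement calls `shutdown()` automatically, and `map` returns the results in input order. The function name `square` is illustrative.

```python
from concurrent.futures import ThreadPoolExecutor
import time

def square(n):
    time.sleep(0.05)   # stand-in for some blocking work
    return n * n

# Leaving the with-block waits for all tasks, like an explicit shutdown().
with ThreadPoolExecutor(max_workers=5) as pool:
    results = list(pool.map(square, range(10)))

print(results)
```

Swapping in `ProcessPoolExecutor` should only require changing the class name, although on platforms that spawn new interpreters the pool must then be created under an `if __name__ == '__main__':` guard.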

Callback functions

    import time
    from concurrent.futures import ThreadPoolExecutor

    def func(n):
        time.sleep(1)
        print(n)
        return n * n

    def call_back(m):
        # m is the finished Future; its return value is read with m.result()
        print('result from thread: %s' % m.result())

    tpool = ThreadPoolExecutor(max_workers=5)
    for i in range(10):
        tpool.submit(func, i).add_done_callback(call_back)

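This is similar to callbacks with processes; only the calling convention differs. Here the callback is attached with add_done_callback and receives the Future object, so the return value is read with .result().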
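A small sketch of collecting results through a callback instead of printing them. The names `results` and `results_lock` are assumptions added here; the lock is a precaution because callbacks may run in different worker threads.

```python
from concurrent.futures import ThreadPoolExecutor
import threading

results = []
results_lock = threading.Lock()

def func(n):
    return n * n

def call_back(future):
    # Runs once the Future is done; future.result() is func's return value.
    with results_lock:
        results.append(future.result())

with ThreadPoolExecutor(max_workers=5) as pool:
    for i in range(10):
        pool.submit(func, i).add_done_callback(call_back)

# Completion order is not guaranteed, so sort before printing.
print(sorted(results))
```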

Event

    import time
    import random
    from threading import Thread, Event

    def connect_db(e):
        count = 0
        while count < 3:
            e.wait(0.3)  # wait up to 0.3 s for the event to be set
            if e.is_set():
                print('connected')
                break
            else:
                count += 1
                print('connection attempt %s failed' % count)
        else:
            # reached only if the loop ends without break
            raise TimeoutError('database connection timed out')  # raise throws an exception explicitly

    def check_web(e):
        time.sleep(random.randint(0, 3))
        e.set()

    e = Event()
    t1 = Thread(target=connect_db, args=(e,))
    t2 = Thread(target=check_web, args=(e,))
    t1.start()
    t2.start()
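As a side note on the `wait` call used above: `Event.wait(timeout)` itself returns the state of the flag, so the separate `is_set()` check could be folded into it. A minimal sketch, with `setter`, `first` and `second` as illustrative names:

```python
from threading import Thread, Event
import time

e = Event()
first = e.wait(0.05)   # flag not set yet: returns False after the timeout

def setter():
    time.sleep(0.1)
    e.set()

Thread(target=setter).start()
second = e.wait(2)     # returns True as soon as set() has been called
print(first, second)
```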

Thread lock

    import time
    from threading import Thread, Lock

    def fun(lock):
        lock.acquire()
        global n
        team = n          # read the shared value
        time.sleep(0.2)   # without the lock, other threads would read the stale value here
        n = team - 1      # write it back
        lock.release()

    n = 10
    t_lst = []
    lock = Lock()
    for i in range(10):
        t = Thread(target=fun, args=(lock,))
        t_lst.append(t)
        t.start()
    for t in t_lst:
        t.join()
    print(n)

Locking sacrifices execution efficiency in exchange for data safety.
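The same read-modify-write protection can be written with the lock as a context manager, which releases it even if the body raises. A sketch with a shorter sleep and an illustrative function name `decrement`:

```python
import threading
import time

n = 10
lock = threading.Lock()

def decrement():
    global n
    with lock:            # acquire on entry, release on exit (even on error)
        tmp = n
        time.sleep(0.01)  # widen the window in which a race could occur
        n = tmp - 1

threads = [threading.Thread(target=decrement) for _ in range(10)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(n)
```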

The deadlock problem with mutex locks

    from threading import Thread, Lock
    import time

    noodle_lock = Lock()
    fork_lock = Lock()  # mutex locks

    def eat1(name):
        noodle_lock.acquire()
        print('%s got the noodles' % name)
        fork_lock.acquire()
        print('%s got the fork' % name)
        print('%s ate the noodles' % name)
        fork_lock.release()
        noodle_lock.release()

    def eat2(name):
        fork_lock.acquire()
        print('%s got the fork' % name)
        time.sleep(1)
        noodle_lock.acquire()  # opposite order to eat1: this is where the deadlock arises
        print('%s got the noodles' % name)
        print('%s ate the noodles' % name)
        fork_lock.release()
        noodle_lock.release()

    Thread(target=eat1, args=('dahuang',)).start()
    Thread(target=eat2, args=('大黄',)).start()
    Thread(target=eat1, args=('蜘蛛',)).start()
    Thread(target=eat2, args=('二狗',)).start()

Using a recursive lock to avoid the deadlock

    from threading import Thread, RLock
    import time

    fork_lock = noodle_lock = RLock()  # recursive lock: a bunch of keys on one ring
    # Two layers: once someone has entered the first layer, nobody else can get in,
    # so the mutex deadlock above cannot occur.

    def eat1(name):
        noodle_lock.acquire()
        print('%s got the noodles' % name)
        fork_lock.acquire()
        print('%s got the fork' % name)
        print('%s ate the noodles' % name)
        fork_lock.release()
        noodle_lock.release()

    def eat2(name):
        fork_lock.acquire()
        print('%s got the fork' % name)
        time.sleep(1)
        noodle_lock.acquire()
        print('%s got the noodles' % name)
        print('%s ate the noodles' % name)
        fork_lock.release()
        noodle_lock.release()

    Thread(target=eat1, args=('dahuang',)).start()
    Thread(target=eat2, args=('大黄',)).start()
    Thread(target=eat1, args=('蜘蛛',)).start()
    Thread(target=eat2, args=('二狗',)).start()
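Because fork_lock and noodle_lock are bound to the same RLock object, both resources are effectively guarded by a single lock. Another common way to avoid this kind of deadlock, not the one used in these notes, is to keep two separate Lock objects and acquire them in the same order in every thread. A minimal sketch, where `eat`, `ate` and the names passed in are illustrative:

```python
import threading
import time

noodle_lock = threading.Lock()
fork_lock = threading.Lock()
ate = []

def eat(name):
    # Every thread takes noodle_lock first and fork_lock second,
    # so no thread can hold one lock while waiting on the other in reverse.
    with noodle_lock:
        time.sleep(0.01)
        with fork_lock:
            ate.append(name)

names = ['a', 'b', 'c', 'd']
threads = [threading.Thread(target=eat, args=(name,)) for name in names]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sorted(ate))
```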

Queue

Three common queues

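    import queue

    # put()
    # get()
    # put_nowait()   does not wait, non-blocking
    # get_nowait()

    q = queue.Queue()          # queue: first in, first out
    q = queue.LifoQueue()      # stack: last in, first out
    q = queue.PriorityQueue()  # priority queue: the highest priority comes out first
    q.put((30, 'a'))           # left: the priority; right: the data put into the queue
    # The smaller the number, the higher the priority.
    # With equal priorities, the item with the smaller ASCII value comes out first.
    # All three queues are thread-safe.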
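The ordering rules above can be seen in a short sketch. The variable names are illustrative; the priority queue simply compares the `(priority, data)` tuples, which is why ties fall back to the data.

```python
import queue

fifo = queue.Queue()
lifo = queue.LifoQueue()
prio = queue.PriorityQueue()

for item in (3, 1, 2):
    fifo.put(item)
    lifo.put(item)
for item in ((30, 'a'), (10, 'c'), (10, 'b')):
    prio.put(item)

fifo_order = [fifo.get() for _ in range(3)]   # insertion order
lifo_order = [lifo.get() for _ in range(3)]   # reverse insertion order
prio_order = [prio.get() for _ in range(3)]   # smallest tuple first

# get_nowait() on an empty queue raises queue.Empty instead of blocking.
try:
    fifo.get_nowait()
    empty_raised = False
except queue.Empty:
    empty_raised = True

print(fifo_order, lifo_order, prio_order, empty_raised)
```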