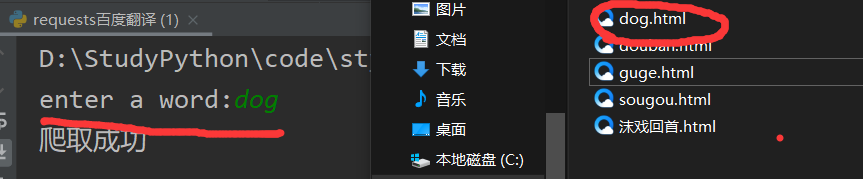

I. Baidu Translate

    import json
    import os

    import requests

    if __name__ == '__main__':
        word = input('enter a word:')
        url = 'https://fanyi.baidu.com/sug'
        # Pose as a regular browser (UA disguise)
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:82.0) Gecko/20100101 Firefox/82.0'
        }
        # Form data for the POST request: the word to look up
        data = {
            'kw': word
        }
        resp = requests.post(url=url, data=data, headers=headers)
        resp.encoding = 'utf-8'
        dic_obj = resp.json()
        # print(dic_obj)
        # Name of the output file (the content is JSON, the extension is kept as in the original)
        filename = word + '.html'
        # A raw string keeps the Windows backslashes literal
        path = os.path.join(r'D:\Study\Python\scrapy', filename)
        # print(path)
        with open(path, 'w', encoding='utf-8') as fp:
            json.dump(dic_obj, fp=fp, ensure_ascii=False)
        print('Scrape succeeded')
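
The save step is the part of this script that is easiest to get wrong: a backslash placed right before the closing quote of a Windows path escapes the quote, and a file opened without `with` may never be closed. The following is a minimal sketch of one way to isolate that step, not the author's method. The helper name `save_json`, the `.json` extension, and the sample dictionary are assumptions made for illustration.

```python
import json
import tempfile
from pathlib import Path


def save_json(obj, directory, stem):
    """Write obj as UTF-8 JSON to <directory>/<stem>.json and return the path.

    Hypothetical helper: the name and the .json extension are illustrative.
    """
    out_dir = Path(directory)
    # Create the target folder if it does not exist yet.
    out_dir.mkdir(parents=True, exist_ok=True)
    # pathlib joins path parts itself, so no trailing backslash is needed.
    out_path = out_dir / f"{stem}.json"
    # The with-block closes the file even if json.dump raises.
    with out_path.open("w", encoding="utf-8") as fp:
        # ensure_ascii=False keeps Chinese text readable in the file.
        json.dump(obj, fp, ensure_ascii=False, indent=2)
    return out_path


if __name__ == "__main__":
    # Made-up sample data, only loosely shaped like a translation reply.
    sample = {"errno": 0, "data": [{"k": "dog", "v": "n. 狗"}]}
    with tempfile.TemporaryDirectory() as tmp:
        path = save_json(sample, tmp, "dog")
        print(path.name)
        print(path.read_text(encoding="utf-8"))
```

In the script above, `save_json(dic_obj, r'D:\Study\Python\scrapy', word)` would take the place of the `open` and `json.dump` calls.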

II. Douban movie chart (comedy), with a user-defined start position and count

    import json

    import requests

    if __name__ == '__main__':
        first = input('Start from which position: ')
        count = input('How many to scrape: ')
        url = 'https://movie.douban.com/j/chart/top_list'
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:82.0) Gecko/20100101 Firefox/82.0'
        }
        # Query-string parameters of the chart request
        param = {
            'type': '24',
            'interval_id': '100:90',
            'action': '',
            'start': first,   # index of the first movie to fetch
            'limit': count,   # number of movies to fetch
        }
        resp = requests.get(url=url, params=param, headers=headers)
        resp.encoding = 'utf-8'
        list_data = resp.json()
        # print(list_data)
        # A raw string keeps the Windows backslashes literal
        with open(r'D:\Study\Python\scrapy\douban.html', 'w', encoding='utf-8') as fp:
            json.dump(list_data, fp=fp, ensure_ascii=False)
        print('Scrape succeeded')
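
To see what the `params` dictionary turns into, the sketch below builds the query string with the standard library's `urlencode`, without sending anything. It also converts the two user inputs to integers, which the script above does not do. The function name `build_chart_url` and the validation rules are assumptions for illustration, and the URL that `requests` actually sends should look roughly like this one rather than be taken as guaranteed identical.

```python
from urllib.parse import urlencode

CHART_URL = "https://movie.douban.com/j/chart/top_list"


def build_chart_url(start, limit):
    """Return the chart URL for `limit` movies beginning at index `start`.

    Hypothetical helper: it only builds the URL, it sends no request.
    """
    # int() raises ValueError for input such as "abc".
    start, limit = int(start), int(limit)
    if start < 0 or limit <= 0:
        raise ValueError("start must be >= 0 and limit must be > 0")
    params = {
        "type": "24",
        "interval_id": "100:90",
        "action": "",
        "start": start,
        "limit": limit,
    }
    # urlencode percent-encodes reserved characters such as ':'.
    return f"{CHART_URL}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_chart_url("0", "5"))
```

Validating before the request means a typo at the prompt fails locally instead of producing an empty or error response from the server.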

III. Web page collector (essentially just a browser search)

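    import os

    import requests

    # User-Agent: the identity of the request carrier (either a browser or a crawler).
    # UA detection: a portal site's server checks the carrier identity of each request.
    # If it identifies a browser, the request is treated as normal (sent by a user), e.g.
    # User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:82.0) Gecko/20100101 Firefox/82.0
    # If the carrier identity is not a browser, the request is treated as coming from
    # a crawler, and the server may reject it.
    # UA disguise: make the crawler's carrier identity look like a browser.
    if __name__ == "__main__":
        kw = input("Enter the keyword to search for: ")
        # UA disguise: put the chosen User-Agent into a dictionary
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:82.0) Gecko/20100101 Firefox/82.0'
        }
        url = 'https://www.baidu.com/s'
        # Request parameters
        param = {
            'wd': kw
        }
        # Send a GET request with parameters to the specified URL
        resp = requests.get(url=url, params=param, headers=headers)
        # Avoid garbled Chinese text
        resp.encoding = 'utf-8'
        page_text = resp.text
        print(page_text)
        # Name of the output file
        filename = kw + '.html'
        # A raw string keeps the Windows backslashes literal
        path = os.path.join(r'D:\Study\Python\scrapy', filename)
        with open(path, 'w', encoding='utf-8') as fp:
            fp.write(page_text)
        print(filename, 'saved successfully')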
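
The effect of UA disguise can be inspected without contacting any server. The sketch below uses the standard library's `urllib` rather than `requests`, purely so that it runs with no extra packages. It prints the identity urllib announces by default, then the one carried by a request built with the browser string. The function names are assumptions for illustration. `requests` behaves analogously and announces a `python-requests/...` identity unless a `User-Agent` header is passed.

```python
from urllib.request import Request, build_opener

# Browser identity string used by the script above.
BROWSER_UA = ('Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:82.0) '
              'Gecko/20100101 Firefox/82.0')


def default_user_agent():
    """Return the User-Agent that urllib announces when none is supplied."""
    return dict(build_opener().addheaders)['User-agent']


def disguised_request(url):
    """Build (but do not send) a request carrying the browser User-Agent."""
    return Request(url, headers={'User-Agent': BROWSER_UA})


if __name__ == '__main__':
    # Without disguise the carrier identifies itself as a Python client.
    print('default  :', default_user_agent())
    req = disguised_request('https://www.baidu.com/s?wd=python')
    # urllib stores header names capitalized as 'User-agent'.
    print('disguised:', req.get_header('User-agent'))
```

A server that filters on the header sees only the second value, which is why the scripts in this post pass `headers` on every call.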