import urllib

import urllib.request as ur

from bs4 import BeautifulSoup

import xlrd

import jieba

from scipy.misc import imread

from wordcloud import WordCloud, STOPWORDS

list1=[]

def getHtml(url):

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.101 Safari/537.36'}

req = urllib.request.Request(url, headers=headers)

htmls=urllib.request.urlopen(req).read()

return htmls

list = []

def getinfor(url,fdata):

print(url)

htmls = getHtml(url)

soup=BeautifulSoup(htmls,'lxml')

table2 = soup.select('tr')

for i in range(len(table2)):

if (i % 2 == 0):

list_data = []

for j in table2[i].select('td'):

list_data.append(j.get_text().strip())

list.append(list_data)

return list

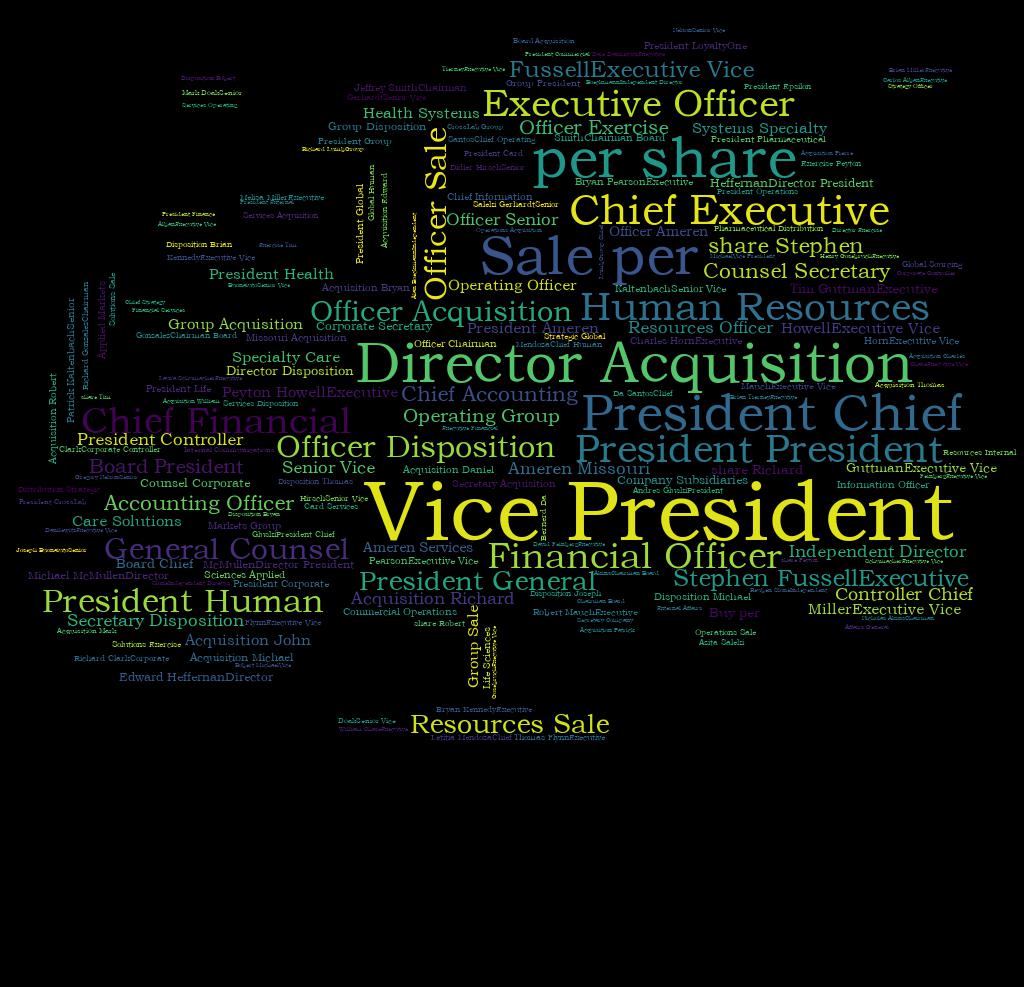

def wordcloud():

content = open('rm.txt', 'r', encoding='utf-8').read()

words = jieba.cut(content, cut_all=True)

words_split = " ".join(words)

print(words_split)

background_pic = imread('tree.JPG')

word_c= WordCloud(

width=2000,

height=2000,

margin=2,

background_color='BLACK',

mask=background_pic,

font_path='C:WindowsFontsSTZHONGS.TTF',

stopwords=STOPWORDS,

max_font_size=500,

random_state=100

)

word_c.generate_from_text(words_split)

word_c.to_file('tree_1.JPG')

if __name__=='__main__':

all_data = []

data = xlrd.open_workbook(r'symbol.xlsx')

table = data.sheets()[0]

nrows = table.nrows

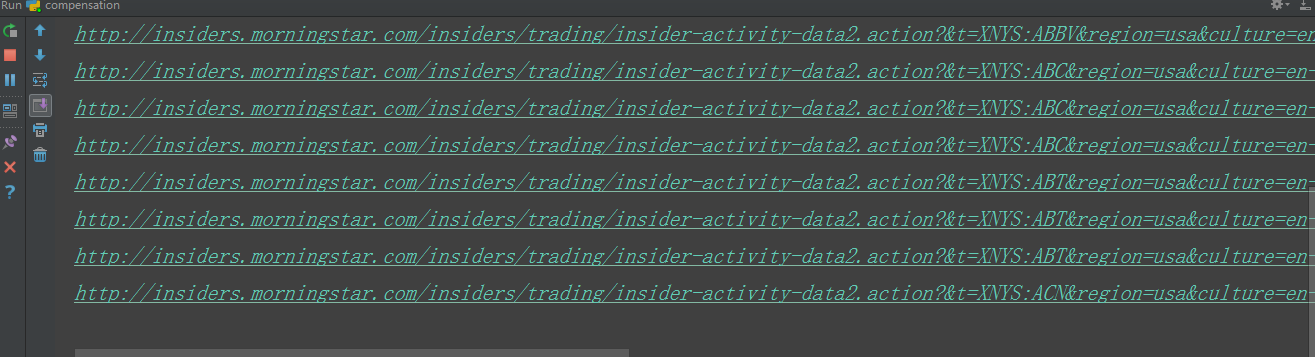

for i in range(0, nrows):

data1 = table.cell(i, 0).value

url = 'http://insiders.morningstar.com/insiders/trading/insider-activity-data2.action?&t=XNYS:'+str(data1)+'®ion=usa&culture=en-US&cur=&yc=2&tc=&title=&recordCount=143&pageIndex='

num = 0

for i in range(3):

pageurl = url + str(num) + '&pageSize=50&init=false&orderBy=date&order=desc&contentOps=page_list&_=1505217377066'

num += 1

getHtml(pageurl)

all_data.append(getinfor(pageurl,data1))

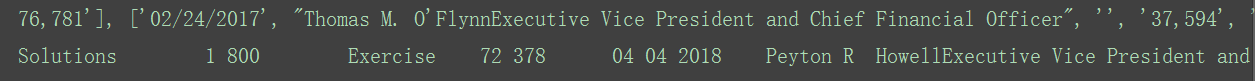

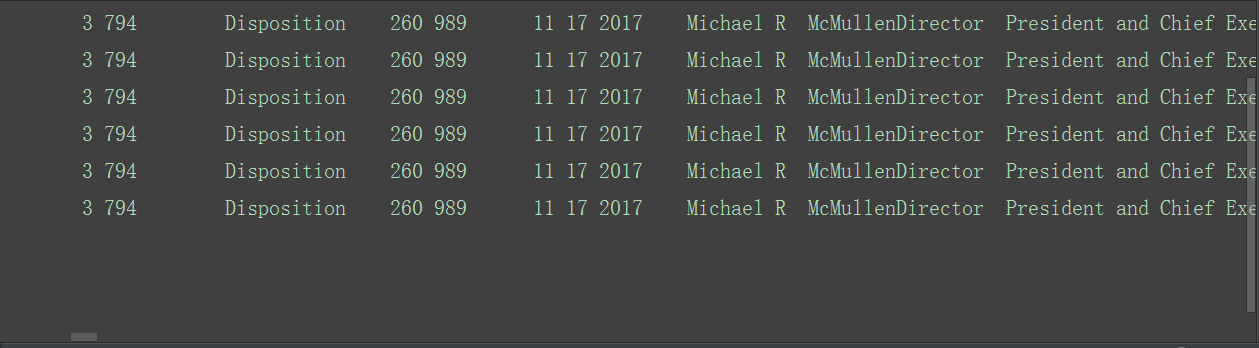

name = open('rm.txt', 'w')

for dd in all_data:

name.write(str(dd) + '

')

name.close()

wordcloud()

一.程序运用了三个主要函数:获取url函数,获取网页文本信息函数,生成词云函数

1.

def getHtml(url)

应对网站的反爬虫机制,添加了'User-Agent'

2.

def getinfor(url,fdata)

运用Beautifulsoup函数对html进行分析,并将数据返回到主函数中,存在txt文本文件中,便于调用

3.

def wordcloud()

运用jieba对爬取的文本文件进行分词,并配置生成词云的图片的各属性

二.遇到的问题

1.爬取速度过慢

解决方法:1.运用scrapy框架进行爬取,因为该网站的反爬虫机制,需要设置自动代理ip

# -*- coding: utf-8 -*-

import random

# Scrapy settings for wordclound project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://doc.scrapy.org/en/latest/topics/settings.html

# https://doc.scrapy.org/en/latest/topics/downloader-middleware.html

# https://doc.scrapy.org/en/latest/topics/spider-middleware.html

@setting

BOT_NAME = 'wordclound'

DEFAULT_REQUEST_HEADERS = {

'user-agent': 'Mozilla/5.0 (Windows NT 6.3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36',

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',

'Accept-Language': 'zh-CN,zh;q=0.8',

'Connection': 'keep-alive',

'Host': 'https://xueqiu.com/'}

SPIDER_MODULES = ['wordclound.spiders']

NEWSPIDER_MODULE = 'wordclound.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

#USER_AGENT = 'wordclound (+http://www.yourdomain.com)'

# Obey robots.txt rules

ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}

# Enable or disable spider middlewares

# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'wordclound.middlewares.WordcloundSpiderMiddleware': 543,

#}

# Enable or disable downloader middlewares

# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'wordclound.middlewares.WordcloundDownloaderMiddleware': 543,

#}

# Enable or disable extensions

# See https://doc.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}

# Configure item pipelines

# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html

#ITEM_PIPELINES = {

# 'wordclound.pipelines.WordcloundPipeline': 300,

#}

# Enable and configure the AutoThrottle extension (disabled by default)

# See https://doc.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

# 超时设置

RETRY_ENABLED = True

RETRY_HTTP_CODES = [500, 503, 504, 400, 403, 404, 408]

RETRY_TIMES = 3

DOWNLOADER_MIDDLEWARES = {

'scrapy.contrib.downloadermiddleware.retry.RetryMiddleware': 80,

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 110,

'wordclound.middlewares.ProxyMiddleware': 100,

}

PROXIES = [

{'ip_port': 'https://222.186.52.136:90'},

{'ip_port': 'https://36.111.205.166:80'},

{'ip_port': 'https://211.22.55.39:3128'},

{'ip_port': 'https://54.223.94.193:3128'},

{'ip_port': 'https://60.205.205.48:80'},

{'ip_port': 'https://124.47.7.38:80'},

{'ip_port': 'https://183.219.159.202:8123'},

]

USER_AGENTS = [

"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; AcooBrowser; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0; Acoo Browser; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.0.04506)",

"Mozilla/4.0 (compatible; MSIE 7.0; AOL 9.5; AOLBuild 4337.35; Windows NT 5.1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",

"Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US)",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 2.0.50727; Media Center PC 6.0)",

"Mozilla/5.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 1.0.3705; .NET CLR 1.1.4322)",

"Mozilla/4.0 (compatible; MSIE 7.0b; Windows NT 5.2; .NET CLR 1.1.4322; .NET CLR 2.0.50727; InfoPath.2; .NET CLR 3.0.04506.30)",

"Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN) AppleWebKit/523.15 (KHTML, like Gecko, Safari/419.3) Arora/0.3 (Change: 287 c9dfb30)",

"Mozilla/5.0 (X11; U; Linux; en-US) AppleWebKit/527+ (KHTML, like Gecko, Safari/419.3) Arora/0.6",

"Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.2pre) Gecko/20070215 K-Ninja/2.1.1",

"Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9) Gecko/20080705 Firefox/3.0 Kapiko/3.0",

"Mozilla/5.0 (X11; Linux i686; U;) Gecko/20070322 Kazehakase/0.4.5",

"Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.8) Gecko Fedora/1.9.0.8-1.fc10 Kazehakase/0.5.6",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",

"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_3) AppleWebKit/535.20 (KHTML, like Gecko) Chrome/19.0.1036.7 Safari/535.20",

"Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52",

]

# ITEM_PIPELINES = {

# 'wordclound.pipelines.BossJobPipeline': 300,

# }

# -*- coding: utf-8 -*-

# Define here the models for your spider middleware

#

# See documentation in:

# https://doc.scrapy.org/en/latest/topics/spider-middleware.html

from scrapy import signals

import requests

import schedule

class WordcloundSpiderMiddleware(object):

# Not all methods need to be defined. If a method is not defined,

# scrapy acts as if the spider middleware does not modify the

# passed objects.

@classmethod

def from_crawler(cls, crawler):

# This method is used by Scrapy to create your spiders.

s = cls()

crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)

return s

def process_spider_input(self, response, spider):

# Called for each response that goes through the spider

# middleware and into the spider.

# Should return None or raise an exception.

return None

def process_spider_output(self, response, result, spider):

# Called with the results returned from the Spider, after

# it has processed the response.

# Must return an iterable of Request, dict or Item objects.

for i in result:

yield i

def process_spider_exception(self, response, exception, spider):

# Called when a spider or process_spider_input() method

# (from other spider middleware) raises an exception.

# Should return either None or an iterable of Response, dict

# or Item objects.

pass

def process_start_requests(self, start_requests, spider):

# Called with the start requests of the spider, and works

# similarly to the process_spider_output() method, except

# that it doesn’t have a response associated.

# Must return only requests (not items).

for r in start_requests:

yield r

def spider_opened(self, spider):

spider.logger.info('Spider opened: %s' % spider.name)

class WordcloundDownloaderMiddleware(object):

# Not all methods need to be defined. If a method is not defined,

# scrapy acts as if the downloader middleware does not modify the

# passed objects.

@classmethod

def from_crawler(cls, crawler):

# This method is used by Scrapy to create your spiders.

s = cls()

crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)

return s

def process_request(self, request, spider):

# Called for each request that goes through the downloader

# middleware.

# Must either:

# - return None: continue processing this request

# - or return a Response object

# - or return a Request object

# - or raise IgnoreRequest: process_exception() methods of

# installed downloader middleware will be called

return None

def process_response(self, request, response, spider):

# Called with the response returned from the downloader.

# Must either;

# - return a Response object

# - return a Request object

# - or raise IgnoreRequest

return response

def process_exception(self, request, exception, spider):

# Called when a download handler or a process_request()

# (from other downloader middleware) raises an exception.

# Must either:

# - return None: continue processing this exception

# - return a Response object: stops process_exception() chain

# - return a Request object: stops process_exception() chain

pass

def spider_opened(self, spider):

spider.logger.info('Spider opened: %s' % spider.name)

class ProxyMiddleware(object):

def process_request(self, request, spider):

url = ""

proxy = 'http://' + requests.get(url=url).content.decode()

print("**********ProxyMiddle no pass************" + proxy)

requests.meta['proxy'] = proxy

def process_do(self, spider):

do = self.process_request

schedule.every(5).seconds.do(do)