https://www.g-truc.net/post-0597.html

https://michaldrobot.com/2014/04/01/gcn-execution-patterns-in-full-screen-passes/

https://fgiesen.wordpress.com/2011/07/10/a-trip-through-the-graphics-pipeline-2011-part-8/

https://www.comp.nus.edu.sg/~cs2100/2_resources/AppendixA_Graphics_and_Computing_GPUs.pdf

https://en.wikipedia.org/wiki/Graphics_Core_Next

https://en.wikipedia.org/wiki/Single_instruction,_multiple_threads

This is what the term means in physics:

- Wavefront

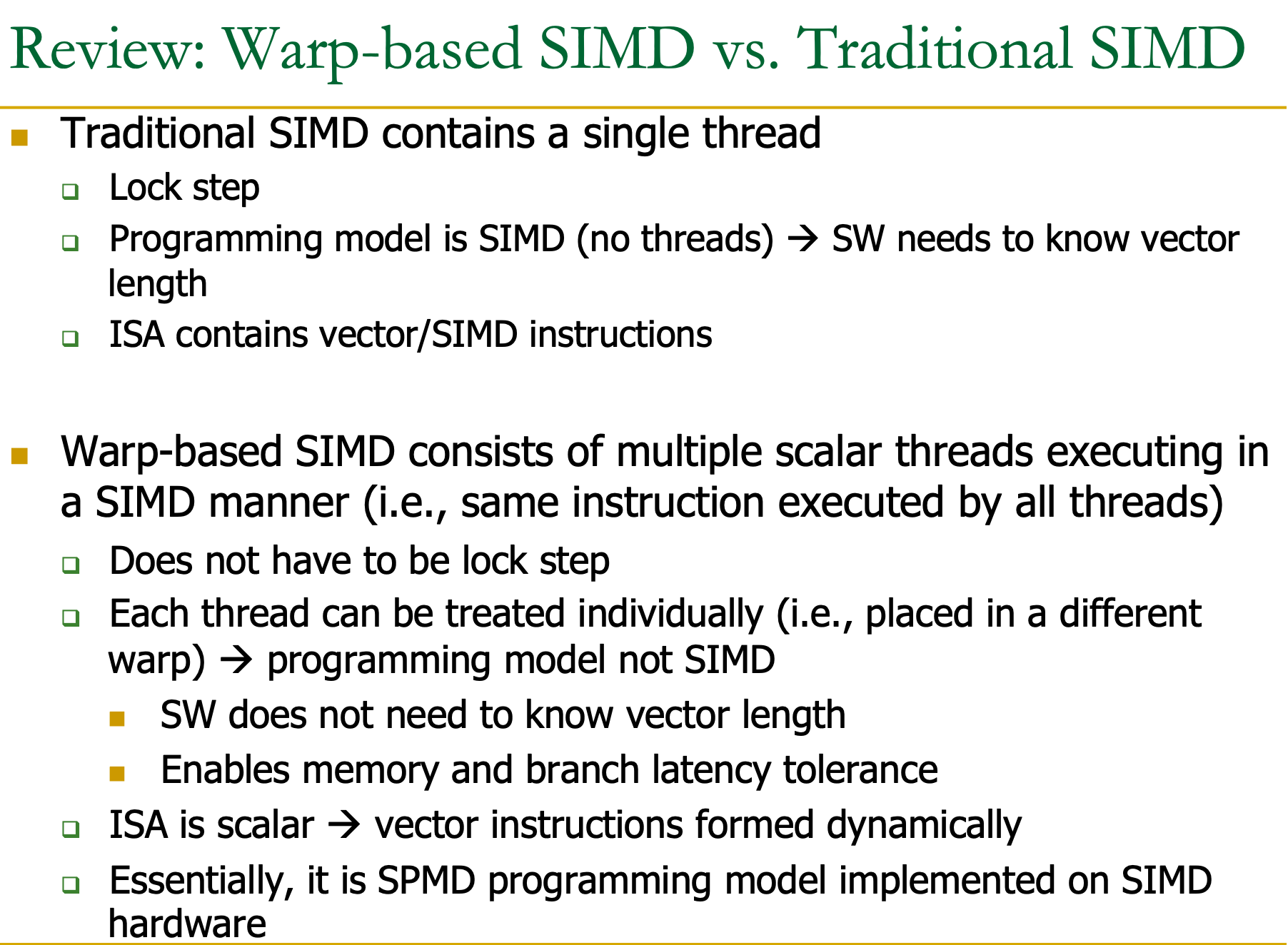

- A 'shader' is a small program written in GLSL that performs graphics processing, and a 'kernel' is a small program written in OpenCL that does GPGPU processing. These programs don't need that many registers, but they do need to load data from system or graphics memory, and that operation comes with significant latency. AMD and Nvidia chose similar approaches to hide this unavoidable latency: grouping multiple threads together.

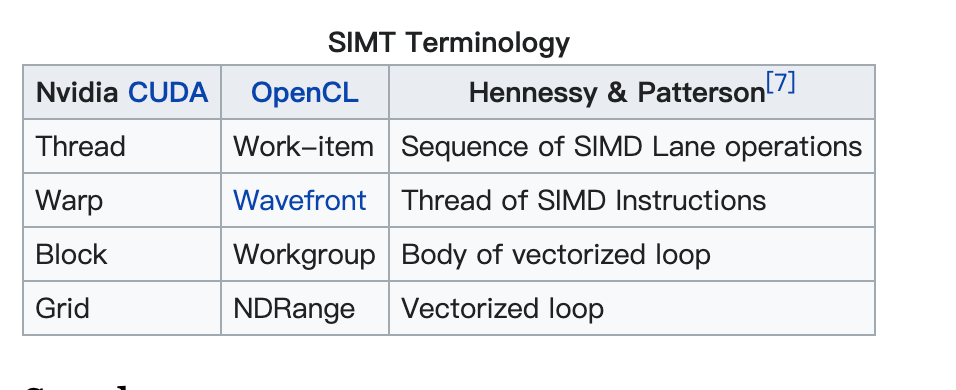

- AMD calls such a group a wavefront, Nvidia calls it a warp. On GPUs that hide latency this way, a group of threads is the most basic unit of scheduling. It is also the minimum size of data processed in SIMD fashion and the smallest executable unit of code: a single instruction is processed over all of the threads in the group at the same time.
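A rough way to see why grouping threads hides memory latency: while one group waits on a load, the scheduler can issue instructions from another. The sketch below is a toy model, not a description of any real GPU. It assumes each group alternates between `compute_cycles` of ALU work and `latency_cycles` of waiting, and that switching between groups is free. The function name and all numbers are made up for illustration.

```python
def alu_utilization(resident_groups, compute_cycles, latency_cycles):
    """Fraction of cycles the ALU is busy in an idealized latency-hiding model.

    Assumptions (hypothetical, for illustration only):
    - every group repeats: compute_cycles of work, then latency_cycles of waiting
    - the scheduler switches to any ready group at zero cost
    """
    period = compute_cycles + latency_cycles
    # Each group keeps the ALU busy for compute_cycles out of every period;
    # utilization cannot exceed 100%.
    return min(1.0, resident_groups * compute_cycles / period)


# Hypothetical numbers: 4 cycles of work per 36 cycles of memory latency.
for groups in (1, 5, 10):
    print(groups, "groups ->", alu_utilization(groups, 4, 36))
```

In this model a single group leaves the ALU mostly idle, and adding resident groups fills the gaps until the ALU is saturated.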

In all GCN-GPUs, a "wavefront" consists of 64 threads, and in all Nvidia GPUs a "warp" consists of 32 threads.
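Since the group is the smallest unit that gets scheduled, a thread count that is not a multiple of the group size still occupies whole groups, with the leftover lanes idle. A small sketch of that arithmetic, using the 64 and 32 sizes quoted above (the helper names are made up for this note):

```python
WAVEFRONT_SIZE = 64  # AMD GCN, per the sentence above
WARP_SIZE = 32       # Nvidia, per the sentence above


def groups_needed(thread_count, group_size):
    """Number of whole groups required to cover thread_count threads."""
    return (thread_count + group_size - 1) // group_size  # integer ceiling


def idle_lanes(thread_count, group_size):
    """Lanes in the last group that have no thread assigned to them."""
    return groups_needed(thread_count, group_size) * group_size - thread_count


threads = 100  # arbitrary example value
print("wavefronts:", groups_needed(threads, WAVEFRONT_SIZE),
      "idle lanes:", idle_lanes(threads, WAVEFRONT_SIZE))
print("warps:", groups_needed(threads, WARP_SIZE),
      "idle lanes:", idle_lanes(threads, WARP_SIZE))
```

For 100 threads this gives 2 wavefronts or 4 warps, with 28 idle lanes in both cases.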

warp--nvidia

wavefront--amd

In other words, it is a group of these:

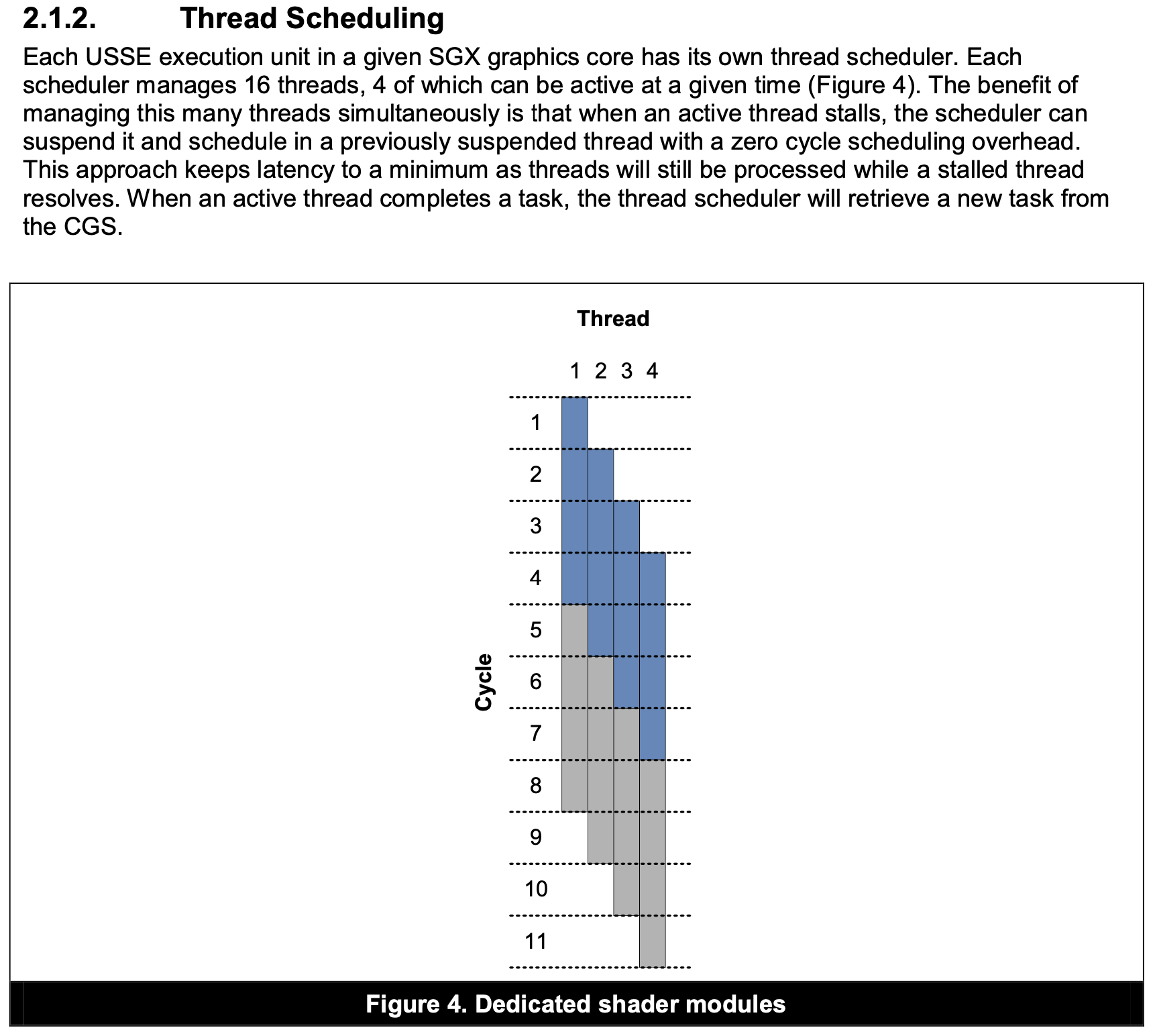

Here, one group is 16 threads.

On an uninitialized RT, it shows up as a grid of fairly large square blocks, one after another.