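There is already plenty of material online about gRPC load balancing on k8s, so I won't repeat it here; let's go straight to the code. The gRPC client is built with beego, and the server listing below uses iris for its HTTP endpoint. (I was lazy and simply put the source on one of the k8s master nodes.) First, a note on my k8s version.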

Server:

The protocol is defined in protos/hello.proto:

    syntax = "proto3";

    option go_package = "./;proto";
    package protos;

    service Greeter {
        rpc SayHello (HelloRequest) returns (HelloReply);
    }

    message HelloRequest {
        string name = 1;
    }

    message HelloReply {
        string message = 1;
    }

Then change into that directory and run:

    protoc --go_out=plugins=grpc:./ ./hello.proto

To keep things simple, I added the server methods directly to main.go:

    package main

    import (
        "context"
        "fmt"
        "net"

        pb "grpcdemo/protos"

        "github.com/kataras/iris/v12"
        "github.com/kataras/iris/v12/middleware/logger"
        "github.com/kataras/iris/v12/middleware/recover"
        "google.golang.org/grpc"
        "google.golang.org/grpc/reflection"
    )

    func main() {
        // Serve blocks, so run the gRPC server in its own goroutine;
        // otherwise the HTTP server below would never start.
        go GRPCServer()

        // HTTP
        app := iris.New()
        app.Use(recover.New())
        app.Use(logger.New())
        app.Handle("GET", "/", func(ctx iris.Context) {
            ctx.WriteString("pong")
        })
        app.Run(iris.Addr(":8080"))
    }

    func GRPCServer() {
        // Listen on the local port.
        listener, err := net.Listen("tcp", ":9090")
        if err != nil {
            fmt.Printf("failed to listen: %v\n", err)
            return
        }
        s := grpc.NewServer()                  // create the gRPC server
        pb.RegisterGreeterServer(s, &server{}) // register the service on the gRPC server
        reflection.Register(s)                 // lets grpcurl list the services

        fmt.Println("grpc serve 9090")
        if err := s.Serve(listener); err != nil {
            fmt.Printf("failed to serve: %v\n", err)
        }
    }

    type server struct{}

    func NewServer() *server {
        return &server{}
    }

    // SayHello echoes the caller's name together with this pod's IP,
    // so the response shows which server instance handled the request.
    func (s *server) SayHello(ctx context.Context, in *pb.HelloRequest) (*pb.HelloReply, error) {
        msg := "Request By:" + in.Name + " Response By :" + LocalIp()
        fmt.Println("GRPC Send: ", msg)
        return &pb.HelloReply{Message: msg}, nil
    }

    // LocalIp returns the last non-loopback IPv4 address, or "localhost" if none is found.
    func LocalIp() string {
        addrs, _ := net.InterfaceAddrs()
        ip := "localhost"
        for _, address := range addrs {
            if ipnet, ok := address.(*net.IPNet); ok && !ipnet.IP.IsLoopback() {
                if ipnet.IP.To4() != nil {
                    ip = ipnet.IP.String()
                }
            }
        }
        return ip
    }

The other server files are as follows. Dockerfile:

    FROM golang:1.15.6

    RUN mkdir -p /app
    RUN mkdir -p /app/conf
    RUN mkdir -p /app/logs

    WORKDIR /app

    ADD main /app/main

    EXPOSE 8080
    EXPOSE 9090

    CMD ["./main"]

build.sh

    #!/bin/bash
    #cd $WORKSPACE
    export GOPROXY=https://goproxy.io

    # Resolve dependencies according to go.mod.
    go mod tidy

    # Build for Linux
    CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build -o main

    # Build the Docker image; a Dockerfile must exist in the current directory, otherwise the build fails
    docker build -t grpcserver .
    docker tag grpcserver 192.168.100.30:8080/go/grpcserver:2021

    docker login -u admin -p '123456' 192.168.100.30:8080
    # Push the tag that deploy.yaml references
    docker push 192.168.100.30:8080/go/grpcserver:2021

    docker rmi grpcserver
    docker rmi 192.168.100.30:8080/go/grpcserver:2021

deploy.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: grpcserver
      namespace: go
      labels:
        name: grpcserver
    spec:
      replicas: 3
      minReadySeconds: 10
      selector:
        matchLabels:
          name: grpcserver
      template:
        metadata:
          labels:
            name: grpcserver
        spec:
          imagePullSecrets:
          - name: regsecret
          containers:
          - name: grpcserver
            image: 192.168.100.30:8080/go/grpcserver:2021
            ports:
            - containerPort: 8080
            - containerPort: 9090
            imagePullPolicy: Always
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: grpcserver
      namespace: go
    spec:
      type: NodePort
      ports:
      - port: 8080
        targetPort: 8080
        name: httpserver
        protocol: TCP
      - port: 9090
        targetPort: 9090
        name: grpcserver
        protocol: TCP
      selector:
        name: grpcserver
    ---
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      namespace: go
      name: grpcserver
      annotations:
        kubernetes.io/ingress.class: "nginx"
        nginx.ingress.kubernetes.io/grpc-backend: "true"
        nginx.ingress.kubernetes.io/ssl-redirect: "true"
        nginx.ingress.kubernetes.io/backend-protocol: "GRPC"
    spec:
      rules:
      - host: grpc.k8s.com
        http:
          paths:
          - backend:
              serviceName: grpcserver
              servicePort: 9090
      tls:
      - secretName: grpcs-secret
        hosts:
        - grpc.k8s.com

Client:

The call is made mainly in controllers/default.go.

Other related files. Dockerfile:

    FROM golang:1.15.6

    RUN mkdir -p /app
    RUN mkdir -p /app/conf
    RUN mkdir -p /app/logs

    WORKDIR /app

    ADD main /app/main

    EXPOSE 8080

    CMD ["./main"]

build.sh

    #!/bin/bash
    #cd $WORKSPACE
    export GOPROXY=https://goproxy.io

    # Resolve dependencies according to go.mod.
    go mod tidy

    # Build for Linux
    CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build -o main

    # Build the Docker image; a Dockerfile must exist in the current directory, otherwise the build fails
    docker build -t grpcclient .
    docker tag grpcclient 192.168.100.30:8080/go/grpcclient:2021

    docker login -u admin -p '123456' 192.168.100.30:8080
    # Push the tag that deploy.yaml references
    docker push 192.168.100.30:8080/go/grpcclient:2021

    docker rmi grpcclient
    docker rmi 192.168.100.30:8080/go/grpcclient:2021

deploy.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: grpcclient
      namespace: go
      labels:
        name: grpcclient
    spec:
      replicas: 3
      minReadySeconds: 10
      selector:
        matchLabels:
          name: grpcclient
      template:
        metadata:
          labels:
            name: grpcclient
        spec:
          imagePullSecrets:
          - name: regsecret
          containers:
          - name: grpcserver
            image: 192.168.100.30:8080/go/grpcclient:2021
            ports:
            - containerPort: 8080
            - containerPort: 9090
            imagePullPolicy: Always
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: grpcclient
      namespace: go
    spec:
      type: ClusterIP
      ports:
      - port: 8080
        targetPort: 8080
        protocol: TCP
      selector:
        name: grpcclient

Deploy

Preparation:

    # Install grpcurl
    wget https://github.com/fullstorydev/grpcurl/releases/download/v1.8.0/grpcurl_1.8.0_linux_x86_64.tar.gz
    tar -xvf grpcurl_1.8.0_linux_x86_64.tar.gz
    chmod +x grpcurl
    # I already have a Go environment locally, so I put the binary in its bin directory
    mv grpcurl /usr/local/go/bin

    # Certificate
    openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout grpcs.key -out grpcs.crt -subj "/CN=grpc.k8s.com/O=grpc.k8s.com"
    ls
    grpcs.crt grpcs.key
    # An Ingress can only use a TLS secret from its own namespace; add -n go
    # if your current context does not already target the go namespace.
    kubectl create secret tls grpcs-secret --key grpcs.key --cert grpcs.crt
    #kubectl delete secret grpcs-secret
    #openssl x509 -in grpcs.crt -out grpcs.pem
    #cp grpcs.crt /usr/local/share/ca-certificates
    #update-ca-certificates

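To deploy either project, change into its directory, run build.sh, and then run kubectl apply -f deploy.yaml.

1. With a clusterIP assigned, the result is as follows:

2. gRPC client with a long-lived connection

Now we modify the program so that the gRPC client uses a long-lived connection. Create an init.go file under controllers: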

    package controllers

    import (
        "fmt"

        pb "grpcclient/protos"

        "github.com/astaxie/beego/logs"
        "google.golang.org/grpc"
    )

    // GrpcClient is shared by all requests, so they all reuse one connection.
    var GrpcClient pb.GreeterClient

    func init() {
        conn, err := grpc.Dial("grpcserver:9090", grpc.WithInsecure())
        if err != nil {
            msg := fmt.Sprintf("grpc client did not connect: %v ", err)
            logs.Error(msg)
            return // conn is nil here, so do not build a client on top of it
        }
        GrpcClient = pb.NewGreeterClient(conn)
    }

default.go is as follows:

    package controllers

    import (
        "context"
        "fmt"
        "net"

        pb "grpcclient/protos"

        "github.com/astaxie/beego"
    )

    type MainController struct {
        beego.Controller
    }

    // Get calls SayHello over the shared connection and writes the reply to the HTTP response.
    func (c *MainController) Get() {
        client := GrpcClient

        req := pb.HelloRequest{Name: "gavin_" + LocalIp()}

        res, err := client.SayHello(context.Background(), &req)
        if err != nil {
            msg := fmt.Sprintf("grpc client client.SayHello has err:%v ", err)
            c.Ctx.WriteString(msg)
            return
        }

        c.Ctx.WriteString("GRPC Client Received:" + res.Message)
    }

    // LocalIp returns the last non-loopback IPv4 address, or "localhost" if none is found.
    func LocalIp() string {
        addrs, _ := net.InterfaceAddrs()
        ip := "localhost"
        for _, address := range addrs {
            if ipnet, ok := address.(*net.IPNet); ok && !ipnet.IP.IsLoopback() {
                if ipnet.IP.To4() != nil {
                    ip = ipnet.IP.String()
                }
            }
        }
        return ip
    }

Result:

3. With clusterIP: None, the result is as follows:

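With a long-lived connection, if we run 3 grpcserver instances and 1 grpcclient instance, requests never switch between server pods, whether or not clusterIP is set to None.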
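One common way to make a long-lived client spread requests across pods is client-side balancing: resolve the headless Service through gRPC's `dns:///` scheme and select the `round_robin` policy. This was not part of the test above, so treat the following as a minimal sketch under that assumption. The helper names `dnsTarget` and `roundRobinServiceConfig` are hypothetical; the sketch only builds the two strings, and the comment in `main` shows roughly how they would be passed to `grpc.Dial`.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// dnsTarget builds a gRPC target that uses the DNS resolver, so every
// address behind a headless Service (clusterIP: None) is visible to the client.
// Hypothetical helper, not part of the original project.
func dnsTarget(service string, port int) string {
	return fmt.Sprintf("dns:///%s:%d", service, port)
}

// roundRobinServiceConfig returns a gRPC service config (JSON) that selects
// the round_robin load-balancing policy.
// Hypothetical helper, not part of the original project.
func roundRobinServiceConfig() string {
	cfg := map[string]interface{}{
		"loadBalancingConfig": []map[string]interface{}{
			{"round_robin": map[string]interface{}{}},
		},
	}
	b, err := json.Marshal(cfg)
	if err != nil {
		return "{}"
	}
	return string(b)
}

func main() {
	fmt.Println(dnsTarget("grpcserver", 9090))
	fmt.Println(roundRobinServiceConfig())

	// In init.go the two values would be used roughly like this
	// (assumption: grpc-go with the same insecure setup as above):
	//
	//   conn, err := grpc.Dial(dnsTarget("grpcserver", 9090),
	//       grpc.WithInsecure(),
	//       grpc.WithDefaultServiceConfig(roundRobinServiceConfig()))
}
```

With a normal ClusterIP Service the DNS name resolves to a single virtual IP, so this approach only helps when the Service is headless. The client also learns about new pods only when it re-resolves the name, which is worth testing in your own cluster.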

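With short-lived connections (a new connection per request), requests do switch between server pods, whether or not clusterIP: None is set. The test result is as follows: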
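The difference between the two cases comes down to where balancing happens: a Service balances per TCP connection, and gRPC multiplexes all of its requests over one HTTP/2 connection. The toy simulation below illustrates this. It is only a sketch: the `roundRobin` type and `simulate` function are made up for illustration, and round-robin is used to keep the output deterministic, whereas kube-proxy in iptables mode picks a backend randomly.

```go
package main

import "fmt"

// roundRobin stands in for the per-connection choice a Service makes.
type roundRobin struct {
	backends []string
	next     int
}

// pick returns the next backend in rotation.
func (r *roundRobin) pick() string {
	b := r.backends[r.next%len(r.backends)]
	r.next++
	return b
}

// simulate sends n requests and counts which backend answered each one.
// With reuseConn the backend is chosen once, when the connection is opened;
// otherwise every request opens a new connection and is balanced again.
func simulate(backends []string, n int, reuseConn bool) map[string]int {
	lb := &roundRobin{backends: backends}
	hits := make(map[string]int)
	conn := ""
	for i := 0; i < n; i++ {
		if !reuseConn || conn == "" {
			conn = lb.pick()
		}
		hits[conn]++
	}
	return hits
}

func main() {
	pods := []string{"pod-a", "pod-b", "pod-c"}
	fmt.Println("long-lived: ", simulate(pods, 9, true))
	fmt.Println("short-lived:", simulate(pods, 9, false))
}
```

In the long-lived case all nine requests land on the pod chosen when the connection was opened; in the short-lived case they are spread evenly across the three pods.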

4. Ingress:

Use kubectl get ingress -n go to look up the Ingress address, then edit /etc/hosts (vim /etc/hosts) so that grpc.k8s.com points to that address.

grpcurl -insecure grpc.k8s.com:443 list

grpcurl -insecure -d '{"name": "gRPC"}' grpc.k8s.com:443 protos.Greeter.SayHello

5. Istio

Installation:

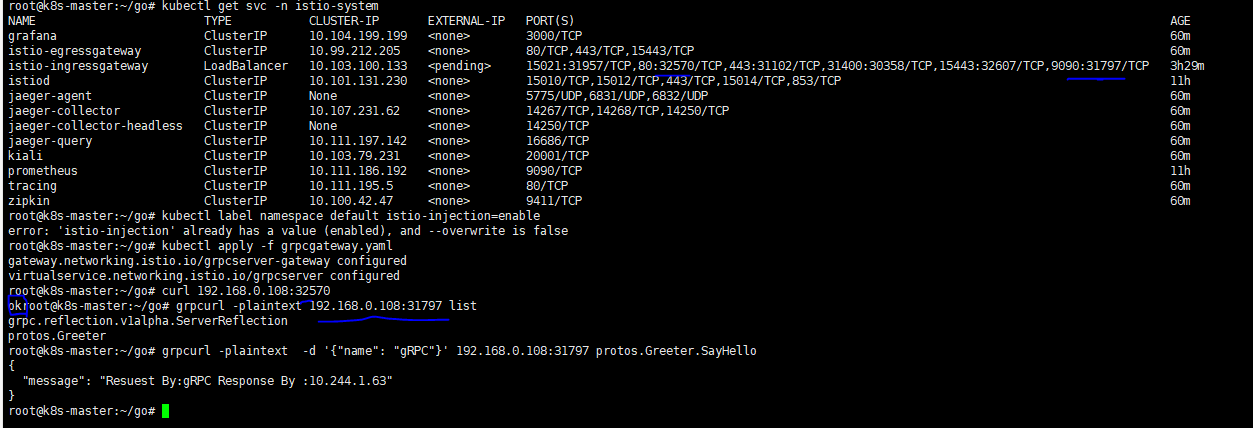

    # https://istio.io/latest/docs/setup/getting-started/
    curl -L https://istio.io/downloadIstio | sh -
    #curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.9.2 TARGET_ARCH=x86_64 sh -
    cd istio-1.9.2
    istioctl install --set profile=demo -y

    # Enable Istio sidecar injection for a namespace (the label value must be "enabled")
    # kubectl label namespace default istio-injection=enabled
    # Disable Istio sidecar injection
    # kubectl label namespace default istio-injection-
    # Show the sidecar injection status of each namespace
    kubectl get namespace -L istio-injection

    #kubectl apply -f samples/addons
    #kubectl get svc -n istio-system

Rewrite the deployment file and the gateway file.

grpcdm.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: grpcserver
      labels:
        app: grpcserver
    spec:
      ports:
      - name: grpc-port
        port: 9090
      - name: http-port
        port: 8080
      selector:
        app: grpcserver
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: grpcserver
      labels:
        app: grpcserver
        version: v1
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: grpcserver
          version: v1
      template:
        metadata:
          labels:
            app: grpcserver
            version: v1
        spec:
          containers:
          - name: grpcserver
            image: 192.168.100.30:8080/go/grpcserver:2021
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 8080
            - containerPort: 9090

grpcgateway.yaml

    apiVersion: networking.istio.io/v1alpha3
    kind: Gateway
    metadata:
      name: grpcserver-gateway
    spec:
      selector:
        istio: ingressgateway # use istio default controller
      servers:
      - port:
          number: 443
          name: http
          protocol: HTTP
        hosts:
        - "*"
      - port:
          number: 80
          name: grpc
          protocol: HTTP
        hosts:
        - "*"
    ---
    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: grpcserver
    spec:
      hosts:
      - "*"
      gateways:
      - grpcserver-gateway
      http:
      - match:
        - port: 443
        route:
        - destination:
            host: grpcserver.default.svc.cluster.local
            port:
              number: 8080
      - match:
        - port: 80
        route:
        - destination:
            host: grpcserver.default.svc.cluster.local
            port:
              number: 9090

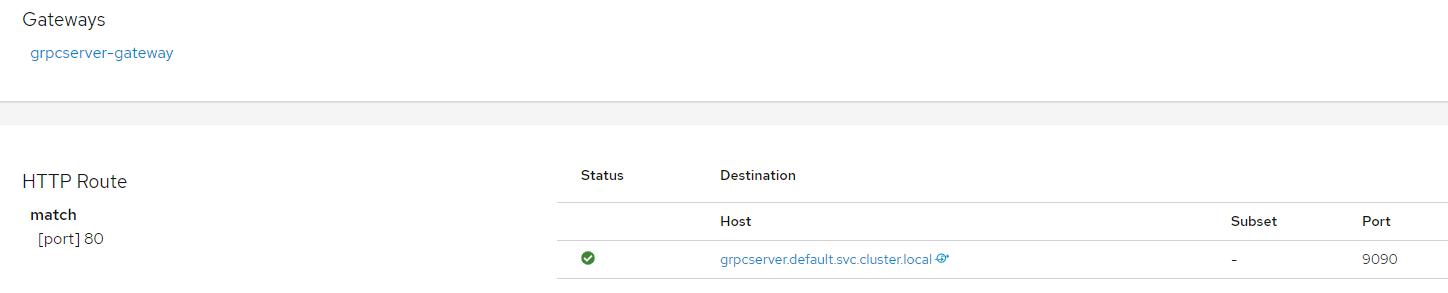

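After deployment the result is as follows:

- name: grpc-port #### This is the key point: the port name must start with grpc- so that Istio recognizes the protocol as gRPC

host: grpcserver.default.svc.cluster.local #### The backend Service to route to; because the route crosses namespaces, use the fully qualified name

Above we only exposed 9090, so what about the original 8080?

Add a port: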
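The port-naming rule can be pictured as a small lookup on the prefix of the port name, following Istio's documented `<protocol>[-<suffix>]` convention. The sketch below is a simplification written for illustration, not Istio's actual code: the function name `protocolFromPortName` is hypothetical, only a handful of protocols are listed, and names containing a hyphen in the protocol itself (such as grpc-web) are ignored.

```go
package main

import (
	"fmt"
	"strings"
)

// knownProtocols is a deliberately small subset, chosen for this illustration.
var knownProtocols = map[string]bool{
	"http":  true,
	"http2": true,
	"https": true,
	"grpc":  true,
	"tcp":   true,
	"tls":   true,
}

// protocolFromPortName returns the protocol implied by a Service port name
// of the form <protocol>[-<suffix>], or "" when the prefix is not recognized.
func protocolFromPortName(name string) string {
	prefix := strings.ToLower(strings.SplitN(name, "-", 2)[0])
	if knownProtocols[prefix] {
		return prefix
	}
	return ""
}

func main() {
	for _, name := range []string{"grpc-port", "http-port", "grpcserver"} {
		fmt.Printf("%q -> %q\n", name, protocolFromPortName(name))
	}
}
```

Under this rule the names grpc-port and http-port from grpcdm.yaml map to grpc and http, while a name such as grpcserver (used in the earlier NodePort Service) carries no recognized prefix.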

    # Dump the default configuration as a reference
    #istioctl profile dump default > default.yaml
    istioctl profile dump demo > demo.yaml

    # Add your own port
    ...............
            - name: grpc
              port: 9090
              targetPort: 9090
          name: istio-ingressgateway
    ..................

    # Deploy the customized ingress gateway (this overwrites the existing one)
    istioctl manifest apply -f demo.yaml

    # Verify the deployment
    kubectl get service -n istio-system

The final gateway.yaml file:

    apiVersion: networking.istio.io/v1alpha3
    kind: Gateway
    metadata:
      name: grpcserver-gateway
    spec:
      selector:
        istio: ingressgateway # use istio default controller
      servers:
      - port:
          number: 80
          name: http
          protocol: HTTP
        hosts:
        - "*"
      - port:
          number: 9090
          name: grpc
          protocol: HTTP
        hosts:
        - "*"
    ---
    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: grpcserver
    spec:
      hosts:
      - "*"
      gateways:
      - grpcserver-gateway
      http:
      - match:
        - port: 80
        route:
        - destination:
            host: grpcserver.default.svc.cluster.local
            port:
              number: 8080
      - match:
        - port: 9090
        route:
        - destination:
            host: grpcserver.default.svc.cluster.local
            port:
              number: 9090
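Read as a table, the VirtualService above maps each gateway port to one backend port on the same Service. The sketch below restates that mapping in Go purely as an illustration of the routing intent; the `destination` type and `route` function are hypothetical and have nothing to do with how Istio is implemented.

```go
package main

import "fmt"

// destination mirrors the host/port pair of a VirtualService route.
type destination struct {
	Host string
	Port int
}

// routes restates the match/route pairs from gateway.yaml:
// gateway port 80 goes to the HTTP endpoint, 9090 to the gRPC endpoint.
var routes = map[int]destination{
	80:   {Host: "grpcserver.default.svc.cluster.local", Port: 8080},
	9090: {Host: "grpcserver.default.svc.cluster.local", Port: 9090},
}

// route looks up the backend for a gateway port; ok is false when no rule matches.
func route(gatewayPort int) (dest destination, ok bool) {
	dest, ok = routes[gatewayPort]
	return dest, ok
}

func main() {
	for _, p := range []int{80, 9090, 443} {
		if d, ok := route(p); ok {
			fmt.Printf("gateway :%d -> %s:%d\n", p, d.Host, d.Port)
		} else {
			fmt.Printf("gateway :%d -> no matching route\n", p)
		}
	}
}
```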

Note: enabling IPVS in k8s

    # Load the kernel modules
    lsmod|grep ip_vs
    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    # On kernels 4.19 and later this module is named nf_conntrack
    modprobe -- nf_conntrack_ipv4

    apt install ipvsadm ipset -y

    # Edit the kube-proxy configuration
    kubectl edit configmap kube-proxy -n kube-system
    #mode: "ipvs"   # change this setting

    # Delete all kube-proxy pods so they restart with the new mode
    kubectl get pod -n kube-system | grep kube-proxy |awk '{system("kubectl delete pod "$1" -n kube-system")}'

----------------------------------------2021-04-29-----------------------------------

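A further note: on default k8s, when gRPC connects through the Service name, the long-lived connection keeps working whether only the pod IP changes or both the pod IP and the Service IP change.

References: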

http://www.kailing.pub/article/index/arcid/327.html

https://blog.csdn.net/weixin_38166686/article/details/102452691

https://blog.csdn.net/weixin_33782386/article/details/89729502

https://www.cnblogs.com/oscarli/p/13674035.html

https://makeoptim.com/istio-faq/istio-multiple-ports-virtual-service

https://makeoptim.com/istio-faq/istio-custom-ingress-gateway