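1. Download a starter scaffold with the dependencies you need from the official Spring Boot site (Spring Initializr), or search GitHub for a matching scaffold project. Cool ^0^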

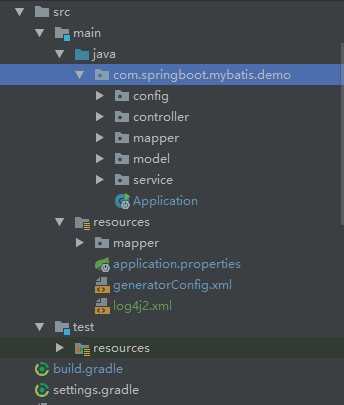

• The file layout is as follows (ignore generatorConfig.xml and log4j2.xml for now; they are explained later)

2. Integrating MyBatis Generator

• Gradle configuration

// Dependency required for MyBatis code generation

compile group: 'org.mybatis.generator', name: 'mybatis-generator-core', version: '1.3.3'

// Gradle plugin that runs the MyBatis code generator

apply plugin: "com.arenagod.gradle.MybatisGenerator"

configurations {

mybatisGenerator

}

mybatisGenerator {

verbose = true

// Path to the generator configuration file

configFile = 'src/main/resources/generatorConfig.xml'

}

• generatorConfig.xml explained

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE generatorConfiguration

PUBLIC "-//mybatis.org//DTD MyBatis Generator Configuration 1.0//EN"

"http://mybatis.org/dtd/mybatis-generator-config_1_0.dtd">

<generatorConfiguration>

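<!-- Path to the database driver jar -->

<!-- The jar can be found in the project's dependency cache (the Gradle cache here); just copy its path -->

<classPathEntry

location="C:\Users\pc\.gradle\caches\modules-2\files-2.1\mysql\mysql-connector-java\5.1.38\dbbd7cd309ce167ec8367de4e41c63c2c8593cc5\mysql-connector-java-5.1.38.jar"/>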

<context id="mysql" targetRuntime="MyBatis3">

<!-- Disable generated comments -->

<commentGenerator>

<property name="suppressAllComments" value="true"/>

</commentGenerator>

<!-- Database connection settings -->

<jdbcConnection driverClass="com.mysql.jdbc.Driver"

connectionURL="jdbc:mysql://127.0.0.1:3306/xxx" userId="root"

password="">

</jdbcConnection>

<!-- Package for the generated model classes. rootClass is the base class every generated model extends automatically; trimStrings trims whitespace from String properties -->

<javaModelGenerator targetPackage="com.springboot.mybatis.demo.model"

targetProject="D:/self-code/spring-boot-mybatis/spring-boot-mybatis/src/main/java">

<property name="enableSubPackages" value="true"/>

<property name="trimStrings" value="true"/>

<property name="rootClass" value="com.springboot.mybatis.demo.model.common.BaseModel"/>

</javaModelGenerator>

<!-- Output location of the generated mapper XML files -->

<sqlMapGenerator targetPackage="mapper"

targetProject="D:/self-code/spring-boot-mybatis/spring-boot-mybatis/src/main/resources">

<property name="enableSubPackages" value="true"/>

</sqlMapGenerator>

<!-- Package for the generated Mapper interfaces -->

<javaClientGenerator type="XMLMAPPER"

targetPackage="com.springboot.mybatis.demo.mapper" targetProject="D:/self-code/spring-boot-mybatis/spring-boot-mybatis/src/main/java">

<property name="enableSubPackages" value="true"/>

</javaClientGenerator>

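<!-- The table to generate from; it is the basis of the generated mapper XML. Options such as enableCountByExample=true generate Example-based statements in the XML, which are too verbose, so they are all disabled here -->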

<table tableName="tb_user" domainObjectName="User"

enableCountByExample="false"

enableDeleteByExample="false"

enableSelectByExample="false"

enableUpdateByExample="false"></table>

</context>

</generatorConfiguration>

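Note that targetProject should be an absolute path to avoid errors. targetPackage is the package the generated classes are placed in; make sure that package exists, otherwise nothing is generated.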

• Generating the code

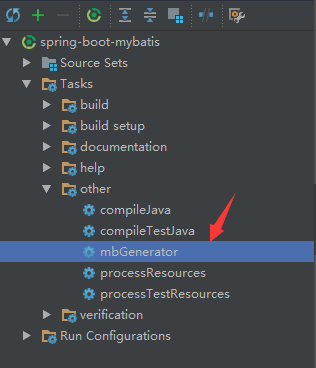

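Once the configuration is done, first create the corresponding table in the database and make sure the database is reachable. Then run gradle mbGenerator in a terminal, or click the task shown below: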

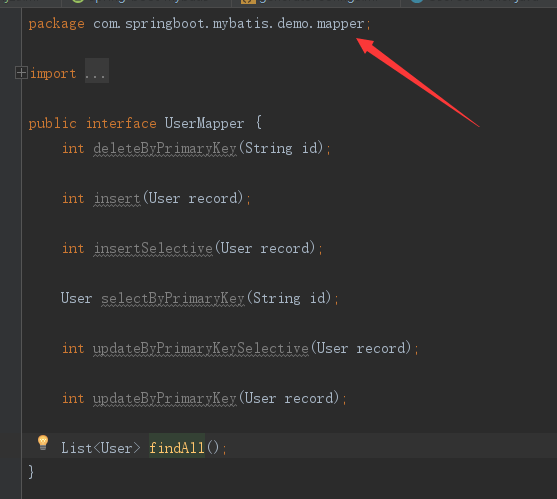

On success it generates the model class, the mapper interface and the mapper XML file.

3. Integrating logging

• Gradle configuration

compile group: 'org.springframework.boot', name: 'spring-boot-starter-log4j2', version: '1.4.0.RELEASE'

// Exclude the conflicting default logging starter (Logback)

configurations {

mybatisGenerator

compile.exclude module: 'spring-boot-starter-logging'

}

Without spring-boot-starter-log4j2, startup fails with: java.lang.IllegalStateException: Logback configuration error detected

For the cause, see: https://blog.csdn.net/blueheart20/article/details/78111350?locationNum=5&fps=1

Fix: exclude the spring-boot-starter-logging dependency.

what???

After the exclusion, logging fails again with: Failed to load class "org.slf4j.impl.StaticLoggerBinder"

For the cause, see: https://blog.csdn.net/lwj_199011/article/details/51853110

Fix: add the spring-boot-starter-log4j2 dependency. Its own dependencies are:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starters</artifactId>

<version>1.4.0.RELEASE</version>

</parent>

<artifactId>spring-boot-starter-log4j2</artifactId>

<name>Spring Boot Log4j 2 Starter</name>

<description>Starter for using Log4j2 for logging. An alternative to

spring-boot-starter-logging</description>

<url>http://projects.spring.io/spring-boot/</url>

<organization>

<name>Pivotal Software, Inc.</name>

<url>http://www.spring.io</url>

</organization>

<properties>

<main.basedir>${basedir}/../..</main.basedir>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-slf4j-impl</artifactId>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-api</artifactId>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>jcl-over-slf4j</artifactId>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>jul-to-slf4j</artifactId>

</dependency>

</dependencies>

</project>

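It depends on log4j-slf4j-impl, i.e. it uses the Log4j2 logging framework behind slf4j.

This involves log4j, logback, log4j2 and slf4j. So how are they related? unbelievable... Here is the background:

slf4j, log4j, logback, log4j2

Logging facade (slf4j)

slf4j is a specification, standard and interface for logging frameworks, not a concrete implementation. An interface cannot be used on its own; it has to be paired with an actual logging framework (such as log4j or logback).

Logging implementations (log4j, logback, log4j2)

log4j is an open-source logging component from Apache.

logback was designed by the author of log4j as its replacement, with better features; it is the native implementation of slf4j.

Log4j2 is an improved version of both log4j 1.x and logback. It reportedly uses newer techniques (lock-free asynchronous logging, among others) that raise throughput to roughly 10x that of log4j 1.x, fixes some deadlock bugs, and has simpler, more flexible configuration. Official docs: http://logging.apache.org/log4j/2.x/manual/configuration.html

Why have a logging interface at all? Why not use an implementation directly?

An interface defines a contract that can have several implementations. Code is written against the interface (the imports come from slf4j, not from a specific logging framework) and never touches the implementation directly, so the implementation can be swapped without changing any logging code.

For example: slf4j defines the logging API and the project uses logback. Every call made during development goes to slf4j, never to logback: your code calls the slf4j API, and slf4j delegates to the logback implementation, so the application never uses logback directly. When the project wants to move to a better framework (such as Log4j2), you only swap the logback jars for the Log4j2 jars and its configuration file. The logging calls in Java code (logger.info("xxx")) and the imports (import org.slf4j.Logger; import org.slf4j.LoggerFactory;) stay exactly the same.

A logging interface therefore makes switching frameworks easy; it acts as an adapter.

log4j, logback and log4j2 are all concrete logging frameworks, so each can be used on its own or together with slf4j.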
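To make the facade idea concrete, here is a minimal pure-Java sketch. It does not use the real slf4j API: Log, ConsoleBackend, PrefixBackend, LogFactory and OrderService are hypothetical stand-ins for the slf4j interface, two logging implementations, the binding step, and application code. In real slf4j (1.7.x) the binding is whichever implementation jar is on the classpath, which is why a missing one produces the StaticLoggerBinder error above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Hypothetical facade interface, playing the role of org.slf4j.Logger.
interface Log {
    void info(String msg);
}

// Two interchangeable implementations, standing in for logback and Log4j2.
// Each records what it "wrote" so the difference is observable.
class ConsoleBackend implements Log {
    final List<String> out = new ArrayList<>();
    public void info(String msg) { out.add("[console] " + msg); }
}

class PrefixBackend implements Log {
    final List<String> out = new ArrayList<>();
    public void info(String msg) { out.add("INFO - " + msg); }
}

// Hypothetical factory, playing the role of LoggerFactory: the only place
// that knows which implementation is bound.
final class LogFactory {
    private static Supplier<Log> binding = ConsoleBackend::new;
    static void bind(Supplier<Log> newBinding) { binding = newBinding; }
    static Log getLog() { return binding.get(); }
}

// Application code: depends only on Log and LogFactory, never on a backend class.
class OrderService {
    final Log log = LogFactory.getLog();
    void place(String id) { log.info("order " + id + " placed"); }
}
```

Calling LogFactory.bind(PrefixBackend::new) changes every OrderService created afterwards without touching OrderService itself, which is the same effect as swapping the logback jars for the Log4j2 jars under slf4j.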

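• We are now using the Log4j2 framework. Next comes the Log4j2 configuration, which can be written as properties, XML or YAML; XML is used here (for a detailed walkthrough of log4j configuration see: https://blog.csdn.net/menghuanzhiming/article/details/77531977). The configuration, with explanations: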

<?xml version="1.0" encoding="UTF-8"?>

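<!-- Log levels in order of priority: OFF > FATAL > ERROR > WARN > INFO > DEBUG > TRACE > ALL -->

<!-- status on Configuration controls Log4j2's own internal logging. It is optional; set it to trace to see detailed internal output from Log4j2 -->

<!-- monitorInterval: Log4j2 can detect changes to the configuration file and reconfigure itself; the value is the check interval in seconds -->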

<Configuration status="WARN">

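<!-- Property definitions. ${sys:PID} reads the PID system property set by Spring Boot and falls back to the PID value defined here (????) when it is absent -->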

<Properties>

<Property name="PID">????</Property>

<Property name="LOG_PATTERN">

[%d{yyyy-MM-dd HH:mm:ss.SSS}] - ${sys:PID} --- %c{1}: %m%n

</Property>

</Properties>

<!-- Appenders: define where log output goes -->

<Appenders>

<!-- Output to the console -->

<Console name="Console" target="SYSTEM_OUT" follow="true">

<PatternLayout

pattern="${LOG_PATTERN}">

</PatternLayout>

</Console>

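<!-- This file receives every message and is cleared on each run (controlled by the append attribute); useful for temporary testing -->

<!-- append="true" appends messages to the file, "false" overwrites its contents; the default is true -->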

<!--<File name="File" fileName="logs/log.log" append="false">-->

<!--<PatternLayout>-->

<!--<pattern>[%-5p] %d %c - %m%n</pattern>-->

<!--</PatternLayout>-->

<!--</File>-->

<!-- Receives every message. Whenever the file exceeds the configured size, that content is archived and compressed into a folder named by year-month -->

<RollingFile name="RollingAllFile" fileName="logs/all/all.log"

filePattern="logs/all/$${date:yyyy-MM}/all-%d{yyyy-MM-dd}-%i.log.gz">

<PatternLayout

pattern="${LOG_PATTERN}" />

<Policies>

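<!-- The two policies below work together with filePattern to archive log files periodically -->

<!-- TimeBasedTriggeringPolicy: time-based trigger with two parameters:

1. interval (integer): the interval between two rollovers. Its unit is the most specific unit in the date pattern, e.g. hours for yyyy-MM-dd-HH and minutes for yyyy-MM-dd-HH-mm

2. modulate (boolean): whether to align rollover times. With modulate=true the rollover boundaries are aligned to midnight. For example, with modulate=true and interval=4 (hours), if the last rollover was at 03:00, the next happens at 04:00, and then at 08:00, 12:00, 16:00 -->

<!--<TimeBasedTriggeringPolicy/>-->

<!-- SizeBasedTriggeringPolicy: size-based trigger. With the setting below, once a single file reaches 200 MB its contents are moved into a year-month directory (such as 2014-09), named "xxx-year-month-day-index" and compressed -->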

<SizeBasedTriggeringPolicy size="200 MB"/>

</Policies>

</RollingFile>

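<!--

ThresholdFilter lets through only events at or above a given level. onMatch="ACCEPT" onMismatch="DENY" means: accept on a match, otherwise reject.

When combining filters, NEUTRAL is used; it means neither accept nor reject. e.g.:

<Filters>

<ThresholdFilter level="error" onMatch="DENY" onMismatch="NEUTRAL"/>

<ThresholdFilter level="warn" onMatch="ACCEPT" onMismatch="DENY"/>

</Filters>

This means: events at error level or above are rejected; anything else is NEUTRAL (neither rejected nor accepted) and passed on to the next filter. The second filter accepts warn and above and rejects the rest. Since the first filter already rejected error and above, the combined result is that only warn events are logged.

-->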

<RollingFile name="RollingErrorFile" fileName="logs/error/error.log"

filePattern="logs/error/$${date:yyyy-MM}/%d{yyyy-MM-dd}-%i.log.gz">

<ThresholdFilter level="ERROR"/>

<PatternLayout

pattern="${LOG_PATTERN}" />

<Policies>

<!--<TimeBasedTriggeringPolicy/>-->

<SizeBasedTriggeringPolicy size="200 MB"/>

</Policies>

</RollingFile>

<RollingFile name="RollingWarnFile" fileName="logs/warn/warn.log"

filePattern="logs/warn/$${date:yyyy-MM}/%d{yyyy-MM-dd}-%i.log.gz">

<Filters>

<ThresholdFilter level="WARN"/>

<ThresholdFilter level="ERROR" onMatch="DENY" onMismatch="NEUTRAL"/>

</Filters>

<PatternLayout

pattern="${LOG_PATTERN}" />

<Policies>

<!--<TimeBasedTriggeringPolicy/>-->

<SizeBasedTriggeringPolicy size="200 MB"/>

</Policies>

</RollingFile>

</Appenders>

<!-- Next, define the Loggers. An Appender only takes effect once a Logger references it -->

<Loggers>

<Logger name="org.hibernate.validator.internal.util.Version" level="WARN"/>

<Logger name="org.apache.coyote.http11.Http11NioProtocol" level="WARN"/>

<Logger name="org.apache.tomcat.util.net.NioSelectorPool" level="WARN"/>

<Logger name="org.apache.catalina.startup.DigesterFactory" level="ERROR"/>

<Logger name="org.springframework" level="INFO" />

<Logger name="com.springboot.mybatis.demo" level="DEBUG"/>

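<!-- RollingFileAbandonEvent must be defined in <Appenders>; it is not defined in this example, so Log4j2 will report that it cannot locate that appender -->

<Logger name="validateEventLog" level="info" additivity="false">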

<AppenderRef ref="RollingFileAbandonEvent"/>

</Logger>

<!--

The name here is the name passed in when the logger object is declared, e.g.: private final Logger LOG = LogManager.getLogger("logName");

Usually the fully qualified class name is used as the logger name, e.g.: private final Logger LOG = LogManager.getLogger(this.getClass());

-->

<!-- The loggers above are additive by default: their events are also passed to the Appenders on Root, so a Logger without an appender of its own still logs through Root. To stop this, set additivity="false" and give the Logger its own appender, e.g. <AppenderRef ref="Console"/> to send its output to Console -->

<Root level="INFO">

<AppenderRef ref="Console" />

<AppenderRef ref="RollingAllFile"/>

<AppenderRef ref="RollingErrorFile"/>

<AppenderRef ref="RollingWarnFile"/>

</Root>

</Loggers>

</Configuration>
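The ACCEPT / NEUTRAL / DENY logic in the filter comment above can be modelled in a few lines of plain Java. This is a simplified sketch, not Log4j2's implementation: Level, Result, ThresholdFilter and FilterChain are illustrative names, and the model assumes ThresholdFilter's defaults of onMatch=NEUTRAL and onMismatch=DENY, which is what the RollingWarnFile appender relies on.

```java
import java.util.List;

// Log4j2 levels from most to least severe: OFF > FATAL > ERROR > WARN > INFO > DEBUG > TRACE > ALL.
enum Level {
    OFF, FATAL, ERROR, WARN, INFO, DEBUG, TRACE, ALL;

    // True if this level is at least as severe as the threshold (lower ordinal = more severe).
    boolean isAtLeast(Level threshold) {
        return ordinal() <= threshold.ordinal();
    }
}

enum Result { ACCEPT, NEUTRAL, DENY }

// Models ThresholdFilter: "match" means the event level is at or above the threshold.
final class ThresholdFilter {
    final Level level;
    final Result onMatch;
    final Result onMismatch;

    // Defaults assumed here: onMatch=NEUTRAL, onMismatch=DENY.
    ThresholdFilter(Level level) { this(level, Result.NEUTRAL, Result.DENY); }

    ThresholdFilter(Level level, Result onMatch, Result onMismatch) {
        this.level = level;
        this.onMatch = onMatch;
        this.onMismatch = onMismatch;
    }

    Result filter(Level event) {
        return event.isAtLeast(level) ? onMatch : onMismatch;
    }
}

final class FilterChain {
    // Filters run in order: the first ACCEPT or DENY decides; NEUTRAL hands over to the next filter.
    // An event that stays NEUTRAL through every filter is not rejected, so the appender writes it.
    static boolean writes(List<ThresholdFilter> filters, Level event) {
        for (ThresholdFilter f : filters) {
            Result r = f.filter(event);
            if (r == Result.ACCEPT) return true;
            if (r == Result.DENY) return false;
        }
        return true;
    }
}
```

Feeding it the two filters of RollingWarnFile (WARN with defaults, then ERROR with onMatch=DENY and onMismatch=NEUTRAL) writes WARN events and drops ERROR and DEBUG events, matching the "warn only" intent of that appender.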

YAML configuration example:

Configuration:
  status: info

  Properties: # global variables
    Property: # defaults (for development); other environments can override a value with a VM argument, e.g. a lookup written as ${sys:log.path} reads -Dlog.path=... and falls back to the default defined here
      - name: log.path
        value: ./logs/
      - name: project.name
        value: xx
      - name: info.file.name
        value: ${log.path}/${project.name}.info.log
      - name: error.file.name
        value: ${log.path}/${project.name}.error.log
      - name: kafka.sync.file.name
        value: ${log.path}/${project.name}.kafka.sync.log

  Appenders:
    Console: # output to the console
      name: POSEIDON
      target: SYSTEM_OUT
      ThresholdFilter:
        level: info
        onMatch: ACCEPT
        onMismatch: DENY
      PatternLayout:
        pattern: "%d{MM-dd HH:mm:ss.SSS} [%t] %-5level %logger{36} - %msg%n"

    RollingFile: # output to files, archived every 128 MB
      - name: infolog
        ThresholdFilter:
          level: info
          onMatch: ACCEPT
          onMismatch: DENY
        ignoreExceptions: false
        fileName: ${info.file.name}
        filePattern: ${log.path}/$${date:yyyy-MM}/${project.name}-%d{yyyy-MM-dd}-%i.info.log.gz
        PatternLayout:
          pattern: "%d{yyyy-MM-dd HH:mm:ss,SSS}:%4p %t (%F:%L) - %m%n"
        Policies:
          SizeBasedTriggeringPolicy:
            size: "128 MB"
        DefaultRolloverStrategy:
          max: 1000

      - name: ROLLINGFILEERROR
        ThresholdFilter:
          level: error
          onMatch: ACCEPT
          onMismatch: DENY
        fileName: ${error.file.name}
        filePattern: ${log.path}/$${date:yyyy-MM}/${project.name}-%d{yyyy-MM-dd}-%i.error.log.gz
        PatternLayout:
          pattern: "%d{yyyy-MM-dd HH:mm:ss,SSS}:%4p %t (%F:%L) - %m%n"
        Policies:
          SizeBasedTriggeringPolicy:
            size: "128 MB"
        DefaultRolloverStrategy:
          max: 1000

      - name: kafkaSyncLog
        ThresholdFilter:
          level: info
          onMatch: ACCEPT
          onMismatch: DENY
        fileName: ${kafka.sync.file.name}
        filePattern: ${log.path}/$${date:yyyy-MM}/${project.name}-%d{yyyy-MM-dd}-%i.kafka.sync.log.gz
        PatternLayout:
          pattern: "%d{yyyy-MM-dd HH:mm:ss,SSS}:%4p %t (%F:%L) - %m%n"
        # Cleanup policy: each time the log file reaches 128 MB it is compressed into a gzip archive;
        # the %i counter goes up to 1000 per date in filePattern, after which the oldest archives are deleted
        Policies:
          SizeBasedTriggeringPolicy:
            size: "128 MB"
        DefaultRolloverStrategy:
          max: 1000

  Loggers:
    Root: # events from every logger reach the appenders on Root at the matching level (unless the logger sets additivity: false)
      level: info
      AppenderRef:
        - ref: POSEIDON
        - ref: infolog
        - ref: ROLLINGFILEERROR
    Logger:
      - name: com.xx.log.common
        level: error
      # Send this package's logs to a dedicated file. additivity: false keeps them out of Root's files;
      # kafkaSyncLog is not attached to Root so that other loggers do not write into it
      - name: com.xx.xx.xx.xx.xx.kafka
        additivity: false
        level: info
        AppenderRef:
          - ref: kafkaSyncLog
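How an event finds its appenders (nearest configured logger by dotted name, then upward through additive parents to Root) can be sketched in plain Java. This is a simplified model that ignores levels and filters; LoggerConfig and LoggerTree are illustrative names, not Log4j2 classes, and the Root name "" is an assumption of the sketch.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// One <Logger> (or Root) entry: its name, its own AppenderRefs and its additivity flag.
final class LoggerConfig {
    final String name;
    final List<String> appenderRefs;
    final boolean additive;

    LoggerConfig(String name, boolean additive, String... appenderRefs) {
        this.name = name;
        this.additive = additive;
        this.appenderRefs = Arrays.asList(appenderRefs);
    }
}

final class LoggerTree {
    private final Map<String, LoggerConfig> configs = new HashMap<>();
    private final LoggerConfig root;

    LoggerTree(LoggerConfig root) { this.root = root; }

    void add(LoggerConfig config) { configs.put(config.name, config); }

    // Nearest configured logger: try the full name, then drop one dot-separated
    // segment at a time, e.g. com.xx.kafka.SyncJob -> com.xx.kafka -> com.xx -> com -> Root.
    LoggerConfig resolve(String loggerName) {
        String name = loggerName;
        while (true) {
            LoggerConfig c = configs.get(name);
            if (c != null) return c;
            int dot = name.lastIndexOf('.');
            if (dot < 0) return root;
            name = name.substring(0, dot);
        }
    }

    // Appenders an event reaches: the matched logger's own refs, then each ancestor's refs,
    // stopping after a logger with additivity=false or once Root has been added.
    List<String> appendersFor(String loggerName) {
        List<String> result = new ArrayList<>();
        LoggerConfig c = resolve(loggerName);
        while (true) {
            result.addAll(c.appenderRefs);
            if (c == root || !c.additive) return result;
            int dot = c.name.lastIndexOf('.');
            c = dot < 0 ? root : resolve(c.name.substring(0, dot));
        }
    }
}
```

With Root referencing POSEIDON, infolog and ROLLINGFILEERROR and a non-additive logger (say com.xx.kafka) referencing kafkaSyncLog, classes under com.xx.kafka end up only in kafkaSyncLog, while everything else, including com.xx.log.common, falls through to Root's three appenders.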

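That's logging integrated; you can now see all kinds of logs in the terminal, very exciting!!!

Log4j2 can also send email

Add the dependency:

compile group: 'org.springframework.boot', name: 'spring-boot-starter-mail', version: '2.0.0.RELEASE'

Update the Log4j2 configuration:

Add the following inside <Appenders>:

<!--
subject: mail subject
to: recipients, comma-separated
from: sender
replyTo: reply-to address
smtp: for QQ Mail SMTP settings see https://service.mail.qq.com/cgi-bin/help?subtype=1&no=167&id=28
smtpDebug: enable verbose SMTP logging
smtpPassword: authorization code, see https://service.mail.qq.com/cgi-bin/help?subtype=1&&id=28&&no=1001256
smtpUsername: user name
-->
<SMTP name="Mail" subject="Error Log" to="xxx.com" from="xxx@qq.com" replyTo="xxx@qq.com" smtpProtocol="smtp" smtpHost="smtp.qq.com" smtpPort="587" bufferSize="50" smtpDebug="false" smtpPassword="authorization-code" smtpUsername="xxx.com">
</SMTP>

Then reference this appender from Root to activate it:

<AppenderRef ref="Mail" level="error"/>

Done!

Summary: a Log4j2 configuration consists mainly of Appenders and Loggers: Appenders decide where logs are written, Loggers decide which sources logs are taken from.

Note: the above is just my personal experience; Log4j2 can do much more, such as writing logs to ArangoDB, MySQL, Kafka and so on. The official documentation covers it all in great detail!!!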

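4. Integrating MybatisProvider

• Why?

With it, basic CRUD operations can be implemented through annotations combined with dynamic SQL, instead of writing lots of repetitive, tedious SQL in XML.

• Come on ↓

First: define a base Mapper interface that declares the basic operations: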

package com.springboot.mybatis.demo.mapper.common;

import com.springboot.mybatis.demo.mapper.common.provider.AutoSqlProvider;
import com.springboot.mybatis.demo.mapper.common.provider.MethodProvider;
import com.springboot.mybatis.demo.model.common.BaseModel;
import org.apache.ibatis.annotations.DeleteProvider;
import org.apache.ibatis.annotations.InsertProvider;
import org.apache.ibatis.annotations.SelectProvider;
import org.apache.ibatis.annotations.UpdateProvider;

import java.io.Serializable;
import java.util.List;

public interface BaseMapper<T extends BaseModel, Id extends Serializable> {

    @InsertProvider(type = AutoSqlProvider.class, method = MethodProvider.SAVE)
    int save(T entity);

    @DeleteProvider(type = AutoSqlProvider.class, method = MethodProvider.DELETE_BY_ID)
    int deleteById(Id id);

    // Takes the entity rather than just the id, so the provider has the new column values to SET
    @UpdateProvider(type = AutoSqlProvider.class, method = MethodProvider.UPDATE_BY_ID)
    int updateById(T entity);

    @SelectProvider(type = AutoSqlProvider.class, method = MethodProvider.FIND_ALL)
    List<T> findAll(T entity);

    @SelectProvider(type = AutoSqlProvider.class, method = MethodProvider.FIND_BY_ID)
    T findById(T entity);

    @SelectProvider(type = AutoSqlProvider.class, method = MethodProvider.FIND_AUTO_BY_PAGE)
    List<T> findAutoByPage(T entity);
}

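AutoSqlProvider is the class that supplies the SQL, and MethodProvider defines the names of the basic persistence methods a MybatisProvider setup must implement. The two classes look like this: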

package com.springboot.mybatis.demo.mapper.common.provider;

import com.google.common.base.CaseFormat;
import com.springboot.mybatis.demo.mapper.common.provider.model.MybatisTable;
import com.springboot.mybatis.demo.mapper.common.provider.utils.ProviderUtils;
import org.apache.ibatis.jdbc.SQL;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.lang.reflect.Field;
import java.util.List;

public class AutoSqlProvider {

    private static final Logger logger = LoggerFactory.getLogger(AutoSqlProvider.class);

    public String findAll(Object obj) {
        MybatisTable mybatisTable = ProviderUtils.getMybatisTable(obj);
        List<Field> fields = mybatisTable.getMybatisColumnList();
        SQL sql = new SQL();
        // Java field names are lowerCamel (e.g. usrName); convert them to column names (usr_name)
        fields.forEach(field -> sql.SELECT(CaseFormat.LOWER_CAMEL.to(CaseFormat.LOWER_UNDERSCORE, field.getName())));
        sql.FROM(mybatisTable.getName());
        logger.info(sql.toString());
        return sql.toString();
    }

    public String save(Object obj) {
        ...
        return null;
    }

    public String deleteById(String id) {
        ...
        return null;
    }

    public String findById(Object obj) {
        ...
        return null;
    }

    public String updateById(Object obj) {
        ...
        return null;
    }

    public String findAutoByPage(Object obj) {
        return null;
    }
}

package com.springboot.mybatis.demo.mapper.common.provider;

// Names of the methods in AutoSqlProvider; each value must match a provider method name exactly
public class MethodProvider {
    public static final String SAVE = "save";
    public static final String DELETE_BY_ID = "deleteById";
    public static final String UPDATE_BY_ID = "updateById";
    public static final String FIND_ALL = "findAll";
    public static final String FIND_BY_ID = "findById";
    public static final String FIND_AUTO_BY_PAGE = "findAutoByPage";
}
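Because the provider annotations name the SQL method only as a string (the MethodProvider constants), the compiler cannot check that AutoSqlProvider really has a method with that name; only MyBatis reports the mismatch. A small reflection check like the sketch below could catch it in a unit test. DemoMethodNames and DemoSqlProvider are hypothetical stand-ins for MethodProvider and AutoSqlProvider, and ProviderCheck is an illustrative helper, not part of MyBatis.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for MethodProvider: constants naming provider methods.
final class DemoMethodNames {
    public static final String SAVE = "save";
    public static final String FIND_ALL = "findAll";
    public static final String DELETE_BY_ID = "deleteById";
}

// Hypothetical stand-in for AutoSqlProvider; deleteById is deliberately missing.
final class DemoSqlProvider {
    public String save(Object obj) { return "INSERT ..."; }
    public String findAll(Object obj) { return "SELECT ..."; }
}

final class ProviderCheck {
    // Returns every static String constant in `names` that matches no public method of `provider`.
    static List<String> missingMethods(Class<?> names, Class<?> provider) {
        List<String> missing = new ArrayList<>();
        for (Field f : names.getDeclaredFields()) {
            if (!Modifier.isStatic(f.getModifiers()) || f.getType() != String.class) continue;
            String methodName;
            try {
                methodName = (String) f.get(null);
            } catch (IllegalAccessException e) {
                throw new IllegalStateException(e);
            }
            boolean found = false;
            for (Method m : provider.getMethods()) {
                if (m.getName().equals(methodName)) { found = true; break; }
            }
            if (!found) missing.add(methodName);
        }
        return missing;
    }
}
```

Pointed at the real classes (ProviderCheck.missingMethods(MethodProvider.class, AutoSqlProvider.class)), an empty result would mean every constant has a matching provider method.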

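Notes:

1. Every method declared in BaseMapper must have a method with the same name in the SqlProvider class, otherwise MyBatis reports that the method cannot be found

2. When building SQL dynamically: even with camel-case mapping enabled (mapUnderscoreToCamelCase), property names still have to be converted to column names by hand, because that setting only maps result columns back to properties

3. The SQL logging inside the SqlProvider can be removed, because the logging configured earlier already covers it

4. ProviderUtils uses reflection to read the table's basic metadata: the table name and its columns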
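Notes 2 and 4 describe the core of an automatic SQL provider: read the model's fields by reflection and turn lowerCamel property names into lower_underscore column names. The sketch below shows that idea with plain JDK code. It is not the author's ProviderUtils or the elided save() body; SqlSketch, DemoUser and the table name tb_user (taken from the generator config) are illustrative assumptions.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

final class SqlSketch {
    // lowerCamel -> lower_underscore, e.g. usrName -> usr_name.
    static String toColumn(String fieldName) {
        StringBuilder sb = new StringBuilder();
        for (char c : fieldName.toCharArray()) {
            if (Character.isUpperCase(c)) {
                sb.append('_').append(Character.toLowerCase(c));
            } else {
                sb.append(c);
            }
        }
        return sb.toString();
    }

    // Instance fields only (skips statics such as serialVersionUID and compiler-generated fields),
    // sorted by name because getDeclaredFields() guarantees no particular order.
    static List<Field> columnFields(Class<?> type) {
        List<Field> fields = new ArrayList<>();
        for (Field f : type.getDeclaredFields()) {
            if (!Modifier.isStatic(f.getModifiers()) && !f.isSynthetic()) fields.add(f);
        }
        fields.sort(Comparator.comparing(Field::getName));
        return fields;
    }

    static String selectAll(Class<?> type, String table) {
        String cols = columnFields(type).stream()
                .map(f -> toColumn(f.getName()))
                .collect(Collectors.joining(", "));
        return "SELECT " + cols + " FROM " + table;
    }

    // #{...} placeholders are bound by MyBatis from the parameter object's properties.
    static String insert(Class<?> type, String table) {
        List<Field> fields = columnFields(type);
        String cols = fields.stream().map(f -> toColumn(f.getName())).collect(Collectors.joining(", "));
        String values = fields.stream().map(f -> "#{" + f.getName() + "}").collect(Collectors.joining(", "));
        return "INSERT INTO " + table + " (" + cols + ") VALUES (" + values + ")";
    }
}

// Hypothetical model mirroring the User fields used elsewhere in this post.
class DemoUser {
    private String id;
    private String usrName;
    private String usrPassword;
}
```

SqlSketch.selectAll(DemoUser.class, "tb_user") yields SELECT id, usr_name, usr_password FROM tb_user. The column side is converted by hand and the #{usrName} side keeps the Java property name, which is exactly why note 2 says the conversion must be done manually.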

• The basic MybatisProvider setup is now in place. Next, let every mapper interface extend this base Mapper, so that BaseMapper handles all basic CRUD operations:

package com.springboot.mybatis.demo.mapper;

import com.springboot.mybatis.demo.mapper.common.BaseMapper;
import com.springboot.mybatis.demo.model.User;

public interface UserMapper extends BaseMapper<User, String> {
}

Now UserMapper no longer has to deal with those basic operations, wonderful !!!

5. Integrating JSP

• Add the core dependencies

compile group: 'org.springframework.boot', name: 'spring-boot-starter-web', version: '2.0.0.RELEASE'

// This must use compile (or the default) scope, not providedRuntime, otherwise JSPs cannot be rendered

compile group: 'org.apache.tomcat.embed', name: 'tomcat-embed-jasper', version: '9.0.6'

providedRuntime group: 'org.springframework.boot', name: 'spring-boot-starter-tomcat', version: '2.0.2.RELEASE' // avoids conflicts between the embedded Tomcat and an external Tomcat; not needed if you only use the embedded one

These are the two essential dependencies. spring-boot-starter-web pulls in Tomcat, the so-called embedded Tomcat of Spring Boot, and tomcat-embed-jasper is what compiles JSPs; if it is missing or broken, JSP pages cannot be rendered.

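• Modify the Application class

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.boot.builder.SpringApplicationBuilder;
import org.springframework.boot.web.servlet.support.SpringBootServletInitializer;
import org.springframework.transaction.annotation.EnableTransactionManagement;

@EnableTransactionManagement
@SpringBootApplication
public class Application extends SpringBootServletInitializer {

    // Entry point when the WAR is deployed to an external servlet container
    @Override
    protected SpringApplicationBuilder configure(SpringApplicationBuilder application) {
        setRegisterErrorPageFilter(false);
        return application.sources(Application.class);
    }

    // Entry point when the application runs directly with the embedded Tomcat
    public static void main(String[] args) throws Exception {
        SpringApplication.run(Application.class, args);
    }
}

Note: the application class must extend SpringBootServletInitializer and override configure; this is what lets the WAR start inside an external Tomcat.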

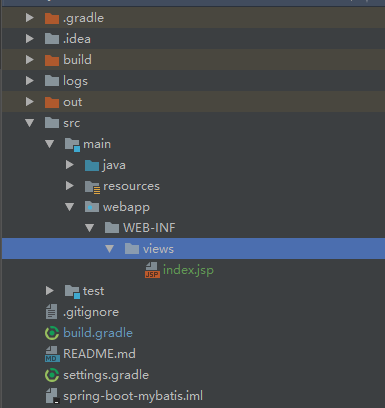

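• Create the JSP pages (see the directory layout in the screenshot)

• Next, configure how JSP pages are resolved. There are two options:

Option 1: configure it in application.properties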

spring.mvc.view.prefix=/WEB-INF/views/

spring.mvc.view.suffix=.jsp

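Then create a controller. Note: to return a JSP page you must use @Controller, not @RestController; @RestController writes the return value directly into the response body instead of resolving it as a view name.

import org.springframework.stereotype.Controller;
import org.springframework.web.bind.annotation.GetMapping;

// @Controller, not @RestController: the returned String is treated as a view name
@Controller
public class IndexController {

    @GetMapping("/")
    public String index() {
        return "index"; // resolved to /WEB-INF/views/index.jsp via the prefix and suffix above
    }
}

Option 2: use a configuration class. A request to http://localhost:8080/ then returns index.jsp directly, with no controller code to write: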

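import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.web.servlet.config.annotation.DefaultServletHandlerConfigurer;
import org.springframework.web.servlet.config.annotation.EnableWebMvc;
import org.springframework.web.servlet.config.annotation.ViewControllerRegistry;
import org.springframework.web.servlet.config.annotation.WebMvcConfigurer;
import org.springframework.web.servlet.view.InternalResourceViewResolver;

@Configuration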

@EnableWebMvc

public class WebMvcConfig implements WebMvcConfigurer {

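// Note: @EnableWebMvc switches off Spring Boot's MVC auto-configuration, including serving static content from /static (or /public, /resources, /META-INF/resources)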

@Bean

public InternalResourceViewResolver viewResolver() {

InternalResourceViewResolver resolver = new InternalResourceViewResolver();

resolver.setPrefix("/WEB-INF/views/");

resolver.setSuffix(".jsp");

return resolver;

}

@Override

public void addViewControllers(ViewControllerRegistry registry) {

registry.addViewController("/").setViewName("index");

}

// Forwards unmapped requests to the container's default servlet; without this override index.jsp cannot be found

@Override

public void configureDefaultServletHandling(DefaultServletHandlerConfigurer configurer) {

configurer.enable();

}

}

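6. Integrating Shiro authentication, authorization and sessions

• Shiro's core features

Authentication, authorization, session management, caching, cryptography

• Integrating authentication

(1) Dependencies (pick what you need; the following is just what I used, for reference ↓)

// shiro

compile group: 'org.apache.shiro', name: 'shiro-core', version: '1.3.2' // required, Shiro core

compile group: 'org.apache.shiro', name: 'shiro-web', version: '1.3.2' // web integration

compile group: 'org.apache.shiro', name: 'shiro-spring', version: '1.3.2' // Spring integration

compile group: 'org.apache.shiro', name: 'shiro-ehcache', version: '1.3.2' // Shiro caching (Ehcache)

(2) Shiro configuration class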

import com.springboot.mybatis.demo.config.realm.ShiroRealmOne;
import org.apache.shiro.cache.ehcache.EhCacheManager;
import org.apache.shiro.session.mgt.eis.EnterpriseCacheSessionDAO;
import org.apache.shiro.session.mgt.eis.JavaUuidSessionIdGenerator;
import org.apache.shiro.session.mgt.eis.SessionDAO;
import org.apache.shiro.spring.web.ShiroFilterFactoryBean;
import org.apache.shiro.web.mgt.DefaultWebSecurityManager;
import org.apache.shiro.web.session.mgt.DefaultWebSessionManager;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

import java.util.LinkedHashMap;
import java.util.Map;

@Configuration
public class ShiroConfig {

    @Bean(name = "shiroFilter")
    public ShiroFilterFactoryBean shiroFilterFactoryBean() {
        ShiroFilterFactoryBean shiroFilterFactoryBean = new ShiroFilterFactoryBean();
        // Filter chain map. Order matters: Shiro uses the first pattern that matches, so "/**" must come last
        Map<String, String> filterChainDefinitionMap = new LinkedHashMap<>();
        // Paths that are never intercepted
        filterChainDefinitionMap.put("/static/**", "anon");
        // Logout
        filterChainDefinitionMap.put("/logout", "logout");
        // Paths that require authentication and the admin role.
        // Roles listed in roles[...] are ANDed (the user needs all of them); for OR semantics, write a custom filter
        filterChainDefinitionMap.put("/user/**", "authc,roles[admin]");
        // Everything else requires authentication
        filterChainDefinitionMap.put("/**", "authc");
        shiroFilterFactoryBean.setFilterChainDefinitionMap(filterChainDefinitionMap);
        // Login page
        shiroFilterFactoryBean.setLoginUrl("/login");
        // Where to go after a successful login
        shiroFilterFactoryBean.setSuccessUrl("/index");
        // Where to go when an authenticated user lacks the required role or permission
        shiroFilterFactoryBean.setUnauthorizedUrl("/login");
        shiroFilterFactoryBean.setSecurityManager(securityManager());
        return shiroFilterFactoryBean;
    }

    @Bean
    public ShiroRealmOne shiroRealmOne() {
        // Custom realm holding our authentication and authorization rules
        ShiroRealmOne realm = new ShiroRealmOne();
        return realm;
    }

    @Bean(name = "securityManager")
    public DefaultWebSecurityManager securityManager() {
        DefaultWebSecurityManager securityManager = new DefaultWebSecurityManager();
        securityManager.setRealm(shiroRealmOne());
        securityManager.setCacheManager(ehCacheManager());
        securityManager.setSessionManager(sessionManager());
        return securityManager;
    }

    // Caches user information
    @Bean(name = "ehCacheManager")
    public EhCacheManager ehCacheManager() {
        return new EhCacheManager();
    }

    // Shiro session DAO backed by the cache
    @Bean(name = "shiroCachingSessionDAO")
    public SessionDAO shiroCachingSessionDAO() {
        EnterpriseCacheSessionDAO sessionDao = new EnterpriseCacheSessionDAO();
        sessionDao.setSessionIdGenerator(new JavaUuidSessionIdGenerator()); // session id generator
        sessionDao.setCacheManager(ehCacheManager()); // cache
        return sessionDao;
    }

    @Bean(name = "sessionManager")
    public DefaultWebSessionManager sessionManager() {
        DefaultWebSessionManager defaultWebSessionManager = new DefaultWebSessionManager();
        defaultWebSessionManager.setGlobalSessionTimeout(1000 * 60); // 60,000 ms = 1 minute
        defaultWebSessionManager.setSessionDAO(shiroCachingSessionDAO());
        return defaultWebSessionManager;
    }
}
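Why "/user/**" has to be registered before "/**" can be shown with a small model of first-match resolution over an insertion-ordered map. This is a simplified sketch: ChainSketch is an illustrative name, and its matcher supports only exact paths and a trailing "/**", not Shiro's full Ant-style matching.

```java
import java.util.Map;

final class ChainSketch {
    // Tiny subset of Ant-style matching: "/x/**" matches /x and everything below it;
    // any other pattern must match the path exactly.
    static boolean matches(String pattern, String path) {
        if (pattern.endsWith("/**")) {
            String base = pattern.substring(0, pattern.length() - 3);
            return path.equals(base) || path.startsWith(base + "/");
        }
        return pattern.equals(path);
    }

    // First match wins, in the map's iteration order (hence LinkedHashMap in ShiroConfig).
    static String chainFor(Map<String, String> chains, String path) {
        for (Map.Entry<String, String> e : chains.entrySet()) {
            if (matches(e.getKey(), path)) return e.getValue();
        }
        return null;
    }
}
```

If "/**" is put into the map before "/user/**", a request to /user/list resolves to plain authc and the roles[admin] check never runs. With "/user/**" first, the same request gets authc,roles[admin].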

Custom realm: extends AuthorizingRealm to implement a simple login check

package com.springboot.mybatis.demo.config.realm;

import com.springboot.mybatis.demo.model.Permission;
import com.springboot.mybatis.demo.model.Role;
import com.springboot.mybatis.demo.model.User;
import com.springboot.mybatis.demo.service.PermissionService;
import com.springboot.mybatis.demo.service.RoleService;
import com.springboot.mybatis.demo.service.UserService;
import org.apache.shiro.SecurityUtils;
import org.apache.shiro.authc.*;
import org.apache.shiro.authz.AuthorizationInfo;
import org.apache.shiro.authz.SimpleAuthorizationInfo;
import org.apache.shiro.realm.AuthorizingRealm;
import org.apache.shiro.session.Session;
import org.apache.shiro.subject.PrincipalCollection;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;

import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;

public class ShiroRealmOne extends AuthorizingRealm {

    private Logger logger = LoggerFactory.getLogger(this.getClass());

    @Autowired
    private UserService userServiceImpl;

    @Autowired
    private RoleService roleServiceImpl;

    @Autowired
    private PermissionService permissionServiceImpl;

    // Authorization (not covered in this post; can be skipped)
    @Override
    protected AuthorizationInfo doGetAuthorizationInfo(PrincipalCollection principalCollection) {
        logger.info("doGetAuthorizationInfo+" + principalCollection.toString());
        User user = userServiceImpl.findByUserName((String) principalCollection.getPrimaryPrincipal());
        List<Role> roleList = roleServiceImpl.findByUserId(user.getId());
        if (roleList == null) {
            roleList = new ArrayList<>(); // avoid an NPE in the loop below
        }
        List<Permission> permissionList = !roleList.isEmpty()
                ? permissionServiceImpl.findByRoleIds(roleList.stream().map(Role::getId).collect(Collectors.toList()))
                : new ArrayList<>();
        SecurityUtils.getSubject().getSession().setAttribute(String.valueOf(user.getId()), SecurityUtils.getSubject().getPrincipals());
        SimpleAuthorizationInfo simpleAuthorizationInfo = new SimpleAuthorizationInfo();
        // Grant roles
        for (Role role : roleList) {
            simpleAuthorizationInfo.addRole(role.getRolName());
        }
        // Grant permissions
        for (Permission permission : permissionList) {
            simpleAuthorizationInfo.addStringPermission(permission.getPrmName());
        }
        return simpleAuthorizationInfo;
    }

    // Authentication
    @Override
    protected AuthenticationInfo doGetAuthenticationInfo(AuthenticationToken authenticationToken) throws AuthenticationException {
        logger.info("doGetAuthenticationInfo +" + authenticationToken.toString());
        UsernamePasswordToken token = (UsernamePasswordToken) authenticationToken;
        String userName = token.getUsername();
        logger.info(userName); // log the user name only, never the password
        User user = userServiceImpl.findByUserName(token.getUsername());
        if (user != null) {
            Session session = SecurityUtils.getSubject().getSession();
            session.setAttribute("user", user);
            // Shiro compares this stored credential with the submitted password
            return new SimpleAuthenticationInfo(userName, user.getUsrPassword(), getName());
        } else {
            // Returning null makes Shiro treat the login as failed (unknown account)
            return null;
        }
    }
}

That completes a simple Shiro authentication setup; next, let's verify it.

Controller:

package com.springboot.mybatis.demo.controller;

import com.springboot.mybatis.demo.common.utils.SelfStringUtils;
import com.springboot.mybatis.demo.controller.common.BaseController;
import com.springboot.mybatis.demo.model.User;
import org.apache.shiro.SecurityUtils;
import org.apache.shiro.authc.AuthenticationException;
import org.apache.shiro.authc.UsernamePasswordToken;
import org.apache.shiro.subject.Subject;
import org.springframework.stereotype.Controller;
import org.springframework.ui.Model;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.PostMapping;

@Controller
public class IndexController extends BaseController {

    @PostMapping("login")
    public String login(User user, Model model) {
        if (user == null || SelfStringUtils.isEmpty(user.getUsrName()) || SelfStringUtils.isEmpty(user.getUsrPassword())) {
            model.addAttribute("warn", "Please enter both user name and password!");
            return "login";
        }
        Subject subject = SecurityUtils.getSubject();
        UsernamePasswordToken token = new UsernamePasswordToken(user.getUsrName(), user.getUsrPassword());
        token.setRememberMe(true);
        try {
            // Shiro authenticates through ShiroRealmOne.doGetAuthenticationInfo
            subject.login(token);
        } catch (AuthenticationException e) {
            model.addAttribute("error", "Wrong user name or password, please log in again!");
            return "login";
        }
        return "index";
    }

    @GetMapping("login")
    public String index() {
        return "login";
    }
}

login jsp:

<%-- Created by IntelliJ IDEA. User: Administrator Date: 2018/7/29 Time: 14:34 To change this template use File | Settings | File Templates. --%>
<%@ page contentType="text/html;charset=UTF-8" language="java" %>
<html>
<head>
<title>Login</title>
</head>
<body>
<form action="login" method="POST">
User Name: <input type="text" name="usrName"> <br />
User Password: <input type="password" name="usrPassword" />
<input type="submit" value="Submit" />
</form>
<span style="color: #b3b20a;">${warn}</span>
<span style="color:#b3130f;">${error}</span>
</body>
</html>

index jsp:

<%-- Created by IntelliJ IDEA. User: pc Date: 2018/7/23 Time: 14:02 To change this template use File | Settings | File Templates. --%>
<%@ page contentType="text/html;charset=UTF-8" language="java" %>
<html>
<head>
<title>Title</title>
</head>
<body>
<h1>Welcome to here!</h1>
</body>
</html>

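Expected behavior:

1. When not logged in, requesting anything other than login redirects back to the login page

2. A failed login shows the wrong user name or password message

3. Submitting without both user name and password shows the please-enter-both message

4. After a successful login you land on the index page; users without the admin role cannot access /user/**, everything else is accessible

7. Deploying the project with Docker

(1) Basic deployment

• Write the Dockerfile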

FROM docker.io/williamyeh/java8

VOLUME /tmp

VOLUME /opt/workspace

#COPY /build/libs/spring-boot-mybatis-1.0-SNAPSHOT.war /opt/workspace/app.jar

EXPOSE 8080

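# The jar is expected at /opt/workspace/app.jar: either in the host directory mounted there, or copied in by the COPY line above
ENTRYPOINT ["java","-jar","/opt/workspace/app.jar"]

This creates a mount point for the working directory so it can be mounted from the host; alternatively, copy the jar straight into the image. Pick one of the two; I prefer the former.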

• In the directory containing the Dockerfile, run docker build -t image-name:tag . to build the image

• The project uses MySQL, so a MySQL container is needed as well: docker run --name mysql -v /home/vagrant/docker-compose/spring-boot-compose/enjoy-dir/mysql/data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root -d mysql:5.7

• Run the project image built above: docker run --name myproject -v /home/vagrant/workspace/:/opt/workspace --link mysql:mysql -p 8080:8080 -d image-name. Adjust the mounted directory /home/vagrant/workspace to your own setup

• Test by visiting port 8080

(2) Single-host deployment managed with docker-compose (prerequisite: docker-compose is installed)

• Write docker-compose.yml (besides MySQL, a Redis service is added here)

version: '3'

services:
  db:
    image: docker.io/mysql:5.7
    command: --default-authentication-plugin=mysql_native_password
    container_name: db
    volumes:
      - /home/vagrant/docker-compose/spring-boot-compose/enjoy-dir/mysql/data:/var/lib/mysql
      - /home/vagrant/docker-compose/spring-boot-compose/enjoy-dir/mysql/logs:/var/log/mysql
    environment:
      MYSQL_ROOT_PASSWORD: root
      MYSQL_USER: 'test'
      MYSQL_PASSWORD: 'test' # the official mysql image reads MYSQL_PASSWORD (MYSQL_PASS is ignored)
    restart: always
    networks:
      - default

  redis:
    image: docker.io/redis
    container_name: redis
    command: redis-server /usr/local/etc/redis/redis.conf
    volumes:
      - /home/vagrant/docker-compose/spring-boot-compose/enjoy-dir/redis/data:/data
      - /home/vagrant/docker-compose/spring-boot-compose/enjoy-dir/redis/redis.conf:/usr/local/etc/redis/redis.conf
    networks:
      - default

  spring-boot:
    build:
      context: ./enjoy-dir/workspace
      dockerfile: Dockerfile
    image: spring-boot:1.0-SNAPSHOT
    depends_on:
      - db
      - redis
    links:
      - db:mysql
      - redis:redis
    ports:
      - "8080:8080"
    volumes:
      - /home/vagrant/docker-compose/spring-boot-compose/enjoy-dir/workspace:/opt/workspace
    networks:
      - default

networks:
  default:
    driver: bridge

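Note: adjust the mount directories to your own environment. The Redis password can be set in redis.conf; for details see: https://woodenrobot.me/2018/09/03/%E4%BD%BF%E7%94%A8-docker-compose-%E5%9C%A8-Docker-%E4%B8%AD%E5%90%AF%E5%8A%A8%E5%B8%A6%E5%AF%86%E7%A0%81%E7%9A%84-Redis/

• In the directory containing docker-compose.yml, run docker-compose up. A problem I hit along the way: the application could not connect to MySQL, because root could not log in from outside the container. The fix is to enter MySQL and allow remote access for root; see: https://www.cnblogs.com/goxcheer/p/8797377.html

• Test by visiting port 8080

(3) Multi-host distributed deployment with docker swarm

• Write the compose file, based on Compose file format 3.0 (see the official documentation for all options):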

version: '3'

services:
  db:
    image: docker.io/mysql:5.7
    command: --default-authentication-plugin=mysql_native_password # password authentication plugin
    volumes:
      - "/home/vagrant/docker-compose/spring-boot-compose/enjoy-dir/mysql/data:/var/lib/mysql"
      - "/home/vagrant/docker-compose/spring-boot-compose/enjoy-dir/mysql/logs:/var/log/mysql"
    environment:
      MYSQL_ROOT_PASSWORD: 'root'
      MYSQL_USER: 'test'
      MYSQL_PASSWORD: 'test' # the official mysql image reads MYSQL_PASSWORD (MYSQL_PASS is ignored)
    restart: always # restart automatically; ignored by docker stack deploy, which uses deploy.restart_policy instead
    networks: # attach the mysql container to the mynet overlay network; any container on it can reach the database via the alias mysql
      mynet:
        aliases:
          - mysql
    ports:
      - "3306:3306"
    deploy: # settings used when deploying with swarm
      replicas: 1 # number of tasks started for this service when the stack is deployed
      restart_policy: # restart policy
        condition: on-failure
      placement: # which nodes the service may run on
        constraints: [node.role == worker]

  redis:
    image: docker.io/redis
    command: redis-server /usr/local/etc/redis/redis.conf
    volumes:
      - "/home/vagrant/docker-compose/spring-boot-compose/enjoy-dir/redis/data:/data"
      - "/home/vagrant/docker-compose/spring-boot-compose/enjoy-dir/redis/redis.conf:/usr/local/etc/redis/redis.conf"
    networks:
      mynet:
        aliases:
          - redis
    ports:
      - "6379:6379"
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints: [node.role == worker]

  spring-boot:
    build: # ignored by docker stack deploy; the image must already be available to the nodes
      context: ./enjoy-dir/workspace
      dockerfile: Dockerfile
    image: spring-boot:1.0-SNAPSHOT
    depends_on:
      - db
      - redis
    ports:
      - "8080:8080"
    volumes:
      - "/home/vagrant/docker-compose/spring-boot-compose/enjoy-dir/workspace:/opt/workspace"
    networks:
      mynet:
        aliases:
          - spring-boot
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints: [node.role == worker]

networks:
  mynet:
    driver: overlay

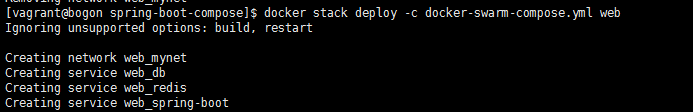

• Once the compose file is ready, run docker stack deploy -c [compose file path] [stack name], as follows:

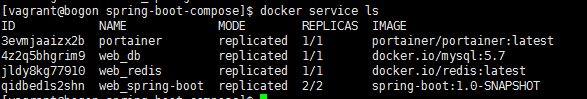

When it finishes, list the services on the manager node with docker service ls:

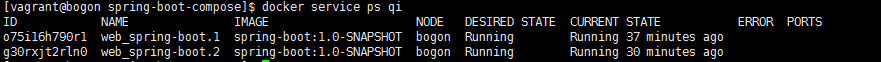

and check the status of each service:

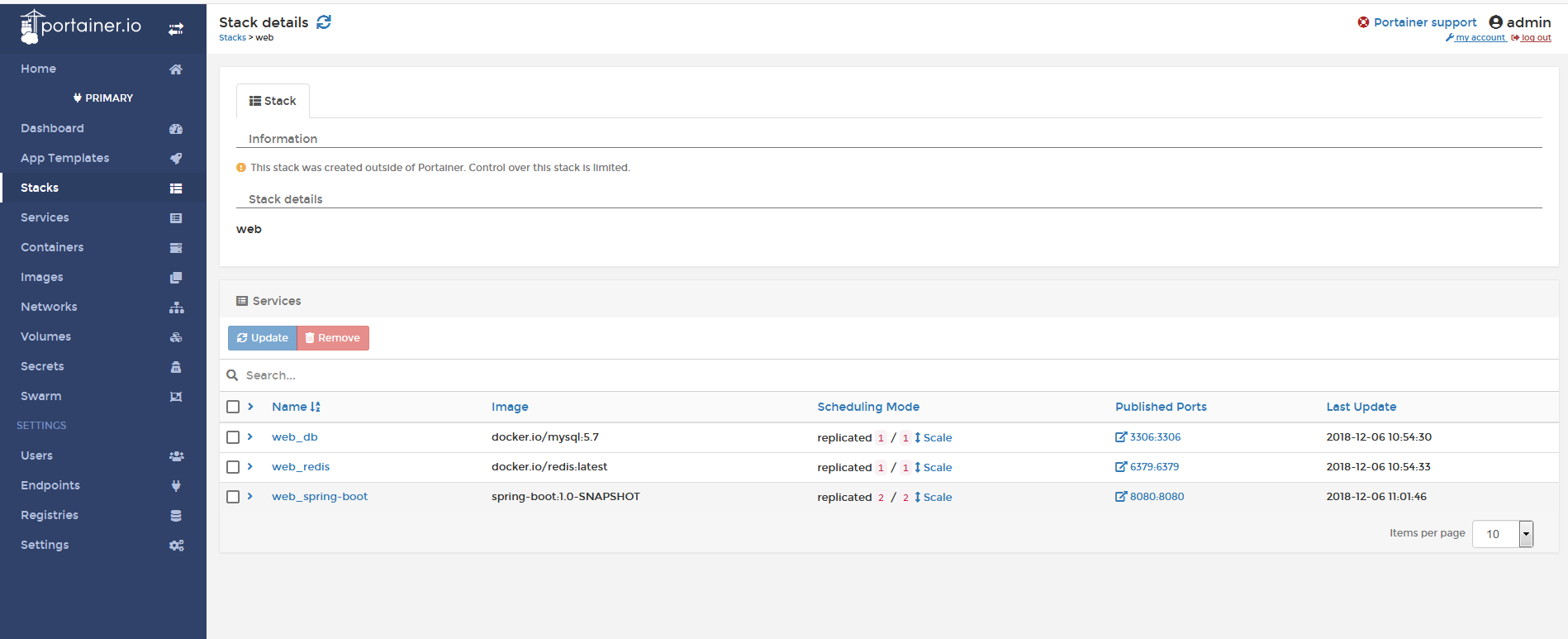

• Manage the Swarm visually with Portainer. First install Portainer on any one of the machines; for installation details see: http://www.pangxieke.com/linux/use-protainer-manage-docker.html

Once it is installed, you can easily scale your containers (that is, the services) horizontally from its UI and set the scale freely.

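Summary: creating containers with basic docker commands works well when there are only a few of them, but what if there are many? -> Use docker-compose to manage containers in groups. This greatly improves efficiency, because a single command starts or stops several containers. On a single host docker-compose copes well, but what about multiple hosts? -> Use docker swarm. With docker swarm you no longer deploy machine by machine, however many machines there are: swarm automatically places containers on machines with enough resources, and efficient distributed deployment becomes "so easy"...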

8. Read/write splitting

Read/write splitting reduces the load on a single database and improves access speed.

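(1) Configure the databases (replace the original database configuration with the following; only two databases, master and slave1, are configured here)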

#----------------------------------------- Database connection (single database) ----------------------------------------
#spring.datasource.url=jdbc:mysql://localhost:3306/liuzj?useUnicode=true&characterEncoding=gbk&zeroDateTimeBehavior=convertToNull
#spring.datasource.username=root
#spring.datasource.password=
#spring.datasource.driver-class-name=com.mysql.jdbc.Driver
#spring.datasource.type=com.alibaba.druid.pool.DruidDataSource
#----------------------------------------- Database connection (single database) ----------------------------------------

#----------------------------------------- Database connection (read/write splitting) ----------------------------------------
# These keys are bound directly onto the pool built by DataSourceBuilder. With HikariCP (the Spring Boot 2.x default)
# the URL property is jdbc-url rather than url, which also matches the jdbcUrl field of the DatasourceConfig class in section 10.
# master (writes)
spring.datasource.master.jdbc-url=jdbc:mysql://192.168.10.16:3306/test
spring.datasource.master.username=root
spring.datasource.master.password=123456
spring.datasource.master.driver-class-name=com.mysql.jdbc.Driver
# slave1 (reads)
spring.datasource.slave1.jdbc-url=jdbc:mysql://192.168.10.17:3306/test
spring.datasource.slave1.username=test
spring.datasource.slave1.password=123456
spring.datasource.slave1.driver-class-name=com.mysql.jdbc.Driver
#----------------------------------------- Database connection (read/write splitting) ----------------------------------------

(2) Change the dataSource initialization (replace the original dataSource bean with the following)

// ----------------------------------- single data source: start ----------------------------------------
// @Bean
// @ConfigurationProperties(prefix = "spring.datasource")
// public DataSource dataSource() {
//     DruidDataSource druidDataSource = new DruidDataSource();
//     // maximum number of connections in the pool
//     druidDataSource.setMaxActive(Application.DEFAULT_DATASOURCE_MAX_ACTIVE);
//     // minimum number of idle connections
//     druidDataSource.setMinIdle(Application.DEFAULT_DATASOURCE_MIN_IDLE);
//     // timeout when waiting to obtain a connection
//     druidDataSource.setMaxWait(Application.DEFAULT_DATASOURCE_MAX_WAIT);
//     return druidDataSource;
// }
// ----------------------------------- single data source: end ----------------------------------------

// ----------------------------------- multiple data sources (read/write splitting): start ----------------------------------------
@Bean
@ConfigurationProperties("spring.datasource.master")
public DataSource masterDataSource() {
    return DataSourceBuilder.create().build();
}

@Bean
@ConfigurationProperties("spring.datasource.slave1")
public DataSource slave1DataSource() {
    return DataSourceBuilder.create().build();
}

@Bean
public DataSource myRoutingDataSource(@Qualifier("masterDataSource") DataSource masterDataSource,
                                      @Qualifier("slave1DataSource") DataSource slave1DataSource) {
    Map<Object, Object> targetDataSources = new HashMap<>(2);
    targetDataSources.put(DBTypeEnum.MASTER, masterDataSource);
    targetDataSources.put(DBTypeEnum.SLAVE1, slave1DataSource);
    MyRoutingDataSource myRoutingDataSource = new MyRoutingDataSource();
    // used whenever no lookup key has been set for the current thread
    myRoutingDataSource.setDefaultTargetDataSource(masterDataSource);
    myRoutingDataSource.setTargetDataSources(targetDataSources);
    return myRoutingDataSource;
}

@Resource
MyRoutingDataSource myRoutingDataSource;
// ----------------------------------- multiple data sources (read/write splitting): end ----------------------------------------

(3) Switch data sources dynamically with AOP (Mycat is an alternative; look up its configuration yourself)

/**
 * @author admin
 * @date 2019-02-27
 */
@Aspect
@Component
public class DataSourceAspect {

    @Pointcut("!@annotation(com.springboot.mybatis.demo.config.annotation.Master) " +
            "&& (execution(* com.springboot.mybatis.demo.service..*.select*(..)) " +
            "|| execution(* com.springboot.mybatis.demo.service..*.get*(..)) " +
            "|| execution(* com.springboot.mybatis.demo.service..*.find*(..)))")
    public void readPointcut() {
    }

    @Pointcut("@annotation(com.springboot.mybatis.demo.config.annotation.Master) " +
            "|| execution(* com.springboot.mybatis.demo.service..*.insert*(..)) " +
            "|| execution(* com.springboot.mybatis.demo.service..*.add*(..)) " +
            "|| execution(* com.springboot.mybatis.demo.service..*.update*(..)) " +
            "|| execution(* com.springboot.mybatis.demo.service..*.edit*(..)) " +
            "|| execution(* com.springboot.mybatis.demo.service..*.delete*(..)) " +
            "|| execution(* com.springboot.mybatis.demo.service..*.remove*(..))")
    public void writePointcut() {
    }

    @Before("readPointcut()")
    public void read() {
        DBContextHolder.slave();
    }

    @Before("writePointcut()")
    public void write() {
        DBContextHolder.master();
    }

    /**
     * Alternative: use if...else to decide which methods read from the slave; everything else goes to the master
     */
    // @Before("execution(* com.springboot.mybatis.demo.service.impl.*.*(..))")
    // public void before(JoinPoint jp) {
    //     String methodName = jp.getSignature().getName();
    //
    //     if (StringUtils.startsWithAny(methodName, "get", "select", "find")) {
    //         DBContextHolder.slave();
    //     } else {
    //         DBContextHolder.master();
    //     }
    // }
}
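The naming rule behind these pointcuts can be checked without Spring or AspectJ. The sketch below is plain Java and mirrors the commented-out if...else variant: methods starting with select/get/find go to the slave, and everything else goes to the master. The original pointcuts differ slightly, because a method that matches neither pointcut gets no switch at all. `DbType` and `DataSourceRouting.route` are hypothetical names, and the `@Master` override is modeled as a boolean flag.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for the project's DBTypeEnum
enum DbType { MASTER, SLAVE1 }

final class DataSourceRouting {
    // Method-name prefixes that readPointcut() sends to the slave
    private static final List<String> READ_PREFIXES = Arrays.asList("select", "get", "find");

    private DataSourceRouting() {
    }

    /**
     * Decides which data source a service method should use.
     *
     * @param methodName      name of the intercepted service method
     * @param annotatedMaster true if the method carries the @Master annotation
     */
    static DbType route(String methodName, boolean annotatedMaster) {
        if (annotatedMaster) {
            return DbType.MASTER; // @Master always forces the write database
        }
        for (String prefix : READ_PREFIXES) {
            if (methodName.startsWith(prefix)) {
                return DbType.SLAVE1;
            }
        }
        return DbType.MASTER; // insert/update/delete and anything unrecognized go to the master
    }
}
```

Inside the aspect, the result would simply decide between `DBContextHolder.slave()` and `DBContextHolder.master()`.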

(4) The above covers only the main configuration and steps. Classes such as DBContextHolder are not shown here; see GitHub for the full code.
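DBContextHolder is not shown in the post. One common shape for it, sketched here under assumptions, is a ThreadLocal that holds the current lookup key:

- The enum values mirror `DBTypeEnum.MASTER`/`SLAVE1` used in `myRoutingDataSource()`.
- `master()` and `slave()` match the calls in `DataSourceAspect`.
- `get()` and `clear()` are hypothetical.

In the real project, `MyRoutingDataSource` would presumably extend Spring's `AbstractRoutingDataSource` and return `DBContextHolder.get()` from `determineCurrentLookupKey()`. That Spring part is left out so the sketch runs on a plain JDK.

```java
// Mirrors the DBTypeEnum keys registered in myRoutingDataSource()
enum DBTypeEnum { MASTER, SLAVE1 }

final class DBContextHolder {
    // Each request thread carries its own lookup key
    private static final ThreadLocal<DBTypeEnum> CONTEXT = new ThreadLocal<>();

    private DBContextHolder() {
    }

    static void master() {
        CONTEXT.set(DBTypeEnum.MASTER);
    }

    static void slave() {
        // Only one slave is configured; with several, a round-robin choice could go here
        CONTEXT.set(DBTypeEnum.SLAVE1);
    }

    /** Current key, or null if none was set (the routing data source then falls back to its default, the master). */
    static DBTypeEnum get() {
        return CONTEXT.get();
    }

    /** Hypothetical cleanup hook, e.g. for an @After advice, so pooled threads do not keep a stale key. */
    static void clear() {
        CONTEXT.remove();
    }
}
```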

(5) Setting up the master and slave databases

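Configure the master

vi /etc/my.cnf   # edit the config file and add the following under the [mysqld] section

server-id=1   # server ID; it must be unique in the replication setup (1 is used for the master here)
log_bin=mysql-bin   # enable the MySQL binary log
binlog-do-db=abc   # database to replicate; for several databases repeat this option, one database per line
binlog-ignore-db=mysql   # databases not written to the binlog; also one per line, since a comma-separated
binlog-ignore-db=information_schema   # value would be read as a single database name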

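Restart the master database

docker restart mysql

Log in to the master database. Create the replication account that the slave connects with (master_user='slave' in the change master statement below), then check the master status:

CREATE USER 'slave'@'%' IDENTIFIED BY '123456';

GRANT REPLICATION SLAVE ON *.* TO 'slave'@'%';

show master status;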

+------------------+----------+--------------+--------------------------+
| File             | Position | Binlog_Do_DB | Binlog_Ignore_DB         |
+------------------+----------+--------------+--------------------------+
| mysql-bin.000001 |     2722 |              | mysql,information_schema |
+------------------+----------+--------------+--------------------------+

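Configure the slave

vi /etc/my.cnf   # edit the config file and add the following under the [mysqld] section

server-id=2   # server ID; any value works as long as it differs from the master's
log_bin=mysql-bin   # enable the binary log; only needed if this slave has slaves of its own (chained replication also needs log_slave_updates)
binlog-do-db=abc   # database written to this server's binlog; one database per line; only needed if this slave has slaves of its own
binlog-ignore-db=mysql   # databases excluded from this server's binlog, one per line;
binlog-ignore-db=information_schema   # only needed if this slave has slaves of its own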

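Restart the slave database

docker restart mysql2

Log in to the slave database and run:

change master to master_host='192.168.0.133',master_user='slave',master_password='123456',master_log_file='mysql-bin.000001',master_log_pos=2722; -- mysql-bin.000001 and 2722 are the File and Position values from show master status on the master

start slave; -- start replication on the slave

show slave status; -- check the slave's replication status

In the output, Slave_IO_Running and Slave_SQL_Running should both be Yes.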

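Note: with master/slave data sources, keep in mind that a transaction is bound to a single data source (one connection). If a transactional service method contains both a query and an insert, the data source is not switched between the two operations. There are two workarounds: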

1. Do not mix queries with inserts/updates in one transactional service method. Move the query to an outer layer, e.g. the controller layer.

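2. Run the query or the insert/update on a separate thread. Within a transaction the connection is taken from a ThreadLocal, so a new thread obtains a fresh connection instead of the one bound to the current thread. The work done on the new thread is therefore outside the original transaction.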
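The second workaround relies on a plain JDK fact: a value stored in a ThreadLocal on one thread is not visible to a thread started afterwards (InheritableThreadLocal aside). The demo below shows only that ThreadLocal behavior, with an illustrative key standing in for the thread-bound connection or routing key. It does not use Spring's transaction machinery.

```java
import java.util.concurrent.atomic.AtomicReference;

final class ThreadLocalDemo {
    // Stands in for thread-bound state such as the routing key or the transactional connection
    private static final ThreadLocal<String> CURRENT_KEY = new ThreadLocal<>();

    private ThreadLocalDemo() {
    }

    /** Stores key on the calling thread, then returns what a newly started thread reads. */
    static String valueSeenByNewThread(String key) {
        CURRENT_KEY.set(key);
        AtomicReference<String> seen = new AtomicReference<>("not read yet");
        Thread worker = new Thread(() -> seen.set(CURRENT_KEY.get()));
        worker.start();
        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for the worker", e);
        }
        return seen.get();
    }

    /** What the calling thread itself still sees. */
    static String valueSeenByCurrentThread() {
        return CURRENT_KEY.get();
    }
}
```

The new thread reads `null`, while the calling thread keeps its value. The new thread therefore also has to set its own routing key (e.g. via DBContextHolder) before touching the database.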

Summary: references: https://www.cnblogs.com/cjsblog/p/9712457.html & https://www.cnblogs.com/liujiaq/p/6125814.html

9. Integrating Quartz for distributed scheduled jobs

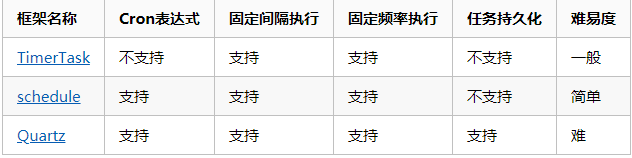

• A comparison of several classic scheduling options:

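Spring's built-in @Scheduled timer runs inside a single application instance and does not support distributed environments. Supporting a cluster requires the scheduling-control plugin spring-scheduling-cluster. It controls execution by locking tasks and works with any middleware that can provide a distributed lock.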

(1) Initialize the database schema (the script can also be downloaded from the official site)

drop table if exists qrtz_fired_triggers;
drop table if exists qrtz_paused_trigger_grps;
drop table if exists qrtz_scheduler_state;
drop table if exists qrtz_locks;
drop table if exists qrtz_simple_triggers;
drop table if exists qrtz_simprop_triggers;
drop table if exists qrtz_cron_triggers;
drop table if exists qrtz_blob_triggers;
drop table if exists qrtz_triggers;
drop table if exists qrtz_job_details;
drop table if exists qrtz_calendars;

create table qrtz_job_details (
  sched_name varchar(120) not null,
  job_name varchar(120) not null,
  job_group varchar(120) not null,
  description varchar(250) null,
  job_class_name varchar(250) not null,
  is_durable varchar(1) not null,
  is_nonconcurrent varchar(1) not null,
  is_update_data varchar(1) not null,
  requests_recovery varchar(1) not null,
  job_data blob null,
  primary key (sched_name,job_name,job_group)
);

create table qrtz_triggers (
  sched_name varchar(120) not null,
  trigger_name varchar(120) not null,
  trigger_group varchar(120) not null,
  job_name varchar(120) not null,
  job_group varchar(120) not null,
  description varchar(250) null,
  next_fire_time bigint(13) null,
  prev_fire_time bigint(13) null,
  priority integer null,
  trigger_state varchar(16) not null,
  trigger_type varchar(8) not null,
  start_time bigint(13) not null,
  end_time bigint(13) null,
  calendar_name varchar(200) null,
  misfire_instr smallint(2) null,
  job_data blob null,
  primary key (sched_name,trigger_name,trigger_group),
  foreign key (sched_name,job_name,job_group) references qrtz_job_details(sched_name,job_name,job_group)
);

create table qrtz_simple_triggers (
  sched_name varchar(120) not null,
  trigger_name varchar(120) not null,
  trigger_group varchar(120) not null,
  repeat_count bigint(7) not null,
  repeat_interval bigint(12) not null,
  times_triggered bigint(10) not null,
  primary key (sched_name,trigger_name,trigger_group),
  foreign key (sched_name,trigger_name,trigger_group) references qrtz_triggers(sched_name,trigger_name,trigger_group)
);

create table qrtz_cron_triggers (
  sched_name varchar(120) not null,
  trigger_name varchar(120) not null,
  trigger_group varchar(120) not null,
  cron_expression varchar(200) not null,
  time_zone_id varchar(80),
  primary key (sched_name,trigger_name,trigger_group),
  foreign key (sched_name,trigger_name,trigger_group) references qrtz_triggers(sched_name,trigger_name,trigger_group)
);

create table qrtz_simprop_triggers (
  sched_name varchar(120) not null,
  trigger_name varchar(120) not null,
  trigger_group varchar(120) not null,
  str_prop_1 varchar(512) null,
  str_prop_2 varchar(512) null,
  str_prop_3 varchar(512) null,
  int_prop_1 int null,
  int_prop_2 int null,
  long_prop_1 bigint null,
  long_prop_2 bigint null,
  dec_prop_1 numeric(13,4) null,
  dec_prop_2 numeric(13,4) null,
  bool_prop_1 varchar(1) null,
  bool_prop_2 varchar(1) null,
  primary key (sched_name,trigger_name,trigger_group),
  foreign key (sched_name,trigger_name,trigger_group) references qrtz_triggers(sched_name,trigger_name,trigger_group)
);

create table qrtz_blob_triggers (
  sched_name varchar(120) not null,
  trigger_name varchar(120) not null,
  trigger_group varchar(120) not null,
  blob_data blob null,
  primary key (sched_name,trigger_name,trigger_group),
  foreign key (sched_name,trigger_name,trigger_group) references qrtz_triggers(sched_name,trigger_name,trigger_group)
);

create table qrtz_calendars (
  sched_name varchar(120) not null,
  calendar_name varchar(120) not null,
  calendar blob not null,
  primary key (sched_name,calendar_name)
);

create table qrtz_paused_trigger_grps (
  sched_name varchar(120) not null,
  trigger_group varchar(120) not null,
  primary key (sched_name,trigger_group)
);

create table qrtz_fired_triggers (
  sched_name varchar(120) not null,
  entry_id varchar(95) not null,
  trigger_name varchar(120) not null,
  trigger_group varchar(120) not null,
  instance_name varchar(200) not null,
  fired_time bigint(13) not null,
  sched_time bigint(13) not null,
  priority integer not null,
  state varchar(16) not null,
  job_name varchar(200) null,
  job_group varchar(200) null,
  is_nonconcurrent varchar(1) null,
  requests_recovery varchar(1) null,
  primary key (sched_name,entry_id)
);

create table qrtz_scheduler_state (
  sched_name varchar(120) not null,
  instance_name varchar(120) not null,
  last_checkin_time bigint(13) not null,
  checkin_interval bigint(13) not null,
  primary key (sched_name,instance_name)
);

create table qrtz_locks (
  sched_name varchar(120) not null,
  lock_name varchar(40) not null,
  primary key (sched_name,lock_name)
);

(2) Create and fill in the Quartz configuration file (quartz.properties)

# --------------------------------------- quartz ---------------------------------------
# Mainly divided into the scheduler, threadPool, jobStore and plugin sections
org.quartz.scheduler.instanceName=DefaultQuartzScheduler
# Every node of a cluster needs a unique instance id; AUTO lets Quartz generate one
org.quartz.scheduler.instanceId=AUTO
org.quartz.scheduler.rmi.export=false
org.quartz.scheduler.rmi.proxy=false
org.quartz.scheduler.wrapJobExecutionInUserTransaction=false
# The ThreadPool is instantiated with the SimpleThreadPool class
org.quartz.threadPool.class=org.quartz.simpl.SimpleThreadPool
# threadCount and threadPriority are injected into the ThreadPool instance via setters
# number of concurrent threads
org.quartz.threadPool.threadCount=5
# thread priority
org.quartz.threadPool.threadPriority=5
org.quartz.threadPool.threadsInheritContextClassLoaderOfInitializingThread=true
org.quartz.jobStore.misfireThreshold=5000
# Stored in memory by default
#org.quartz.jobStore.class = org.quartz.simpl.RAMJobStore
# Persistent storage
org.quartz.jobStore.class=org.quartz.impl.jdbcjobstore.JobStoreTX
# Must match the case of the table names created above: MySQL on Linux treats table names as case-sensitive by default (lower_case_table_names=0)
org.quartz.jobStore.tablePrefix=QRTZ_
# Required when several application instances share the same Quartz tables
org.quartz.jobStore.isClustered=true
org.quartz.jobStore.dataSource=qzDS
org.quartz.dataSource.qzDS.driver=com.mysql.jdbc.Driver
org.quartz.dataSource.qzDS.URL=jdbc:mysql://192.168.10.16:3306/test?useUnicode=true&characterEncoding=UTF-8
org.quartz.dataSource.qzDS.user=root
org.quartz.dataSource.qzDS.password=123456
org.quartz.dataSource.qzDS.maxConnections=10
# --------------------------------------- quartz -----------------------------------------

(3) Initialize the Quartz beans

@Configuration
public class QuartzConfig {

    /**
     * Creates the SchedulerFactoryBean
     *
     * @return SchedulerFactoryBean
     * @throws IOException on failure
     */
    @Bean(name = "schedulerFactory")
    public SchedulerFactoryBean schedulerFactoryBean() throws IOException {
        SchedulerFactoryBean factoryBean = new SchedulerFactoryBean();
        factoryBean.setQuartzProperties(quartzProperties());
        return factoryBean;
    }

    /**
     * Loads the configuration file
     *
     * @return Properties
     * @throws IOException on failure
     */
    @Bean
    public Properties quartzProperties() throws IOException {
        PropertiesFactoryBean propertiesFactoryBean = new PropertiesFactoryBean();
        propertiesFactoryBean.setLocation(new ClassPathResource("/quartz.properties"));
        // initialize the object only after the properties in quartz.properties have been read and injected
        propertiesFactoryBean.afterPropertiesSet();
        return propertiesFactoryBean.getObject();
    }

    /**
     * Quartz initializer listener
     *
     * @return QuartzInitializerListener
     */
    @Bean
    public QuartzInitializerListener executorListener() {
        return new QuartzInitializerListener();
    }

    /**
     * Obtains the Scheduler instance from the SchedulerFactoryBean
     *
     * @return Scheduler
     * @throws IOException on failure
     */
    @Bean(name = "Scheduler")
    public Scheduler scheduler() throws IOException {
        return schedulerFactoryBean().getScheduler();
    }
}

(4) Create a Quartz service that performs the basic job operations, so that jobs can be scheduled dynamically

/**
 * @author admin
 * @date 2019-02-28
 */
public interface QuartzJobService {

    /**
     * Adds a job
     *
     * @param scheduler      the Scheduler instance
     * @param jobClassName   job class name
     * @param jobGroupName   job group name
     * @param cronExpression cron expression
     * @throws Exception on failure
     */
    void addJob(Scheduler scheduler, String jobClassName, String jobGroupName, String cronExpression) throws Exception;

    /**
     * Pauses a job
     *
     * @param scheduler    the Scheduler instance
     * @param jobClassName job class name
     * @param jobGroupName job group name
     * @throws Exception on failure
     */
    void pauseJob(Scheduler scheduler, String jobClassName, String jobGroupName) throws Exception;

    /**
     * Resumes a paused job
     *
     * @param scheduler    the Scheduler instance
     * @param jobClassName job class name
     * @param jobGroupName job group name
     * @throws Exception on failure
     */
    void resumeJob(Scheduler scheduler, String jobClassName, String jobGroupName) throws Exception;

    /**
     * Reschedules a job with a new cron expression
     *
     * @param scheduler      the Scheduler instance
     * @param jobClassName   job class name
     * @param jobGroupName   job group name
     * @param cronExpression cron expression
     * @throws Exception on failure
     */
    void rescheduleJob(Scheduler scheduler, String jobClassName, String jobGroupName, String cronExpression) throws Exception;

    /**
     * Deletes a job
     *
     * @param scheduler    the Scheduler instance
     * @param jobClassName job class name
     * @param jobGroupName job group name
     * @throws Exception on failure
     */
    void deleteJob(Scheduler scheduler, String jobClassName, String jobGroupName) throws Exception;

    /**
     * Gets all jobs (paging is done on the front end)
     *
     * @return List
     */
    List<QuartzJob> findList();
}

/**
 * @author admin
 * @date 2019-02-28
 * @see QuartzJobService
 */
@Service
public class QuartzJobServiceImpl implements QuartzJobService {

    @Autowired
    private QuartzJobMapper quartzJobMapper;

    @Override
    public void addJob(Scheduler scheduler, String jobClassName, String jobGroupName, String cronExpression) throws Exception {
        jobClassName = "com.springboot.mybatis.demo.job." + jobClassName;
        // start the scheduler
        scheduler.start();
        // build the job details
        JobDetail jobDetail = JobBuilder.newJob(QuartzJobUtils.getClass(jobClassName).getClass())
                .withIdentity(jobClassName, jobGroupName)
                .build();
        // cron schedule builder (i.e. when the job runs)
        CronScheduleBuilder builder = CronScheduleBuilder.cronSchedule(cronExpression);
        // build a new trigger from the cronExpression
        CronTrigger trigger = TriggerBuilder.newTrigger()
                .withIdentity(jobClassName, jobGroupName)
                .withSchedule(builder)
                .build();
        // register the job and its trigger with the scheduler
        scheduler.scheduleJob(jobDetail, trigger);
    }

    @Override
    public void pauseJob(Scheduler scheduler, String jobClassName, String jobGroupName) throws Exception {
        jobClassName = "com.springboot.mybatis.demo.job." + jobClassName;
        scheduler.pauseJob(JobKey.jobKey(jobClassName, jobGroupName));
    }

    @Override
    public void resumeJob(Scheduler scheduler, String jobClassName, String jobGroupName) throws Exception {
        jobClassName = "com.springboot.mybatis.demo.job." + jobClassName;
        scheduler.resumeJob(JobKey.jobKey(jobClassName, jobGroupName));
    }

    @Override
    public void rescheduleJob(Scheduler scheduler, String jobClassName, String jobGroupName, String cronExpression) throws Exception {
        jobClassName = "com.springboot.mybatis.demo.job." + jobClassName;
        TriggerKey triggerKey = TriggerKey.triggerKey(jobClassName, jobGroupName);
        CronScheduleBuilder builder = CronScheduleBuilder.cronSchedule(cronExpression);
        CronTrigger trigger = (CronTrigger) scheduler.getTrigger(triggerKey);
        // rebuild the trigger with the new cronExpression
        trigger = trigger.getTriggerBuilder()
                .withIdentity(jobClassName, jobGroupName)
                .withSchedule(builder)
                .build();
        // reschedule the job with the new trigger
        scheduler.rescheduleJob(triggerKey, trigger);
    }

    @Override
    public void deleteJob(Scheduler scheduler, String jobClassName, String jobGroupName) throws Exception {
        jobClassName = "com.springboot.mybatis.demo.job." + jobClassName;
        scheduler.pauseTrigger(TriggerKey.triggerKey(jobClassName, jobGroupName));
        scheduler.unscheduleJob(TriggerKey.triggerKey(jobClassName, jobGroupName));
        scheduler.deleteJob(JobKey.jobKey(jobClassName, jobGroupName));
    }

    @Override
    public List<QuartzJob> findList() {
        return quartzJobMapper.findList();
    }
}
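`QuartzJobUtils.getClass` is not shown in the post. As a hedged alternative, the job class can be resolved by name and type-checked without instantiating it. `JobClassResolver.resolve` is a hypothetical helper; in the project, the base type would be `BaseJob` (or `org.quartz.Job`). The test below uses JDK classes so that it runs without Quartz on the classpath.

```java
final class JobClassResolver {
    private JobClassResolver() {
    }

    /**
     * Resolves packageName + "." + simpleName and checks that it is a subtype of baseType.
     *
     * @throws IllegalArgumentException if the class is missing or has the wrong type
     */
    static <T> Class<? extends T> resolve(String packageName, String simpleName, Class<T> baseType) {
        String fullName = packageName + "." + simpleName;
        try {
            // asSubclass fails fast with ClassCastException instead of a later, vaguer error
            return Class.forName(fullName).asSubclass(baseType);
        } catch (ClassNotFoundException e) {
            throw new IllegalArgumentException("No job class named " + fullName, e);
        } catch (ClassCastException e) {
            throw new IllegalArgumentException(fullName + " is not a " + baseType.getName(), e);
        }
    }
}
```

With Quartz, a call such as `JobBuilder.newJob(JobClassResolver.resolve("com.springboot.mybatis.demo.job", jobClassName, BaseJob.class))` should then compile, assuming `BaseJob` extends `org.quartz.Job`.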

(5) Create the job

/**
 * @author admin
 * @date 2019-02-28
 * @see BaseJob
 */
public class HelloJob implements BaseJob {

    private final Logger logger = LoggerFactory.getLogger(getClass());

    @Override
    public void execute(JobExecutionContext jobExecutionContext) throws JobExecutionException {
        logger.info("hello, I'm quartz job - HelloJob");
    }
}

(6) You can then test the job (add, pause, resume, and so on)

Summary:

• Only the main integration steps are shown above; see GitHub for the details.

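• In a distributed setup (clustered JDBC job store), Quartz spreads job executions across machines. To try this, package the project as a jar and start it in two terminals to simulate two nodes. Each firing of HelloJob runs on only one of the two instances, and you will see the executions alternate between them.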

• Reference for the integration above: https://zhuanlan.zhihu.com/p/38546754

10. Automatic table sharding

(1) Overview:

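A table is usually split by its most frequently queried field. However, many features need to query across all of the data, and in those cases global queries cannot be avoided.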

For data that frequently needs global queries, keep a separate redundant table that is not split.

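Infrequent global queries have to be done with UNION, but such queries usually respond slowly. The feature therefore needs some restrictions, such as limiting the query interval, so the database does not stay unresponsive for a long time. Alternatively, sync the data to a read-only slave and run the search there, so the master is not affected.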
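For an occasional global query over a time range, the SQL can be assembled from the per-day table names. This is a minimal sketch that assumes (hypothetically) the each_day strategy names tables `<base>_yyyyMMdd`. The real naming comes from the project's sub-table util, which is not shown. The sketch uses UNION ALL rather than UNION because rows in different sub-tables are distinct records, so the de-duplication pass of UNION is usually unnecessary work. Capping the number of days, as suggested above, would be a natural guard on top of this.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.List;

final class SubTableUnion {
    // Assumed each_day naming: smg_user -> smg_user_20190415
    private static final DateTimeFormatter DAY = DateTimeFormatter.ofPattern("yyyyMMdd");

    private SubTableUnion() {
    }

    /** Builds "select cols from t_day1 union all ... select cols from t_dayN" for an inclusive date range. */
    static String unionAll(String baseTable, String columns, LocalDate from, LocalDate to) {
        if (to.isBefore(from)) {
            throw new IllegalArgumentException("to must not be before from");
        }
        List<String> parts = new ArrayList<>();
        for (LocalDate day = from; !day.isAfter(to); day = day.plusDays(1)) {
            parts.add("select " + columns + " from " + baseTable + "_" + day.format(DAY));
        }
        return String.join(" union all ", parts);
    }
}
```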

(2) Preparing for table sharding

• Make sharding configurable (whether it is enabled, which tables are split, and the splitting strategy)

• Work out how to split tables dynamically

(3) Implementation

• First, define your own configuration class

import com.springboot.mybatis.demo.common.constant.Constant;
import com.springboot.mybatis.demo.common.utils.SelfStringUtils;

import java.util.Arrays;
import java.util.Collections;
import java.util.List;

/**
 * Holds the data source configuration
 *
 * @author lzj
 * @date 2019-04-09
 */
public class DatasourceConfig {

    private Master master;
    private Slave1 slave1;
    private SubTable subTable;

    public SubTable getSubTable() { return subTable; }
    public void setSubTable(SubTable subTable) { this.subTable = subTable; }
    public Master getMaster() { return master; }
    public void setMaster(Master master) { this.master = master; }
    public Slave1 getSlave1() { return slave1; }
    public void setSlave1(Slave1 slave1) { this.slave1 = slave1; }

    public static class Master {
        private String jdbcUrl;
        private String username;
        private String password;
        private String driverClassName;

        public String getJdbcUrl() { return jdbcUrl; }
        public void setJdbcUrl(String jdbcUrl) { this.jdbcUrl = jdbcUrl; }
        public String getUsername() { return username; }
        public void setUsername(String username) { this.username = username; }
        public String getPassword() { return password; }
        public void setPassword(String password) { this.password = password; }
        public String getDriverClassName() { return driverClassName; }
        public void setDriverClassName(String driverClassName) { this.driverClassName = driverClassName; }
    }

    public static class Slave1 {
        private String jdbcUrl;
        private String username;
        private String password;
        private String driverClassName;

        public String getJdbcUrl() { return jdbcUrl; }
        public void setJdbcUrl(String jdbcUrl) { this.jdbcUrl = jdbcUrl; }
        public String getUsername() { return username; }
        public void setUsername(String username) { this.username = username; }
        public String getPassword() { return password; }
        public void setPassword(String password) { this.password = password; }
        public String getDriverClassName() { return driverClassName; }
        public void setDriverClassName(String driverClassName) { this.driverClassName = driverClassName; }
    }

    public static class SubTable {
        private boolean enable;
        private String schemaRoot;
        // comma-separated list of table names to split
        private String schemas;
        private String strategy;

        public String getStrategy() { return strategy; }
        public void setStrategy(String strategy) { this.strategy = strategy; }
        public boolean isEnable() { return enable; }
        public void setEnable(boolean enable) { this.enable = enable; }
        public String getSchemaRoot() { return schemaRoot; }
        public void setSchemaRoot(String schemaRoot) { this.schemaRoot = schemaRoot; }

        public List<String> getSchemas() {
            if (SelfStringUtils.isNotEmpty(this.schemas)) {
                return Arrays.asList(this.schemas.split(Constant.Symbol.COMMA));
            }
            return Collections.emptyList();
        }

        public void setSchemas(String schemas) { this.schemas = schemas; }
    }
}

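Because this project uses multiple data sources, the configuration is split into the master and slave data source settings, plus the sharding settings: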

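#------------------- automatic table sharding -----------------
# turn automatic sharding on or off
spring.datasource.sub-table.enable = true
spring.datasource.sub-table.schema-root = classpath*:sub/
# tables to split, comma-separated
spring.datasource.sub-table.schemas = smg_user
# splitting strategy (here: one table per day)
spring.datasource.sub-table.strategy = each_day
#------------------- automatic table sharding -----------------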

The configuration above goes into application.properties. Then register the configuration class DatasourceConfig with the IoC container:

@Bean
@ConfigurationProperties(prefix = "spring.datasource")
public DatasourceConfig datasourceConfig() {
    return new DatasourceConfig();
}

The settings can now be read through this custom configuration class.

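• Next, use AOP to intercept the mapper layer. On every mapper method call, the aspect checks whether the entity class behind the SQL being executed needs table sharding.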

@Aspect
@Component
public class BaseMapperAspect {

    private final static Logger logger = LoggerFactory.getLogger(BaseMapperAspect.class);

    // @Autowired
    // DataSourceProperties dataSourceProperties;

    // @Autowired
    // private DataSource dataSource;

    @Autowired
    private DatasourceConfig datasourceConfig;

    @Autowired
    SubTableUtilsFactory subTableUtilsFactory;

    @Autowired
    private DBService dbService;

    @Resource
    MyRoutingDataSource myRoutingDataSource;

    @Pointcut("execution(* com.springboot.mybatis.demo.mapper.common.BaseMapper.*(..))")
    public void getMybatisTableEntity() {
    }

    /**
     * Gets the runtime class
     *
     * @param joinPoint target
     * @throws ClassNotFoundException on failure
     */
    @Before("getMybatisTableEntity()")
    public void setThreadLocalMap(JoinPoint joinPoint) throws ClassNotFoundException {
        ...
        // automatic table sharding
        MybatisTable mybatisTable = MybatisTableUtils.getMybatisTable(Class.forName(actualTypeArguments[0].getTypeName()));
        Assert.isTrue(mybatisTable != null, "Null of the MybatisTable");
        String oldTableName = mybatisTable.getName();
        if (datasourceConfig.getSubTable().isEnable()
                && datasourceConfig.getSubTable().getSchemas().contains(oldTableName)) {
            ThreadLocalUtils.setSubTableName(subTableUtilsFactory
                    .getSubTableUtil(datasourceConfig.getSubTable().getStrategy())
                    .getTableName(oldTableName));
            // check whether the sub-table exists and create it if it does not
            dbService.autoSubTable(ThreadLocalUtils.getSubTableName(), oldTableName, datasourceConfig.getSubTable().getSchemaRoot());
        } else {
            ThreadLocalUtils.setSubTableName(oldTableName);
        }
    }
}

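If the table needs sharding, the aspect derives the sub-table name from the configured strategy. It then checks whether that table exists in the database: if not, it creates it automatically; otherwise it skips creation.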
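The strategy lookup above (`subTableUtilsFactory.getSubTableUtil(strategy).getTableName(oldTableName)`) can be pictured as a small strategy-plus-factory arrangement. This is an illustrative version, not the project's code. Three things in it are assumptions:

- the `_yyyyMMdd` / `_yyyyMM` suffixes;
- the `each_month` strategy name;
- `DateSuffixSubTableUtil`.

A `java.time.Clock` is injected so the table name can be tested for a fixed date.

```java
import java.time.Clock;
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.HashMap;
import java.util.Map;

// Hypothetical counterpart of the project's sub-table util: base table name -> current sub-table name
interface SubTableUtil {
    String getTableName(String baseTable);
}

// Appends a date suffix; the suffix patterns are assumptions
final class DateSuffixSubTableUtil implements SubTableUtil {
    private final DateTimeFormatter suffix;
    private final Clock clock;

    DateSuffixSubTableUtil(String pattern, Clock clock) {
        this.suffix = DateTimeFormatter.ofPattern(pattern);
        this.clock = clock;
    }

    @Override
    public String getTableName(String baseTable) {
        return baseTable + "_" + LocalDate.now(clock).format(suffix);
    }
}

// Illustrative factory keyed by the spring.datasource.sub-table.strategy value
final class SubTableUtilsFactory {
    private final Map<String, SubTableUtil> byStrategy = new HashMap<>();

    SubTableUtilsFactory(Clock clock) {
        byStrategy.put("each_day", new DateSuffixSubTableUtil("yyyyMMdd", clock));
        byStrategy.put("each_month", new DateSuffixSubTableUtil("yyyyMM", clock));
    }

    SubTableUtil getSubTableUtil(String strategy) {
        SubTableUtil util = byStrategy.get(strategy);
        if (util == null) {
            throw new IllegalArgumentException("Unknown sub-table strategy: " + strategy);
        }
        return util;
    }
}
```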

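• Once the sub-table exists, the SQL is intercepted and rewritten. A MyBatis interceptor catches each statement; if the statement's entity class needs sharding, the interceptor replaces the table name in the SQL so that the statement targets the corresponding sub-table.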

/**
 * Routes statements to the right sub-table dynamically
 *
 * @author liuzj
 * @date 2019-04-15
 */
@Intercepts({@Signature(type = StatementHandler.class, method = "prepare", args = {Connection.class, Integer.class})})
public class SubTableSqlHandler implements Interceptor {

    Logger logger = LoggerFactory.getLogger(SubTableSqlHandler.class);

    @Override
    public Object intercept(Invocation invocation) throws Throwable {
        StatementHandler handler = (StatementHandler) invocation.getTarget();
        BoundSql boundSql = handler.getBoundSql();
        String sql = boundSql.getSql();
        // rewrite the SQL
        if (SelfStringUtils.isNotEmpty(sql)) {
            MybatisTable mybatisTable = MybatisTableUtils.getMybatisTable(ThreadLocalUtils.get());
            Assert.isTrue(mybatisTable != null, "Null of the MybatisTable");
            // BoundSql has no setter for the SQL, so its private "sql" field is overwritten via reflection
            Field sqlField = boundSql.getClass().getDeclaredField("sql");
            sqlField.setAccessible(true);
            sqlField.set(boundSql, sql.replaceAll(mybatisTable.getName(), ThreadLocalUtils.getSubTableName()));
        }
        return invocation.proceed();
    }

    @Override
    public Object plugin(Object target) {
        return Plugin.wrap(target, this);
    }

    @Override
    public void setProperties(Properties properties) {
    }
}
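One caveat about the interceptor: `String.replaceAll` treats the table name as a regular expression and also matches it inside longer identifiers. With the base table `smg_user`, for example, a statement that touches `smg_user_role` would be rewritten as well. The hedged refinement below quotes the name and requires identifier boundaries around it; `TableNameRewriter` is a hypothetical helper. It still does not parse SQL, so a column or alias spelled exactly like the table name would also be replaced.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class TableNameRewriter {
    private TableNameRewriter() {
    }

    /** Replaces whole-identifier occurrences of oldTable with newTable; longer names such as oldTable_role are left alone. */
    static String rewrite(String sql, String oldTable, String newTable) {
        // Not preceded or followed by an identifier character; Pattern.quote disables regex metacharacters in the name
        Pattern pattern = Pattern.compile("(?<![A-Za-z0-9_$])" + Pattern.quote(oldTable) + "(?![A-Za-z0-9_$])");
        // quoteReplacement keeps '$' or '\' in the new name from being read as group references
        return pattern.matcher(sql).replaceAll(Matcher.quoteReplacement(newTable));
    }
}
```

Inside `intercept`, the reflective write would become `sqlField.set(boundSql, TableNameRewriter.rewrite(sql, mybatisTable.getName(), ThreadLocalUtils.getSubTableName()))`.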

That is the basic approach to dynamic table sharding in this project; see GitHub for the full code.

To be continued... If anything here is wrong or could be improved, suggestions and comments are welcome. Thanks!