Reprints of this article must cite the original URL; please respect the author's work. http://www.cnblogs.com/lyongerr/p/5040071.html

Contents

3.10 Building folly (Facebook Open-source Library)

3.10.1 Building double-conversion

4 SSL communication between mcrouter and memcached

4.2 Direct communication between mcrouter and memcached

4.2.4 Start tcpdump on the stunnel server

4.2.6 Packet capture analysis on the stunnel server

4.3 Communication between mcrouter and memcached through stunnel

4.3.7 Start tcpdump on the stunnel server

4.3.9 Packet capture analysis on the stunnel server

5.4.1.1 OperationSelectorRoute

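1 Introduction to mcrouter

mcrouter is a memcached protocol router that Facebook uses to control traffic across dozens of clusters and thousands of servers in its data centers around the world. It is built for very large scale: at peak, mcrouter handles close to 5 billion requests per second.

2 mcrouter features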

- Memcached ASCII protocol
- Connection pooling
- Multiple hashing schemes
- Prefix routing
- Replicated pools
- Production traffic shadowing
- Online reconfiguration
- Flexible routing
- Destination health monitoring/automatic failover
- Cold cache warm up
- Broadcast operations
- Reliable delete stream
- Multi-cluster support
- Rich stats and debug commands
- Quality of service
- Large values
- Multi-level caches
- IPv6 support
- SSL support

3 Building mcrouter

3.1 Build environment

| IP | Role | Notes |
|---|---|---|
| 192.168.75.130 | mcrouter build machine | Custom OS / VM |

3.2 Configure the EPEL repository

yum -y install epel-release

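Run yum list to check that the repository works. If it fails, edit the EPEL repo file: comment out the mirrorlist line and uncomment the baseurl line. EPEL is required because many of the packages in section 3.3 depend on it.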
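
The mirrorlist/baseurl switch described above can be scripted. This is a minimal sketch, not part of the original procedure: the function name use_baseurl is hypothetical, and the repo file path in the usage line is an assumption about where the EPEL definition lives on your system.

```shell
# Switch a yum .repo file from mirrorlist to baseurl.
# A backup copy is kept next to the file as <file>.bak.
use_baseurl() {
    repo_file=$1
    cp "$repo_file" "$repo_file.bak" &&
    # Comment out mirrorlist= lines and uncomment #baseurl= lines.
    sed -e 's/^mirrorlist=/#mirrorlist=/' \
        -e 's/^#baseurl=/baseurl=/' \
        "$repo_file.bak" > "$repo_file"
}
```

Usage (path assumed): use_baseurl /etc/yum.repos.d/epel.repo, then run yum list again.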

3.3 Install build dependencies

yum -y install bzip2-devel libevent-devel libcap-devel scons \
    jemalloc-devel gmp-devel mpfr-devel libmpc-devel wget \
    python-devel rpm-build \
    m4 cmake libicu-devel chrpath openmpi-devel \
    mpich-devel openssl-devel \
    glibc-devel.i686 glibc-devel.x86_64 gcc gcc-c++ zlib-devel \
    gflags-devel git bzip2 \
    unzip libtool bison flex snappy-devel \
    numactl-devel cyrus-sasl-devel

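3.4 Building GCC 4.9

mcrouter must be built with GCC 4.8 or later. folly uses C++11 library facilities such as chrono, and only GCC 4.8+ fully supports the C++11 language features and standard library it relies on. I chose 4.9 here. Building GCC 4.8+ requires gmp, mpfr, and mpc, so build those first.

Note: as time passes, some download URLs used in the steps below may return "not found" from wget. This is expected; in that case download the same version from another official source. At the time of writing I verified that every package in this article could be downloaded from the link given.

3.4.1 Building gmp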

cd /opt && wget https://gmplib.org/download/gmp/gmp-5.1.3.tar.bz2

tar jxf gmp-5.1.3.tar.bz2 && cd gmp-5.1.3/

./configure --prefix=/usr/local/gmp

make && make install

3.4.2 Building mpfr

cd /opt && wget http://www.mpfr.org/mpfr-3.1.2/mpfr-3.1.2.tar.bz2

tar jxf mpfr-3.1.2.tar.bz2 && cd mpfr-3.1.2/

./configure --prefix=/usr/local/mpfr --with-gmp=/usr/local/gmp

make && make install

3.4.3 Building mpc

cd /opt && wget http://ftp.gnu.org/gnu/mpc/mpc-1.0.1.tar.gz

tar xzf mpc-1.0.1.tar.gz && cd mpc-1.0.1

./configure --prefix=/usr/local/mpc --with-mpfr=/usr/local/mpfr --with-gmp=/usr/local/gmp

make && make install

3.4.4 Building gcc-4.9.1

cd /opt && wget http://ftp.gnu.org/gnu/gcc/gcc-4.9.1/gcc-4.9.1.tar.bz2

tar jxf gcc-4.9.1.tar.bz2 && cd gcc-4.9.1

./configure --prefix=/usr/local/gcc --enable-threads=posix --disable-checking --disable-multilib --enable-languages=c,c++ --with-gmp=/usr/local/gmp --with-mpfr=/usr/local/mpfr/ --with-mpc=/usr/local/mpc/

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/mpc/lib:/usr/local/gmp/lib:/usr/local/mpfr/lib/

make && make install

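After GCC 4.9 is built, the environment variables and libraries must be set up before it can be used; otherwise the later folly and Boost builds will fail.

3.4.4.1 Configure the GCC environment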

echo "/usr/local/gcc/lib/" >> /etc/ld.so.conf.d/gcc-4.9.1.conf

echo "/usr/local/mpc/lib/" >> /etc/ld.so.conf.d/gcc-4.9.1.conf

echo "/usr/local/gmp/lib/" >> /etc/ld.so.conf.d/gcc-4.9.1.conf

echo "/usr/local/mpfr/lib/" >> /etc/ld.so.conf.d/gcc-4.9.1.conf

ldconfig

mv /usr/bin/gcc /usr/bin/gcc_old

mv /usr/bin/g++ /usr/bin/g++_old

mv /usr/bin/c++ /usr/bin/c++_old

ln -s -f /usr/local/gcc/bin/gcc /usr/bin/gcc

ln -s -f /usr/local/gcc/bin/g++ /usr/bin/g++

ln -s -f /usr/local/gcc/bin/c++ /usr/bin/c++

cp /usr/local/gcc/lib64/libstdc++.so.6.0.20 /usr/lib64/.

mv /usr/lib64/libstdc++.so.6 /usr/lib64/libstdc++.so.6.bak

ln -s -f /usr/lib64/libstdc++.so.6.0.20 /usr/lib64/libstdc++.so.6

If gcc -v and g++ --version both report 4.9.1, the setup succeeded. If they do not, stop here, because the later folly and Boost builds depend on this GCC environment.
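
The version check above can be automated so a build script stops early on an old compiler. This is a sketch under assumptions: the helper names version_ge and check_gcc are made up for illustration, and it relies on GNU sort -V and on gcc -dumpversion printing a dotted version string.

```shell
# Succeed if version $1 >= version $2 (dot-separated numbers).
version_ge() {
    # sort -V orders version strings; the smaller one comes out first.
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Fail with a message when the gcc found in PATH is older than 4.8.
check_gcc() {
    found=$(gcc -dumpversion) || return 1
    version_ge "$found" 4.8 || { echo "gcc $found is too old" >&2; return 1; }
}
```

Calling check_gcc before the Boost and folly steps would catch a missed symlink from section 3.4.4.1.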

3.5 Building cmake

cd /opt && wget http://www.cmake.org/files/v2.8/cmake-2.8.12.2.tar.gz

tar xvf cmake-2.8.12.2.tar.gz && cd cmake-2.8.12.2

./configure && make && make install

3.6 Building autoconf

cd /opt && wget http://ftp.gnu.org/gnu/autoconf/autoconf-2.69.tar.gz

tar xvf autoconf-2.69.tar.gz && cd autoconf-2.69

./configure && make && make install

3.7 Building glog

cd /opt && wget https://google-glog.googlecode.com/files/glog-0.3.3.tar.gz

tar xvf glog-0.3.3.tar.gz && cd glog-0.3.3

./configure && make && make install

3.8 Building ragel

ragel depends on colm and kelbt, so build them first. Building ragel directly does not fail, but the result will be missing components.

3.8.1 Building colm

cd /opt && wget http://www.colm.net/files/colm/colm-0.13.0.2.tar.gz

tar xvf colm-0.13.0.2.tar.gz && cd colm-0.13.0.2

./configure && make && make install

cd /opt

3.8.2 Building kelbt

cd /opt && wget http://www.colm.net/files/kelbt/kelbt-0.16.tar.gz

tar xvf kelbt-0.16.tar.gz && cd kelbt-0.16

./configure && make && make install

3.8.3 Building ragel

cd /opt && wget http://www.colm.net/files/ragel/ragel-6.9.tar.gz

tar xvf ragel-6.9.tar.gz && cd ragel-6.9

./configure --prefix=/usr --disable-manual && make && make install

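3.9 Building Boost

Boost must be version 1.51 or later; boost_1_56_0 is used here. The custom OS ships Python 2.6, while Boost 1.56 needs Python 2.7+. Building Boost under Python 2.6 can still succeed, but the later folly build then reports errors, so all the build steps in the following sections should run under Python 2.7+.

3.9.1 Installing Python 2.7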

yum -y install centos-release-SCL

yum -y install python27

scl enable python27 "easy_install pip"

scl enable python27 bash

python --version

3.9.2 Building Boost

cd /opt && wget http://downloads.sourceforge.net/boost/boost_1_56_0.tar.bz2

tar jxf boost_1_56_0.tar.bz2 && cd boost_1_56_0

./bootstrap.sh --prefix=/usr && ./b2 stage threading=multi link=shared

./b2 install threading=multi link=shared

3.10 Building folly (Facebook Open-source Library)

Folly is an open-source C++ library developed and used at Facebook. Folly uses the double-conversion library, so build that first.

3.10.1 Building double-conversion

rpm -Uvh http://sourceforge.net/projects/scons/files/scons/2.3.3/scons-2.3.3-1.noarch.rpm

cd /opt && git clone https://code.google.com/p/double-conversion/

cd double-conversion && scons install

cd /opt/ && git clone https://github.com/genx7up/folly.git

cp folly/folly/SConstruct.double-conversion /opt/double-conversion/

cd double-conversion && scons -f SConstruct.double-conversion

ln -sf src double-conversion

ldconfig

rm -rf /opt/folly

3.10.2 Building folly

cd /opt

git clone https://github.com/facebook/folly

cd /opt/folly/folly/

export LD_LIBRARY_PATH="/opt/folly/folly/lib:$LD_LIBRARY_PATH"

export LD_RUN_PATH="/opt/folly/folly/lib"

export LDFLAGS="-L/opt/folly/folly/lib -L/opt/double-conversion -L/usr/local/lib -ldl"

export CPPFLAGS="-I/opt/folly/folly/include -I/opt/double-conversion"

autoreconf -ivf

./configure --with-boost-libdir=/usr/lib/

make && make install

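During make, the folly build should pause on the following output for quite a while; that is normal. In an earlier attempt folly finished compiling quickly without reporting any error, but the mcrouter build failed afterwards.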

libtool: compile: g++ -DHAVE_CONFIG_H -I./.. -pthread -I/usr/include -std=gnu++0x -g -O2 -MT futures/Future.lo -MD -MP -MF futures/.deps/Future.Tpo -c futures/Future.cpp -o futures/Future.o >/dev/null 2>&1

3.10.3 Unpack gtest for the folly tests

cd /opt/folly/folly/test

wget https://googletest.googlecode.com/files/gtest-1.7.0.zip

unzip gtest-1.7.0.zip

3.11 Building mcrouter

3.11.1 Prepare the Thrift library

cd /opt && git clone https://github.com/facebook/fbthrift.git

cd fbthrift/thrift

ln -sf thrifty.h "/opt/fbthrift/thrift/compiler/thrifty.hh"

export LD_LIBRARY_PATH="/opt/fbthrift/thrift/lib:$LD_LIBRARY_PATH"

export LD_RUN_PATH="/opt/fbthrift/thrift/lib"

export LDFLAGS="-L/opt/fbthrift/thrift/lib -L/usr/local/lib"

export CPPFLAGS="-I/opt/fbthrift/thrift/include -I/opt/fbthrift/thrift/include/python2.7 -I/opt/folly -I/opt/double-conversion"

echo "/usr/local/lib/" >> /etc/ld.so.conf.d/gcc-4.9.1.conf && ldconfig

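3.11.2 Building mcrouter

Before starting this step, make sure every build step above completed without any error; otherwise the mcrouter build will run into problems.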

cd /opt && git clone https://github.com/facebook/mcrouter.git

cd mcrouter/mcrouter

export LD_LIBRARY_PATH="/opt/mcrouter/mcrouter/lib:$LD_LIBRARY_PATH"

export LD_RUN_PATH="/opt/folly/folly/test/.libs:/opt/mcrouter/mcrouter/lib"

export LDFLAGS="-L/opt/mcrouter/mcrouter/lib -L/usr/local/lib -L/opt/folly/folly/test/.libs"

export CPPFLAGS="-I/opt/folly/folly/test/gtest-1.7.0/include -I/opt/mcrouter/mcrouter/include -I/opt/folly -I/opt/double-conversion -I/opt/fbthrift -I/opt/boost_1_56_0"

export CXXFLAGS="-fpermissive"

autoreconf --install && ./configure --with-boost-libdir=/usr/lib/

make && make install

mcrouter --help

Note: the mcrouter make is behaving normally when it prints output like the following and pauses on it for a while.

g++ -DHAVE_CONFIG_H -I.. -I/opt/mcrouter/install/include -DLIBMC_FBTRACE_DISABLE -Wno-missing-field-initializers -Wno-deprecated -W -Wall -Wextra -Wno-unused-parameter -fno-strict-aliasing -g -O2 -std=gnu++1y -MT mcrouter-server.o -MD -MP -MF .deps/mcrouter-server.Tpo -c -o mcrouter-server.o `test -f 'server.cpp' || echo './'`server.cpp

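The steps above are the complete procedure when everything goes smoothly. Run mcrouter --help to check that the build succeeded; if it failed, see the common errors section at the end.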

4 SSL communication between mcrouter and memcached

This article does not cover installing or using stunnel.

| IP | Role | Notes |
|---|---|---|
| 192.168.75.130 | mcrouter / stunnel client | Custom OS / VM |
| 192.168.75.131 | stunnel server | |

4.2 Direct communication between mcrouter and memcached

4.2.1 Start a memcached test instance

On the stunnel server, start an instance listening on port 11211.

sh memcached_stop

/usr/local/mcc/bin/memcached -d -m 128 -c 4096 -p 11211 -u www -t 10 -l 192.168.75.131

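- -l <ip_addr>: address the process listens on;
- -d: run as a daemon;
- -u <username>: run the memcached process as the given user;
- -m <num>: maximum memory for cached data, in MB (default 64 MB);
- -c <num>: maximum number of concurrent connections (default 1024);
- -p <num>: TCP port to listen on (default 11211);
- -U <num>: UDP port to listen on (default 11211; 0 disables UDP);
- -t <threads>: maximum number of threads handling incoming requests; only effective when memcached was compiled with thread support;
- -f <num>: growth factor the slab allocator uses when sizing its fixed-size pre-allocated chunk classes;
- -M: return an error when memory is exhausted instead of reclaiming space by LRU;
- -n: minimum slab chunk size, in bytes;
- -S: enable SASL user authentication.

4.2.2 Configure mcrouter

On the stunnel client, create the mcrouter configuration file config.json.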

cat config.json

{
  "pools": {
    "A": {
      "servers": [
        // hosts of replicated pool, e.g.:
        "192.168.75.131:11211",
      ]
    }
  },
  "route": {
    "type": "PrefixPolicyRoute",
    "operation_policies": {
      "delete": "AllSyncRoute|Pool|A",
      "add": "AllSyncRoute|Pool|A",
      "get": "LatestRoute|Pool|A",
      "set": "AllSyncRoute|Pool|A"
    }
  }
}

The server entry 192.168.75.131:11211 (highlighted in red in the original) is the IP and port the memcached node listens on; mcrouter connects to it after startup.

Note: the firewalls on the mcrouter and memcached machines must whitelist each other.

4.2.3 Start mcrouter

Start mcrouter listening on port 1919.

mcrouter -p 1919 -f config.json &

4.2.4 Start tcpdump on the stunnel server

tcpdump -i eth1 -nn -A -s 0 -w /home/open/stunnel_test.pcap port 11211

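- -i: network interface to capture on.
- -nn: show IP addresses and port numbers instead of host and service names.
- -w: write the raw packets to a file instead of parsing and printing them.
- -A: print each packet in ASCII, minus its link-level header.
- -s: capture snaplen bytes from each packet instead of the default (68 bytes in older tcpdump versions); -s 0 removes the limit and captures whole packets.

4.2.5 Write test data

Write test data through mcrouter.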

telnet 127.0.0.1 1919

Trying 127.0.0.1...

Connected to 127.0.0.1.

Escape character is '^]'.

set testkey1 0 0 3

liu

STORED

set testkey2 0 0 4

yong

STORED

set testkey3 0 0 5

43999

STORED

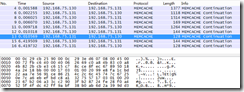

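4.2.6 Packet capture analysis on the stunnel server

The Info column shows the data in plaintext, as the screenshot shows.

This shows that without stunnel encryption, mcrouter and memcached communicate in plaintext.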
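
The same check can be done without a GUI by reading the capture back with tcpdump and searching its ASCII output for memcached commands. This is a rough sketch: the function name has_plaintext_memcached is hypothetical, and the pattern is a simple heuristic that could in principle match unrelated text.

```shell
# Read tcpdump's ASCII output on stdin; succeed if memcached ASCII
# commands or replies are visible in the clear.
has_plaintext_memcached() {
    grep -Eq '(set|get|add|delete) [[:graph:]]+|STORED|VALUE [[:graph:]]+'
}
```

Usage with the capture file from section 4.2.4: tcpdump -nn -A -r /home/open/stunnel_test.pcap | has_plaintext_memcached && echo "plaintext visible". Against a capture of stunnel traffic the function would be expected to fail.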

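4.3 Communication between mcrouter and memcached through stunnel

4.3.1 Stop memcached

Run on both machines:

sh /root/memcached_stop

This avoids a port conflict when the stunnel server starts.

4.3.2 Start a memcached test instance

On the stunnel server: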

/usr/local/mcc/bin/memcached -d -m 128 -c 4096 -p 11211 -u www -t 10 -l 127.0.0.1

4.3.3 Configure stunnel

4.3.3.1 server

cat /usr/local/stunnel/etc/stunnel/stunnel.conf

sslVersion = TLSv1

CAfile = /usr/local/stunnel/etc/stunnel/stunnel.pem

verify = 2

cert = /usr/local/stunnel/etc/stunnel/stunnel.pem

pid = /var/run/stunnel/stunnel.pid

socket = l:TCP_NODELAY=1

socket = r:TCP_NODELAY=1

debug = 7

output = /data/logs/stunnel.log

setuid = root

setgid = root

[memcached]

accept = 192.168.75.131:11211

connect = 127.0.0.1:11211

- accept = 192.168.75.131:11211 is the address and port the stunnel server listens on
- connect = 127.0.0.1:11211 is the destination stunnel forwards the decrypted data to

4.3.3.2 client

cat /usr/local/stunnel/etc/stunnel/stunnel.conf

cert = /usr/local/stunnel/etc/stunnel/stunnel.pem

socket = l:TCP_NODELAY=1

socket = r:TCP_NODELAY=1

verify = 2

CAfile = /usr/local/stunnel/etc/stunnel/stunnel.pem

client = yes

delay = no

sslVersion = TLSv1

output = /data/logs/stunnel.log

[memcached]

accept = 127.0.0.1:11211

connect = 192.168.75.131:11211

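4.3.4 Start stunnel

Run on both the server and the client:

/usr/local/stunnel/sbin/stunnel

While starting, the stunnel server prints the following, showing that the certificate and key were loaded successfully.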

2015.11.01 16:35:22 LOG7[21016:140179061770176]: Snagged 64 random bytes from /root/.rnd

2015.11.01 16:35:22 LOG7[21016:140179061770176]: Wrote 1024 new random bytes to /root/.rnd

2015.11.01 16:35:22 LOG7[21016:140179061770176]: RAND_status claims sufficient entropy for the PRNG

2015.11.01 16:35:22 LOG7[21016:140179061770176]: PRNG seeded successfully

2015.11.01 16:35:22 LOG4[21016:140179061770176]: Wrong permissions on /usr/local/stunnel/etc/stunnel/stunnel.pem

2015.11.01 16:35:22 LOG7[21016:140179061770176]: Certificate: /usr/local/stunnel/etc/stunnel/stunnel.pem

2015.11.01 16:35:22 LOG7[21016:140179061770176]: Certificate loaded

2015.11.01 16:35:22 LOG7[21016:140179061770176]: Key file: /usr/local/stunnel/etc/stunnel/stunnel.pem

2015.11.01 16:35:22 LOG7[21016:140179061770176]: Private key loaded

2015.11.01 16:35:22 LOG7[21016:140179061770176]: Loaded verify certificates from /usr/local/stunnel/etc/stunnel/stunnel.pem

4.3.5 Update the mcrouter configuration file

cat config.json

{
  "pools": {
    "A": {
      "servers": [
        // hosts of replicated pool, e.g.:
        "127.0.0.1:11211",
      ]
    }
  },
  "route": {
    "type": "PrefixPolicyRoute",
    "operation_policies": {
      "delete": "AllSyncRoute|Pool|A",
      "add": "AllSyncRoute|Pool|A",
      "get": "LatestRoute|Pool|A",
      "set": "AllSyncRoute|Pool|A"
    }
  }
}

127.0.0.1:11211 is the address the stunnel client listens on.

4.3.6 Start mcrouter

mcrouter -p 1919 -f config.json &

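4.3.7 Start tcpdump on the stunnel server

tcpdump -i eth1 -nn -A -s 0 -w /home/open/mcrouter1.pcap port 11211

The interface after -i is the one the stunnel server listens on, not the one memcached listens on, because stunnel has already decrypted the data by the time it reaches memcached.

4.3.8 Write test data

Write test data through mcrouter.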

telnet 127.0.0.1 1919

Trying 127.0.0.1...

Connected to 127.0.0.1.

Escape character is '^]'.

set testkey4 0 0 4

hell

STORED

set testkey5 0 0 12

hello world!

STORED

set testkey6 0 0 3

liu

STORED

Read the data back on the stunnel server to verify:

telnet 127.0.0.1 11211

Trying 127.0.0.1...

Connected to 127.0.0.1.

Escape character is '^]'.

get testkey4

VALUE testkey4 0 4

hell

END

get testkey5

VALUE testkey5 0 12

hello world!

END

get testkey6

VALUE testkey6 0 3

liu

END

This shows that the data travelled from the stunnel client into memcached.

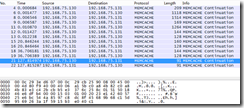

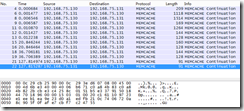

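4.3.9 Packet capture analysis on the stunnel server

The Info column no longer shows any plaintext. The last four packets are taken for analysis, as shown in the screenshot.

This shows that data sent from mcrouter to the stunnel server is encrypted and cannot be read from a packet capture.

5 Distributed testing with mcrouter

5.1 Concepts

- Pools: Destination hosts are grouped into "pools". A pool is a basic building block of a routing config. At a minimum, a pool consists of an ordered list of destination hosts and a hash function.
- Key: A memcached key is typically a short (mcrouter limit is 250 characters) ASCII string which does not contain any whitespace or control characters.
- Route handles: Routes are composed of blocks called "route handles". Each route handle encapsulates some piece of routing logic, such as "send a request to a single destination host" or "provide failover."
- Plain distribution: distribution without redundancy. Data is spread across different memcached instances, and each instance holds different data.
- Highly available distribution: data is spread across different memcached instances, and each instance has a redundant memcached acting as its mutual backup, i.e. distribution with redundancy plus high availability.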

5.2 Test environment

| IP | Role | Notes |
|---|---|---|
| 192.168.75.130 | mcrouter test machine, memcached localhost pool | Custom OS / VM |
| 192.168.75.131 | memcached backup pool | |

5.3 Plain distribution with mcrouter

5.3.1 Route handles used

5.3.1.1 RandomRoute

- Definition: Routes to one random destination from list of children.
- Properties: children.

5.3.2 mcrouter configuration

cat config.json

{
  "pools": {
    "backup": { "servers": [
      "192.168.75.131:11210",
      "192.168.75.131:11211",
      "192.168.75.131:11212",
    ] },
    "localhost": { "servers": [
      "127.0.0.1:11210",
      "127.0.0.1:11211",
      "127.0.0.1:11212",
    ] }
  },
  "route": {
    "type": "RandomRoute",
    "children" : [ "PoolRoute|localhost", "PoolRoute|backup" ]
  }
}

This configuration defines two memcached pools, backup and localhost, each with three memcached instances. The keyword RandomRoute selects the routing method, i.e. the route handle. RandomRoute matches what plain distribution needs, so it is used here.

5.3.3 Start the memcached test instances

On 192.168.75.130:

sh memcached_stop

/usr/local/mcc/bin/memcached -d -m 128 -c 4096 -p 11210 -u www -t 10 -l 127.0.0.1 -vv >> /tmp/memcached_11210.log 2>&1

/usr/local/mcc/bin/memcached -d -m 2048 -c 4096 -p 11211 -u www -t 10 -l 127.0.0.1 -vv >> /tmp/memcached_11211.log 2>&1

/usr/local/mcc/bin/memcached -d -m 64 -c 4096 -p 11212 -u www -t 10 -l 127.0.0.1 -vv >> /tmp/memcached_11212.log 2>&1

On 192.168.75.131:

sh memcached_stop

/usr/local/mcc/bin/memcached -d -m 128 -c 4096 -p 11210 -u www -t 10 -l 192.168.75.131 -vv >> /tmp/memcached_11210.log 2>&1

/usr/local/mcc/bin/memcached -d -m 2048 -c 4096 -p 11211 -u www -t 10 -l 192.168.75.131 -vv >> /tmp/memcached_11211.log 2>&1

/usr/local/mcc/bin/memcached -d -m 64 -c 4096 -p 11212 -u www -t 10 -l 192.168.75.131 -vv >> /tmp/memcached_11212.log 2>&1

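- -l <ip_addr>: address the process listens on;
- -d: run as a daemon;
- -u <username>: run the memcached process as the given user;
- -m <num>: maximum memory for cached data, in MB (default 64 MB);
- -c <num>: maximum number of concurrent connections (default 1024);
- -p <num>: TCP port to listen on (default 11211);
- -t <threads>: maximum number of threads handling incoming requests; only effective when memcached was compiled with thread support;
- -v: log ordinary errors and warnings;
- -vv: more detailed than -v; also logs client commands and server responses;
- -vvv: the most verbose level; also logs internal state transitions.

-vv is used here only to make the test output easier to follow.

5.3.4 Start mcrouter

On 192.168.75.130:

mcrouter -p 1919 -f /data/backup/config.json

Run it without a trailing & so the debug output stays visible. The "mcrouter output" mentioned below refers to this debug output.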

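5.3.5 Test procedure

5.3.5.1 Data write tool

cat setkey.sh

#!/bin/bash
# Write N test keys (key1..keyN, value "test") to mcrouter on 127.0.0.1:1919.
sum=0
num=$1
for i in $(seq 1 "$num")
do
    # memcached ASCII protocol: the command line and the data block each end with \r\n
    printf 'set key%s 0 0 4\r\ntest\r\n' "$i" | nc 127.0.0.1 1919
    sum=$((sum+1))
done
echo
echo "total writes: ${sum}"

This script writes many test records to mcrouter in one run.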
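
The request the script sends follows the memcached ASCII framing: a command line carrying the byte count, then the data block, each terminated by \r\n. The helper below is an illustrative sketch (the name mc_set_frame is hypothetical) that computes the byte count from the value instead of hard-coding it; it assumes single-byte (ASCII) values and fixes flags and exptime at 0.

```shell
# Print one memcached ASCII "set" request.
# Frame layout: set <key> <flags> <exptime> <bytes>\r\n<data>\r\n
mc_set_frame() {
    key=$1; value=$2
    # ${#value} counts characters, so this is only correct for ASCII values.
    printf 'set %s 0 0 %d\r\n%s\r\n' "$key" "${#value}" "$value"
}
```

Usage: mc_set_frame key1 test | nc 127.0.0.1 1919 sends the same request as one loop iteration of setkey.sh.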

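5.3.5.2 Data read tool

cat getkey.sh

#!/bin/bash
# Look up one key on each memcached port of 192.168.75.131.
key=$1
for port in 11210 11211 11212
do
    echo "Port $port values:"
    echo "get $key" | nc 192.168.75.131 $port
    echo
done

This script makes it easy to read data back.

5.3.5.3 Write test data

Write 100,000 records, which mcrouter distributes at random; run on 192.168.75.130:

sh setkey.sh 100000

mcrouter prints the following while the data is written: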

I1113 11:47:54.126411 117652 ProxyDestination.cpp:359] server 192.168.75.131:11212:TCP:ascii-1000 up (1 of 6)

I1113 11:47:54.130604 117652 ProxyDestination.cpp:359] server 192.168.75.131:11210:TCP:ascii-1000 up (2 of 6)

I1113 11:47:54.134222 117652 ProxyDestination.cpp:359] server 127.0.0.1:11211:TCP:ascii-1000 up (3 of 6)

I1113 11:47:54.137917 117652 ProxyDestination.cpp:359] server 127.0.0.1:11212:TCP:ascii-1000 up (4 of 6)

I1113 11:47:54.146790 117652 ProxyDestination.cpp:359] server 127.0.0.1:11210:TCP:ascii-1000 up (5 of 6)

I1113 11:47:54.151669 117652 ProxyDestination.cpp:359] server 192.168.75.131:11211:TCP:ascii-1000 up (6 of 6)

I1113 11:51:49.416658 117652 ProxyDestination.cpp:359] server 127.0.0.1:11212:TCP:ascii-1000 closed (5 of 6)

I1113 11:51:49.416856 117652 ProxyDestination.cpp:359] server 192.168.75.131:11210:TCP:ascii-1000 closed (4 of 6)

I1113 11:51:49.416931 117652 ProxyDestination.cpp:359] server 127.0.0.1:11211:TCP:ascii-1000 closed (3 of 6)

I1113 11:51:49.417023 117652 ProxyDestination.cpp:359] server 192.168.75.131:11212:TCP:ascii-1000 closed (2 of 6)

I1113 11:51:49.417177 117652 ProxyDestination.cpp:359] server 192.168.75.131:11211:TCP:ascii-1000 closed (1 of 6)

I1113 11:51:49.417248 117652 ProxyDestination.cpp:359] server 127.0.0.1:11210:TCP:ascii-1000 closed (0 of 6)

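The output shows which instances mcrouter connected to and how many memcached connections are open. For example, the line for 192.168.75.131:11211 ending in "up (6 of 6)" (red in the original) shows mcrouter connecting to that instance, at which point all 6 memcached instances are connected. The later line for 192.168.75.131:11211 ending in "closed (1 of 6)" shows that connection being closed, and the final "closed (0 of 6)" line means no memcached connection remains.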
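
When the log is long, the current connection count can be pulled out of the "(N of M)" suffix mechanically. This is a small sketch; the function name connected_count is hypothetical, and it assumes the log line format shown above.

```shell
# Print the N from the last "(N of M)" counter seen on stdin.
connected_count() {
    sed -n 's/.*(\([0-9][0-9]*\) of [0-9][0-9]*)[[:space:]]*$/\1/p' | tail -n 1
}
```

Feeding it the mcrouter output captured so far prints how many destinations are currently connected.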

5.3.6 Data analysis

| memcached instance | cmd_set count | bytes_written |
|---|---|---|
| 127.0.0.1:11210 | 16881 | 135048 |
| 127.0.0.1:11211 | 16569 | 132552 |
| 127.0.0.1:11212 | 16561 | 132488 |
| 192.168.75.131:11210 | 16824 | 134592 |
| 192.168.75.131:11211 | 16604 | 132832 |
| 192.168.75.131:11212 | 16561 | 132488 |

The data shows that cmd_set totals 100,000, as expected. The 100,000 writes sent to mcrouter are spread almost evenly across the memcached instances, which matches plain distribution. For a closer look, check the memcached log of each instance.
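
The total can be checked with a few lines of shell. The sketch below is illustrative: sum_cmd_set and cmd_set_of are hypothetical helper names, and cmd_set_of assumes nc is installed and the instance answers the memcached stats command.

```shell
# Sum the second column of "host:port count" lines read from stdin.
sum_cmd_set() {
    awk '{ total += $2 } END { print total + 0 }'
}

# Print the cmd_set counter of one live instance (host and port as arguments).
cmd_set_of() {
    printf 'stats\r\nquit\r\n' | nc "$1" "$2" | tr -d '\r' |
        awk '$1 == "STAT" && $2 == "cmd_set" { print $3 }'
}
```

Piping the six table rows into sum_cmd_set reproduces the 100,000 total; cmd_set_of 127.0.0.1 11210 would fetch one row's value from a running instance.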

5.4 Highly available distribution with mcrouter

5.4.1 Route handles used

5.4.1.1 OperationSelectorRoute

- Definition: Sends to different targets based on specified operations.
- Properties: default_policy, operation_policies.

5.4.1.2 WarmUpRoute

- Definition: All sets and deletes go to the target ("cold") route handle. Gets are attempted on the "cold" route handle and, in case of a miss, data is fetched from the "warm" route handle (where the request is likely to result in a cache hit). If "warm" returns a hit, the response is forwarded to the client and an asynchronous request, with the configured expiration time, updates the value in the "cold" route handle.
- Properties: cold, warm, exptime.

5.4.1.3 AllSyncRoute

- Definition: Immediately sends the same request to all child route handles. Collects all replies and responds with the "worst" reply (i.e., the error reply, if any).
- Properties: children.

5.4.2 mcrouter configuration

cat config.json

{
  "pools": {
    "backup": { "servers": [
      "192.168.75.131:11210",
      "192.168.75.131:11211",
      "192.168.75.131:11212",
    ] },
    "localhost": { "servers": [
      "127.0.0.1:11210",
      "127.0.0.1:11211",
      "127.0.0.1:11212",
    ] }
  },
  "route": {
    "type": "OperationSelectorRoute",
    "operation_policies": {
      "get": {
        "type": "WarmUpRoute",
        "cold": "PoolRoute|localhost",
        "warm": "PoolRoute|backup",
        "exptime": 0
      }
    },
    "default_policy": {
      "type": "AllSyncRoute",
      "children": [
        "PoolRoute|localhost",
        "PoolRoute|backup"
      ]
    }
  }
}

The configuration again defines two memcached pools, backup and localhost, with three instances each. The OperationSelectorRoute handle says: for a get, read from the localhost pool first; on a miss, read from the backup pool; and if the backup pool returns the data, also write it into the localhost pool. exptime is the expiration time of the data written into the localhost pool; 0 means it never expires. default_policy covers every operation other than get (set, add, delete): the AllSyncRoute handle sends them to both the localhost and backup pools, so the two pools end up mirroring each other. The tests below verify this.
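
The get path described above can be pictured with a toy model in which each pool is a directory and each key is a file. This is only an illustration of the lookup order, not mcrouter code; the function name warmup_get and the directory layout are invented for the sketch, and the backfill here is synchronous whereas WarmUpRoute updates the cold side asynchronously.

```shell
# Toy model of the WarmUpRoute get path.
# $1 = cold pool directory, $2 = warm pool directory, $3 = key
warmup_get() {
    cold_dir=$1; warm_dir=$2; key=$3
    if [ -f "$cold_dir/$key" ]; then
        cat "$cold_dir/$key"                  # hit in the cold pool
    elif [ -f "$warm_dir/$key" ]; then
        cat "$warm_dir/$key"                  # miss in cold, hit in warm
        cp "$warm_dir/$key" "$cold_dir/$key"  # backfill the cold pool
    else
        return 1                              # miss in both pools
    fi
}
```

After one warmup_get on a key that exists only in the warm directory, the same key is present in the cold directory, which is the behavior section 5.4.7 observes in the memcached logs.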

5.4.3 Start the memcached test instances

On 192.168.75.130:

sh memcached_stop

/usr/local/mcc/bin/memcached -d -m 128 -c 4096 -p 11210 -u www -t 10 -l 127.0.0.1 -vv >> /tmp/memcached_11210.log 2>&1

/usr/local/mcc/bin/memcached -d -m 2048 -c 4096 -p 11211 -u www -t 10 -l 127.0.0.1 -vv >> /tmp/memcached_11211.log 2>&1

/usr/local/mcc/bin/memcached -d -m 64 -c 4096 -p 11212 -u www -t 10 -l 127.0.0.1 -vv >> /tmp/memcached_11212.log 2>&1

On 192.168.75.131:

sh memcached_stop

/usr/local/mcc/bin/memcached -d -m 128 -c 4096 -p 11210 -u www -t 10 -l 192.168.75.131 -vv >> /tmp/memcached_11210.log 2>&1

/usr/local/mcc/bin/memcached -d -m 2048 -c 4096 -p 11211 -u www -t 10 -l 192.168.75.131 -vv >> /tmp/memcached_11211.log 2>&1

/usr/local/mcc/bin/memcached -d -m 64 -c 4096 -p 11212 -u www -t 10 -l 192.168.75.131 -vv >> /tmp/memcached_11212.log 2>&1

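Restarting the instances clears the data left over from the previous experiment.

5.4.4 Start mcrouter

mcrouter -p 1919 -f /data/backup/config.json

Run it without a trailing & so the debug output stays visible.

5.4.5 Test procedure

Write 100,000 records; run on 192.168.75.130: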

sh setkey.sh 100000

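5.4.6 Data analysis

| memcached instance | cmd_set count | bytes_written |
|---|---|---|
| 127.0.0.1:11210 | 33705 | 269640 |
| 127.0.0.1:11211 | 33173 | 265384 |
| 127.0.0.1:11212 | 33122 | 264976 |
| 192.168.75.131:11210 | 33705 | 269640 |
| 192.168.75.131:11211 | 33173 | 265384 |
| 192.168.75.131:11212 | 33122 | 264976 |

The data shows that cmd_set totals 200,000, as expected. With 100,000 writes sent to mcrouter, instances 127.0.0.1:11210 and 192.168.75.131:11210 record the same number of writes and the same byte count, so they mirror each other while sitting in different pools; the other instances pair up the same way. This matches distribution with redundancy.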
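
The pairing can be verified mechanically by grouping the rows by port and comparing counts across hosts. This is a sketch with a hypothetical function name (pools_mirrored); it assumes input lines of the form "host:port count" and that mirrored instances share a port number, as in this test setup.

```shell
# Succeed only if every port reports the same count on all hosts.
pools_mirrored() {
    awk '{
        n = split($1, part, ":"); port = part[n]
        if ((port in seen) && seen[port] != $2) bad = 1
        seen[port] = $2
    } END { exit bad + 0 }'
}
```

With the six rows of the table above as input the function succeeds; with the rows from section 5.3.6 it fails, since RandomRoute does not mirror writes.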

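5.4.7 Simulate a localhost pool failure

To test high availability, manually stop and restart all instances on 192.168.75.130 to simulate a failure or data loss.

sh memcached_stop

sh memcached_start

Read key1 and key2 on 192.168.75.130 (the localhost pool). Because all memcached instances were restarted, there should be no data.

- Read key1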

sh getkey.sh key1

Port 11210 values:

END

Port 11211 values:

END

Port 11212 values:

END

- Read key2

Port 11210 values:

END

Port 11211 values:

END

Port 11212 values:

END

Now read key1 and key2 through mcrouter, and watch the memcached logs of the relevant instances and the mcrouter output.

- get key1

mcrouter output:

I1113 15:44:45.147187 84128 ProxyDestination.cpp:359] server 127.0.0.1:11212:TCP:ascii-1000 up (1 of 6)

I1113 15:44:45.148263 84128 ProxyDestination.cpp:359] server 192.168.75.131:11212:TCP:ascii-1000 up (2 of 6)

Logs of the corresponding memcached instances:

127.0.0.1:11212

<58 new auto-negotiating client connection

58: Client using the ascii protocol

<58 get key1

>58 END

<58 add key1 0 0 4

>58 STORED

192.168.75.131:11212

<58 new auto-negotiating client connection

58: Client using the ascii protocol

<58 get key1

>58 sending key key1

>58 END

- get key2

mcrouter output:

I1113 15:48:07.653182 84128 ProxyDestination.cpp:359] server 127.0.0.1:11210:TCP:ascii-1000 up (1 of 6)

I1113 15:48:07.654258 84128 ProxyDestination.cpp:359] server 192.168.75.131:11210:TCP:ascii-1000 up (2 of 6)

Logs of the corresponding memcached instances:

127.0.0.1:11210

<58 new auto-negotiating client connection

58: Client using the ascii protocol

<58 get key2

>58 END

<58 add key2 0 0 4

>58 STORED

192.168.75.131:11210

<58 new auto-negotiating client connection

58: Client using the ascii protocol

<58 get key2

>58 sending key key2

>58 END

Read key1 and key2 from 192.168.75.130 (the localhost pool) again.

- Read key1

sh getkey.sh key1

Port 11210 values:

END

Port 11211 values:

END

Port 11212 values:

VALUE key1 0 4

test

END

- Read key2

sh getkey.sh key2

Port 11210 values:

VALUE key2 0 4

test

END

Port 11211 values:

END

Port 11212 values:

END

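This shows that mcrouter supports high availability, with one drawback: after the localhost pool recovers from a failure, the backup pool does not proactively and promptly sync data to it. Data is written back into the localhost pool only when a client requests a key through mcrouter and mcrouter finds it missing from the localhost pool.

5.4.8 Result analysis

The data analysis in section 5.4.6 and the logs in section 5.4.7 show that the 100,000 writes through mcrouter behave as highly available distribution, which confirms the description in section 5.4.2.

5.5 Config reload

mcrouter can reload its configuration file, and by default it picks up changes automatically. As the official documentation puts it: "mcrouter supports dynamic reconfiguration so you don't need to restart mcrouter to apply config changes." If you save a broken configuration, mcrouter reports an error and keeps running with the previous valid configuration. The feature is optional: start mcrouter with --disable-reload-configs and it will not refresh its configuration when the file is edited and saved.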

6 Common errors

6.1 folly build errors

6.1.1 Error 1

error: Could not link against boost_thread-mt !

or

checking whether the Boost::Context library is available... yes

configure: error: Could not find a version of the library!

Fix: pass the directory that holds the Boost libraries, for example ./configure --with-boost-libdir=/usr/lib/
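
If it is unclear which directory holds the Boost libraries, a short search over the usual candidates can supply the value. This is a sketch: the function name find_boost_libdir is hypothetical and the candidate directories in the usage line are assumptions about a typical layout.

```shell
# Print the first directory (from the arguments) that holds libboost_thread.
find_boost_libdir() {
    for dir in "$@"; do
        for lib in "$dir"/libboost_thread*; do
            # The glob stays literal when nothing matches, hence the -e test.
            if [ -e "$lib" ]; then
                echo "$dir"
                return 0
            fi
        done
    done
    return 1
}
```

Usage: ./configure --with-boost-libdir="$(find_boost_libdir /usr/lib /usr/lib64 /usr/local/lib)"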

6.1.2 Error 2

/usr/bin/ld: /usr/local/gcc-4.8.3/lib/gcc/x86_64-unknown-linux-gnu/4.8.3/../../../../lib64/libiberty.a(cp-demangle.o): relocation R_X86_64_32S against `.rodata' can not be used when making a shared object; recompile with -fPIC

/usr/local/gcc-4.8.3/lib/gcc/x86_64-unknown-linux-gnu/4.8.3/../../../../lib64/libiberty.a: could not read symbols: Bad value

collect2: error: ld returned 1 exit status

make[2]: *** [libfolly.la] Error 1

make[2]: Leaving directory `/data/src/folly/folly'

make[1]: *** [all-recursive] Error 1

make[1]: Leaving directory `/data/src/folly/folly'

make: *** [all] Error 2

Fix: check the earlier GCC and Boost builds for problems; GCC must be 4.8+ and Boost must be 1.51+.

6.2 mcrouter build errors

g++ -DHAVE_CONFIG_H -I../.. -DLIBMC_FBTRACE_DISABLE -Wno-missing-field-initializers -Wno-deprecated -W -Wall -Wextra -Wno-unused-parameter -fno-strict-aliasing -g -O2 -std=gnu++1y -MT fbi/cpp/libmcrouter_a-LogFailure.o -MD -MP -MF fbi/cpp/.deps/libmcrouter_a-LogFailure.Tpo -c -o fbi/cpp/libmcrouter_a-LogFailure.o `test -f 'fbi/cpp/LogFailure.cpp' || echo './'`fbi/cpp/LogFailure.cpp

fbi/cpp/LogFailure.cpp:24:29: fatal error: folly/Singleton.h: No such file or directory

#include <folly/Singleton.h>

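Fix: check whether folly's make printed any warnings; if it did, review the folly build. The folly build is behaving correctly only when make prints output like the following and pauses on it for a fairly long time. Otherwise a problem in folly's make is probably what caused the mcrouter build to fail.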

libtool: compile: g++ -DHAVE_CONFIG_H -I./.. -pthread -I/usr/include -std=gnu++0x -g -O2 -MT futures/Future.lo -MD -MP -MF futures/.deps/Future.Tpo -c futures/Future.cpp -o futures/Future.o >/dev/null 2>&1

6.3 Errors starting the stunnel server

.10.31 01:05:50 LOG7[18392:140063756588992]: Certificate: /usr/local/stunnel/etc/private.pem

2015.10.31 01:05:50 LOG3[18392:140063756588992]: Error reading certificate file: /usr/local/stunnel/etc/private.pem

2015.10.31 01:05:50 LOG3[18392:140063756588992]: error stack: 140DC009 : error:140DC009:SSL routines:SSL_CTX_use_certificate_chain_file:PEM lib

2015.10.31 01:05:50 LOG3[18392:140063756588992]: SSL_CTX_use_certificate_chain_file: 906D06C: error:0906D06C:PEM routines:PEM_read_bio:no start line

Check that the cert and CAfile entries in stunnel.conf point to the intended certificate files and that the files' ownership and permissions are correct.
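
The "no start line" message in the log above means OpenSSL found no PEM header in the file, so a quick look for the BEGIN markers narrows the problem down. This is a minimal sketch; the function name pem_has_cert_and_key is hypothetical, and it only checks that the markers exist, not that the certificate and key are valid or match.

```shell
# Succeed if the file contains both a certificate and a private key PEM header.
pem_has_cert_and_key() {
    grep -q -e '-----BEGIN CERTIFICATE-----' "$1" &&
    grep -Eq -e '-----BEGIN (RSA |EC )?PRIVATE KEY-----' "$1"
}
```

Usage: pem_has_cert_and_key /usr/local/stunnel/etc/stunnel/stunnel.pem || echo "PEM headers missing"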

6.4 Errors starting the stunnel client

2015.11.01 15:31:31 LOG3[71906:140502252201920]: Cannot create pid file /usr/local/stunnel/var/run/stunnel/stunnel.pid

2015.11.01 15:31:31 LOG3[71906:140502252201920]: create: No such file or directory (2)

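The message is already clear: the directory for the pid file does not exist. Remember to add an output entry to the stunnel client's stunnel.conf to make troubleshooting easier.

In short, when something fails, read the logs and search Google.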
