好久没有写过博客了,趁着年假还有一天,把去年项目所运用的读写分离在这里概述一下及其注意点,以防以后项目再有使用到;

准备工作

1 开发环境:window,idea,maven,spring boot,mybatis,druid(淘宝数据库连接池)

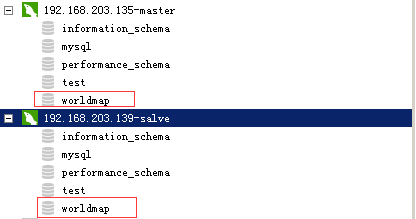

2 数据库服务器:linux,mysql master(192.168.203.135),mysql salve(192.168.203.139)

3 读写分离之前必须先做好数据库的主从复制,关于主从复制不是该篇幅的主要叙述重点,关于主从复制读者可以自行google或者百度,教程基本都是一样,可行

注意以下几点:

a:做主从复制时,首先确定两台服务器的mysql没任何自定义库(否则只可以配置完后之前的东西没法同步,或者两个库都有完全相同的库应该也是可以同步)

b:server_id必须配置不一样

c:防火墙不能把mysql服务端口给拦截了(默认3306)

d:确保两台mysql可以相互访问

e:重置master,slave。Reset master;reset slave;开启关闭slave,start slave;stop slave;

f:主DB server和从DB server数据库的版本一致

4 读写分离方式:

4-1 基于程序代码内部实现: 在代码中根据select 、insert进行路由分类,这类方法也是目前生产环境下应用最广泛的。优点是性能较好,因为程序在代码中实现,不需要增加额外的硬件开支,缺点是需要开发人员来实现,运维人员无从下手。

4-2 基于中间代理层实现: 代理一般介于应用服务器和数据库服务器之间,代理数据库服务器接收到应用服务器的请求后根据判断后转发到,后端数据库,有以下代表性的程序。

本文基于两种方式的叙述:

基于应用层代码实现方式(内容都是通过代码体现,必要的说明存在代码中)

1 配置pom.xml,导入需要的jar包

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.lishun</groupId>

<artifactId>mysql_master_salve</artifactId>

<version>0.0.1-SNAPSHOT</version>

<packaging>jar</packaging>

<name>mysql_master_salve</name>

<description>Demo project for Spring Boot</description>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>1.5.10.RELEASE</version>

<relativePath/> <!-- lookup parent from repository -->

</parent>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>

<java.version>1.8</java.version>

</properties>

<dependencies>

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

<version>1.3.1</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

<version>RELEASE</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

<version>1.0.18</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-aop</artifactId>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

</plugin>

<plugin>

<groupId>org.mybatis.generator</groupId>

<artifactId>mybatis-generator-maven-plugin</artifactId>

<version>1.3.2</version>

<dependencies>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.43</version>

</dependency>

</dependencies>

<configuration>

<overwrite>true</overwrite>

</configuration>

</plugin>

</plugins>

</build>

</project>

2 配置application.properties

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

|

server.port=9022#mybatis配置*mapper.xml文件和实体别名mybatis.mapper-locations=classpath:mapper/*.xmlmybatis.type-aliases-package=com.lishun.entityspring.datasource.driver-class-name=com.mysql.jdbc.Driverspring.datasource.password=123456spring.datasource.username=root#写节点spring.datasource.master.url=jdbc:mysql://192.168.203.135:3306/worldmap#两个个读节点(为了方便测试这里用的是同一个服务器数据库,生产环境应该不使用)spring.datasource.salve1.url=jdbc:mysql://192.168.203.139:3306/worldmapspring.datasource.salve2.url=jdbc:mysql://192.168.203.139:3306/worldmap# druid 连接池 Setting# 初始化大小,最小,最大spring.datasource.type=com.alibaba.druid.pool.DruidDataSourcespring.datasource.initialSize=5spring.datasource.minIdle=5spring.datasource.maxActive=20# 配置获取连接等待超时的时间spring.datasource.maxWait=60000# 配置间隔多久才进行一次检测,检测需要关闭的空闲连接,单位是毫秒spring.datasource.timeBetweenEvictionRunsMillis=60000# 配置一个连接在池中最小生存的时间,单位是毫秒spring.datasource.minEvictableIdleTimeMillis=300000spring.datasource.validationQuery=SELECT 1 FROM rscipc_sys_userspring.datasource.testWhileIdle=truespring.datasource.testOnBorrow=falsespring.datasource.testOnReturn=false# 打开PSCache,并且指定每个连接上PSCache的大小spring.datasource.poolPreparedStatements=truespring.datasource.maxPoolPreparedStatementPerConnectionSize=20# 配置监控统计拦截的filters,去掉后监控界面sql无法统计,'wall'用于防火墙spring.datasource.filters=stat,wall,log4j# 通过connectProperties属性来打开mergeSql功能;慢SQL记录spring.datasource.connectionProperties=druid.stat.mergeSql=true;druid.stat.slowSqlMillis=5000spring.datasource.logSlowSql=true#End |

3 启动类(注意:其他需要spring管理的bean(service,config等)必须放在该启动类的子包下,不然会扫描不到bean,导致注入失败)

|

1

2

3

4

5

6

7

|

@SpringBootApplication@MapperScan("com.lishun.mapper") //!!!!!! 注意:扫描所有mapperpublic class MysqlMasterSalveApplication { public static void main(String[] args) { SpringApplication.run(MysqlMasterSalveApplication.class, args); }} |

4 动态数据源 DynamicDataSource

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

|

/** * @author lishun * @Description:动态数据源, 继承AbstractRoutingDataSource * @date 2017/8/9 */public class DynamicDataSource extends AbstractRoutingDataSource { public static final Logger log = LoggerFactory.getLogger(DynamicDataSource.class); /** * 默认数据源 */ public static final String DEFAULT_DS = "read_ds"; private static final ThreadLocal<String> contextHolder = new ThreadLocal<>(); public static void setDB(String dbType) {// 设置数据源名 log.info("切换到{}数据源", dbType); contextHolder.set(dbType); } public static void clearDB() { contextHolder.remove(); }// 清除数据源名 @Override protected Object determineCurrentLookupKey() { return contextHolder.get(); }} |

5 线程池配置数据源

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

|

@Configurationpublic class DruidConfig { private Logger logger = LoggerFactory.getLogger(DruidConfig.class); @Value("${spring.datasource.master.url}") private String masterUrl; @Value("${spring.datasource.salve1.url}") private String salve1Url; @Value("${spring.datasource.salve2.url}") private String salve2Url; @Value("${spring.datasource.username}") private String username; @Value("${spring.datasource.password}") private String password; @Value("${spring.datasource.driver-class-name}") private String driverClassName; @Value("${spring.datasource.initialSize}") private int initialSize; @Value("${spring.datasource.minIdle}") private int minIdle; @Value("${spring.datasource.maxActive}") private int maxActive; @Value("${spring.datasource.maxWait}") private int maxWait; @Value("${spring.datasource.timeBetweenEvictionRunsMillis}") private int timeBetweenEvictionRunsMillis; @Value("${spring.datasource.minEvictableIdleTimeMillis}") private int minEvictableIdleTimeMillis; @Value("${spring.datasource.validationQuery}") private String validationQuery; @Value("${spring.datasource.testWhileIdle}") private boolean testWhileIdle; @Value("${spring.datasource.testOnBorrow}") private boolean testOnBorrow; @Value("${spring.datasource.testOnReturn}") private boolean testOnReturn; @Value("${spring.datasource.filters}") private String filters; @Value("${spring.datasource.logSlowSql}") private String logSlowSql; @Bean public ServletRegistrationBean druidServlet() { logger.info("init Druid Servlet Configuration "); ServletRegistrationBean reg = new ServletRegistrationBean(); reg.setServlet(new StatViewServlet()); reg.addUrlMappings("/druid/*"); reg.addInitParameter("loginUsername", username); reg.addInitParameter("loginPassword", password); reg.addInitParameter("logSlowSql", logSlowSql); return reg; } @Bean public FilterRegistrationBean filterRegistrationBean() { FilterRegistrationBean filterRegistrationBean = new FilterRegistrationBean(); filterRegistrationBean.setFilter(new WebStatFilter()); filterRegistrationBean.addUrlPatterns("/*"); filterRegistrationBean.addInitParameter("exclusions", "*.js,*.gif,*.jpg,*.png,*.css,*.ico,/druid/*"); filterRegistrationBean.addInitParameter("profileEnable", "true"); return filterRegistrationBean; } @Bean public DataSource druidDataSource() { DruidDataSource datasource = new DruidDataSource(); datasource.setUrl(masterUrl); datasource.setUsername(username); datasource.setPassword(password); datasource.setDriverClassName(driverClassName); datasource.setInitialSize(initialSize); datasource.setMinIdle(minIdle); datasource.setMaxActive(maxActive); datasource.setMaxWait(maxWait); datasource.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis); datasource.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis); datasource.setValidationQuery(validationQuery); datasource.setTestWhileIdle(testWhileIdle); datasource.setTestOnBorrow(testOnBorrow); datasource.setTestOnReturn(testOnReturn); try { datasource.setFilters(filters); } catch (SQLException e) { logger.error("druid configuration initialization filter", e); } Map<Object, Object> dsMap = new HashMap(); dsMap.put("read_ds_1", druidDataSource_read1()); dsMap.put("read_ds_2", druidDataSource_read2()); dsMap.put("write_ds", datasource); DynamicDataSource dynamicDataSource = new DynamicDataSource(); dynamicDataSource.setTargetDataSources(dsMap); return dynamicDataSource; } public DataSource druidDataSource_read1() { DruidDataSource datasource = new DruidDataSource(); datasource.setUrl(salve1Url); datasource.setUsername(username); datasource.setPassword(password); datasource.setDriverClassName(driverClassName); datasource.setInitialSize(initialSize); datasource.setMinIdle(minIdle); datasource.setMaxActive(maxActive); datasource.setMaxWait(maxWait); datasource.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis); datasource.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis); datasource.setValidationQuery(validationQuery); datasource.setTestWhileIdle(testWhileIdle); datasource.setTestOnBorrow(testOnBorrow); datasource.setTestOnReturn(testOnReturn); try { datasource.setFilters(filters); } catch (SQLException e) { logger.error("druid configuration initialization filter", e); } return datasource; } public DataSource druidDataSource_read2() { DruidDataSource datasource = new DruidDataSource(); datasource.setUrl(salve2Url); datasource.setUsername(username); datasource.setPassword(password); datasource.setDriverClassName(driverClassName); datasource.setInitialSize(initialSize); datasource.setMinIdle(minIdle); datasource.setMaxActive(maxActive); datasource.setMaxWait(maxWait); datasource.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis); datasource.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis); datasource.setValidationQuery(validationQuery); datasource.setTestWhileIdle(testWhileIdle); datasource.setTestOnBorrow(testOnBorrow); datasource.setTestOnReturn(testOnReturn); try { datasource.setFilters(filters); } catch (SQLException e) { logger.error("druid configuration initialization filter", e); } return datasource; }} |

6 数据源注解:在service层通过数据源注解来指定数据源

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

|

/** * @author lishun * @Description: 读数据源注解 * @date 2017/8/9 */@Target({ElementType.METHOD})@Retention(RetentionPolicy.RUNTIME)public @interface ReadDataSource { String vlaue() default "read_ds";}/** * @author lishun * @Description: 写数据源注解 * @date 2017/8/9 */@Target({ElementType.METHOD})@Retention(RetentionPolicy.RUNTIME)public @interface WriteDataSource { String value() default "write_ds";} |

7 service aop切面来切换数据源

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

|

/** * @author lishun * @Description: TODO * @date 2017/8/9 */@Component@Aspectpublic class ServiceAspect implements PriorityOrdered { @Pointcut("execution(public * com.lishun.service.*.*(..))") public void dataSource(){}; @Before("dataSource()") public void before(JoinPoint joinPoint){ Class<?> className = joinPoint.getTarget().getClass();//获得当前访问的class String methodName = joinPoint.getSignature().getName();//获得访问的方法名 Class[] argClass = ((MethodSignature)joinPoint.getSignature()).getParameterTypes();//得到方法的参数的类型 String dataSource = DynamicDataSource.DEFAULT_DS; try { Method method = className.getMethod(methodName, argClass);// 得到访问的方法对象 if (method.isAnnotationPresent(ReadDataSource.class)) { ReadDataSource annotation = method.getAnnotation(ReadDataSource.class); dataSource = annotation.vlaue(); int i = new Random().nextInt(2) + 1; /* 简单的负载均衡 */ dataSource = dataSource + "_" + i; }else if (method.isAnnotationPresent(WriteDataSource.class)){ WriteDataSource annotation = method.getAnnotation(WriteDataSource.class); dataSource = annotation.value(); } } catch (Exception e) { e.printStackTrace(); } DynamicDataSource.setDB(dataSource);// 切换数据源 } /* 基于方法名 @Before("execution(public * com.lishun.service.*.find*(..)) || execution(public * com.lishun.service.*.query*(..))") public void read(JoinPoint joinPoint){ DynamicDataSource.setDB("read_ds");// 切换数据源 } @Before("execution(public * com.lishun.service.*.insert*(..)) || execution(public * com.lishun.service.*.add*(..))") public void write(JoinPoint joinPoint){ DynamicDataSource.setDB("write_ds");// 切换数据源 } */ @After("dataSource()") public void after(JoinPoint joinPoint){ DynamicDataSource.clearDB();// 切换数据源 } @AfterThrowing("dataSource()") public void AfterThrowing(){ System.out.println("AfterThrowing---------------" ); } @Override public int getOrder() { return 1;//数值越小该切面先被执行,先选择数据源(防止事务aop使用数据源出现空异常) }} |

8 测试 mapper的代码就不贴了,主要是service和controller

service

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

|

@Service@Transactionalpublic class WmIpInfoServiceImpl implements WmIpInfoService { @Autowired public WmIpInfoMapper wmIpInfoMapper; @Override @ReadDataSource public WmIpInfo findOneById(String id) { //wmIpInfoMapper.selectByPrimaryKey(id); return wmIpInfoMapper.selectByPrimaryKey(id); } @Override @WriteDataSource public int insert(WmIpInfo wmIpInfo) { int result = wmIpInfoMapper.insert(wmIpInfo); return result; }} |

contrlloer

|

1

2

3

4

5

6

7

8

9

10

11

12

13

|

@RestControllerpublic class IndexController { @Autowired public WmIpInfoService wmIpInfoService; @GetMapping("/index/{id}") public WmIpInfo index(@PathVariable(value = "id") String id){ WmIpInfo wmIpInfo = new WmIpInfo(); wmIpInfo.setId(UUID.randomUUID().toString()); wmIpInfoService.insert(wmIpInfo); wmIpInfoService.findOneById(id); return null; }} |

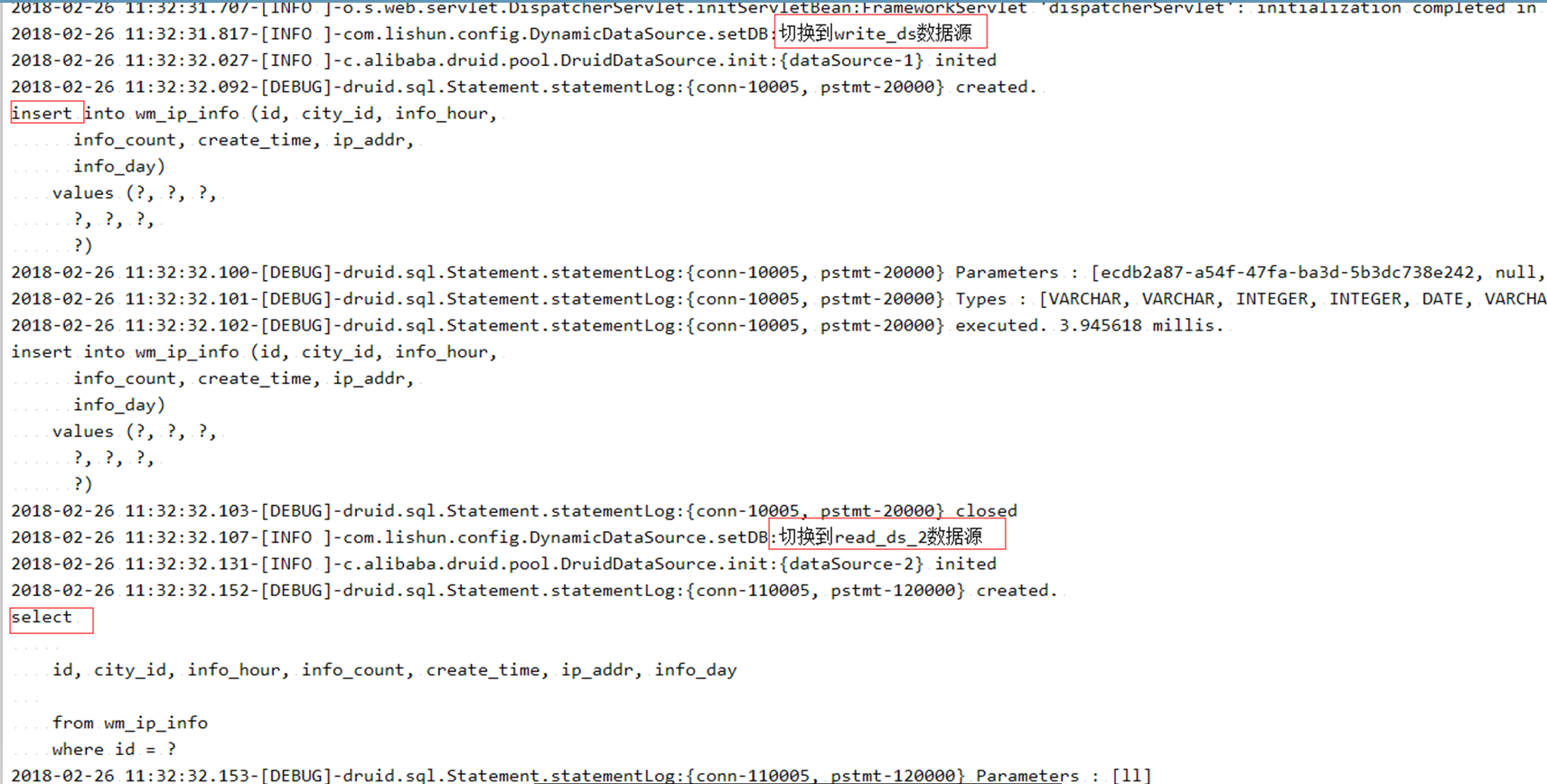

运行spring boot 在浏览器输入http://localhost:9022/index/123456

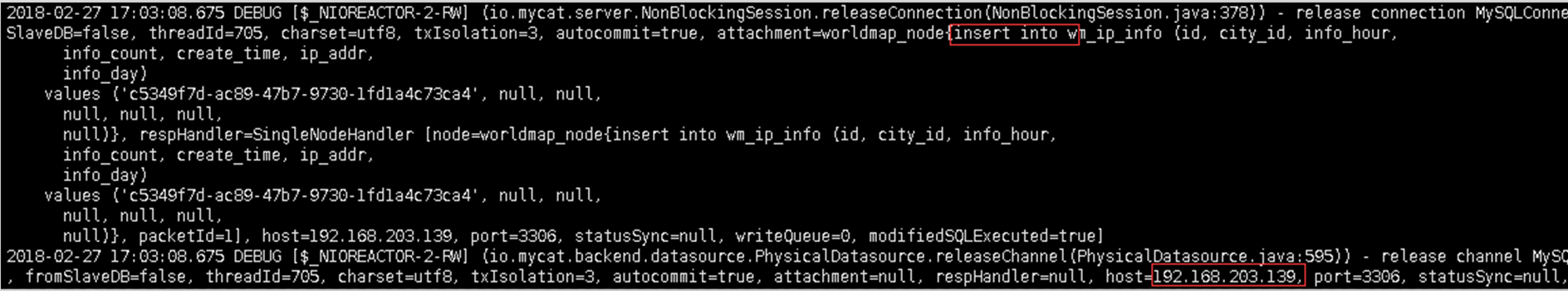

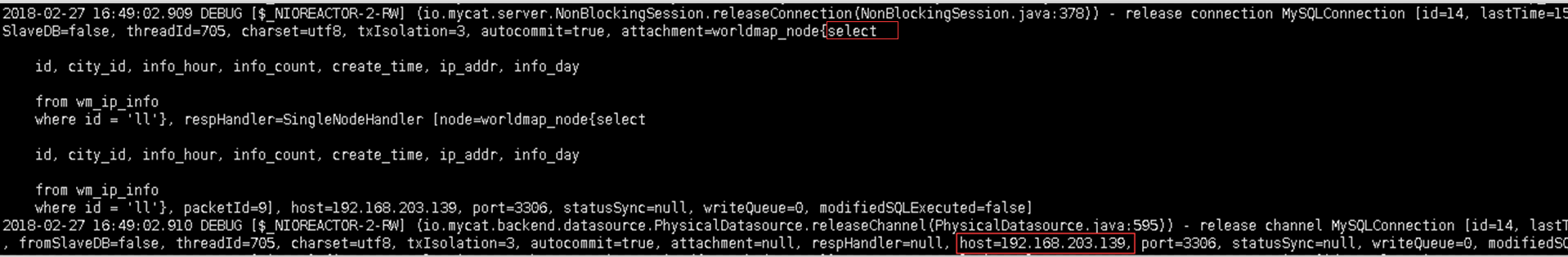

查看日志

基于中间件方式实现读写分离(mycat:主要是mycat安装使用及其注意事项)

3-1 下载 http://dl.mycat.io/

3-2 解压,配置MYCAT_HOME;

3-3 修改文件 vim conf/schema.xml

<?xml version="1.0"?> <!DOCTYPE mycat:schema SYSTEM "schema.dtd"> <mycat:schema xmlns:mycat="http://io.mycat/"> <schema name="worldmap" checkSQLschema="false" sqlMaxLimit="100" dataNode="worldmap_node"></schema> <dataNode name="worldmap_node" dataHost="worldmap_host" database="worldmap" /> <!-- database:数据库名称 --> <dataHost name="worldmap_host" maxCon="1000" minCon="10" balance="1" writeType="0" dbType="mysql" dbDriver="native" switchType="2" slaveThreshold="100"> <heartbeat>select user()</heartbeat> <writeHost host="hostM1" url="192.168.203.135:3306" user="root" password="123456"><!--读写分离模式,写库:192.168.203.135,读库192.168.203.139--> <readHost host="hostR1" url="192.168.203.139:3306" user="root" password="123456" /> </writeHost> <writeHost host="hostM2" url="192.168.203.135:3306" user="root" password="123456"> <!--主从切换模式,当hostM1宕机,读写操作在hostM2服务器数据库执行--> </dataHost> </mycat:schema>

配置说明:

name:属性唯一标识dataHost标签,供上层的标签使用。

maxCon:最大连接数

minCon:最先连接数

balance

1、balance=0 不开启读写分离机制,所有读操作都发送到当前可用的writehost了 .

2、balance=1 全部的readhost与stand by writeHost 参与select语句的负载均衡。简单的说,双主双从模式(M1àS1,M2àS2,并且M1和M2互为主备),正常情况下,M1,S1,S2都参与select语句的复杂均衡。

3、balance=2 所有读操作都随机的在readhost和writehost上分发

writeType 负载均衡类型,目前的取值有3种:

1、writeType="0″, 所有写操作发送到配置的第一个writeHost。

2、writeType="1″,所有写操作都随机的发送到配置的writeHost。

3、writeType="2″,不执行写操作。

switchType

1、switchType=-1 表示不自动切换

2、switchType=1 默认值,自动切换

3、switchType=2 基于MySQL 主从同步的状态决定是否切换

dbType:数据库类型 mysql,postgresql,mongodb、oracle、spark等。

heartbeat:用于和后端数据库进行心跳检查的语句。例如,MYSQL可以使用select user(),Oracle可以使用select 1 from dual等。

这个标签还有一个connectionInitSql属性,主要是当使用Oracla数据库时,需要执行的初始化SQL语句就这个放到这里面来。例如:altersession set nls_date_format='yyyy-mm-dd hh24:mi:ss'

当switchType=2 主从切换的语句必须是:show slave status

writeHost、readHost:这两个标签都指定后端数据库的相关配置给mycat,用于实例化后端连接池。唯一不同的是,writeHost指定写实例、readHost指定读实例,

在一个dataHost内可以定义多个writeHost和readHost。但是,如果writeHost指定的后端数据库宕机,那么这个writeHost绑定的所有readHost都将不可用。

另一方面,由于这个writeHost宕机系统会自动的检测到,并切换到备用的writeHost上去。

3-4 修改文件 vim conf/server.xml

<!DOCTYPE mycat:server SYSTEM "server.dtd"> <mycat:server xmlns:mycat="http://io.mycat/"> <system> </system> <user name="root"> <property name="password">123456</property> <property name="schemas">worldmap</property><!--与schema.xml相对应--> <property name="readOnly">false</property> <!--readOnly是应用连接中间件逻辑库所具有的权限。true为只读,false为读写都有,默认为false。--> </user> </mycat:server>

3-5 启动 mycat start

查看启动日志:logs/wrapper.log;,正常启动成功后会有mycat.log日志,如果服务未启动成功不会有对应日志

3-6:对于开发人员mycat相当于一个新的数据库服务端(默认端口8066),开发人员增删改查不再是直接连接数据库,而是连接数据库中间件,中间件通过其自带的lua脚本进行sql判断,来路由到指定数据库(实质根据selet,insert,update,delete关键字)

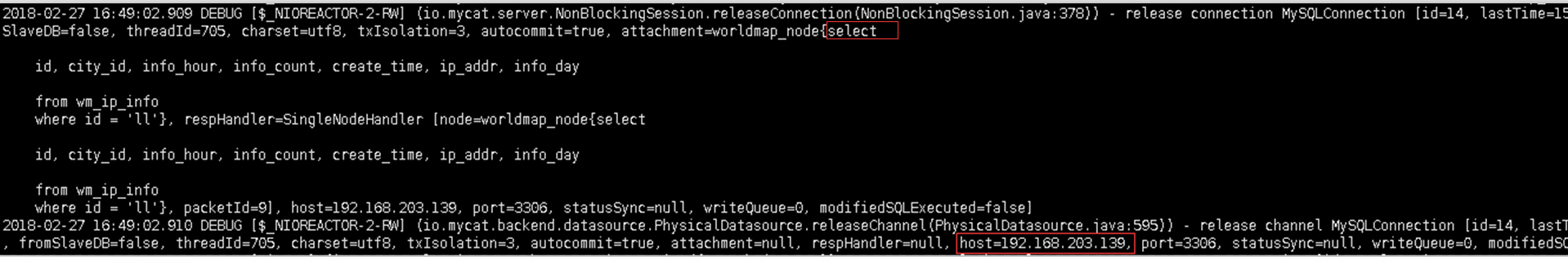

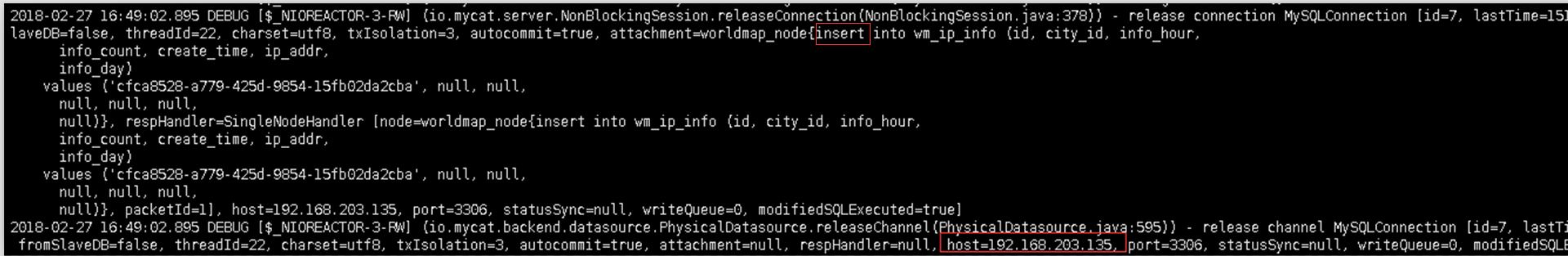

3-7:测试读写分离

读数据路由到 192.168.203.139

写数据路由到192.168.203.135

当主库宕机,读写操作都在192.168.203.139

3-8:注意事项

一般使用框架都会用到事务,如果都要到事务那么就都会访问主服务器,达不到分离的效果,因此配置事务的时候要注意区分,比如只对包含增删改的进行事务配置