第15章 自动编码器

写在前面

参考书

《机器学习实战——基于Scikit-Learn和TensorFlow》

工具

python3.5.1,Jupyter Notebook, Pycharm

使用不完整的线性自动编码器实现PCA

- 一个自动编码器由两部分组成:编码器(或称为识别网络),解码器(或称为生成网络)。

- 输出层的神经元数量必须等于输入层的数量。

- 输出通常被称为重建,因为自动编码器尝试重建输入,并且成本函数包含重建损失,当重建与输入不同时,该损失会惩罚模型。

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

# coding=utf-8

"""

@author: Li Tian

@contact: 694317828@qq.com

@software: pycharm

@file: autoencoder_1.py

@time: 2019/6/20 14:31

@desc: 使用不完整的线性自动编码器实现PCA

"""

import tensorflow as tf

from tensorflow.contrib.layers import fully_connected

import numpy as np

import matplotlib.pyplot as plt

from mpl_toolkits import mplot3d

# 生成数据

import numpy.random as rnd

rnd.seed(4)

m = 200

w1, w2 = 0.1, 0.3

noise = 0.1

angles = rnd.rand(m) * 3 * np.pi / 2 - 0.5

data = np.empty((m, 3))

data[:, 0] = np.cos(angles) + np.sin(angles) / 2 + noise * rnd.randn(m) / 2

data[:, 1] = np.sin(angles) * 0.7 + noise * rnd.randn(m) / 2

data[:, 2] = data[:, 0] * w1 + data[:, 1] * w2 + noise * rnd.randn(m)

# 数据标准化

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

X_train = scaler.fit_transform(data[:100])

X_test = scaler.transform(data[100:])

# 3D inputs

n_inputs = 3

# 2D codings

n_hidden = 2

n_outputs = n_inputs

learning_rate = 0.01

n_iterations = 1000

X = tf.placeholder(tf.float32, shape=[None, n_inputs])

hidden = fully_connected(X, n_hidden, activation_fn=None)

outputs = fully_connected(hidden, n_outputs, activation_fn=None)

# MSE

reconstruction_loss = tf.reduce_mean(tf.square(outputs - X))

optimizer = tf.train.AdamOptimizer(learning_rate)

training_op = optimizer.minimize(reconstruction_loss)

init = tf.global_variables_initializer()

codings = hidden

with tf.Session() as sess:

init.run()

for n_iterations in range(n_iterations):

training_op.run(feed_dict={X: X_train})

codings_val = codings.eval(feed_dict={X: X_test})

fig = plt.figure(figsize=(4, 3), dpi=300)

plt.plot(codings_val[:, 0], codings_val[:, 1], "b.")

plt.xlabel("$z_1$", fontsize=18)

plt.ylabel("$z_2$", fontsize=18, rotation=0)

plt.show()

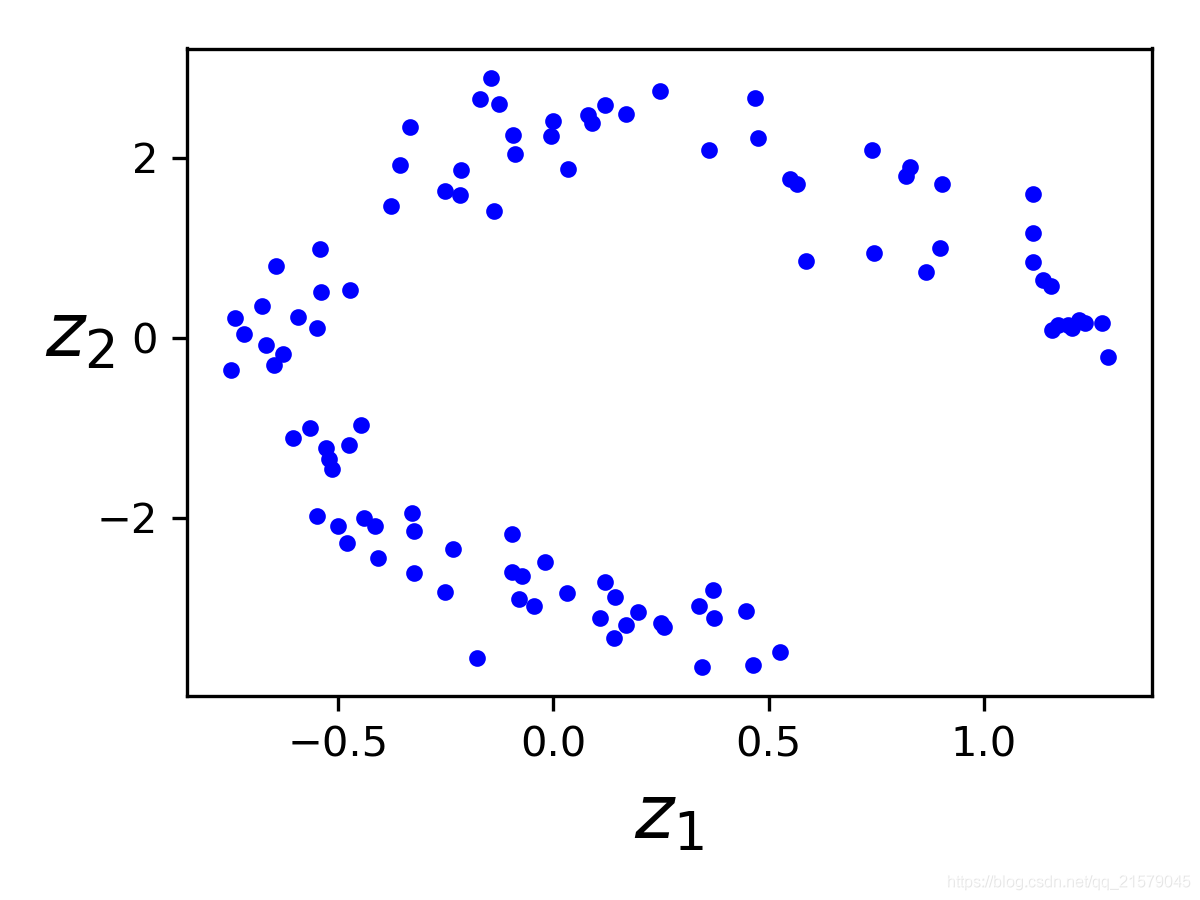

- 运行结果

- 画出的是中间两个神经元隐藏层。(降维)

栈式自动编码器(深度自动编码器)

- 使用He初始化,ELU激活函数,以及$l_2$正则化构建了一个MNIST栈式自动编码器。

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

# coding=utf-8

"""

@author: Li Tian

@contact: 694317828@qq.com

@software: pycharm

@file: autoencoder_2.py

@time: 2019/6/21 8:48

@desc: 使用He初始化,ELU激活函数,以及$l_2$正则化构建了一个MNIST栈式自动编码器

"""

import tensorflow as tf

from tensorflow.contrib.layers import fully_connected

from tensorflow.examples.tutorials.mnist import input_data

import matplotlib.pyplot as plt

import sys

n_inputs = 28 * 28 # for MNIST

n_hidden1 = 300

n_hidden2 = 150 # codings

n_hidden3 = n_hidden1

n_outputs = n_inputs

learning_rate = 0.001

l2_reg = 0.001

n_epochs = 30

batch_size = 150

X = tf.placeholder(tf.float32, shape=[None, n_inputs])

with tf.contrib.framework.arg_scope(

[fully_connected],

activation_fn=tf.nn.elu,

weights_initializer=tf.contrib.layers.variance_scaling_initializer(),

weights_regularizer=tf.contrib.layers.l2_regularizer(l2_reg)

):

hidden1 = fully_connected(X, n_hidden1)

hidden2 = fully_connected(hidden1, n_hidden2) # codings

hidden3 = fully_connected(hidden2, n_hidden3)

outputs = fully_connected(hidden3, n_outputs, activation_fn=None)

reconstruction_loss = tf.reduce_mean(tf.square(outputs - X)) # MSE

reg_losses = tf.get_collection(tf.GraphKeys.REGULARIZATION_LOSSES)

loss = tf.add_n([reconstruction_loss] + reg_losses)

optimizer = tf.train.AdamOptimizer(learning_rate)

training_op = optimizer.minimize(loss)

init = tf.global_variables_initializer()

saver = tf.train.Saver()

with tf.Session() as sess:

mnist = input_data.read_data_sets('D:/Python3Space/BookStudy/book2/MNIST_data/')

init.run()

for epoch in range(n_epochs):

n_batches = mnist.train.num_examples // batch_size

for iteration in range(n_batches):

print("

{}%".format(100 * iteration // n_batches), end="")

sys.stdout.flush()

X_batch, y_batch = mnist.train.next_batch(batch_size)

sess.run(training_op, feed_dict={X: X_batch})

loss_train = reconstruction_loss.eval(feed_dict={X: X_batch})

print("

{}".format(epoch), "Train MSE:", loss_train)

saver.save(sess, "D:/Python3Space/BookStudy/book4/model/my_model_all_layers.ckpt")

# 可视化

def plot_image(image, shape=[28, 28]):

plt.imshow(image.reshape(shape), cmap="Greys", interpolation="nearest")

plt.axis("off")

def show_reconstructed_digits(X, outputs, model_path = None, n_test_digits = 2):

with tf.Session() as sess:

if model_path:

saver.restore(sess, model_path)

X_test = mnist.test.images[:n_test_digits]

outputs_val = outputs.eval(feed_dict={X: X_test})

fig = plt.figure(figsize=(8, 3 * n_test_digits))

for digit_index in range(n_test_digits):

plt.subplot(n_test_digits, 2, digit_index * 2 + 1)

plot_image(X_test[digit_index])

plt.subplot(n_test_digits, 2, digit_index * 2 + 2)

plot_image(outputs_val[digit_index])

plt.show()

show_reconstructed_digits(X, outputs, "D:/Python3Space/BookStudy/book4/model/my_model_all_layers.ckpt")

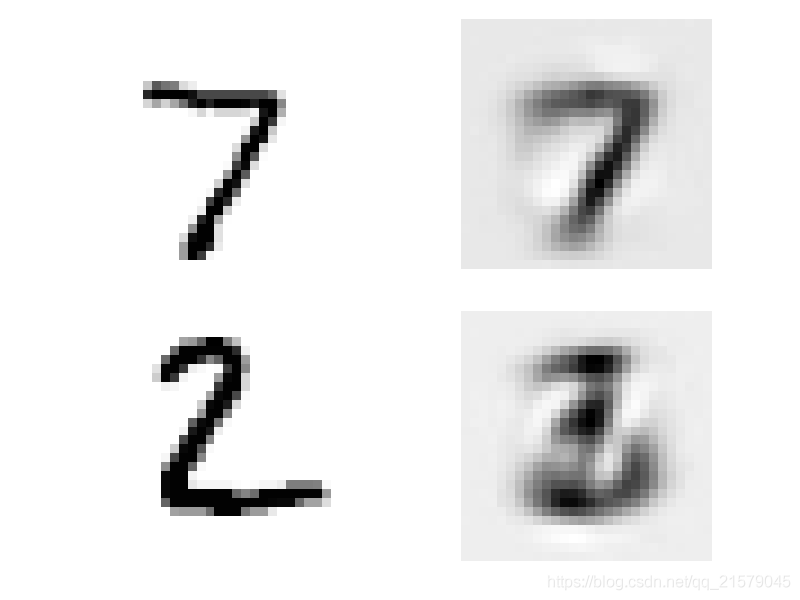

- 运行结果

权重绑定

- 将解码层的权重和编码层的权重联系起来。$W_{N-L+1} = W_L^T$

- weight3和weights4不是变量,它们分别是weights2和weights1的转置(它们被“绑定”在一起)。

- 因为它们不是变量,所以没有必要进行正则化,只正则化weights1和weigts2。

- 偏置项从来不会被绑定,也不会被正则化。

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

# coding=utf-8

"""

@author: Li Tian

@contact: 694317828@qq.com

@software: pycharm

@file: autoencoder_3.py

@time: 2019/6/21 9:48

@desc: 权重绑定

"""

import tensorflow as tf

from tensorflow.contrib.layers import fully_connected

from tensorflow.examples.tutorials.mnist import input_data

import matplotlib.pyplot as plt

import sys

# 在复制一遍可视化代码

def plot_image(image, shape=[28, 28]):

plt.imshow(image.reshape(shape), cmap="Greys", interpolation="nearest")

plt.axis("off")

def show_reconstructed_digits(X, outputs, model_path = None, n_test_digits = 2):

with tf.Session() as sess:

if model_path:

saver.restore(sess, model_path)

X_test = mnist.test.images[:n_test_digits]

outputs_val = outputs.eval(feed_dict={X: X_test})

fig = plt.figure(figsize=(8, 3 * n_test_digits))

for digit_index in range(n_test_digits):

plt.subplot(n_test_digits, 2, digit_index * 2 + 1)

plot_image(X_test[digit_index])

plt.subplot(n_test_digits, 2, digit_index * 2 + 2)

plot_image(outputs_val[digit_index])

plt.show()

n_inputs = 28 * 28

n_hidden1 = 300

n_hidden2 = 150 # codings

n_hidden3 = n_hidden1

n_outputs = n_inputs

learning_rate = 0.01

l2_reg = 0.001

n_epochs = 5

batch_size = 150

activation = tf.nn.elu

regularizer = tf.contrib.layers.l2_regularizer(l2_reg)

initializer = tf.contrib.layers.variance_scaling_initializer()

X = tf.placeholder(tf.float32, shape=[None, n_inputs])

weights1_init = initializer([n_inputs, n_hidden1])

weights2_init = initializer([n_hidden1, n_hidden2])

weights1 = tf.Variable(weights1_init, dtype=tf.float32, name='weights1')

weights2 = tf.Variable(weights2_init, dtype=tf.float32, name='weights2')

weights3 = tf.transpose(weights2, name='weights3') # 权重绑定

weights4 = tf.transpose(weights1, name='weights4') # 权重绑定

biases1 = tf.Variable(tf.zeros(n_hidden1), name='biases1')

biases2 = tf.Variable(tf.zeros(n_hidden2), name='biases2')

biases3 = tf.Variable(tf.zeros(n_hidden3), name='biases3')

biases4 = tf.Variable(tf.zeros(n_outputs), name='biases4')

hidden1 = activation(tf.matmul(X, weights1) + biases1)

hidden2 = activation(tf.matmul(hidden1, weights2) + biases2)

hidden3 = activation(tf.matmul(hidden2, weights3) + biases3)

outputs = tf.matmul(hidden3, weights4) + biases4

reconstruction_loss = tf.reduce_mean(tf.square(outputs - X))

reg_loss = regularizer(weights1) + regularizer(weights2)

loss = reconstruction_loss + reg_loss

optimizer = tf.train.AdamOptimizer(learning_rate)

training_op = optimizer.minimize(loss)

init = tf.global_variables_initializer()

saver = tf.train.Saver()

with tf.Session() as sess:

mnist = input_data.read_data_sets('D:/Python3Space/BookStudy/book2/MNIST_data/')

init.run()

for epoch in range(n_epochs):

n_batches = mnist.train.num_examples // batch_size

for iteration in range(n_batches):

print("

{}%".format(100 * iteration // n_batches), end="")

sys.stdout.flush()

X_batch, y_batch = mnist.train.next_batch(batch_size)

sess.run(training_op, feed_dict={X: X_batch})

loss_train = reconstruction_loss.eval(feed_dict={X: X_batch})

print("

{}".format(epoch), "Train MSE:", loss_train)

saver.save(sess, "D:/Python3Space/BookStudy/book4/model/my_model_tying_weights.ckpt")

show_reconstructed_digits(X, outputs, "D:/Python3Space/BookStudy/book4/model/my_model_tying_weights.ckpt")

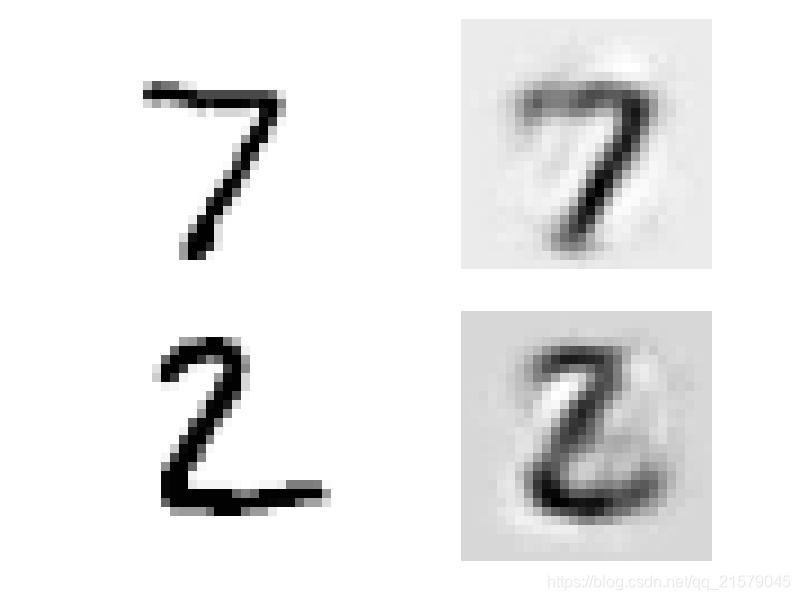

- 运行结果

在多个图中一次训练一个自动编码器

- 有许多方法可以一次训练一个自动编码器。第一种方法是使用不同的图形对每个自动编码器进行训练,然后我们通过简单地初始化它和从这些自动编码器复制的权重和偏差来创建堆叠的自动编码器。

- 让我们创建一个函数来训练一个自动编码器并返回转换后的训练集(即隐藏层的输出)和模型参数。

- 现在让我们训练两个自动编码器。第一个是关于训练数据的训练,第二个是关于上一个自动编码器隐藏层输出的训练。

- 最后,通过简单地重用我们刚刚训练的自动编码器的权重和偏差,我们可以创建一个堆叠的自动编码器。

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

# coding=utf-8

"""

@author: Li Tian

@contact: 694317828@qq.com

@software: pycharm

@file: autoencoder_6.py

@time: 2019/6/24 10:29

@desc: 在多个图中一次训练一个自动编码器

"""

import tensorflow as tf

from tensorflow.contrib.layers import fully_connected

from tensorflow.examples.tutorials.mnist import input_data

import matplotlib.pyplot as plt

import sys

import numpy.random as rnd

from functools import partial

# 在复制一遍可视化代码

def plot_image(image, shape=[28, 28]):

plt.imshow(image.reshape(shape), cmap="Greys", interpolation="nearest")

plt.axis("off")

def show_reconstructed_digits(X, outputs, n_test_digits=2):

with tf.Session() as sess:

# if model_path:

# saver.restore(sess, model_path)

X_test = mnist.test.images[:n_test_digits]

outputs_val = outputs.eval(feed_dict={X: X_test})

fig = plt.figure(figsize=(8, 3 * n_test_digits))

for digit_index in range(n_test_digits):

plt.subplot(n_test_digits, 2, digit_index * 2 + 1)

plot_image(X_test[digit_index])

plt.subplot(n_test_digits, 2, digit_index * 2 + 2)

plot_image(outputs_val[digit_index])

plt.show()

# 有许多方法可以一次训练一个自动编码器。第一种方法是使用不同的图形对每个自动编码器进行训练,

# 然后我们通过简单地初始化它和从这些自动编码器复制的权重和偏差来创建堆叠的自动编码器。

def train_autoencoder(X_train, n_neurons, n_epochs, batch_size,

learning_rate=0.01, l2_reg=0.0005,

activation=tf.nn.elu, seed=42):

graph = tf.Graph()

with graph.as_default():

tf.set_random_seed(seed)

n_inputs = X_train.shape[1]

X = tf.placeholder(tf.float32, shape=[None, n_inputs])

my_dense_layer = partial(

tf.layers.dense,

activation=activation,

kernel_initializer=tf.contrib.layers.variance_scaling_initializer(),

kernel_regularizer=tf.contrib.layers.l2_regularizer(l2_reg))

hidden = my_dense_layer(X, n_neurons, name="hidden")

outputs = my_dense_layer(hidden, n_inputs, activation=None, name="outputs")

reconstruction_loss = tf.reduce_mean(tf.square(outputs - X))

reg_losses = tf.get_collection(tf.GraphKeys.REGULARIZATION_LOSSES)

loss = tf.add_n([reconstruction_loss] + reg_losses)

optimizer = tf.train.AdamOptimizer(learning_rate)

training_op = optimizer.minimize(loss)

init = tf.global_variables_initializer()

with tf.Session(graph=graph) as sess:

init.run()

for epoch in range(n_epochs):

n_batches = len(X_train) // batch_size

for iteration in range(n_batches):

print("

{}%".format(100 * iteration // n_batches), end="")

sys.stdout.flush()

indices = rnd.permutation(len(X_train))[:batch_size]

X_batch = X_train[indices]

sess.run(training_op, feed_dict={X: X_batch})

loss_train = reconstruction_loss.eval(feed_dict={X: X_batch})

print("

{}".format(epoch), "Train MSE:", loss_train)

params = dict([(var.name, var.eval()) for var in tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES)])

hidden_val = hidden.eval(feed_dict={X: X_train})

return hidden_val, params["hidden/kernel:0"], params["hidden/bias:0"], params["outputs/kernel:0"], params["outputs/bias:0"]

mnist = input_data.read_data_sets('F:/JupyterWorkspace/MNIST_data/')

hidden_output, W1, b1, W4, b4 = train_autoencoder(mnist.train.images, n_neurons=300, n_epochs=4, batch_size=150)

_, W2, b2, W3, b3 = train_autoencoder(hidden_output, n_neurons=150, n_epochs=4, batch_size=150)

# 最后,通过简单地重用我们刚刚训练的自动编码器的权重和偏差,我们可以创建一个堆叠的自动编码器。

n_inputs = 28*28

X = tf.placeholder(tf.float32, shape=[None, n_inputs])

hidden1 = tf.nn.elu(tf.matmul(X, W1) + b1)

hidden2 = tf.nn.elu(tf.matmul(hidden1, W2) + b2)

hidden3 = tf.nn.elu(tf.matmul(hidden2, W3) + b3)

outputs = tf.matmul(hidden3, W4) + b4

show_reconstructed_digits(X, outputs)

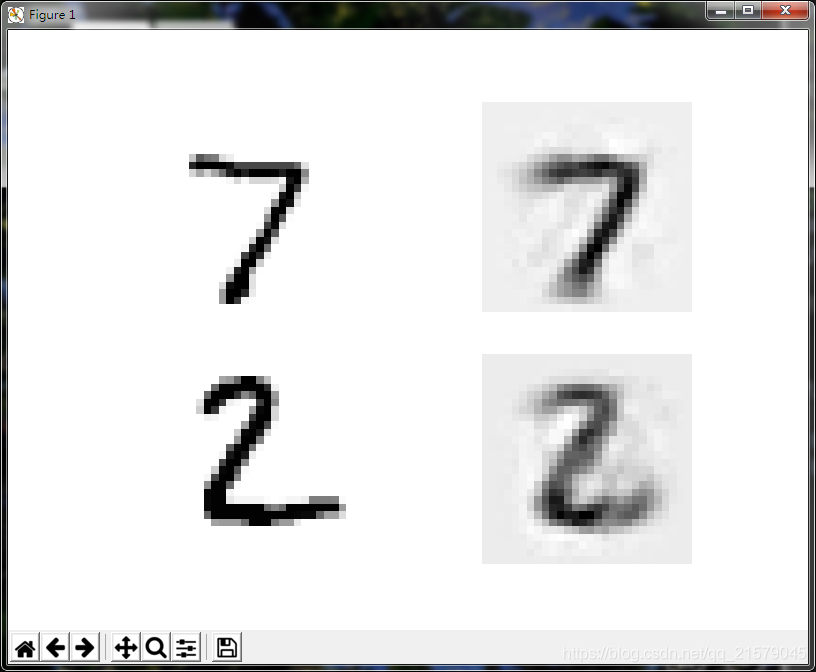

- 运行结果

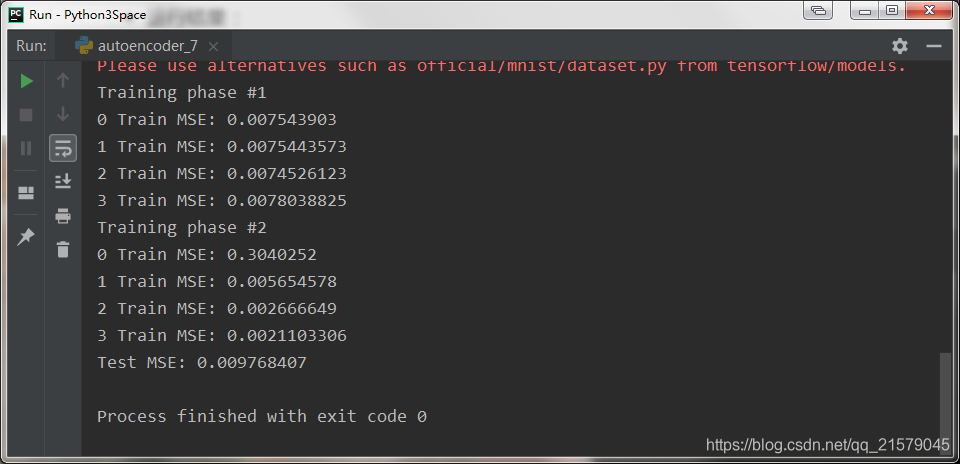

在一个图中一次训练一个自动编码器

- 另一种方法是使用单个图。为此,我们为完整的堆叠式自动编码器创建了图形,但是我们还添加了独立训练每个自动编码器的操作:第一阶段训练底层和顶层(即。第一个自动编码器和第二阶段训练两个中间层(即。第二个自动编码器)。

- 通过设置

optimizer.minimize参数中的var_list,达到freeze其他权重的目的。

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

# coding=utf-8

"""

@author: Li Tian

@contact: 694317828@qq.com

@software: pycharm

@file: autoencoder_7.py

@time: 2019/7/2 9:02

@desc: 在一个图中一次训练一个自动编码器

"""

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

import matplotlib.pyplot as plt

import sys

import numpy.random as rnd

from functools import partial

n_inputs = 28 * 28

n_hidden1 = 300

n_hidden2 = 150 # codings

n_hidden3 = n_hidden1

n_outputs = n_inputs

learning_rate = 0.01

l2_reg = 0.0001

activation = tf.nn.elu

regularizer = tf.contrib.layers.l2_regularizer(l2_reg)

initializer = tf.contrib.layers.variance_scaling_initializer()

X = tf.placeholder(tf.float32, shape=[None, n_inputs])

weights1_init = initializer([n_inputs, n_hidden1])

weights2_init = initializer([n_hidden1, n_hidden2])

weights3_init = initializer([n_hidden2, n_hidden3])

weights4_init = initializer([n_hidden3, n_outputs])

weights1 = tf.Variable(weights1_init, dtype=tf.float32, name="weights1")

weights2 = tf.Variable(weights2_init, dtype=tf.float32, name="weights2")

weights3 = tf.Variable(weights3_init, dtype=tf.float32, name="weights3")

weights4 = tf.Variable(weights4_init, dtype=tf.float32, name="weights4")

biases1 = tf.Variable(tf.zeros(n_hidden1), name="biases1")

biases2 = tf.Variable(tf.zeros(n_hidden2), name="biases2")

biases3 = tf.Variable(tf.zeros(n_hidden3), name="biases3")

biases4 = tf.Variable(tf.zeros(n_outputs), name="biases4")

hidden1 = activation(tf.matmul(X, weights1) + biases1)

hidden2 = activation(tf.matmul(hidden1, weights2) + biases2)

hidden3 = activation(tf.matmul(hidden2, weights3) + biases3)

outputs = tf.matmul(hidden3, weights4) + biases4

reconstruction_loss = tf.reduce_mean(tf.square(outputs - X))

optimizer = tf.train.AdamOptimizer(learning_rate)

# 第一阶段

with tf.name_scope("phase1"):

phase1_outputs = tf.matmul(hidden1, weights4) + biases4 # bypass hidden2 and hidden3

phase1_reconstruction_loss = tf.reduce_mean(tf.square(phase1_outputs - X))

phase1_reg_loss = regularizer(weights1) + regularizer(weights4)

phase1_loss = phase1_reconstruction_loss + phase1_reg_loss

phase1_training_op = optimizer.minimize(phase1_loss)

# 第二阶段

with tf.name_scope("phase2"):

phase2_reconstruction_loss = tf.reduce_mean(tf.square(hidden3 - hidden1))

phase2_reg_loss = regularizer(weights2) + regularizer(weights3)

phase2_loss = phase2_reconstruction_loss + phase2_reg_loss

train_vars = [weights2, biases2, weights3, biases3]

phase2_training_op = optimizer.minimize(phase2_loss, var_list=train_vars) # freeze hidden1

init = tf.global_variables_initializer()

saver = tf.train.Saver()

training_ops = [phase1_training_op, phase2_training_op]

reconstruction_losses = [phase1_reconstruction_loss, phase2_reconstruction_loss]

n_epochs = [4, 4]

batch_sizes = [150, 150]

with tf.Session() as sess:

mnist = input_data.read_data_sets('D:/Python3Space/BookStudy/book2/MNIST_data/')

init.run()

for phase in range(2):

print("Training phase #{}".format(phase + 1))

for epoch in range(n_epochs[phase]):

n_batches = mnist.train.num_examples // batch_sizes[phase]

for iteration in range(n_batches):

print("

{}%".format(100 * iteration // n_batches), end="")

sys.stdout.flush()

X_batch, y_batch = mnist.train.next_batch(batch_sizes[phase])

sess.run(training_ops[phase], feed_dict={X: X_batch})

loss_train = reconstruction_losses[phase].eval(feed_dict={X: X_batch})

print("

{}".format(epoch), "Train MSE:", loss_train)

saver.save(sess, "D:/Python3Space/BookStudy/book4/model/my_model_one_at_a_time.ckpt")

loss_test = reconstruction_loss.eval(feed_dict={X: mnist.test.images})

print("Test MSE:", loss_test)

- 运行结果:

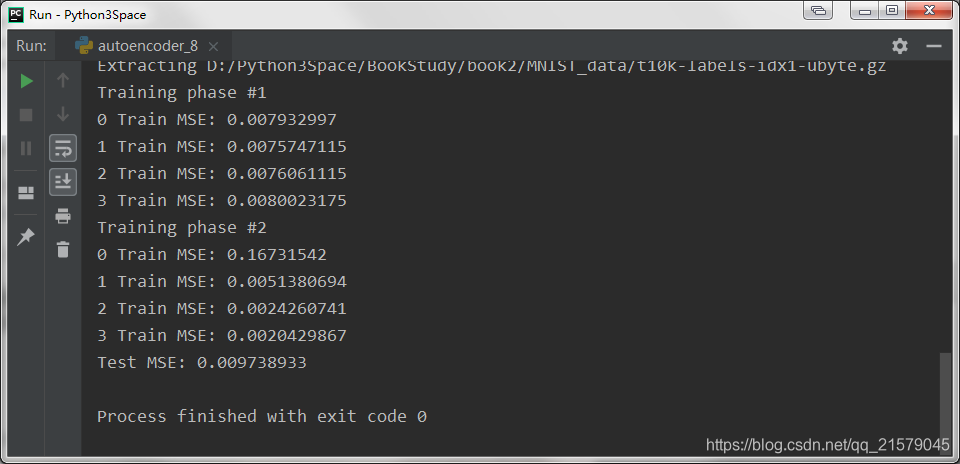

馈送冻结层输出缓存

- 由于隐藏层 1 在阶段 2 期间被冻结,所以对于任何给定的训练实例其输出将总是相同的。为了避免在每个时期重新计算隐藏层1的输出,可以在阶段 1 结束时为整个训练集计算它,然后直接在阶段 2 中输入隐藏层 1 的缓存输出。这可以得到一个不错的性能上的提升。

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

# coding=utf-8

"""

@author: Li Tian

@contact: 694317828@qq.com

@software: pycharm

@file: autoencoder_8.py

@time: 2019/7/2 9:32

@desc: 馈送冻结层输出缓存

"""

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

import numpy.random as rnd

import sys

n_inputs = 28 * 28

n_hidden1 = 300

n_hidden2 = 150 # codings

n_hidden3 = n_hidden1

n_outputs = n_inputs

learning_rate = 0.01

l2_reg = 0.0001

activation = tf.nn.elu

regularizer = tf.contrib.layers.l2_regularizer(l2_reg)

initializer = tf.contrib.layers.variance_scaling_initializer()

X = tf.placeholder(tf.float32, shape=[None, n_inputs])

weights1_init = initializer([n_inputs, n_hidden1])

weights2_init = initializer([n_hidden1, n_hidden2])

weights3_init = initializer([n_hidden2, n_hidden3])

weights4_init = initializer([n_hidden3, n_outputs])

weights1 = tf.Variable(weights1_init, dtype=tf.float32, name="weights1")

weights2 = tf.Variable(weights2_init, dtype=tf.float32, name="weights2")

weights3 = tf.Variable(weights3_init, dtype=tf.float32, name="weights3")

weights4 = tf.Variable(weights4_init, dtype=tf.float32, name="weights4")

biases1 = tf.Variable(tf.zeros(n_hidden1), name="biases1")

biases2 = tf.Variable(tf.zeros(n_hidden2), name="biases2")

biases3 = tf.Variable(tf.zeros(n_hidden3), name="biases3")

biases4 = tf.Variable(tf.zeros(n_outputs), name="biases4")

hidden1 = activation(tf.matmul(X, weights1) + biases1)

hidden2 = activation(tf.matmul(hidden1, weights2) + biases2)

hidden3 = activation(tf.matmul(hidden2, weights3) + biases3)

outputs = tf.matmul(hidden3, weights4) + biases4

reconstruction_loss = tf.reduce_mean(tf.square(outputs - X))

optimizer = tf.train.AdamOptimizer(learning_rate)

# 第一阶段

with tf.name_scope("phase1"):

phase1_outputs = tf.matmul(hidden1, weights4) + biases4 # bypass hidden2 and hidden3

phase1_reconstruction_loss = tf.reduce_mean(tf.square(phase1_outputs - X))

phase1_reg_loss = regularizer(weights1) + regularizer(weights4)

phase1_loss = phase1_reconstruction_loss + phase1_reg_loss

phase1_training_op = optimizer.minimize(phase1_loss)

# 第二阶段

with tf.name_scope("phase2"):

phase2_reconstruction_loss = tf.reduce_mean(tf.square(hidden3 - hidden1))

phase2_reg_loss = regularizer(weights2) + regularizer(weights3)

phase2_loss = phase2_reconstruction_loss + phase2_reg_loss

train_vars = [weights2, biases2, weights3, biases3]

phase2_training_op = optimizer.minimize(phase2_loss, var_list=train_vars) # freeze hidden1

init = tf.global_variables_initializer()

saver = tf.train.Saver()

training_ops = [phase1_training_op, phase2_training_op]

reconstruction_losses = [phase1_reconstruction_loss, phase2_reconstruction_loss]

n_epochs = [4, 4]

batch_sizes = [150, 150]

with tf.Session() as sess:

mnist = input_data.read_data_sets('D:/Python3Space/BookStudy/book2/MNIST_data/')

init.run()

for phase in range(2):

print("Training phase #{}".format(phase + 1))

if phase == 1:

hidden1_cache = hidden1.eval(feed_dict={X: mnist.train.images})

for epoch in range(n_epochs[phase]):

n_batches = mnist.train.num_examples // batch_sizes[phase]

for iteration in range(n_batches):

print("

{}%".format(100 * iteration // n_batches), end="")

sys.stdout.flush()

if phase == 1:

indices = rnd.permutation(mnist.train.num_examples)

hidden1_batch = hidden1_cache[indices[:batch_sizes[phase]]]

feed_dict = {hidden1: hidden1_batch}

sess.run(training_ops[phase], feed_dict=feed_dict)

else:

X_batch, y_batch = mnist.train.next_batch(batch_sizes[phase])

feed_dict = {X: X_batch}

sess.run(training_ops[phase], feed_dict=feed_dict)

loss_train = reconstruction_losses[phase].eval(feed_dict=feed_dict)

print("

{}".format(epoch), "Train MSE:", loss_train)

saver.save(sess, "D:/Python3Space/BookStudy/book4/model/my_model_cache_frozen.ckpt")

loss_test = reconstruction_loss.eval(feed_dict={X: mnist.test.images})

print("Test MSE:", loss_test)

- 运行结果:

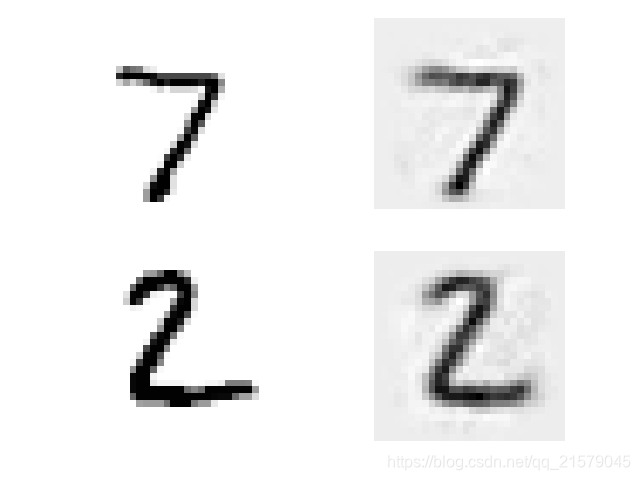

重建可视化

- 确保自编码器得到适当训练的一种方法是比较输入和输出。 它们必须非常相似,差异应该是不重要的细节。 我们来绘制两个随机数字及其重建:

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

# coding=utf-8

"""

@author: Li Tian

@contact: 694317828@qq.com

@software: pycharm

@file: autoencoder_9.py

@time: 2019/7/2 9:38

@desc: 重建可视化

"""

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

import matplotlib.pyplot as plt

n_inputs = 28 * 28

n_hidden1 = 300

n_hidden2 = 150 # codings

n_hidden3 = n_hidden1

n_outputs = n_inputs

learning_rate = 0.01

l2_reg = 0.0001

activation = tf.nn.elu

regularizer = tf.contrib.layers.l2_regularizer(l2_reg)

initializer = tf.contrib.layers.variance_scaling_initializer()

X = tf.placeholder(tf.float32, shape=[None, n_inputs])

weights1_init = initializer([n_inputs, n_hidden1])

weights2_init = initializer([n_hidden1, n_hidden2])

weights3_init = initializer([n_hidden2, n_hidden3])

weights4_init = initializer([n_hidden3, n_outputs])

weights1 = tf.Variable(weights1_init, dtype=tf.float32, name="weights1")

weights2 = tf.Variable(weights2_init, dtype=tf.float32, name="weights2")

weights3 = tf.Variable(weights3_init, dtype=tf.float32, name="weights3")

weights4 = tf.Variable(weights4_init, dtype=tf.float32, name="weights4")

biases1 = tf.Variable(tf.zeros(n_hidden1), name="biases1")

biases2 = tf.Variable(tf.zeros(n_hidden2), name="biases2")

biases3 = tf.Variable(tf.zeros(n_hidden3), name="biases3")

biases4 = tf.Variable(tf.zeros(n_outputs), name="biases4")

hidden1 = activation(tf.matmul(X, weights1) + biases1)

hidden2 = activation(tf.matmul(hidden1, weights2) + biases2)

hidden3 = activation(tf.matmul(hidden2, weights3) + biases3)

outputs = tf.matmul(hidden3, weights4) + biases4

mnist = input_data.read_data_sets('D:/Python3Space/BookStudy/book2/MNIST_data/')

n_test_digits = 2

X_test = mnist.test.images[:n_test_digits]

saver = tf.train.Saver()

with tf.Session() as sess:

saver.restore(sess, "D:/Python3Space/BookStudy/book4/model/my_model_one_at_a_time.ckpt") # not shown in the book

outputs_val = outputs.eval(feed_dict={X: X_test})

def plot_image(image, shape=[28, 28]):

plt.imshow(image.reshape(shape), cmap="Greys", interpolation="nearest")

plt.axis("off")

for digit_index in range(n_test_digits):

plt.subplot(n_test_digits, 2, digit_index * 2 + 1)

plot_image(X_test[digit_index])

plt.subplot(n_test_digits, 2, digit_index * 2 + 2)

plot_image(outputs_val[digit_index])

plt.show()

- 运行结果:

特征可视化

- 一旦你的自编码器学习了一些功能,你可能想看看它们。 有各种各样的技术。 可以说最简单的技术是在每个隐藏层中考虑每个神经元,并找到最能激活它的训练实例。

- 对于第一个隐藏层中的每个神经元,您可以创建一个图像,其中像素的强度对应于给定神经元的连接权重。

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

# coding=utf-8

"""

@author: Li Tian

@contact: 694317828@qq.com

@software: pycharm

@file: autoencoder_10.py

@time: 2019/7/2 9:49

@desc: 特征可视化

"""

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

import matplotlib.pyplot as plt

n_inputs = 28 * 28

n_hidden1 = 300

n_hidden2 = 150 # codings

n_hidden3 = n_hidden1

n_outputs = n_inputs

learning_rate = 0.01

l2_reg = 0.0001

activation = tf.nn.elu

regularizer = tf.contrib.layers.l2_regularizer(l2_reg)

initializer = tf.contrib.layers.variance_scaling_initializer()

X = tf.placeholder(tf.float32, shape=[None, n_inputs])

weights1_init = initializer([n_inputs, n_hidden1])

weights2_init = initializer([n_hidden1, n_hidden2])

weights3_init = initializer([n_hidden2, n_hidden3])

weights4_init = initializer([n_hidden3, n_outputs])

weights1 = tf.Variable(weights1_init, dtype=tf.float32, name="weights1")

weights2 = tf.Variable(weights2_init, dtype=tf.float32, name="weights2")

weights3 = tf.Variable(weights3_init, dtype=tf.float32, name="weights3")

weights4 = tf.Variable(weights4_init, dtype=tf.float32, name="weights4")

biases1 = tf.Variable(tf.zeros(n_hidden1), name="biases1")

biases2 = tf.Variable(tf.zeros(n_hidden2), name="biases2")

biases3 = tf.Variable(tf.zeros(n_hidden3), name="biases3")

biases4 = tf.Variable(tf.zeros(n_outputs), name="biases4")

hidden1 = activation(tf.matmul(X, weights1) + biases1)

hidden2 = activation(tf.matmul(hidden1, weights2) + biases2)

hidden3 = activation(tf.matmul(hidden2, weights3) + biases3)

outputs = tf.matmul(hidden3, weights4) + biases4

saver = tf.train.Saver()

# 在复制一遍可视化代码

def plot_image(image, shape=[28, 28]):

plt.imshow(image.reshape(shape), cmap="Greys", interpolation="nearest")

plt.axis("off")

with tf.Session() as sess:

saver.restore(sess, "D:/Python3Space/BookStudy/book4/model/my_model_one_at_a_time.ckpt") # not shown in the book

weights1_val = weights1.eval()

for i in range(5):

plt.subplot(1, 5, i + 1)

plot_image(weights1_val.T[i])

plt.show()

- 运行结果:

- 第一层隐藏层为300个神经元,权重为[28*28, 300]。

- 图中为可视化前五个神经元。

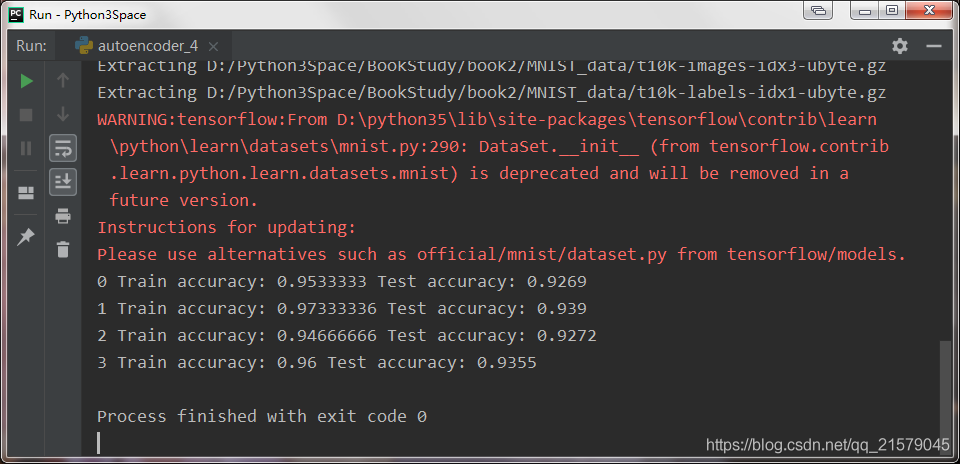

使用堆叠的自动编码器进行无监督的预训练

- 参考链接:Numpy.random中shuffle与permutation的区别

- 函数shuffle与permutation都是对原来的数组进行重新洗牌(即随机打乱原来的元素顺序);区别在于shuffle直接在原来的数组上进行操作,改变原来数组的顺序,无返回值。而permutation不直接在原来的数组上进行操作,而是返回一个新的打乱顺序的数组,并不改变原来的数组。

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

# coding=utf-8

"""

@author: Li Tian

@contact: 694317828@qq.com

@software: pycharm

@file: autoencoder_4.py

@time: 2019/6/23 16:50

@desc: 使用堆叠的自动编码器进行无监督的预训练

"""

import tensorflow as tf

from tensorflow.contrib.layers import fully_connected

from tensorflow.examples.tutorials.mnist import input_data

import matplotlib.pyplot as plt

import sys

import numpy.random as rnd

n_inputs = 28 * 28

n_hidden1 = 300

n_hidden2 = 150

n_outputs = 10

learning_rate = 0.01

l2_reg = 0.0005

activation = tf.nn.elu

regularizer = tf.contrib.layers.l2_regularizer(l2_reg)

initializer = tf.contrib.layers.variance_scaling_initializer()

X = tf.placeholder(tf.float32, shape=[None, n_inputs])

y = tf.placeholder(tf.int32, shape=[None])

weights1_init = initializer([n_inputs, n_hidden1])

weights2_init = initializer([n_hidden1, n_hidden2])

weights3_init = initializer([n_hidden2, n_outputs])

weights1 = tf.Variable(weights1_init, dtype=tf.float32, name="weights1")

weights2 = tf.Variable(weights2_init, dtype=tf.float32, name="weights2")

weights3 = tf.Variable(weights3_init, dtype=tf.float32, name="weights3")

biases1 = tf.Variable(tf.zeros(n_hidden1), name="biases1")

biases2 = tf.Variable(tf.zeros(n_hidden2), name="biases2")

biases3 = tf.Variable(tf.zeros(n_outputs), name="biases3")

hidden1 = activation(tf.matmul(X, weights1) + biases1)

hidden2 = activation(tf.matmul(hidden1, weights2) + biases2)

logits = tf.matmul(hidden2, weights3) + biases3

cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(labels=y, logits=logits)

reg_loss = regularizer(weights1) + regularizer(weights2) + regularizer(weights3)

loss = cross_entropy + reg_loss

optimizer = tf.train.AdamOptimizer(learning_rate)

training_op = optimizer.minimize(loss)

correct = tf.nn.in_top_k(logits, y, 1)

accuracy = tf.reduce_mean(tf.cast(correct, tf.float32))

init = tf.global_variables_initializer()

pretrain_saver = tf.train.Saver([weights1, weights2, biases1, biases2])

saver = tf.train.Saver()

n_epochs = 4

batch_size = 150

n_labeled_instances = 20000

with tf.Session() as sess:

mnist = input_data.read_data_sets('D:/Python3Space/BookStudy/book2/MNIST_data/')

init.run()

for epoch in range(n_epochs):

n_batches = n_labeled_instances // batch_size

for iteration in range(n_batches):

print("

{}%".format(100 * iteration // n_batches), end="")

sys.stdout.flush()

indices = rnd.permutation(n_labeled_instances)[:batch_size]

X_batch, y_batch = mnist.train.images[indices], mnist.train.labels[indices]

sess.run(training_op, feed_dict={X: X_batch, y: y_batch})

accuracy_val = accuracy.eval(feed_dict={X: X_batch, y: y_batch})

print("

{}".format(epoch), "Train accuracy:", accuracy_val, end=" ")

saver.save(sess, "D:/Python3Space/BookStudy/book4/model/my_model_supervised.ckpt")

accuracy_val = accuracy.eval(feed_dict={X: mnist.test.images, y: mnist.test.labels})

print("Test accuracy:", accuracy_val)

去噪自动编码器

- 另一种强制自动编码器学习有用特征的方法是在输入中增加噪音,训练它以恢复原始的无噪音输入。这种方法阻止了自动编码器简单的复制其输入到输出,最终必须找到数据中的模式。

- 自动编码器可以用于特征提取。

- 这里要用

tf.shape(X)来获取图片的size,它创建了一个在运行时返回该点完全定义的X向量的操作;而不能用X.get_shape(),因为这将只返回部分定义的X([None], n_inputs)向量。

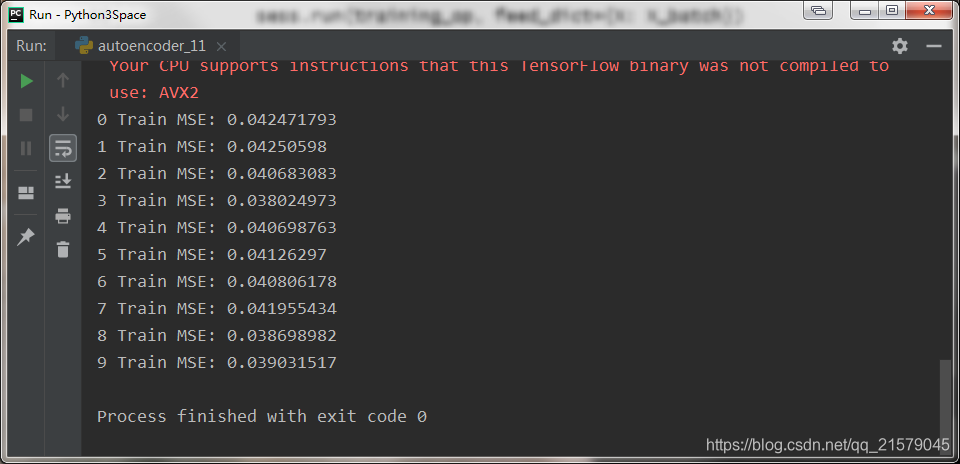

使用高斯噪音

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

# coding=utf-8

"""

@author: Li Tian

@contact: 694317828@qq.com

@software: pycharm

@file: autoencoder_11.py

@time: 2019/7/2 10:02

@desc: 去噪自动编码器:使用高斯噪音

"""

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

import sys

n_inputs = 28 * 28

n_hidden1 = 300

n_hidden2 = 150 # codings

n_hidden3 = n_hidden1

n_outputs = n_inputs

learning_rate = 0.01

noise_level = 1.0

X = tf.placeholder(tf.float32, shape=[None, n_inputs])

X_noisy = X + noise_level * tf.random_normal(tf.shape(X))

hidden1 = tf.layers.dense(X_noisy, n_hidden1, activation=tf.nn.relu,

name="hidden1")

hidden2 = tf.layers.dense(hidden1, n_hidden2, activation=tf.nn.relu, # not shown in the book

name="hidden2") # not shown

hidden3 = tf.layers.dense(hidden2, n_hidden3, activation=tf.nn.relu, # not shown

name="hidden3") # not shown

outputs = tf.layers.dense(hidden3, n_outputs, name="outputs") # not shown

reconstruction_loss = tf.reduce_mean(tf.square(outputs - X)) # MSE

optimizer = tf.train.AdamOptimizer(learning_rate)

training_op = optimizer.minimize(reconstruction_loss)

init = tf.global_variables_initializer()

saver = tf.train.Saver()

n_epochs = 10

batch_size = 150

mnist = input_data.read_data_sets('D:/Python3Space/BookStudy/book2/MNIST_data/')

with tf.Session() as sess:

init.run()

for epoch in range(n_epochs):

n_batches = mnist.train.num_examples // batch_size

for iteration in range(n_batches):

print("

{}%".format(100 * iteration // n_batches), end="")

sys.stdout.flush()

X_batch, y_batch = mnist.train.next_batch(batch_size)

sess.run(training_op, feed_dict={X: X_batch})

loss_train = reconstruction_loss.eval(feed_dict={X: X_batch})

print("

{}".format(epoch), "Train MSE:", loss_train)

saver.save(sess, "D:/Python3Space/BookStudy/book4/model/my_model_stacked_denoising_gaussian.ckpt")

- 运行结果:

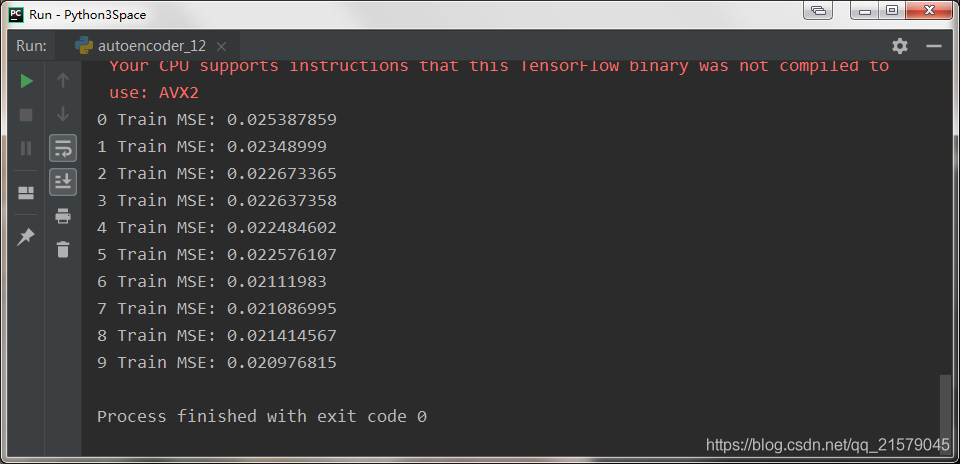

使用dropout

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

# coding=utf-8

"""

@author: Li Tian

@contact: 694317828@qq.com

@software: pycharm

@file: autoencoder_12.py

@time: 2019/7/2 10:11

@desc: 去噪自动编码器:使用dropout

"""

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

import sys

n_inputs = 28 * 28

n_hidden1 = 300

n_hidden2 = 150 # codings

n_hidden3 = n_hidden1

n_outputs = n_inputs

learning_rate = 0.01

dropout_rate = 0.3

training = tf.placeholder_with_default(False, shape=(), name='training')

X = tf.placeholder(tf.float32, shape=[None, n_inputs])

X_drop = tf.layers.dropout(X, dropout_rate, training=training)

hidden1 = tf.layers.dense(X_drop, n_hidden1, activation=tf.nn.relu,

name="hidden1")

hidden2 = tf.layers.dense(hidden1, n_hidden2, activation=tf.nn.relu, # not shown in the book

name="hidden2") # not shown

hidden3 = tf.layers.dense(hidden2, n_hidden3, activation=tf.nn.relu, # not shown

name="hidden3") # not shown

outputs = tf.layers.dense(hidden3, n_outputs, name="outputs") # not shown

reconstruction_loss = tf.reduce_mean(tf.square(outputs - X)) # MSE

optimizer = tf.train.AdamOptimizer(learning_rate)

training_op = optimizer.minimize(reconstruction_loss)

init = tf.global_variables_initializer()

saver = tf.train.Saver()

n_epochs = 10

batch_size = 150

mnist = input_data.read_data_sets('D:/Python3Space/BookStudy/book2/MNIST_data/')

with tf.Session() as sess:

init.run()

for epoch in range(n_epochs):

n_batches = mnist.train.num_examples // batch_size

for iteration in range(n_batches):

print("

{}%".format(100 * iteration // n_batches), end="")

sys.stdout.flush()

X_batch, y_batch = mnist.train.next_batch(batch_size)

sess.run(training_op, feed_dict={X: X_batch, training: True})

loss_train = reconstruction_loss.eval(feed_dict={X: X_batch})

print("

{}".format(epoch), "Train MSE:", loss_train)

saver.save(sess, "D:/Python3Space/BookStudy/book4/model/my_model_stacked_denoising_dropout.ckpt")

- 运行结果:

- 在测试期间没有必要设置

is_training为False,因为调用placeholder_with_default()函数时我们设置其为默认值。

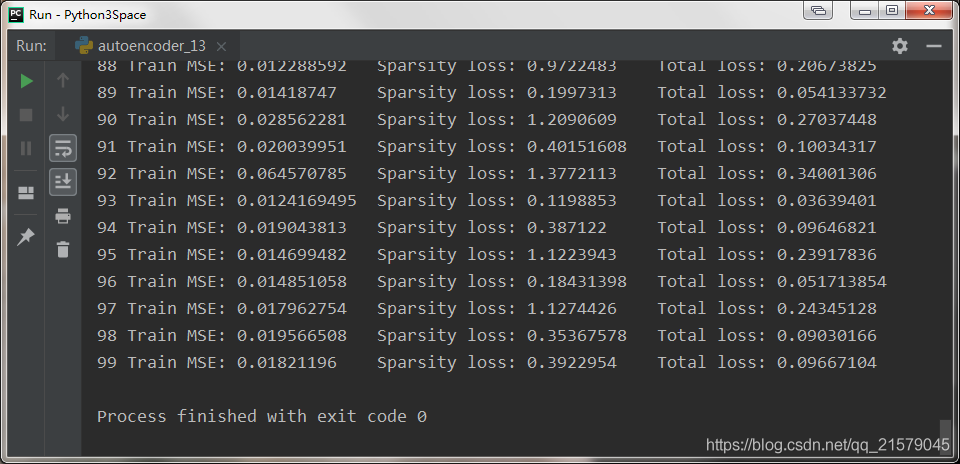

稀疏自动编码器

- 通常良好特征提取的另一种约束是稀疏性:通过向损失函数添加适当的项,自编码器被推动以减少编码层中活动神经元的数量。

- 一种方法可以简单地将平方误差添加到损失函数中,但实际上更好的方法是使用 Kullback-Leibler 散度(相对熵),其具有比均方误差更强的梯度。它表示2个函数或概率分布的差异性:差异越大则相对熵越大,差异越小则相对熵越小,特别地,若2者相同则熵为0。注意,KL散度的非对称性。

- 一旦我们已经计算了编码层中每个神经元的稀疏损失,我们就总结这些损失,并将结果添加到损失函数中。 为了控制稀疏损失和重构损失的相对重要性,我们可以用稀疏权重超参数乘以稀疏损失。 如果这个权重太高,模型会紧贴目标稀疏度,但它可能无法正确重建输入,导致模型无用。 相反,如果它太低,模型将大多忽略稀疏目标,它不会学习任何有用的功能。

- 参考链接:KL散度(相对熵)、交叉熵的解析

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

# coding=utf-8

"""

@author: Li Tian

@contact: 694317828@qq.com

@software: pycharm

@file: autoencoder_13.py

@time: 2019/7/2 10:32

@desc: 稀疏自动编码器

"""

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

import sys

n_inputs = 28 * 28

n_hidden1 = 1000 # sparse codings

n_outputs = n_inputs

def kl_divergence(p, q):

# Kullback Leibler divergence

return p * tf.log(p / q) + (1 - p) * tf.log((1 - p) / (1 - q))

learning_rate = 0.01

sparsity_target = 0.1

sparsity_weight = 0.2

X = tf.placeholder(tf.float32, shape=[None, n_inputs]) # not shown in the book

hidden1 = tf.layers.dense(X, n_hidden1, activation=tf.nn.sigmoid) # not shown

outputs = tf.layers.dense(hidden1, n_outputs) # not shown

hidden1_mean = tf.reduce_mean(hidden1, axis=0) # batch mean

sparsity_loss = tf.reduce_sum(kl_divergence(sparsity_target, hidden1_mean))

reconstruction_loss = tf.reduce_mean(tf.square(outputs - X)) # MSE

loss = reconstruction_loss + sparsity_weight * sparsity_loss

optimizer = tf.train.AdamOptimizer(learning_rate)

training_op = optimizer.minimize(loss)

init = tf.global_variables_initializer()

saver = tf.train.Saver()

# 训练过程

n_epochs = 100

batch_size = 1000

mnist = input_data.read_data_sets('D:/Python3Space/BookStudy/book2/MNIST_data/')

with tf.Session() as sess:

init.run()

for epoch in range(n_epochs):

n_batches = mnist.train.num_examples // batch_size

for iteration in range(n_batches):

print("

{}%".format(100 * iteration // n_batches), end="")

sys.stdout.flush()

X_batch, y_batch = mnist.train.next_batch(batch_size)

sess.run(training_op, feed_dict={X: X_batch})

reconstruction_loss_val, sparsity_loss_val, loss_val = sess.run([reconstruction_loss, sparsity_loss, loss], feed_dict={X: X_batch})

print("

{}".format(epoch), "Train MSE:", reconstruction_loss_val, " Sparsity loss:", sparsity_loss_val, " Total loss:", loss_val)

saver.save(sess, "D:/Python3Space/BookStudy/book4/model/my_model_sparse.ckpt")

- 运行结果:

- 一个重要的细节是,编码器激活度的值是在0-1之间(但是不等于0或1),否则KL散度将返回NaN。一个简单的解决方案是为编码层使用逻辑激活函数:

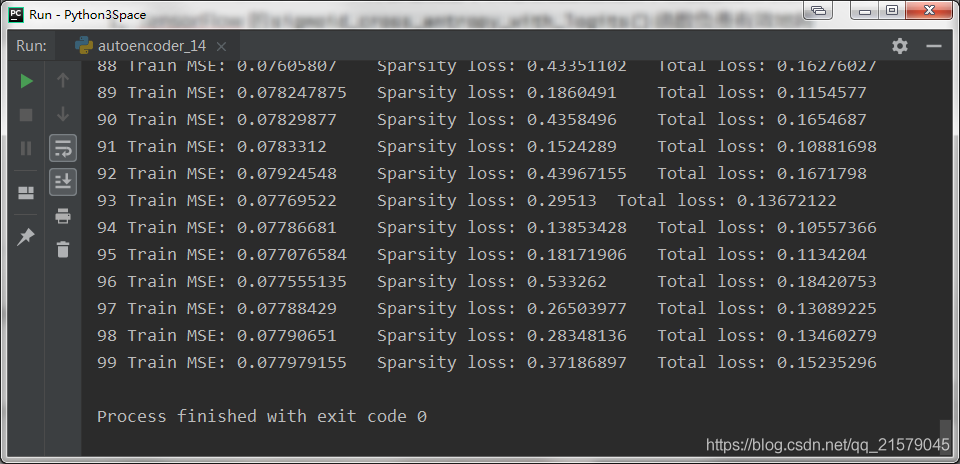

hidden1 = tf.layers.dense(X, n_hidden1, activation=tf.nn.sigmoid) - 一个简单的技巧可以加速收敛:不是使用 MSE,我们可以选择一个具有较大梯度的重建损失。 交叉熵通常是一个不错的选择。 要使用它,我们必须对输入进行规范化处理,使它们的取值范围为 0 到 1,并在输出层中使用逻辑激活函数,以便输出也取值为 0 到 1。TensorFlow 的

sigmoid_cross_entropy_with_logits()函数负责有效地将logistic(sigmoid)激活函数应用于输出并计算交叉熵。

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

# coding=utf-8

"""

@author: Li Tian

@contact: 694317828@qq.com

@software: pycharm

@file: autoencoder_14.py

@time: 2019/7/2 10:53

@desc: 稀疏自动编码器:加速收敛方法

"""

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

import sys

n_inputs = 28 * 28

n_hidden1 = 1000 # sparse codings

n_outputs = n_inputs

def kl_divergence(p, q):

# Kullback Leibler divergence

return p * tf.log(p / q) + (1 - p) * tf.log((1 - p) / (1 - q))

learning_rate = 0.01

sparsity_target = 0.1

sparsity_weight = 0.2

X = tf.placeholder(tf.float32, shape=[None, n_inputs]) # not shown in the book

hidden1 = tf.layers.dense(X, n_hidden1, activation=tf.nn.sigmoid) # not shown

logits = tf.layers.dense(hidden1, n_outputs) # not shown

outputs = tf.nn.sigmoid(logits)

hidden1_mean = tf.reduce_mean(hidden1, axis=0) # batch mean

sparsity_loss = tf.reduce_sum(kl_divergence(sparsity_target, hidden1_mean))

xentropy = tf.nn.sigmoid_cross_entropy_with_logits(labels=X, logits=logits)

reconstruction_loss = tf.reduce_mean(xentropy)

loss = reconstruction_loss + sparsity_weight * sparsity_loss

optimizer = tf.train.AdamOptimizer(learning_rate)

training_op = optimizer.minimize(loss)

init = tf.global_variables_initializer()

saver = tf.train.Saver()

# 训练过程

n_epochs = 100

batch_size = 1000

mnist = input_data.read_data_sets('D:/Python3Space/BookStudy/book2/MNIST_data/')

with tf.Session() as sess:

init.run()

for epoch in range(n_epochs):

n_batches = mnist.train.num_examples // batch_size

for iteration in range(n_batches):

print("

{}%".format(100 * iteration // n_batches), end="")

sys.stdout.flush()

X_batch, y_batch = mnist.train.next_batch(batch_size)

sess.run(training_op, feed_dict={X: X_batch})

reconstruction_loss_val, sparsity_loss_val, loss_val = sess.run([reconstruction_loss, sparsity_loss, loss], feed_dict={X: X_batch})

print("

{}".format(epoch), "Train MSE:", reconstruction_loss_val, " Sparsity loss:", sparsity_loss_val, " Total loss:", loss_val)

saver.save(sess, "D:/Python3Space/BookStudy/book4/model/my_model_sparse_speedup.ckpt")

- 运行结果:

变分自动编码器

它和前面所讨论的所有编码器都不同,特别之处在于:

- 它们是概率自动编码器,这就意味着即使经过训练,其输出也部分程度上决定于运气(不同于仅在训练期间使用随机性的去噪自动编码器)。

- 更重要的是,它们是生成自动编码器,意味着它们可以生成看起来像是从训练样本采样的新实例。

- 参考文献: 变分自编码器(一):原来是这么一回事

- VAE的名字中“变分”,是因为它的推导过程用到了KL散度及其性质。

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

# coding=utf-8

"""

@author: Li Tian

@contact: 694317828@qq.com

@software: pycharm

@file: autoencoder_15.py

@time: 2019/7/3 9:42

@desc: 变分自动编码器

"""

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

import sys

from functools import partial

n_inputs = 28 * 28

n_hidden1 = 500

n_hidden2 = 500

n_hidden3 = 20 # codings

n_hidden4 = n_hidden2

n_hidden5 = n_hidden1

n_outputs = n_inputs

learning_rate = 0.001

initializer = tf.contrib.layers.variance_scaling_initializer()

my_dense_layer = partial(

tf.layers.dense,

activation=tf.nn.elu,

kernel_initializer=initializer)

X = tf.placeholder(tf.float32, [None, n_inputs])

hidden1 = my_dense_layer(X, n_hidden1)

hidden2 = my_dense_layer(hidden1, n_hidden2)

hidden3_mean = my_dense_layer(hidden2, n_hidden3, activation=None)

hidden3_sigma = my_dense_layer(hidden2, n_hidden3, activation=None)

hidden3_gamma = my_dense_layer(hidden2, n_hidden3, activation=None)

noise = tf.random_normal(tf.shape(hidden3_sigma), dtype=tf.float32)

hidden3 = hidden3_mean + hidden3_sigma * noise

hidden4 = my_dense_layer(hidden3, n_hidden4)

hidden5 = my_dense_layer(hidden4, n_hidden5)

logits = my_dense_layer(hidden5, n_outputs, activation=None)

outputs = tf.sigmoid(logits)

xentropy = tf.nn.sigmoid_cross_entropy_with_logits(labels=X, logits=logits)

reconstruction_loss = tf.reduce_sum(xentropy)

eps = 1e-10 # smoothing term to avoid computing log(0) which is NaN

latent_loss = 0.5 * tf.reduce_sum(

tf.square(hidden3_sigma) + tf.square(hidden3_mean)

- 1 - tf.log(eps + tf.square(hidden3_sigma)))

# 一个常见的变体

# latent_loss = 0.5 * tf.reduce_sum(

# tf.exp(hidden3_gamma) + tf.square(hidden3_mean) - 1 - hidden3_gamma)

loss = reconstruction_loss + latent_loss

optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)

training_op = optimizer.minimize(loss)

init = tf.global_variables_initializer()

saver = tf.train.Saver()

n_epochs = 50

batch_size = 150

mnist = input_data.read_data_sets('D:/Python3Space/BookStudy/book2/MNIST_data/')

with tf.Session() as sess:

init.run()

for epoch in range(n_epochs):

n_batches = mnist.train.num_examples // batch_size

for iteration in range(n_batches):

print("

{}%".format(100 * iteration // n_batches), end="")

sys.stdout.flush()

X_batch, y_batch = mnist.train.next_batch(batch_size)

sess.run(training_op, feed_dict={X: X_batch})

loss_val, reconstruction_loss_val, latent_loss_val = sess.run([loss, reconstruction_loss, latent_loss], feed_dict={X: X_batch})

print("

{}".format(epoch), "Train total loss:", loss_val, " Reconstruction loss:", reconstruction_loss_val, " Latent loss:", latent_loss_val)

saver.save(sess, "D:/Python3Space/BookStudy/book4/model/my_model_variational.ckpt")

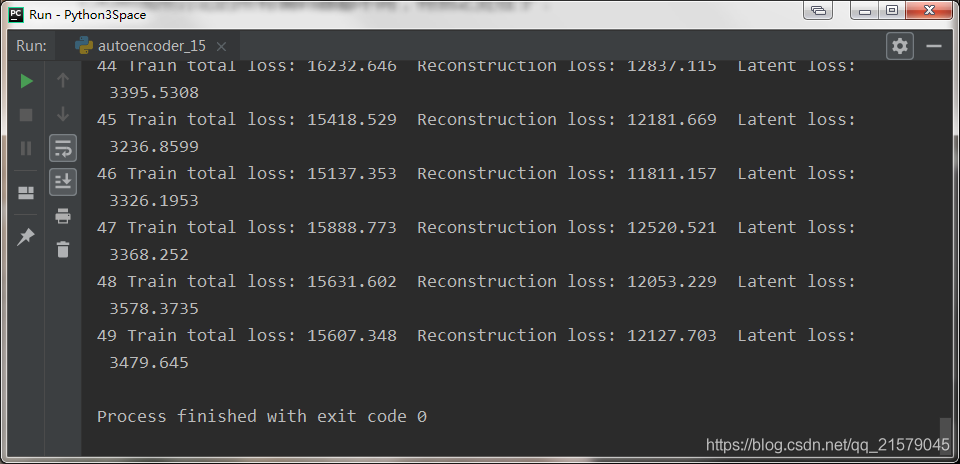

- 运行结果

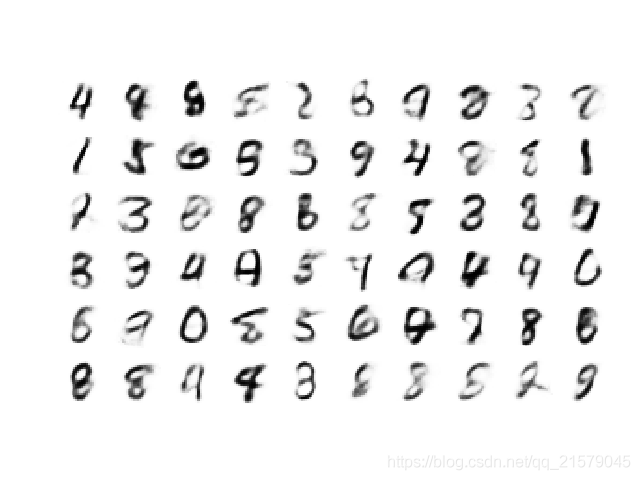

生成数字

- 用上述变分自动编码器生成一些看起来像手写数字的图片。我们需要做的是训练模型,然后从高斯分布中随机采样编码并对其进行解码。

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

# coding=utf-8

"""

@author: Li Tian

@contact: 694317828@qq.com

@software: pycharm

@file: autoencoder_16.py

@time: 2019/7/3 10:12

@desc: 生成数字

"""

import numpy as np

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

import sys

from functools import partial

import matplotlib.pyplot as plt

n_inputs = 28 * 28

n_hidden1 = 500

n_hidden2 = 500

n_hidden3 = 20 # codings

n_hidden4 = n_hidden2

n_hidden5 = n_hidden1

n_outputs = n_inputs

learning_rate = 0.001

initializer = tf.contrib.layers.variance_scaling_initializer()

my_dense_layer = partial(

tf.layers.dense,

activation=tf.nn.elu,

kernel_initializer=initializer)

X = tf.placeholder(tf.float32, [None, n_inputs])

hidden1 = my_dense_layer(X, n_hidden1)

hidden2 = my_dense_layer(hidden1, n_hidden2)

hidden3_mean = my_dense_layer(hidden2, n_hidden3, activation=None)

hidden3_sigma = my_dense_layer(hidden2, n_hidden3, activation=None)

hidden3_gamma = my_dense_layer(hidden2, n_hidden3, activation=None)

noise = tf.random_normal(tf.shape(hidden3_sigma), dtype=tf.float32)

hidden3 = hidden3_mean + hidden3_sigma * noise

hidden4 = my_dense_layer(hidden3, n_hidden4)

hidden5 = my_dense_layer(hidden4, n_hidden5)

logits = my_dense_layer(hidden5, n_outputs, activation=None)

outputs = tf.sigmoid(logits)

xentropy = tf.nn.sigmoid_cross_entropy_with_logits(labels=X, logits=logits)

reconstruction_loss = tf.reduce_sum(xentropy)

eps = 1e-10 # smoothing term to avoid computing log(0) which is NaN

latent_loss = 0.5 * tf.reduce_sum(

tf.square(hidden3_sigma) + tf.square(hidden3_mean)

- 1 - tf.log(eps + tf.square(hidden3_sigma)))

# 一个常见的变体

# latent_loss = 0.5 * tf.reduce_sum(

# tf.exp(hidden3_gamma) + tf.square(hidden3_mean) - 1 - hidden3_gamma)

loss = reconstruction_loss + latent_loss

optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)

training_op = optimizer.minimize(loss)

init = tf.global_variables_initializer()

saver = tf.train.Saver()

n_digits = 60

n_epochs = 50

batch_size = 150

mnist = input_data.read_data_sets('D:/Python3Space/BookStudy/book2/MNIST_data/')

with tf.Session() as sess:

init.run()

for epoch in range(n_epochs):

n_batches = mnist.train.num_examples // batch_size

for iteration in range(n_batches):

print("

{}%".format(100 * iteration // n_batches), end="") # not shown in the book

sys.stdout.flush() # not shown

X_batch, y_batch = mnist.train.next_batch(batch_size)

sess.run(training_op, feed_dict={X: X_batch})

loss_val, reconstruction_loss_val, latent_loss_val = sess.run([loss, reconstruction_loss, latent_loss],

feed_dict={X: X_batch}) # not shown

print("

{}".format(epoch), "Train total loss:", loss_val, " Reconstruction loss:", reconstruction_loss_val,

" Latent loss:", latent_loss_val) # not shown

saver.save(sess, "D:/Python3Space/BookStudy/book4/model/my_model_variational2.ckpt") # not shown

codings_rnd = np.random.normal(size=[n_digits, n_hidden3])

outputs_val = outputs.eval(feed_dict={hidden3: codings_rnd})

def plot_image(image, shape=[28, 28]):

plt.imshow(image.reshape(shape), cmap="Greys", interpolation="nearest")

plt.axis("off")

def plot_multiple_images(images, n_rows, n_cols, pad=2):

images = images - images.min() # make the minimum == 0, so the padding looks white

w,h = images.shape[1:]

image = np.zeros(((w+pad)*n_rows+pad, (h+pad)*n_cols+pad))

for y in range(n_rows):

for x in range(n_cols):

image[(y*(h+pad)+pad):(y*(h+pad)+pad+h),(x*(w+pad)+pad):(x*(w+pad)+pad+w)] = images[y*n_cols+x]

plt.imshow(image, cmap="Greys", interpolation="nearest")

plt.axis("off")

plt.figure(figsize=(8,50)) # not shown in the book

for iteration in range(n_digits):

plt.subplot(n_digits, 10, iteration + 1)

plot_image(outputs_val[iteration])

plt.show()

n_rows = 6

n_cols = 10

plot_multiple_images(outputs_val.reshape(-1, 28, 28), n_rows, n_cols)

plt.show()

- 运行结果:

其他自动编码器

- 收缩自动编码器(CAE):该自动编码器在训练期间加入限制,使得关于输入编码的衍生物比较小。换句话说,相似的输入会得到相似的编码。

- 栈式卷积自动编码器:该自动编码器通过卷积层重构图像来学习提取视觉特征。

- 随机生成网络(GSN):去噪自动编码器的推广,增加了生成数据的能力。

- 获胜者(WTA)自动编码器:训练期间,在计算了编码层所有神经元的激活度之后,只保留训练批次中前k%的激活度,其余都置为0。自然的,这将导致稀疏编码。此外,类似的WTA方法可以用于产生稀疏卷积自动编码器。

- 对抗自动编码器:一个网络被训练来重现输入,同时另一个网络被训练来找到不能正确重建第一个网络的输入。这促使第一个自动编码器学习鲁棒编码。

部分课后题的摘抄

-

自动编码器的主要任务是什么?

- 特征提取

- 无监督的预训练

- 降低维度

- 生成模型

- 异常检测(自动编码器在重建异常点时通常表现得不太好)

-

加入想训练一个分类器,而且有大量未训练的数据,但是只有几千个已经标记的实例。自动编码器可以如何帮助你?你会如何实现?

首先可以在完整的数据集(已标记和未标记)上训练一个深度自动编码器,然后重用其下半部分作为分类器(即重用上半部分作为编码层)并使用已标记的数据训练分类器。如果已标记的数据集比较小,你可能希望在训练分类器时冻结复用层。

-

如果自动编码器可以完美的重现输入,它就是一个好的自动编码器吗?如何评估自动编码器的性能?

完美的重建并不能保证自动编码器学习到有用的东西。然而,如果产生非常差的重建,它几乎必然是一个糟糕的自动编码器。

为了评估编码器的性能,一种方法是测量重建损失(例如,计算MSE,用输入的均方值减去输入)。同样,重建损失高意味着这是一个不好的编码器,但是重建损失低并不能保证这是一个好的自动编码器。你应该根据它的用途来评估自动编码器。例如,如果它用于无监督分类器的预训练,同样应该评估分类器的性能。

-

什么是不完整和完整自动编码器?不啊完整自动编码器的主要风险是什么?完整的意义是什么?

不完整的自动编码器是编码层比输入和输出层小的自动编码器。如果比其大,那就是一个完整的自动编码器。一个过度不完整的自动编码器的主要风险是:不能重建其输入。完整的自动编码器的主要风险是:它可能只是将输入复制到输出,不学习任何有用的特征。

-

如何在栈式自动编码器上绑定权重?为什么要这样做?

要将自动编码器的权重与其相应的解码层相关联,你可以简单的使得解码权重等于编码权重的转置。这将使得模型参数数量减少一半,通常使得训练在数据较少时快速收敛,减少过度拟合的危险。

-

栈式自动编码器低层学习可视化特征的常用技术是什么?高层又是什么?

为了可视化栈式自动编码器低层学习到的特征,一个常用的技术是通过将每个权重向量重建为输入图像的大小来绘制每个神经元的权重(例如,对MNIST,将权重向量形状[784]重建为[28, 28])。为了可视化高层学习到的特征,一种技术是显示最能激活每个神经元的训练实例。

-

什么是生成模型?你能列举一种生成自动编码器吗?

生成模型是一种可以随机生成类似于训练实例的输出的模型。

例如,一旦在MNIST数据集上训练成功,生成模型就可以用于随机生成逼真的数字图像。输出分布大致和训练数据类似。例如,因为MNIST包含了每个数字的多个图像,生成模型可以输出与每个数字大致数量相同的图像。有些生成模型可以进行参数化,例如,生成某种类型的输出。生成自动编码器的一个例子是变分自动编码器。

我的CSDN:https://blog.csdn.net/qq_21579045

我的博客园:https://www.cnblogs.com/lyjun/

我的Github:https://github.com/TinyHandsome

纸上得来终觉浅,绝知此事要躬行~

欢迎大家过来OB~

by 李英俊小朋友