Coordinate Transformations and Visual Measurement

Give me a camera, and I can measure the world.

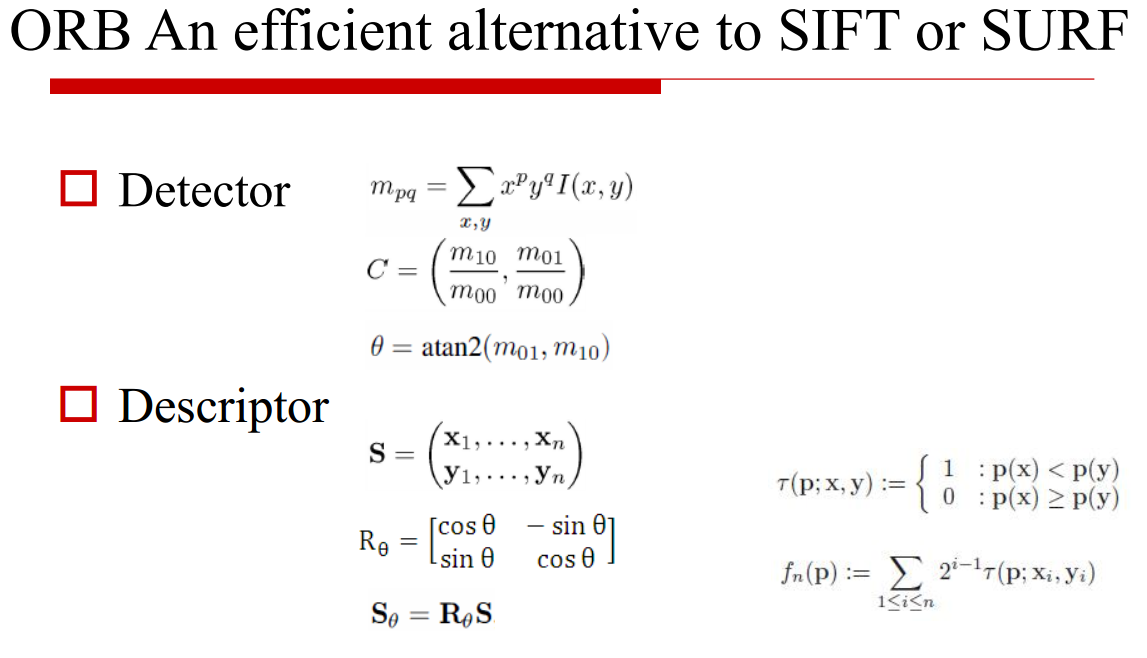

I. Feature point detection

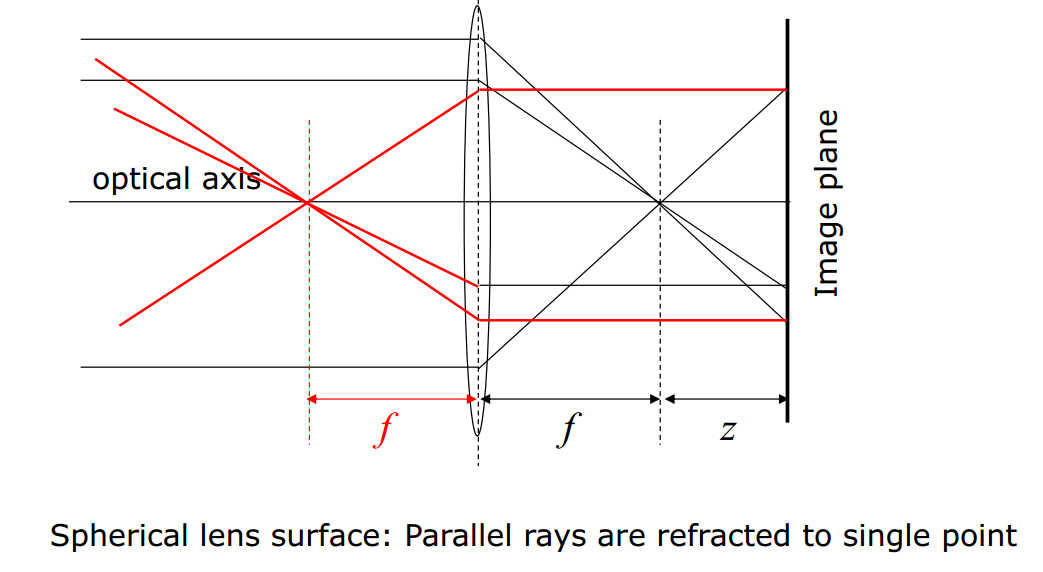

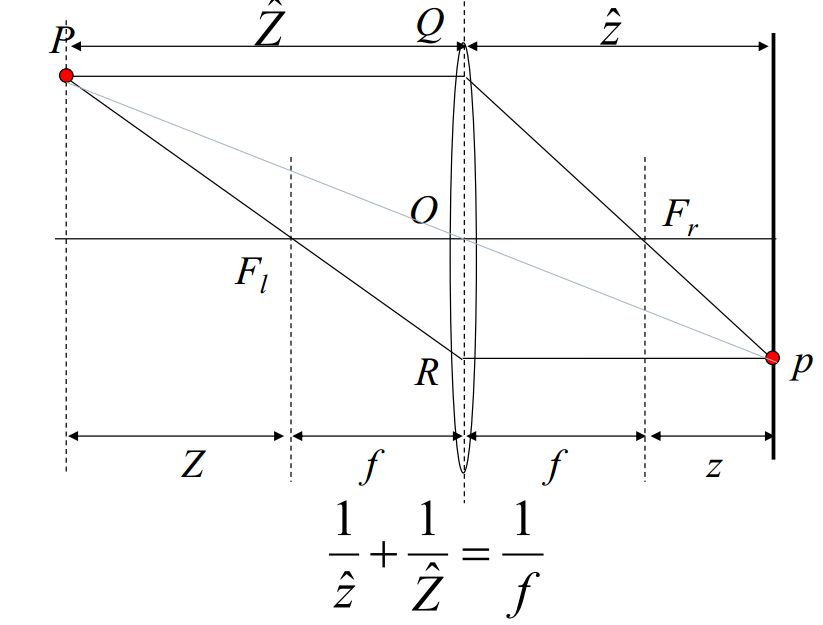

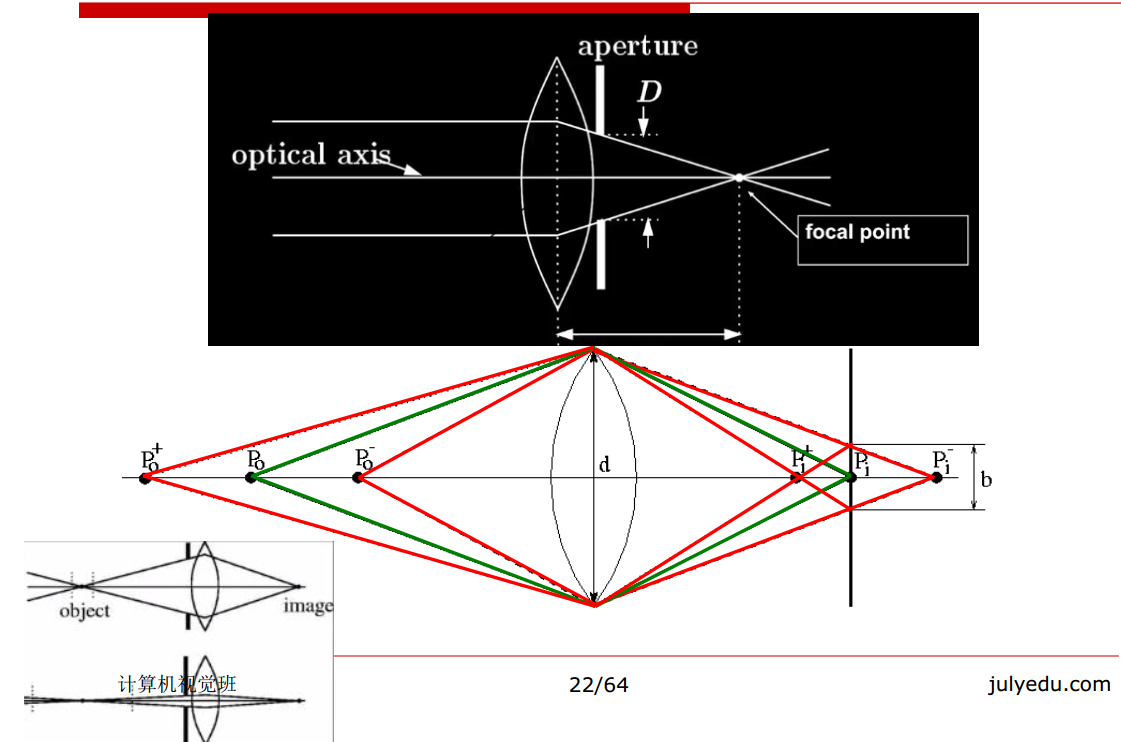

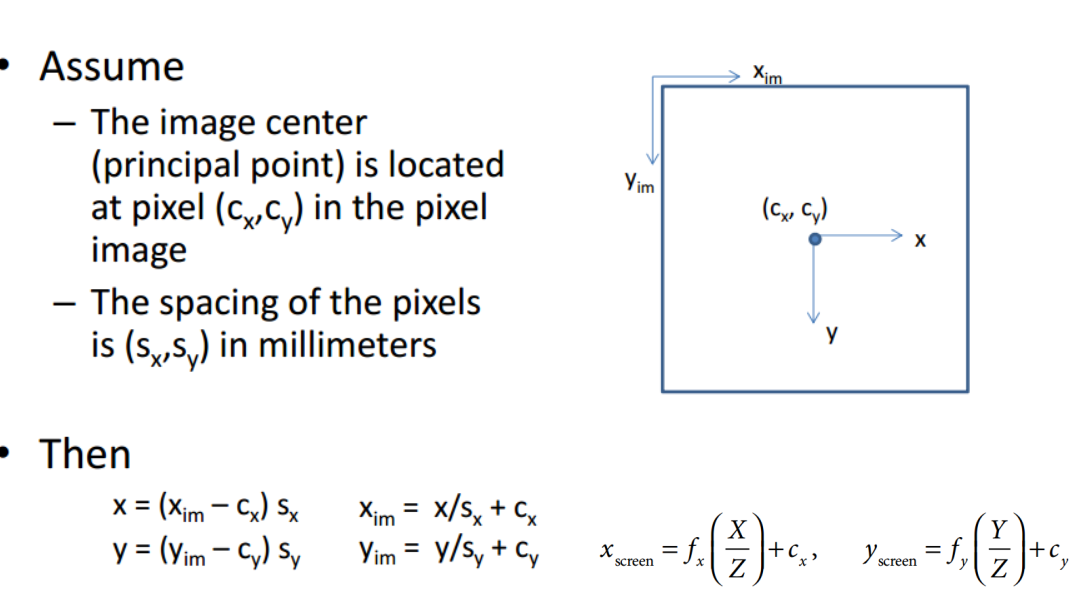

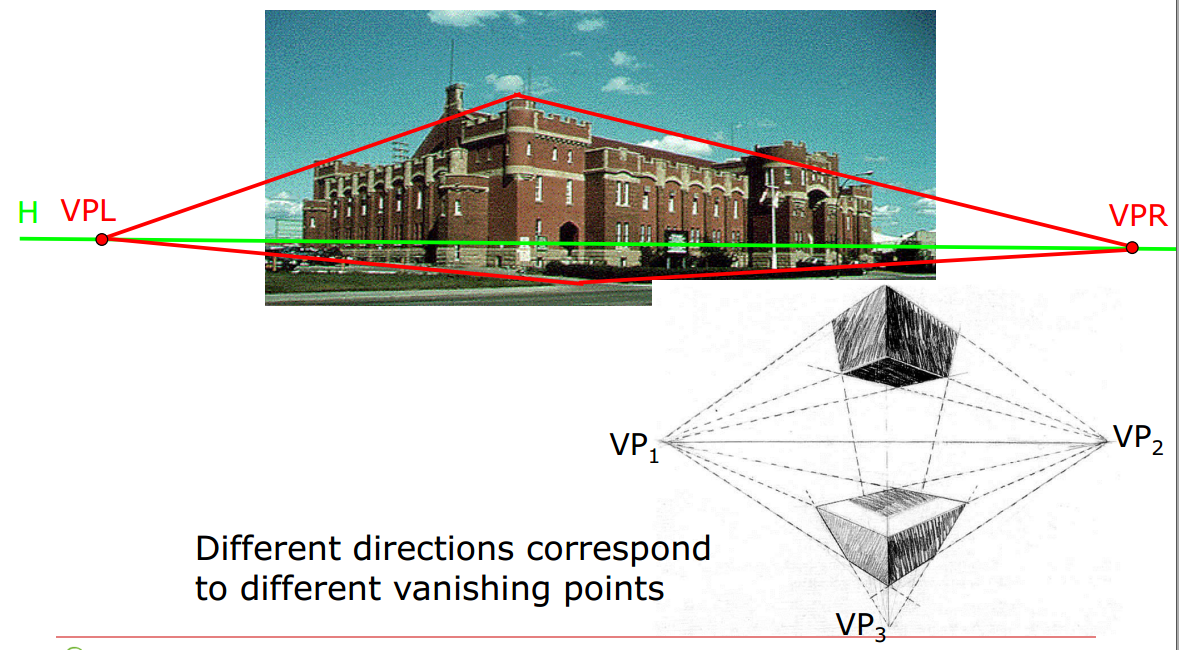

II. Image formation: the geometric camera model

Imaging principle:

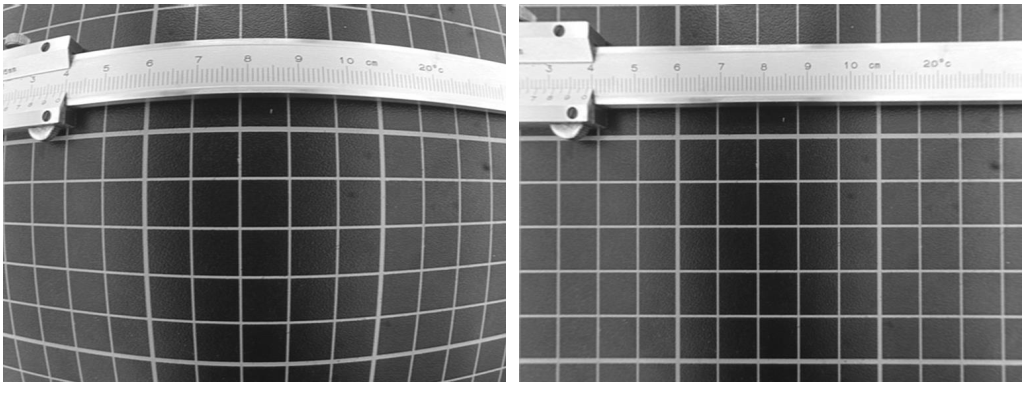
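In the ideal pinhole model, a point (X, Y, Z) in the camera coordinate system lands on pixel u = fx·X/Z + cx, v = fy·Y/Z + cy, where fx, fy are the focal lengths in pixels and (cx, cy) is the principal point. The sketch below is a minimal, distortion-free version of that formula in plain C++; `Intrinsics`, `Pixel` and `projectPinhole` are hypothetical names chosen for illustration, and the sample numbers are made up.

```cpp
#include <cassert>

// Pinhole intrinsics in pixels (hypothetical struct holding fx, fy, cx, cy).
struct Intrinsics {
    double fx, fy;  // focal lengths in pixels
    double cx, cy;  // principal point
};

struct Pixel {
    double u, v;
};

// Ideal (distortion-free) pinhole projection of a point given in the camera frame.
// Returns false for points on or behind the camera (Z <= 0); `out` is then untouched.
bool projectPinhole(const Intrinsics& K, double X, double Y, double Z, Pixel& out) {
    assert(K.fx > 0.0 && K.fy > 0.0);
    if (Z <= 0.0) return false;
    const double x = X / Z;  // normalized image coordinates
    const double y = Y / Z;
    out.u = K.fx * x + K.cx;
    out.v = K.fy * y + K.cy;
    return true;
}
```

Because only X/Z and Y/Z enter the result, a point twice as far away along the same ray lands on the same pixel, which is why a single image alone cannot recover depth.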

Problems this introduces (lens aberrations):

Red fringes along blue regions (chromatic aberration):

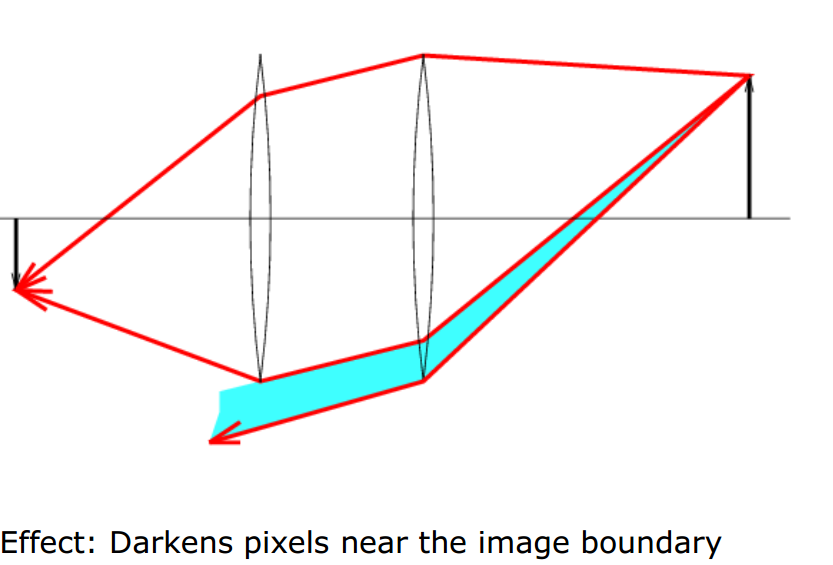

Darkened corners, i.e. vignetting (the "retro" or old-photo look):

Remedies: reduce the distortion, or spend money on a better lens.

III. Coordinate system transformations (2D-2D, 3D-3D)

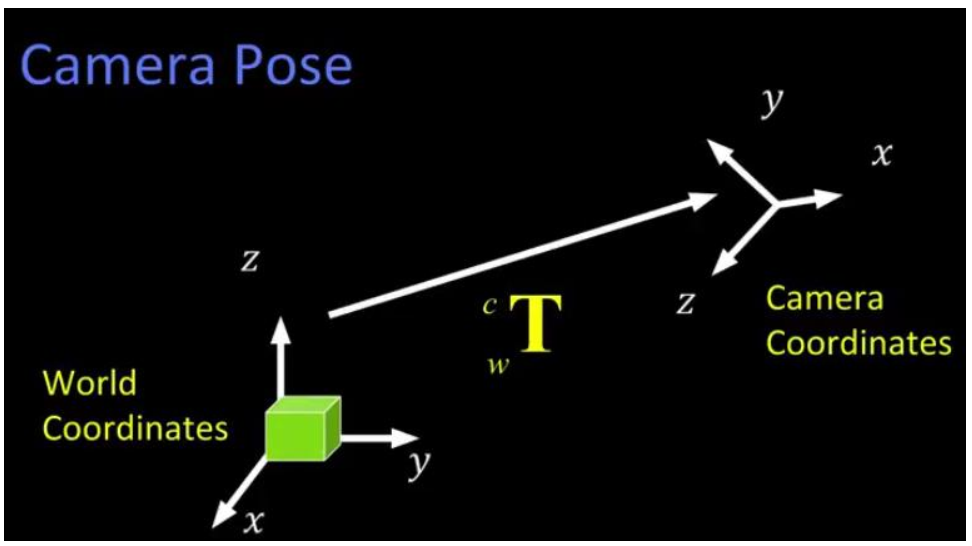

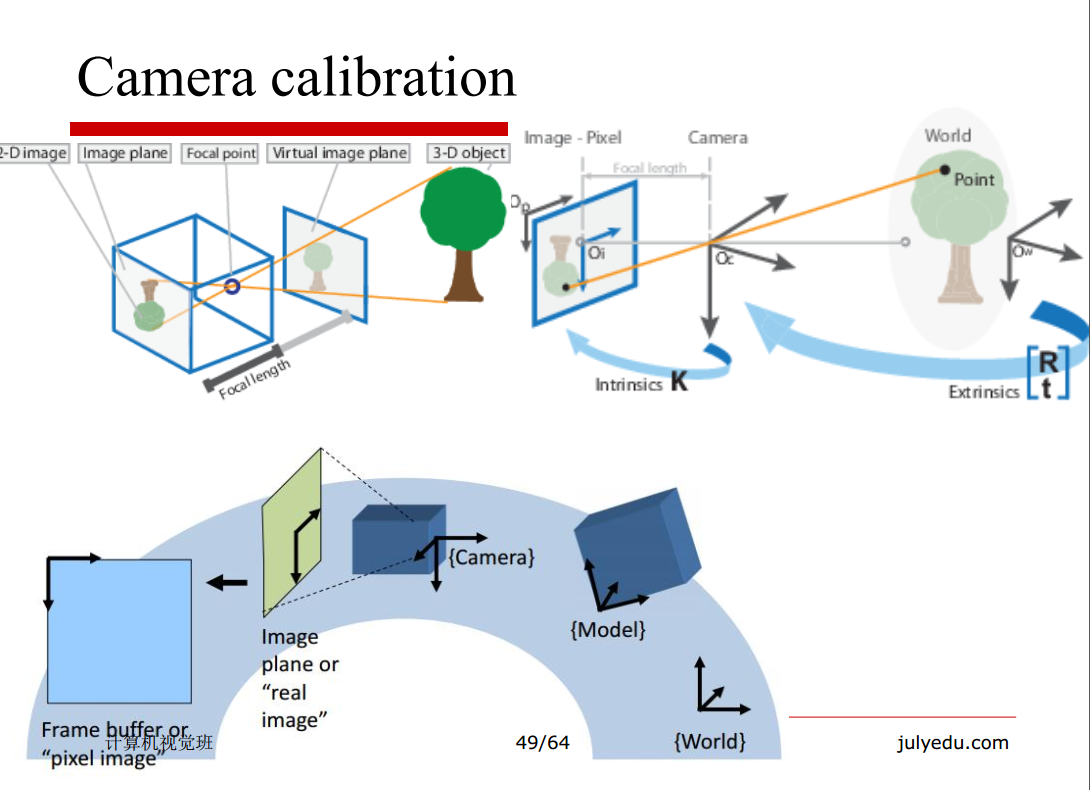

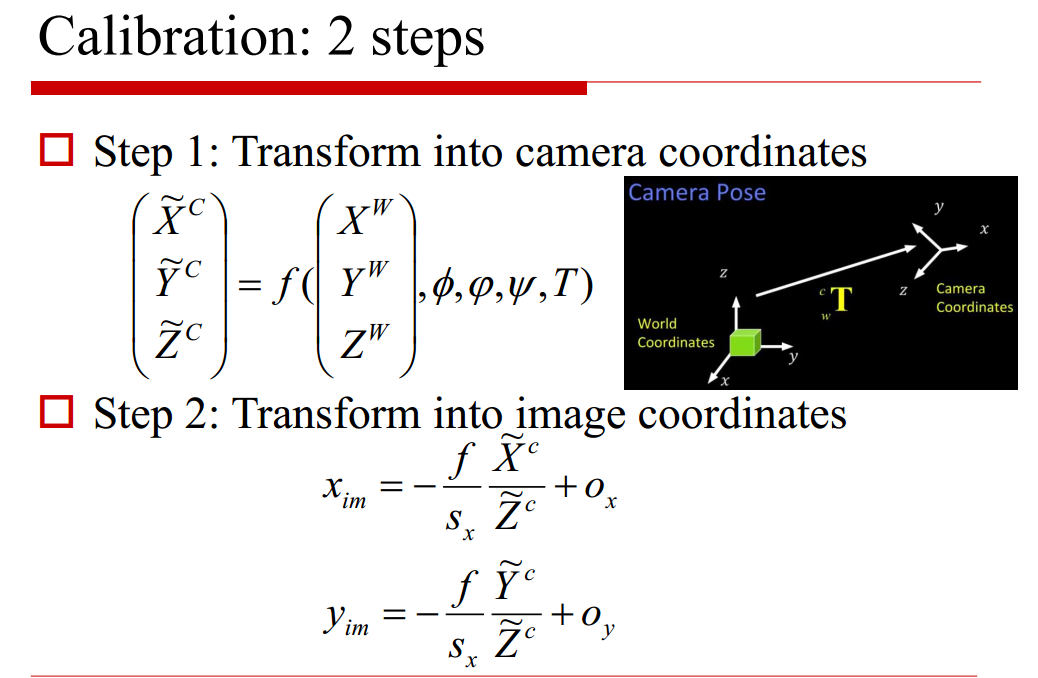
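For the 2D-2D case, homogeneous coordinates turn "rotate, then translate" into a single 3x3 matrix acting on (x, y, 1), and chaining several transforms becomes a matrix product. A minimal sketch in plain C++ (no OpenCV); `Mat3`, `rigid2D`, `compose` and `applyHomogeneous` are hypothetical names. The 3D-3D case works the same way with 4x4 matrices.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// 3x3 homogeneous matrix for 2D transforms (row-major).
using Mat3 = std::array<std::array<double, 3>, 3>;

// Rotation by `theta` radians about the origin, followed by translation (tx, ty).
Mat3 rigid2D(double theta, double tx, double ty) {
    const double c = std::cos(theta), s = std::sin(theta);
    return {{{c, -s, tx}, {s, c, ty}, {0.0, 0.0, 1.0}}};
}

// Matrix product A * B: applying the result equals applying B first, then A.
Mat3 compose(const Mat3& A, const Mat3& B) {
    Mat3 C{};  // zero-initialized
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k)
                C[i][j] += A[i][k] * B[k][j];
    return C;
}

// Maps (x, y) through H by lifting it to (x, y, 1) and dividing by the last coordinate.
// The division only matters for projective maps (homographies); for rigid transforms w == 1.
void applyHomogeneous(const Mat3& H, double x, double y, double& xo, double& yo) {
    const double xh = H[0][0] * x + H[0][1] * y + H[0][2];
    const double yh = H[1][0] * x + H[1][1] * y + H[1][2];
    const double w = H[2][0] * x + H[2][1] * y + H[2][2];
    assert(w != 0.0);
    xo = xh / w;
    yo = yh / w;
}
```

For example, rotating (1, 0) by 90° and then translating by (2, 3) gives (2, 4), up to floating-point rounding in cos(π/2).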

IV. Camera calibration

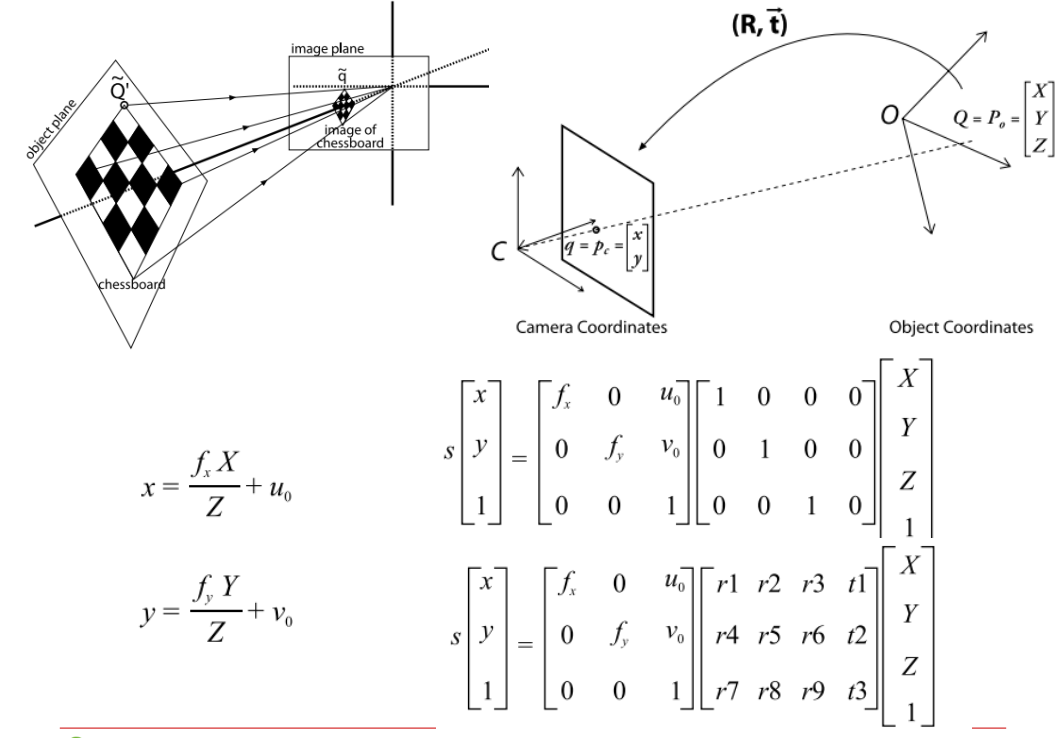

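Homogeneous coordinates: used to describe an object given in the world coordinate system in terms of the camera coordinate system. With them, a rotation plus a translation becomes a single matrix multiplication.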

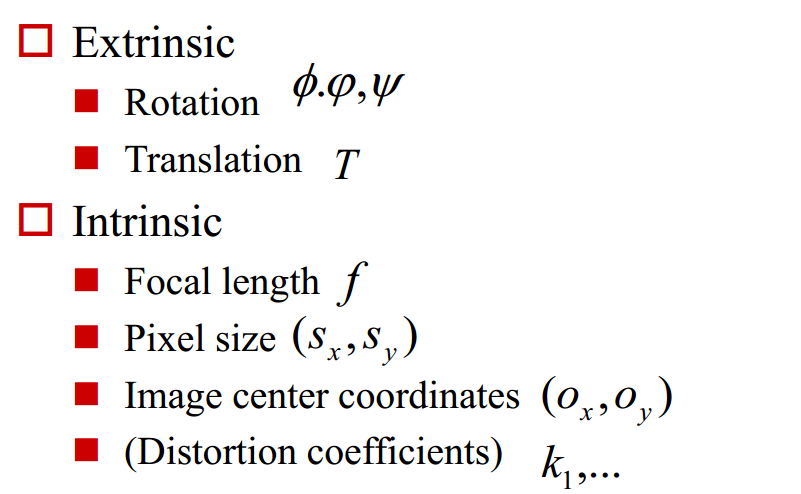

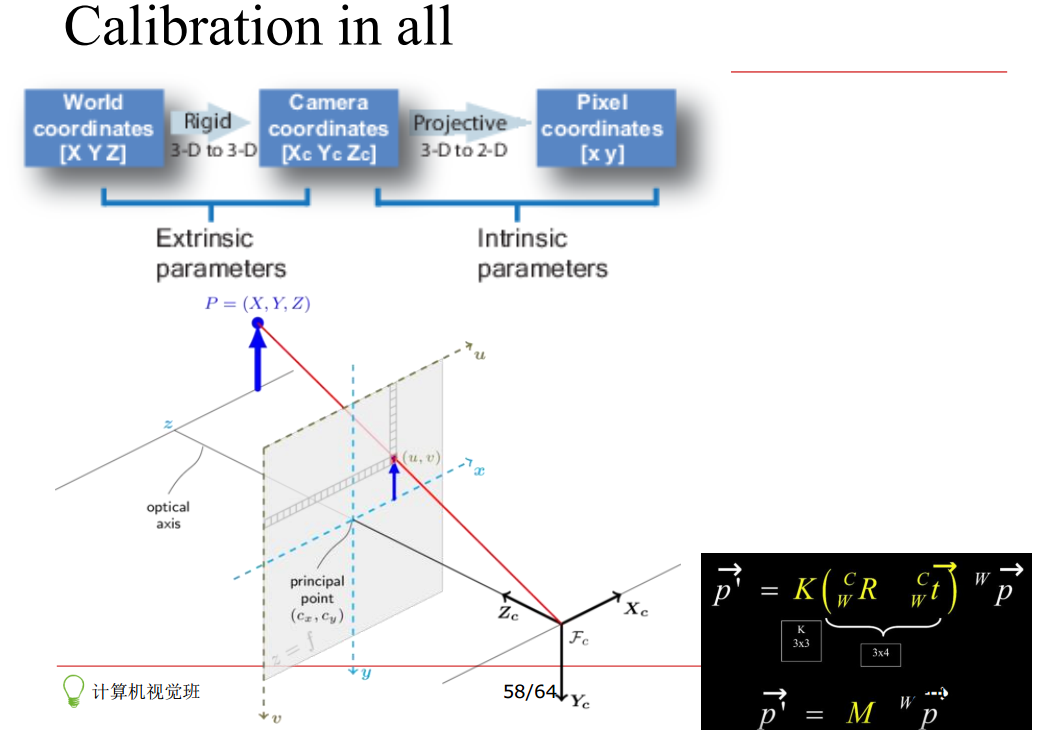
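Concretely, the world-to-camera transform Xc = R·Xw + t becomes one 4x4 matrix [R t; 0 0 0 1] acting on the homogeneous point (X, Y, Z, 1). The plain C++ sketch below builds that matrix, applies it, and inverts it using the fact that a rotation matrix is orthonormal, so the inverse is [Rᵀ, -Rᵀt] and no general matrix inversion is needed. `Mat4`, `Vec3`, `makeExtrinsic`, `transformPoint` and `invertRigid` are hypothetical names.

```cpp
#include <array>
#include <cassert>

using Mat4 = std::array<std::array<double, 4>, 4>;
using Vec3 = std::array<double, 3>;

// Builds [R t; 0 0 0 1], mapping world coordinates to camera coordinates: Xc = R * Xw + t.
Mat4 makeExtrinsic(const std::array<Vec3, 3>& R, const Vec3& t) {
    Mat4 T{};
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) T[i][j] = R[i][j];
        T[i][3] = t[i];
    }
    T[3][3] = 1.0;
    return T;
}

// Applies T to the homogeneous point (X, Y, Z, 1); the last row is (0, 0, 0, 1), so w stays 1.
Vec3 transformPoint(const Mat4& T, const Vec3& p) {
    Vec3 out{};
    for (int i = 0; i < 3; ++i)
        out[i] = T[i][0] * p[0] + T[i][1] * p[1] + T[i][2] * p[2] + T[i][3];
    return out;
}

// Inverse of a rigid transform: [R t]^-1 = [R^T  -R^T t] (camera -> world).
Mat4 invertRigid(const Mat4& T) {
    assert(T[3][0] == 0.0 && T[3][1] == 0.0 && T[3][2] == 0.0 && T[3][3] == 1.0);
    Mat4 inv{};
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) inv[i][j] = T[j][i];  // R^T
        // (-R^T t)_i = -sum_k R[k][i] * t[k]
        inv[i][3] = -(T[0][i] * T[0][3] + T[1][i] * T[1][3] + T[2][i] * T[2][3]);
    }
    inv[3][3] = 1.0;
    return inv;
}
```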

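Camera calibration mainly estimates the camera's intrinsic parameters (focal lengths and principal point, usually together with the lens distortion coefficients) and its extrinsic parameters, i.e. the camera's rotation and translation.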

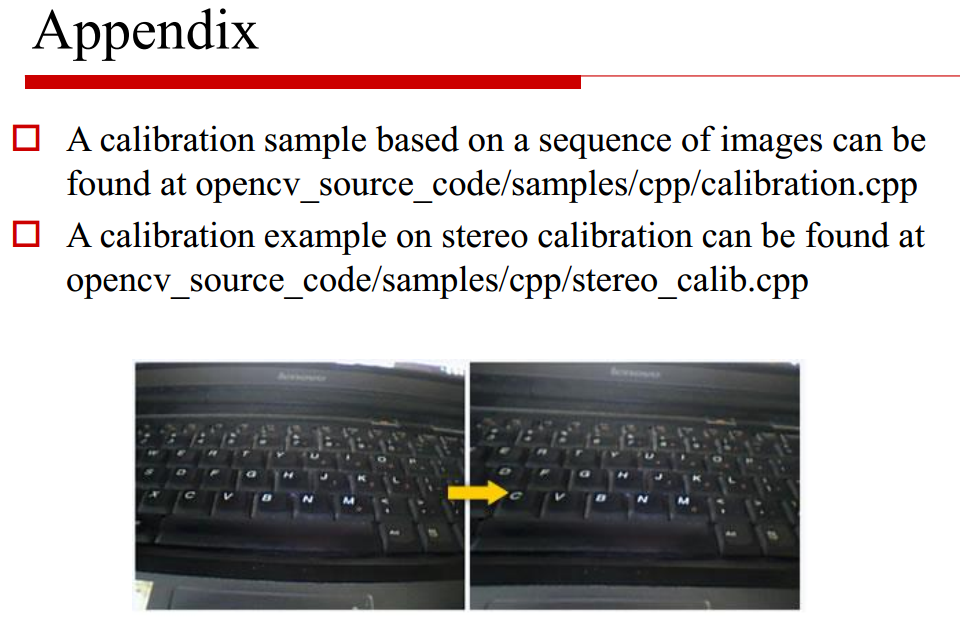
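Intrinsics and extrinsics combine into the 3x4 projection matrix P = K[R|t], where K = [[fx, 0, cx], [0, fy, cy], [0, 0, 1]] is built from the same four numbers passed to `CameraCalibration(fx, fy, cx, cy)` in the Simple AR project. P maps a homogeneous world point (X, Y, Z, 1) to (s·u, s·v, s). The sketch assumes zero skew and ignores lens distortion; `Mat3x4`, `projectionMatrix` and `projectWorldPoint` are hypothetical names.

```cpp
#include <array>
#include <cassert>

using Mat3x4 = std::array<std::array<double, 4>, 3>;

// P = K * [R | t], with K = [[fx, 0, cx], [0, fy, cy], [0, 0, 1]] (zero skew assumed).
// Rt holds the 3x4 extrinsic matrix [R | t].
Mat3x4 projectionMatrix(double fx, double fy, double cx, double cy, const Mat3x4& Rt) {
    const double K[3][3] = {{fx, 0.0, cx}, {0.0, fy, cy}, {0.0, 0.0, 1.0}};
    Mat3x4 P{};  // zero-initialized
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 3; ++k)
                P[i][j] += K[i][k] * Rt[k][j];
    return P;
}

// (s*u, s*v, s) = P * (X, Y, Z, 1); dividing by s gives the pixel (u, v).
void projectWorldPoint(const Mat3x4& P, double X, double Y, double Z, double& u, double& v) {
    double h[3];
    for (int i = 0; i < 3; ++i)
        h[i] = P[i][0] * X + P[i][1] * Y + P[i][2] * Z + P[i][3];
    assert(h[2] > 0.0);  // the point must lie in front of the camera
    u = h[0] / h[2];
    v = h[1] / h[2];
}
```

With R = I and t = (0, 0, 2), the world point (1, -0.5, 0) sits 2 units in front of the camera, and fx = fy = 500, (cx, cy) = (320, 240) place it at pixel (570, 115).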

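The imaging chain that calibration models: an object in the world coordinate system is brought into the camera coordinate system by a rigid-body transformation (rotation plus translation); perspective projection then maps it to ideal projected coordinates; the lens distortion model relates these to the actual, distorted image coordinates. Once the distortion parameters are known, the distortion can be corrected, which gives us an undistorted image.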
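The distortion step is commonly modeled with radial and tangential terms; the OpenCV calibration tutorial linked below uses coefficients (k1, k2, p1, p2, k3) applied to normalized coordinates (x, y) = (X/Z, Y/Z). The sketch shows that forward model plus one way to undo it, a simple fixed-point iteration. The inversion method is an assumption chosen for brevity, not necessarily how OpenCV or the Matlab toolbox implement correction; `Distortion`, `distort` and `undistort` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Distortion coefficients in the order k1, k2, p1, p2, k3.
struct Distortion {
    double k1, k2, p1, p2, k3;
};

// Forward model: ideal normalized coordinates (x, y) -> distorted normalized coordinates.
void distort(const Distortion& d, double x, double y, double& xd, double& yd) {
    const double r2 = x * x + y * y;
    const double radial = 1.0 + d.k1 * r2 + d.k2 * r2 * r2 + d.k3 * r2 * r2 * r2;
    xd = x * radial + 2.0 * d.p1 * x * y + d.p2 * (r2 + 2.0 * x * x);
    yd = y * radial + d.p1 * (r2 + 2.0 * y * y) + 2.0 * d.p2 * x * y;
}

// Correction (assumed method): fixed-point iteration x <- (xd - tangential(x, y)) / radial(x, y),
// starting from the distorted point. Converges for moderate distortion near the image center.
void undistort(const Distortion& d, double xd, double yd, double& x, double& y, int iterations = 20) {
    assert(iterations > 0);
    x = xd;
    y = yd;
    for (int i = 0; i < iterations; ++i) {
        const double r2 = x * x + y * y;
        const double radial = 1.0 + d.k1 * r2 + d.k2 * r2 * r2 + d.k3 * r2 * r2 * r2;
        const double dx = 2.0 * d.p1 * x * y + d.p2 * (r2 + 2.0 * x * x);
        const double dy = d.p1 * (r2 + 2.0 * y * y) + 2.0 * d.p2 * x * y;
        x = (xd - dx) / radial;
        y = (yd - dy) / radial;
    }
}
```

To get pixel coordinates, the distorted (xd, yd) are then scaled and shifted by the intrinsics: u = fx·xd + cx, v = fy·yd + cy.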

How calibration is done in OpenCV:

http://docs.opencv.org/2.4/doc/tutorials/calib3d/camera_calibration/camera_calibration.html

How calibration is done with the Camera Calibration Toolbox for Matlab:

http://www.vision.caltech.edu/bouguetj/calib_doc/index.html#parameters

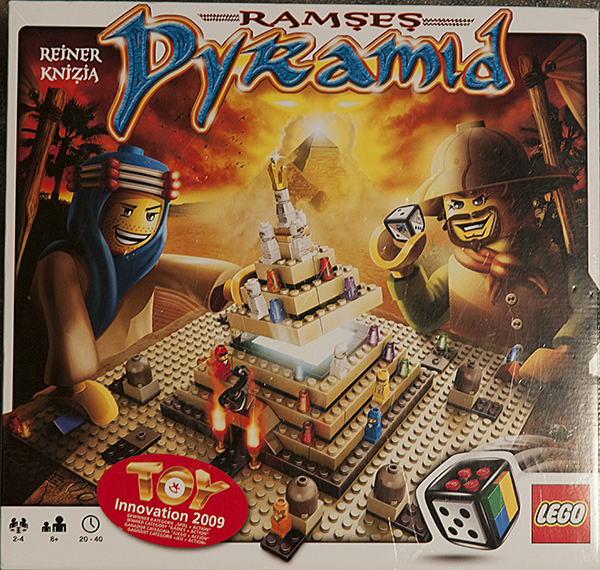

V. Project: Simple AR

```cpp
////////////////////////////////////////////////////////////////////
// File includes:
#include "ARDrawingContext.hpp"
#include "ARPipeline.hpp"
#include "DebugHelpers.hpp"

////////////////////////////////////////////////////////////////////
// Standard includes:
#include <iostream>
#include <string>
#include <opencv2/opencv.hpp>
#include <windows.h> // Alvin: must be included before <gl/gl.h> on Windows
#include <gl/gl.h>
#include <gl/glu.h>

/**
 * Processes a recorded video or live view from web-camera and allows you to adjust homography refinement and
 * reprojection threshold in runtime.
 */
void processVideo(const cv::Mat& patternImage, CameraCalibration& calibration, cv::VideoCapture& capture);

/**
 * Processes single image. The processing goes in a loop.
 * It allows you to control the detection process by adjusting homography refinement switch and
 * reprojection threshold in runtime.
 */
void processSingleImage(const cv::Mat& patternImage, CameraCalibration& calibration, const cv::Mat& image);

/**
 * Performs full detection routine on camera frame and draws the scene using drawing context.
 * In addition, this function draws an overlay with debug information on top of the AR window.
 * Returns true if processing loop should be stopped; otherwise - false.
 */
bool processFrame(const cv::Mat& cameraFrame, ARPipeline& pipeline, ARDrawingContext& drawingCtx);

int main(int argc, const char* argv[])
{
    // Change this calibration to yours (fx, fy, cx, cy):
    //CameraCalibration calibration(215.34823346868020f, 215.34823346868020f, 639.50000000000000f, 479.50000000000000f);
    //CameraCalibration calibration(884.90606895184135f, 884.90606895184135f, 319.50000000000000f, 239.50000000000000f);
    CameraCalibration calibration(651.54400468036533f, 651.54400468036533f, 319.50000000000000f, 239.50000000000000f);

    // 1. Load a pattern and a test image.
    //    A local array is used because writing argv[1]/argv[2] directly goes out of
    //    bounds when the program is started without command-line arguments.
    const char* demoArgs[] = { "marklessAr", "PyramidPattern.jpg", "PyramidPatternTest.bmp" };
    argc = 3;
    argv = demoArgs;

    //// 2. Load a pattern and open a webcam:
    //const char* demoArgs[] = { "realtimeAR", "pattern.bmp" };
    //argc = 2;
    //argv = demoArgs;

    if (argc < 2)
    {
        std::cout << "Input image not specified" << std::endl;
        std::cout << "Usage: markerless_ar_demo <pattern image> [filepath to recorded video or image]" << std::endl;
        return 1;
    }

    // Try to read the pattern:
    cv::Mat patternImage = cv::imread(argv[1]);
    if (patternImage.empty())
    {
        std::cout << "Input image cannot be read" << std::endl;
        return 2;
    }

    if (argc == 2)
    {
        // A named object is required: a temporary cannot bind to a non-const reference.
        cv::VideoCapture webcam(0);
        processVideo(patternImage, calibration, webcam);
    }
    else if (argc == 3)
    {
        std::string input = argv[2];
        cv::Mat testImage = cv::imread(input);
        if (!testImage.empty())
        {
            processSingleImage(patternImage, calibration, testImage);
        }
        else
        {
            cv::VideoCapture cap;
            if (cap.open(input))
            {
                processVideo(patternImage, calibration, cap);
            }
            else
            {
                std::cout << "Cannot open " << input << " as an image or a video" << std::endl;
                return 2;
            }
        }
    }
    else
    {
        std::cerr << "Invalid number of arguments passed" << std::endl;
        return 1;
    }

    return 0;
}

void processVideo(const cv::Mat& patternImage, CameraCalibration& calibration, cv::VideoCapture& capture)
{
    // Grab first frame to get the frame dimensions
    cv::Mat currentFrame;
    capture >> currentFrame;

    // Check the capture succeeded:
    if (currentFrame.empty())
    {
        std::cout << "Cannot open video capture device" << std::endl;
        return;
    }

    cv::Size frameSize(currentFrame.cols, currentFrame.rows);

    ARPipeline pipeline(patternImage, calibration);
    ARDrawingContext drawingCtx("Markerless AR", frameSize, calibration);

    bool shouldQuit = false;
    do
    {
        capture >> currentFrame;
        if (currentFrame.empty())
        {
            shouldQuit = true;
            continue;
        }

        shouldQuit = processFrame(currentFrame, pipeline, drawingCtx);
    } while (!shouldQuit);
}

void processSingleImage(const cv::Mat& patternImage, CameraCalibration& calibration, const cv::Mat& image)
{
    cv::Size frameSize(image.cols, image.rows);
    ARPipeline pipeline(patternImage, calibration);
    ARDrawingContext drawingCtx("Markerless AR", frameSize, calibration);

    bool shouldQuit = false;
    do
    {
        shouldQuit = processFrame(image, pipeline, drawingCtx);
    } while (!shouldQuit);
}

bool processFrame(const cv::Mat& cameraFrame, ARPipeline& pipeline, ARDrawingContext& drawingCtx)
{
    // Clone image used for background (we will draw overlay on it)
    cv::Mat img = cameraFrame.clone();

    // Draw information (cv::Scalar is in BGR order, so (0, 200, 0) is green):
    const cv::Scalar green(0, 200, 0);
    if (pipeline.m_patternDetector.enableHomographyRefinement)
        cv::putText(img, "Pose refinement: On ('h' to switch off)", cv::Point(10, 15), cv::FONT_HERSHEY_PLAIN, 1, green);
    else
        cv::putText(img, "Pose refinement: Off ('h' to switch on)", cv::Point(10, 15), cv::FONT_HERSHEY_PLAIN, 1, green);

    cv::putText(img, "RANSAC threshold: " + ToString(pipeline.m_patternDetector.homographyReprojectionThreshold) + " (use '-'/'+' to adjust)",
                cv::Point(10, 30), cv::FONT_HERSHEY_PLAIN, 1, green);

    // Set a new camera frame:
    drawingCtx.updateBackground(img);

    // Find a pattern and update its detection status:
    drawingCtx.isPatternPresent = pipeline.processFrame(cameraFrame);

    // Update a pattern pose:
    drawingCtx.patternPose = pipeline.getPatternLocation();

    // Request redraw of the window:
    drawingCtx.updateWindow();

    // Read the keyboard input:
    int keyCode = cv::waitKey(5);

    bool shouldQuit = false;
    if (keyCode == '+' || keyCode == '=')
    {
        pipeline.m_patternDetector.homographyReprojectionThreshold += 0.2f;
        // Clamp to at most 10 (plain comparison instead of the min() macro from <windows.h>)
        if (pipeline.m_patternDetector.homographyReprojectionThreshold > 10.0f)
            pipeline.m_patternDetector.homographyReprojectionThreshold = 10.0f;
    }
    else if (keyCode == '-')
    {
        pipeline.m_patternDetector.homographyReprojectionThreshold -= 0.2f;
        // Clamp to at least 0
        if (pipeline.m_patternDetector.homographyReprojectionThreshold < 0.0f)
            pipeline.m_patternDetector.homographyReprojectionThreshold = 0.0f;
    }
    else if (keyCode == 'h')
    {
        pipeline.m_patternDetector.enableHomographyRefinement = !pipeline.m_patternDetector.enableHomographyRefinement;
    }
    else if (keyCode == 27 || keyCode == 'q') // 27 = Esc
    {
        shouldQuit = true;
    }

    return shouldQuit;
}
```
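The '+'/'-' keys change `homographyReprojectionThreshold`. The `ARPipeline` source is not shown here, so as an assumption: if the pattern detector passes this value to `cv::findHomography` as `ransacReprojThreshold`, then a keypoint match counts as an inlier when the pattern point mapped through the estimated homography lands within that many pixels of its matched frame point. The plain C++ sketch below shows that inlier test; `Homography`, `Point2`, `reprojectionError` and `countInliers` are hypothetical names, not part of the project.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Homography = std::array<std::array<double, 3>, 3>;

struct Point2 {
    double x, y;
};

// Distance in pixels between H * src (after dividing by w) and the observed dst point.
double reprojectionError(const Homography& H, const Point2& src, const Point2& dst) {
    const double w = H[2][0] * src.x + H[2][1] * src.y + H[2][2];
    assert(w != 0.0);
    const double px = (H[0][0] * src.x + H[0][1] * src.y + H[0][2]) / w;
    const double py = (H[1][0] * src.x + H[1][1] * src.y + H[1][2]) / w;
    return std::hypot(px - dst.x, py - dst.y);
}

// Number of correspondences whose reprojection error does not exceed `threshold` pixels.
std::size_t countInliers(const Homography& H, const std::vector<Point2>& src,
                         const std::vector<Point2>& dst, double threshold) {
    assert(src.size() == dst.size());
    std::size_t inliers = 0;
    for (std::size_t i = 0; i < src.size(); ++i)
        if (reprojectionError(H, src[i], dst[i]) <= threshold) ++inliers;
    return inliers;
}
```

A smaller threshold rejects more matches (stricter, but pose estimation may fail on noisy frames); a larger one accepts more matches, including wrong ones.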

The result: