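Assignment 1

(1) Become proficient with Selenium: locating HTML elements, scraping Ajax-loaded page data, and waiting for HTML elements.

Use the Selenium framework to scrape information and images for one category of products on JD.com.

- Candidate site: http://www.jd.com/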

from selenium import webdriver
from selenium.webdriver.chrome.options import Options
# find_element(By...) works on Selenium 3 and 4; the find_element_by_* helpers were removed in Selenium 4.3
from selenium.webdriver.common.by import By
from selenium.webdriver.common.keys import Keys
import urllib.parse
import urllib.request
import threading
import sqlite3
import os
import datetime
import time


class MySpider:
    headers = {
        'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36'
    }
    imagePath = "download"

    def startUp(self, url, key):
        chrome_options = Options()
        # Uncomment the next two lines to run headless (no visible browser window)
        # chrome_options.add_argument('--headless')
        # chrome_options.add_argument('--disable-gpu')
        self.driver = webdriver.Chrome(options=chrome_options)
        self.threads = []
        self.No = 0
        self.imgNo = 0
        # Create the database
        try:
            self.con = sqlite3.connect("phones.db")
            self.cursor = self.con.cursor()
            try:
                # Drop the table if it already exists
                self.cursor.execute("drop table phones")
            except Exception:
                pass
            try:
                # Create a fresh table
                sql = "create table phones (mNo varchar(32) primary key, mMark varchar(256),mPrice varchar(32),mNote varchar(1024),mFile varchar(256))"
                self.cursor.execute(sql)
            except Exception:
                pass
        except Exception as err:
            print(err)
        # Create the download folder, then empty it
        try:
            if not os.path.exists(MySpider.imagePath):
                os.mkdir(MySpider.imagePath)
            images = os.listdir(MySpider.imagePath)
            for img in images:
                s = os.path.join(MySpider.imagePath, img)
                os.remove(s)
        except Exception as err:
            print(err)
        self.driver.get(url)
        # Find the search box, type the keyword, and press Enter
        keyInput = self.driver.find_element(By.ID, "key")
        keyInput.send_keys(key)
        keyInput.send_keys(Keys.ENTER)

    def closeUp(self):
        try:
            self.con.commit()
            self.con.close()
            self.driver.close()
        except Exception as err:
            print(err)

    def insertDB(self, mNo, mMark, mPrice, mNote, mFile):
        try:
            sql = "insert into phones (mNo,mMark,mPrice,mNote,mFile) values (?,?,?,?,?)"
            self.cursor.execute(sql, (mNo, mMark, mPrice, mNote, mFile))
        except Exception as err:
            print(err)

    def showDB(self):
        try:
            con = sqlite3.connect("phones.db")
            cursor = con.cursor()
            print("%-8s%-16s%-8s%-16s%s" % ("No", "Mark", "Price", "Image", "Note"))
            cursor.execute("select mNo,mMark,mPrice,mFile,mNote from phones order by mNo")
            rows = cursor.fetchall()
            for row in rows:
                print("%-8s %-16s %-8s %-16s %s" % (row[0], row[1], row[2], row[3], row[4]))
            con.close()
        except Exception as err:
            print(err)

    # Download one image: try src1 first, fall back to src2
    def download(self, src1, src2, mFile):
        data = None
        if src1:
            try:
                req = urllib.request.Request(src1, headers=MySpider.headers)
                resp = urllib.request.urlopen(req, timeout=10)
                data = resp.read()
            except Exception:
                pass
        if not data and src2:
            try:
                req = urllib.request.Request(src2, headers=MySpider.headers)
                resp = urllib.request.urlopen(req, timeout=10)
                data = resp.read()
            except Exception:
                pass
        if data:
            print("download begin", mFile)
            # os.path.join avoids the unescaped backslash in the path string
            with open(os.path.join(MySpider.imagePath, mFile), "wb") as fobj:
                fobj.write(data)
            print("download finish", mFile)

    def processSpider(self):
        try:
            time.sleep(1)
            print(self.driver.current_url)
            lis = self.driver.find_elements(By.XPATH, "//div[@id='J_goodsList']//li[@class='gl-item']")
            for li in lis:
                try:
                    src1 = li.find_element(By.XPATH, ".//div[@class='p-img']//a//img").get_attribute("src")
                except Exception:
                    src1 = ""
                try:
                    src2 = li.find_element(By.XPATH, ".//div[@class='p-img']//a//img").get_attribute("data-lazy-img")
                except Exception:
                    src2 = ""
                try:
                    price = li.find_element(By.XPATH, ".//div[@class='p-price']//i").text
                except Exception:
                    price = "0"
                try:
                    note = li.find_element(By.XPATH, ".//div[@class='p-name p-name-type-2']//em").text
                    # The first space-separated token of the title is treated as the brand
                    mark = note.split(" ")[0]
                    mark = mark.replace("爱心东东\n", "")
                    mark = mark.replace(",", "")
                    note = note.replace("爱心东东\n", "")
                    note = note.replace(",", "")
                except Exception:
                    note = ""
                    mark = ""
                self.No = self.No + 1
                # Zero-pad the running number to six digits
                no = str(self.No).zfill(6)
                print(no, mark, price)
                if src1:
                    src1 = urllib.parse.urljoin(self.driver.current_url, src1)
                    p = src1.rfind(".")
                    mFile = no + src1[p:]
                elif src2:
                    src2 = urllib.parse.urljoin(self.driver.current_url, src2)
                    p = src2.rfind(".")
                    mFile = no + src2[p:]
                if src1 or src2:
                    # Download in a separate thread so scraping is not blocked
                    T = threading.Thread(target=self.download, args=(src1, src2, mFile))
                    T.daemon = False
                    T.start()
                    self.threads.append(T)
                else:
                    mFile = ""
                self.insertDB(no, mark, price, note, mFile)
            # Keep turning pages until at least 100 items have been collected
            if self.No < 100:
                nextPage = self.driver.find_element(By.XPATH, "//span[@class='p-num']//a[@class='pn-next']")
                time.sleep(10)
                nextPage.click()
                self.processSpider()
        except Exception as err:
            print(err)

    def executeSpider(self, url, key):
        starttime = datetime.datetime.now()
        print("Spider starting......")
        self.startUp(url, key)
        print("Spider processing......")
        self.processSpider()
        print("Spider closing......")
        self.closeUp()
        for t in self.threads:
            t.join()
        print("Spider completed......")
        endtime = datetime.datetime.now()
        elapsed = (endtime - starttime).seconds
        print("Total ", elapsed, " seconds elapsed")


url = "http://www.jd.com"
spider = MySpider()
while True:
    print("1. Scrape")
    print("2. Show")
    print("3. Quit")
    s = input("Choose (1,2,3): ")
    if s == "1":
        # "手机" is the search keyword ("mobile phone")
        spider.executeSpider(url, "手机")
        continue
    elif s == "2":
        spider.showDB()
        continue
    elif s == "3":
        break
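The numbering and naming logic inside processSpider can be tried without a browser. The following is a minimal sketch, not the instructor's code: `make_record` is a hypothetical helper, and the sample title, the `page_url` default, and the image URL are made up for illustration. It zero-pads the running number, strips the "爱心东东" tag and commas from the title, takes the first space-separated token as the brand, and reuses the image URL's extension for the file name. Unlike the original `rfind(".")`, it reads the extension from the URL path with `os.path.splitext`, which is an assumption about what is wanted when a URL carries a query string.

```python
import os
import urllib.parse


def make_record(seq, note, img_src, page_url="https://search.jd.com/"):
    """Build (no, mark, note, file_name) in the spirit of processSpider.

    Hypothetical helper for illustration; page_url is an assumed base URL.
    """
    no = str(seq).zfill(6)                       # 1 -> "000001"
    note = note.replace("爱心东东\n", "").replace(",", "")
    mark = note.split(" ")[0]                    # first token is treated as the brand
    file_name = ""
    if img_src:
        # urljoin resolves protocol-relative sources such as //host/path.jpg
        full = urllib.parse.urljoin(page_url, img_src)
        ext = os.path.splitext(urllib.parse.urlparse(full).path)[1]
        file_name = no + ext
    return no, mark, note, file_name


if __name__ == "__main__":
    print(make_record(1, "爱心东东\nApple iPhone 12, 128GB",
                      "//img.example.com/n7/abc.jpg"))
```

An item with no image source gets an empty file name, matching the `mFile = ""` branch in the listing.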

(2) Reflections

I reproduced the instructor's code here; working through it should help with understanding the assignments below.

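Assignment 2

(1) Become proficient with Selenium: locating HTML elements, scraping Ajax-loaded page data, and waiting for HTML elements.

Use Selenium with MySQL storage to scrape stock data for three boards: "沪深A股" (Shanghai and Shenzhen A-shares), "上证A股" (Shanghai A-shares), and "深证A股" (Shenzhen A-shares).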

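graph TD
A[Browser sends the request and gets the current page source] -->|for each A-share board| B(Locate the board link by id)
B --> C{Multiple pages within one board}
C -->|locate the next-page button by XPath| D[click to turn the page, then sleep briefly]
C -->|locate tr/td by tag name| F[Extract the data]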
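The "extract the data" step maps each table cell to a named field by position. As a small sketch under assumptions (the helper name `row_to_record`, the English field names, and the sample values are illustrative and not taken from the site), the index mapping can be written once as a table. Cell 3 is skipped, as it is in main().

```python
# Column index in each <tr> -> field name. Index 3 is skipped, as in main().
# The English field names are assumptions chosen for readability.
COLUMNS = {
    0: "seq", 1: "code", 2: "name", 4: "latest_price",
    5: "change_pct", 6: "change_amt", 7: "volume", 8: "turnover",
    9: "amplitude", 10: "high", 11: "low", 12: "open_price", 13: "prev_close",
}


def row_to_record(cells):
    """Turn the text of one table row's cells into a dict of named fields."""
    needed = max(COLUMNS) + 1
    if len(cells) < needed:
        raise ValueError("expected at least %d cells, got %d" % (needed, len(cells)))
    return {field: cells[idx].strip() for idx, field in COLUMNS.items()}


if __name__ == "__main__":
    # Made-up sample row, in the same cell order as the table
    sample = ["1", "000001", "Sample Co", "", "10.00", "1.00%", "0.10",
              "1000", "10000", "2.00%", "10.20", "9.90", "9.95", "9.90"]
    print(row_to_record(sample))
```

With Selenium, the input would be something like `[td.text for td in tds]`; rows with too few cells raise an error instead of failing later with an IndexError on one particular column.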

import time

from selenium import webdriver
from selenium.webdriver.common.by import By

# Assumption: the original relies on a module-level `browser` that is not shown.
browser = webdriver.Chrome()

# mysql(...) is the author's own storage helper; its definition is not shown in the original.


def main():
    page_url = "http://quote.eastmoney.com/center/gridlist.html"
    browser.get(page_url)  # Have the browser send the request
    lists = ['hs', 'sh', 'sz']
    for i in range(3):  # One pass per A-share board
        different = browser.find_element(By.ID, 'nav_' + lists[i] + '_a_board')
        # browser.execute_script("arguments[0].click();", different)  # an alternative worth trying
        # Move to the board link so it is on screen, then click it
        webdriver.ActionChains(browser).move_to_element(different).click(different).perform()
        for page in range(2):  # Scrape several pages of this board (renamed so it no longer shadows i)
            time.sleep(3)
            # tbody holding the rows we want; an XPath starting with // searches the whole page
            informations = browser.find_element(By.XPATH, "//div[@class='listview full']//tbody")
            for information in informations.find_elements(By.XPATH, "./tr"):
                tds = information.find_elements(By.TAG_NAME, "td")
                seq = tds[0].text              # row number
                code = tds[1].text
                name = tds[2].text
                latest_price = tds[4].text
                zhangdiefu = tds[5].text       # percent change
                zhangdiee = tds[6].text        # price change
                chengjiaoliang = tds[7].text   # volume
                chengjiaoe = tds[8].text       # turnover
                zhenfu = tds[9].text           # amplitude
                zuigao = tds[10].text          # high
                zuidi = tds[11].text           # low
                jinkai = tds[12].text          # open
                zuoshou = tds[13].text         # previous close
                mysql(seq, code, name, latest_price, zhangdiefu, zhangdiee, chengjiaoliang, chengjiaoe, zhenfu, zuigao, zuidi, jinkai, zuoshou)
                print(seq, code, name, latest_price)
            # Re-locate the paginator on each page, since Ajax redraws the table
            next_page = browser.find_element(By.ID, 'main-table_paginate')
            next_page.find_element(By.XPATH, "./a[@class='next paginate_button']").click()
            time.sleep(3)
        time.sleep(12)


if __name__ == '__main__':
    main()

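(2) Reflections

Locating the stock data was fairly straightforward: find the next-page button and click it to turn the page. After finishing one board and before clicking the next, remember to scroll the page so that the control is actually visible; ActionChains takes care of this. The td cells that wrap the data are easy to work with: fetch them with By.TAG_NAME as above, read .text directly, and then store the values in MySQL.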

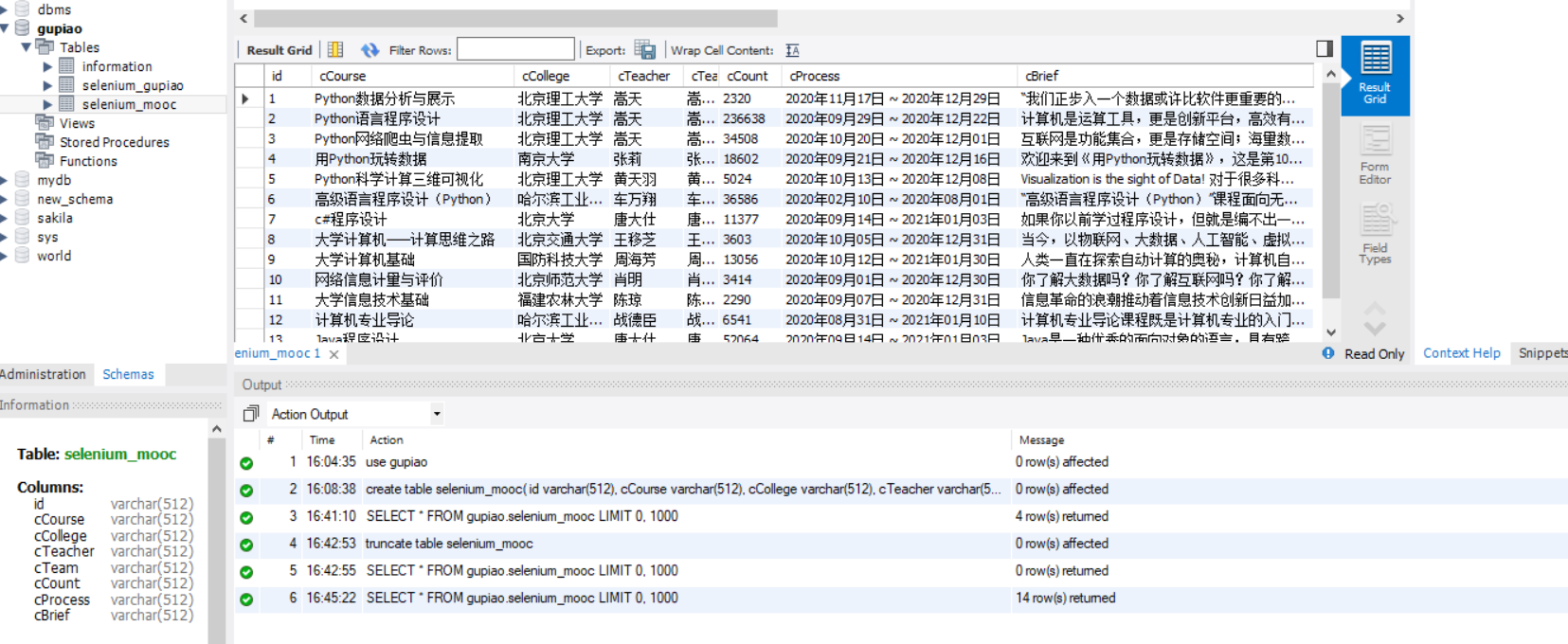
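The mysql(...) helper called in main() is not shown. One way such a helper might look is sketched below, written against the generic Python DB-API so that it runs here with the standard-library sqlite3 module standing in for MySQL. The table name `stocks`, the column names, and the function names `build_insert` and `save_row` are all assumptions. With a MySQL driver such as pymysql, the placeholder would be `%s` instead of `?`.

```python
import sqlite3

# Assumed column names, one per value passed to the helper
FIELDS = ["seq", "code", "name", "latest_price", "change_pct", "change_amt",
          "volume", "turnover", "amplitude", "high", "low", "open_price",
          "prev_close"]


def build_insert(table, fields, placeholder):
    """Return a parameterized INSERT statement for the given fields."""
    cols = ", ".join(fields)
    marks = ", ".join([placeholder] * len(fields))
    return "INSERT INTO %s (%s) VALUES (%s)" % (table, cols, marks)


def save_row(con, values, table="stocks", placeholder="?"):
    """Insert one row through any DB-API connection and commit."""
    if len(values) != len(FIELDS):
        raise ValueError("expected %d values, got %d" % (len(FIELDS), len(values)))
    cur = con.cursor()
    # Values are passed separately so the driver handles quoting
    cur.execute(build_insert(table, FIELDS, placeholder), tuple(values))
    con.commit()


if __name__ == "__main__":
    con = sqlite3.connect(":memory:")  # stand-in for a MySQL connection
    con.execute("CREATE TABLE stocks (%s)" % ", ".join(f + " TEXT" for f in FIELDS))
    save_row(con, ["1", "000001", "Sample Co", "10.00", "1.00%", "0.10",
                   "1000", "10000", "2.00%", "10.20", "9.90", "9.95", "9.90"])
    print(con.execute("SELECT code, name FROM stocks").fetchall())
```

Passing values as parameters rather than formatting them into the SQL string keeps stock names with quotes or unusual characters from breaking the statement.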

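Assignment 3

(1) Become proficient with Selenium: locating HTML elements, simulating user login, scraping Ajax-loaded page data, and waiting for HTML elements.

Use Selenium with MySQL to scrape course information from the China MOOC site (course number, course name, school, lead instructor, team members, enrollment count, course schedule, course description).

- China MOOC site: https://www.icourse163.org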

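graph TD
A[Browser sends the request and gets the current page source] -->|avoid failing on elements not yet present| B(Use wait.until)
B --> C(Get all courses on the current page and part of the data for each course)
C -->|open each course URL| D[Get the detail page source and extract the remaining data]
C -->|hold until the remaining data is ready| F[Store everything in MySQL together]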
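The wait.until step can be understood as a polling loop: call a condition repeatedly until it returns something truthy or a timeout expires. The sketch below illustrates that idea in plain Python so it runs without a browser. `wait_until` and its parameters are hypothetical names, and this is a simplified illustration of the technique, not Selenium's implementation.

```python
import time


def wait_until(condition, timeout=10.0, poll=0.5):
    """Call condition() until it returns a truthy value or timeout seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            value = condition()
            if value:
                return value
        except LookupError:
            # Stand-in for "element not found yet": keep polling
            pass
        if time.monotonic() >= deadline:
            raise TimeoutError("condition not met within %.1f s" % timeout)
        time.sleep(poll)


if __name__ == "__main__":
    ready_at = time.monotonic() + 0.3

    def element_appears():
        # Simulates an element that is rendered 0.3 s after the page loads
        if time.monotonic() < ready_at:
            raise LookupError("not rendered yet")
        return "<div class='m-course-list'>"

    print(wait_until(element_appears, timeout=2.0, poll=0.1))
```

Compared with a fixed `time.sleep(3)`, a polling wait returns as soon as the element is available and fails loudly when it never appears.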

import time

from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support import ui

# Assumption: the original relies on a module-level `browser` that is not shown.
browser = webdriver.Chrome()

# mysql(...) is the author's own storage helper; its definition is not shown in the original.


def main():
    page_url = "https://www.icourse163.org/search.htm?search=python#type=30&orderBy=0&pageIndex=1&courseTagType=1"
    wait = ui.WebDriverWait(browser, 10)
    browser.get(page_url)  # Have the browser send the request
    # Wait for the filter checkbox, then click it
    wait.until(lambda browser: browser.find_element(By.XPATH, "//label[@class='ux-check']/div[@class='check_box ux-check_unchecked']"))
    browser.find_element(By.XPATH, "//label[@class='ux-check']/div[@class='check_box ux-check_unchecked']").click()
    time.sleep(3)
    # Wait for the course list to be rendered
    wait.until(lambda browser: browser.find_element(By.XPATH, "//div/div[@class='m-course-list']/div"))
    informations = browser.find_element(By.XPATH, "//div/div[@class='m-course-list']/div")
    i = 0
    for information in informations.find_elements(By.XPATH, "./div"):
        datas = information.find_element(By.XPATH, "./div[@class='g-mn1']/div[@class='g-mn1c']/div[@class='cnt f-pr']")
        university = datas.find_element(By.XPATH, "./div[@class='t2 f-fc3 f-nowrp f-f0']/a[position()=1]")
        college = university.text
        Teacher = datas.find_element(By.XPATH, "./div[@class='t2 f-fc3 f-nowrp f-f0']/a[position()=2]")
        teacher = Teacher.text
        # team = datas.find_element(By.XPATH, "./div[@class='t2 f-fc3 f-nowrp f-f0']/span/span/a")
        href = datas.find_element(By.XPATH, "./a")
        xiangqing = href.get_attribute("href")  # URL of the course detail page
        i += 1
        print(i, college, teacher, xiangqing)
        # dianji = datas.find_element(By.XPATH, "./a").click()
        # time.sleep(5)
        # Open the detail page in a second browser so the list page stays untouched
        browser2 = webdriver.Chrome()
        wait2 = ui.WebDriverWait(browser2, 10)  # separate name so the list-page wait is not overwritten
        browser2.get(xiangqing)  # Have the browser send the request
        wait2.until(lambda browser2: browser2.find_element(
            By.XPATH, "//div[@class='f-fl course-title-wrapper']/span[position()=1]"))
        name = browser2.find_element(By.XPATH, "//div[@class='f-fl course-title-wrapper']/span[position()=1]")
        course = name.text
        Process = browser2.find_element(By.XPATH, "//div[@class='course-enroll-info_course-info_term-info_term-time']/span[position()=2]")
        process = Process.text
        people = browser2.find_element(By.XPATH, "//div[@class='course-enroll-info_course-enroll_price-enroll']/span")
        count = people.text.split()[1]
        jieshao = browser2.find_element(By.XPATH, "//div[@class='course-heading-intro']/div[position()=1]")
        brief = jieshao.text
        wait2.until(lambda browser2: browser2.find_element(
            By.XPATH, "//div[@class='um-list-slider f-pr']/div[@class='um-list-slider_con']"))
        teams = browser2.find_element(By.XPATH, "//div[@class='um-list-slider f-pr']/div[@class='um-list-slider_con']")
        # Concatenate the names of all team members
        team = ''
        for each in teams.find_elements(By.XPATH, "./div"):
            team_part = each.find_element(By.XPATH, "./div/div[@class='cnt f-fl']/h3")
            part = team_part.text
            team += part
        print(team, course, process, count, brief)
        mysql(i, course, college, teacher, team, count, process, brief)
        browser2.quit()  # Close the detail browser so windows do not pile up


if __name__ == '__main__':
    main()

# Click the next page (not enabled)
# wait.until(lambda browser: browser.find_element(By.XPATH, "//li[@class='ux-pager_btn ux-pager_btn__next']/a"))
# next_page = browser.find_element(By.XPATH, "//li[@class='ux-pager_btn ux-pager_btn__next']/a").click()

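(2) Reflections

Locating elements on this site was tedious, and the lookups frequently raised errors along the way. A class attribute whose value contains spaces did not cause trouble, though: XPath compares @class as one whole string, so a predicate that spells out the full value, spaces included, still matches. Once the elements are located, the data is extracted and stored in MySQL.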
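The observation about spaces in class values can be checked offline. The sketch below uses the standard-library xml.etree.ElementTree, which supports a limited XPath subset, on a made-up fragment; the markup and the helper name `find_by_class` are illustrative only. An `[@class='...']` predicate compares the whole attribute string, so the full value with its spaces matches, while a single class name taken from that value does not.

```python
import xml.etree.ElementTree as ET

# Made-up fragment that mimics the class naming style on the course list page
SAMPLE = """
<root>
  <div class="t2 f-fc3 f-nowrp f-f0"><a>School</a><a>Teacher</a></div>
  <div class="t2"><a>Other</a></div>
</root>
"""


def find_by_class(root, class_value):
    """Return the div elements whose class attribute equals class_value exactly."""
    return root.findall(".//div[@class='%s']" % class_value)


if __name__ == "__main__":
    root = ET.fromstring(SAMPLE)
    full = find_by_class(root, "t2 f-fc3 f-nowrp f-f0")
    print(len(full), [a.text for a in full[0]])
    # A single class name out of the list is not an exact match
    print(len(find_by_class(root, "f-fc3")))
```

This is also why copying a class value from the page works only as long as the site keeps the same classes in the same order; a change in either breaks the exact-match predicate.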