Tiny-ImageNet can be downloaded from: http://cs231n.stanford.edu/tiny-imagenet-200.zip

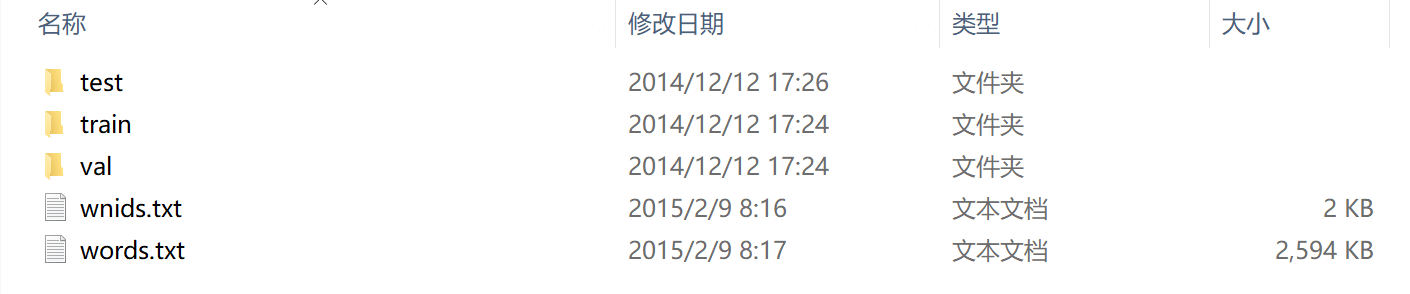

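After the download finishes, unzip the archive. The extracted `tiny-imagenet-200` directory (inspected here on Windows) contains `train` and `val` folders, along with the rest of the dataset files.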
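
The download and extraction can also be scripted. The following is a minimal sketch using only the Python standard library; the function name `download_and_extract` and the default local file name are assumptions made for illustration, not part of the original workflow.

```python
import os
import urllib.request
import zipfile

URL = "http://cs231n.stanford.edu/tiny-imagenet-200.zip"


def download_and_extract(url=URL, zip_path="tiny-imagenet-200.zip", dest_dir="."):
    """Download the archive if it is not already on disk, then extract it.

    Returns the sorted top-level names found inside the archive.
    """
    if not os.path.exists(zip_path):
        # Skipped when zip_path already exists, so a finished download is reused.
        urllib.request.urlretrieve(url, zip_path)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest_dir)
        # Zip member names always use '/' as the separator.
        return sorted({name.split("/")[0] for name in archive.namelist()})
```

Calling `download_and_extract()` in the working directory should leave a `tiny-imagenet-200` folder next to the zip file, which matches the relative paths used in the rest of this post.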

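In the `train` folder, all images are already placed in folders named after their class, just like ImageNet, so nothing needs to be done there. The `val` folder, however, keeps all images in a single folder, so a script is needed to sort them into per-class folders, as with ImageNet, to match the layout PyTorch expects when reading the data. The following script does this: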

    import glob
    import os
    from shutil import move

    target_folder = './tiny-imagenet-200/val/'

    # Map each validation image file name to its class ID.
    val_dict = {}
    with open('./tiny-imagenet-200/val/val_annotations.txt', 'r') as f:
        for line in f:
            # Fields are tab-separated: file name, class ID, then the bounding box.
            split_line = line.strip().split('\t')
            val_dict[split_line[0]] = split_line[1]

    paths = glob.glob('./tiny-imagenet-200/val/images/*')

    # Create val/<class ID>/images/ for every class that appears.
    for path in paths:
        file = os.path.basename(path)  # also works with Windows path separators
        folder = val_dict[file]
        os.makedirs(os.path.join(target_folder, folder, 'images'), exist_ok=True)

    # Move each image into the folder of its class.
    for path in paths:
        file = os.path.basename(path)
        folder = val_dict[file]
        dest = os.path.join(target_folder, folder, 'images', file)
        move(path, dest)

    # The original flat images folder is now empty and can be removed.
    os.rmdir('./tiny-imagenet-200/val/images')
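
Before moving on, it can be worth checking that the reorganization did what was intended. The sketch below is one way to do that; the helper name `count_images_per_class` is hypothetical, and it assumes the `val/<class ID>/images/` layout produced by the script above.

```python
import os


def count_images_per_class(val_dir):
    """Return {class_folder: number of files in <class_folder>/images}."""
    counts = {}
    for entry in os.scandir(val_dir):
        images_dir = os.path.join(entry.path, "images")
        # Plain files such as val_annotations.txt are skipped here.
        if entry.is_dir() and os.path.isdir(images_dir):
            counts[entry.name] = len(os.listdir(images_dir))
    return counts
```

For the standard archive, `count_images_per_class('./tiny-imagenet-200/val')` would be expected to return 200 entries with 50 images each; a different result suggests the script was interrupted or run on a partially extracted archive.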

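This makes the Tiny-ImageNet file layout essentially the same as ImageNet's, so the `DataLoader` code can be written in much the same way. Here the images are resized to 32×32: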

    import os

    import torch
    from torchvision import datasets, transforms


    def tiny_loader(batch_size, data_dir):
        num_label = 200
        # Per-channel mean and standard deviation used for normalization.
        normalize = transforms.Normalize((0.4802, 0.4481, 0.3975), (0.2770, 0.2691, 0.2821))
        # Training: random crop resized to 32x32, plus random horizontal flip.
        transform_train = transforms.Compose([
            transforms.RandomResizedCrop(32),
            transforms.RandomHorizontalFlip(),
            transforms.ToTensor(),
            normalize,
        ])
        # Evaluation: deterministic resize to 32x32.
        transform_test = transforms.Compose([transforms.Resize(32), transforms.ToTensor(), normalize])
        trainset = datasets.ImageFolder(root=os.path.join(data_dir, 'train'), transform=transform_train)
        testset = datasets.ImageFolder(root=os.path.join(data_dir, 'val'), transform=transform_test)
        train_loader = torch.utils.data.DataLoader(trainset, batch_size=batch_size, shuffle=True, pin_memory=True)
        test_loader = torch.utils.data.DataLoader(testset, batch_size=batch_size, shuffle=False, pin_memory=True)
        return train_loader, test_loader, num_label
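
The reason the folder layout matters is that `ImageFolder` derives labels from the subfolder names: it sorts the class folder names and numbers them from 0. The sketch below imitates that step with the standard library only, to show that `train` and `val` end up with the same label for the same class once their folder names match. The function name `class_to_index` is made up for this illustration and is not a torchvision API.

```python
import os


def class_to_index(root):
    """Imitate ImageFolder's labelling: sorted subfolder names -> 0..N-1."""
    classes = sorted(e.name for e in os.scandir(root) if e.is_dir())
    return {name: idx for idx, name in enumerate(classes)}
```

Because only directories are considered, the `val_annotations.txt` file left in `val` does not become a class. A quick consistency check under these assumptions is `class_to_index(train_dir) == class_to_index(val_dir)`.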

Training can then proceed as usual.