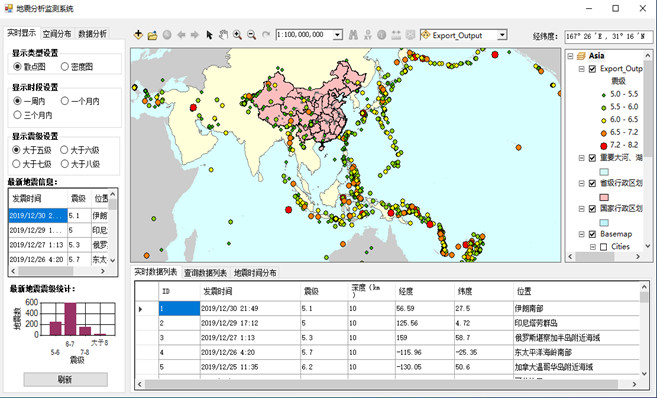

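0. Introduction: Earthquake data crawled with Python can serve as a dynamic data source for an earthquake monitoring and analysis system built through ArcGIS secondary development. Space is limited, so this post focuses on the crawler.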

1. Data source:

2. Sample code:

    # -*- coding: utf-8 -*-
    """
    Created on Thur Sep 19 08:31:07 2019

    @author: L JL
    """
    import csv

    import requests
    from bs4 import BeautifulSoup  # the 'lxml' parser needs the lxml package installed
    from requests.exceptions import RequestException


    def get_one_page(i):
        """Fetch result page i; return the response, or None if the request fails."""
        url = ('http://ditu.92cha.com/dizhen.php?page=%s&dizhen_ly=china'
               '&dizhen_zjs=5&dizhen_zje=10'
               '&dizhen_riqis=2016-01-01&dizhen_riqie=2019-12-30' % i)
        head = {
            'User-Agent': "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.140 Safari/537.36 Edge/17.17134"}
        try:
            return requests.get(url, headers=head, timeout=10)
        except RequestException:
            print('Request failed')
            return None


    def parse_one_page(html, ulist):
        """Append one list of cell values per table row to ulist."""
        soup = BeautifulSoup(html.text, 'lxml')
        # Skip the first <tr>, which is the table header.
        for tr in soup.find_all('tr')[1:]:
            # Iterating over a <tr> yields its <td> cells as well as the
            # whitespace between them, so the cells sit at the odd indices.
            ulist.append([td.string for td in tr])


    def save_contents(ulist):
        """Write the collected rows to data0.csv."""
        with open('data0.csv', 'w', encoding='utf-8-sig', newline='') as f:
            writer = csv.writer(f)
            writer.writerow(['Time', 'Magnitude', 'Longitude', 'Latitude',
                             'Depth (km)', 'Location'])
            for row in ulist:
                writer.writerow([row[1], row[3], row[5], row[7], row[9], row[11]])


    def main(page):
        ulist = []
        for i in range(1, page):  # crawls pages 1 to page - 1
            html = get_one_page(i)
            if html is not None:
                parse_one_page(html, ulist)
        save_contents(ulist)
        print('Done')


    if __name__ == '__main__':
        main(21)  # exclusive upper bound of the pages to crawl
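
The script above assembles its request URL with string formatting, which makes it easy for stray spaces or unescaped characters to slip into the query string. As a minimal sketch, the same URL could be built with the standard library's `urlencode`. The helper name `build_page_url` and its keyword arguments are hypothetical, and the meanings attached to the query parameters (magnitude range, date range) are only my reading of their names and values, not something the site documents here.

```python
from urllib.parse import urlencode

BASE_URL = 'http://ditu.92cha.com/dizhen.php'


def build_page_url(page, min_mag=5, max_mag=10,
                   start='2016-01-01', end='2019-12-30'):
    """Build the query URL for one result page (hypothetical helper).

    Assumed parameter meanings, inferred from the names only:
    dizhen_zjs / dizhen_zje    -> lower / upper magnitude bound
    dizhen_riqis / dizhen_riqie -> start / end date
    """
    params = {
        'page': page,
        'dizhen_ly': 'china',
        'dizhen_zjs': min_mag,
        'dizhen_zje': max_mag,
        'dizhen_riqis': start,
        'dizhen_riqie': end,
    }
    # urlencode escapes each value and joins the pairs with '&'.
    return BASE_URL + '?' + urlencode(params)


print(build_page_url(1))
```

With this helper, changing the date or magnitude range becomes a matter of passing different arguments rather than editing the URL string.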

3. The crawl results are shown in the figure below:
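
One way to sanity-check the output is to read the CSV back and convert the numeric columns. The sketch below is an assumption-laden example rather than part of the original script: `load_quakes` and the dictionary keys are hypothetical names, and it relies only on the column order written by `save_contents` (time, magnitude, longitude, latitude, depth, location), not on the header text.

```python
import csv


def load_quakes(path):
    """Read the scraper's CSV into a list of dicts (hypothetical helper)."""
    quakes = []
    # utf-8-sig strips the BOM that the scraper writes at the start of the file.
    with open(path, encoding='utf-8-sig', newline='') as f:
        reader = csv.reader(f)
        next(reader, None)  # skip the header row
        for row in reader:
            if len(row) < 6:
                continue  # skip incomplete rows
            try:
                quakes.append({
                    'time': row[0],
                    'magnitude': float(row[1]),
                    'longitude': float(row[2]),
                    'latitude': float(row[3]),
                    'depth_km': float(row[4]),
                    'location': row[5],
                })
            except ValueError:
                continue  # skip rows whose numeric cells do not parse
    return quakes
```

Calling `load_quakes('data0.csv')` after a crawl returns one dictionary per earthquake, which can then be filtered or sorted, for example by `magnitude`.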

4. Solution design:

---------------------------------------------------------2019.9--------------------------------------------------------------------