1. Which logs to collect

K8S system component logs (node-level logs)

Logs of the applications deployed in the K8S cluster (pod-level logs)

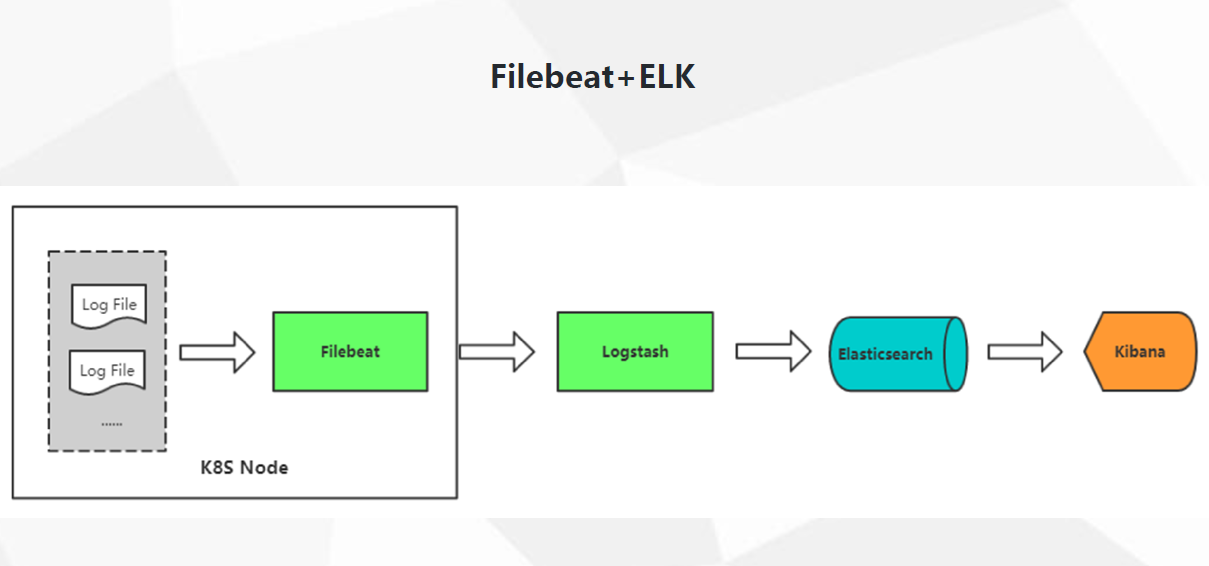

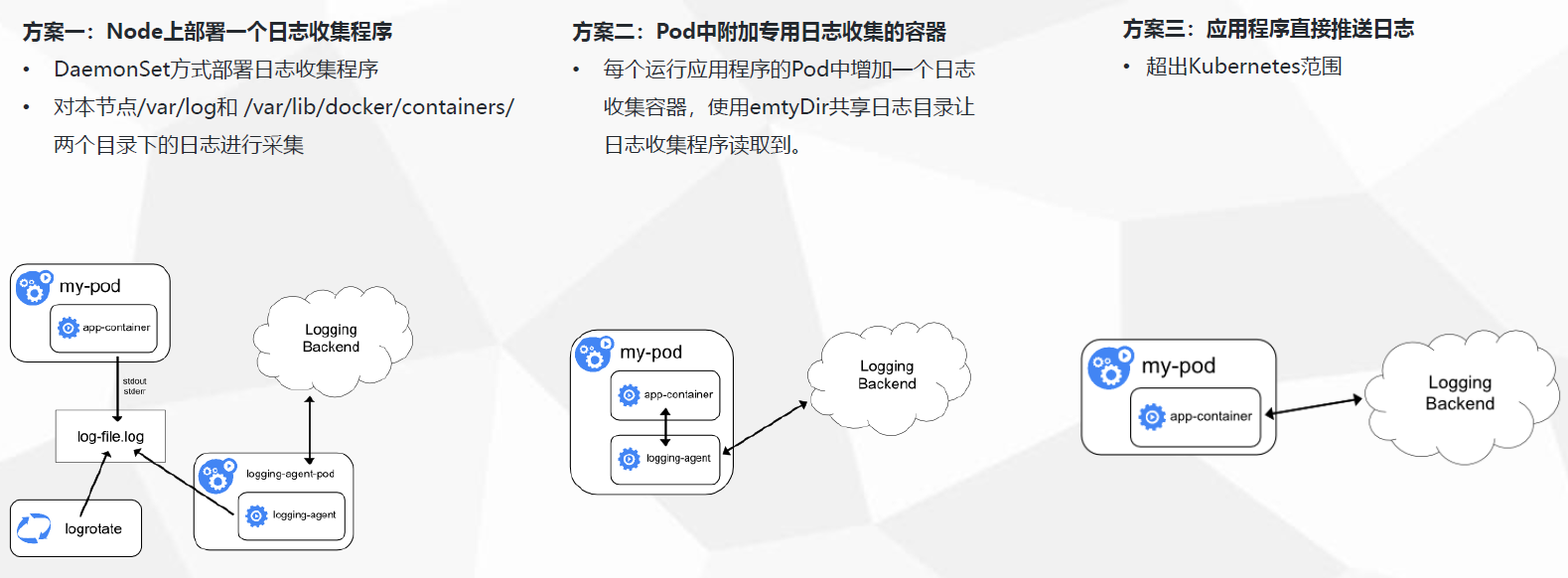

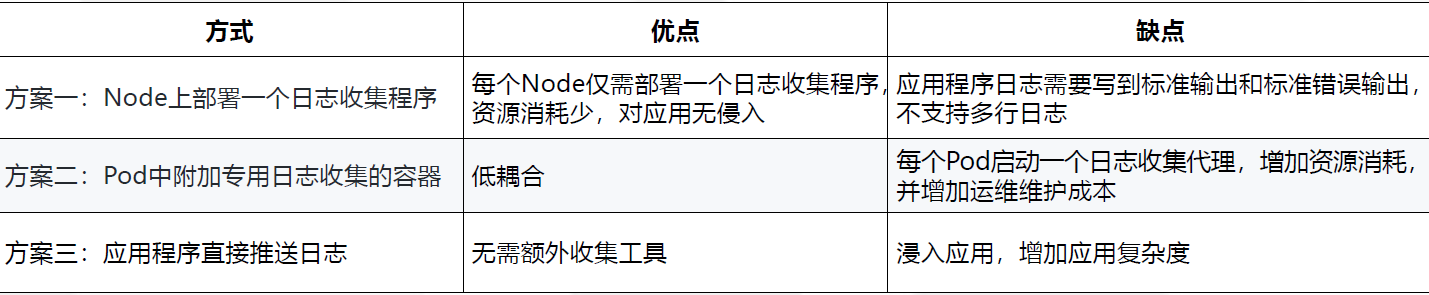

2. Logging approaches

3. How to collect logs from containers

    [root@node01 ~]# ls /var/log/containers/    # every container has one JSON-format log file
    k8s-logs-rk7zz_kube-system_filebeat-055274493f24e173937e8b40a0cf4ae25cc33269eb09ee6c29e373c937c71bcb.log
    nfs-client-provisioner-86684d5b5f-8k7x6_default_nfs-client-provisioner-f9f8e3d85a43369bf92d9d45a8057a05a46fd356804e5eab052d5b4f063f9e81.log
    php-demo-6c7d9c9495-ssfx5_test_filebeat-fe595f3fd88661d4271d27d911d8a2ca7f26fc91bdfb115a8f29371995a32452.log
    php-demo-6c7d9c9495-ssfx5_test_nginx-0218dd3ef7e6eb99f7909b6c58ceb7197efd078990f7aa24e925b49287b83841.log
    php-demo-6c7d9c9495-vcw65_test_filebeat-d7197a5f0b6ad550056f547b9e40794456a9d1b8db9633e10c3b0374179b0d2d.log
    php-demo-6c7d9c9495-vcw65_test_nginx-5732202ecb7f9fdbee201d466312fee525627a3b8eb71077b1fe74098ea50fca.log
    tomcat-java-demo-75c96bcd59-8f75n_test_filebeat-b783c5f1d80e5ad3207887daa1d7f936a2eebb7d83a42d7597e937485e818dcf.log
    tomcat-java-demo-75c96bcd59-8f75n_test_tomcat-ac868c4aad866a7cef022ec94d6e5efab690c4a0c64b0ca315601a11c67d2e6a.log
    tomcat-java-demo-75c96bcd59-lbm4s_test_filebeat-86b22ee9acd63aa578c09e9a53642c6a8de2092acf3185d1b8edfe70c9015ec8.log
    tomcat-java-demo-75c96bcd59-lbm4s_test_tomcat-606543fa6018be36d7e212fa6ff8b62e36d7591cce650434cbbcbe7acf10283c.log
    [root@node01 ~]#

These files are in JSON format, which does not support multi-line entries: each line the container prints becomes its own record.
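
The file names under /var/log/containers/ follow the pattern `<pod>_<namespace>_<container>-<container id>.log`. As a minimal sketch (the function name `parse_container_log_name` is made up for this example and is not part of any tool), a name can be split back into its parts with plain shell parameter expansion:

```shell
#!/bin/sh
# Hypothetical helper: split a /var/log/containers file name into
# pod, namespace and container. Assumes the usual
# <pod>_<namespace>_<container>-<container id>.log naming.
parse_container_log_name() {
    name=$(basename "$1" .log)      # drop directory and .log suffix
    pod=${name%%_*}                 # text before the first underscore
    rest=${name#*_}
    namespace=${rest%%_*}           # text between the two underscores
    rest=${rest#*_}
    container=${rest%-*}            # drop the trailing -<container id>
    printf 'pod=%s namespace=%s container=%s\n' "$pod" "$namespace" "$container"
}

parse_container_log_name \
    php-demo-6c7d9c9495-ssfx5_test_nginx-0218dd3ef7e6eb99f7909b6c58ceb7197efd078990f7aa24e925b49287b83841.log
# prints: pod=php-demo-6c7d9c9495-ssfx5 namespace=test container=nginx
```

This works because pod and namespace names cannot contain underscores and the container id contains no hyphen, so the separators are unambiguous.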

4. Next, we use approach 2 to collect the node logs and the pod logs

Step 1: install ELK. Here we use the host nginx01 (10.192.27.111).

    [root@elk ~]# yum install java-1.8.0-openjdk -y
    [root@elk yum.repos.d]# vim elasticsearch.repo
    [root@elk yum.repos.d]# cat elasticsearch.repo
    [logstash-6.x]
    name=Elasticsearch repository for 6.x packages
    baseurl=https://artifacts.elastic.co/packages/6.x/yum
    gpgcheck=1
    gpgkey=http://artifacts.elastic.co/GPG-KEY-elasticsearch
    enabled=1
    autorefresh=1
    type=rpm-md
    [root@elk yum.repos.d]#
    [root@elk yum.repos.d]# yum repolist
    Loaded plugins: fastestmirror
    Loading mirror speeds from cached hostfile
     * base: mirrors.aliyun.com
     * extras: mirrors.aliyun.com
     * updates: mirrors.aliyun.com
    logstash-6.x                                             | 1.3 kB  00:00:00
    logstash-6.x/primary                                     | 202 kB  00:00:16
    logstash-6.x                                                        541/541
    repo id                    repo name                                    status
    base/7/x86_64              CentOS-7 - Base                              10,097
    docker-ce-stable/x86_64    Docker CE Stable - x86_64                        56
    extras/7/x86_64            CentOS-7 - Extras                               304
    logstash-6.x               Elasticsearch repository for 6.x packages       541
    updates/7/x86_64           CentOS-7 - Updates                              332
    repolist: 11,330
    [root@elk yum.repos.d]#
    [root@elk yum.repos.d]# yum -y install elasticsearch logstash kibana    # install the three packages

Configure Elasticsearch and start the service:

    [root@elk yum.repos.d]# rpm -qc elasticsearch    # list all Elasticsearch configuration files
    /etc/elasticsearch/elasticsearch.yml             # main configuration file
    /etc/elasticsearch/jvm.options
    /etc/elasticsearch/log4j2.properties
    /etc/elasticsearch/role_mapping.yml
    /etc/elasticsearch/roles.yml
    /etc/elasticsearch/users
    /etc/elasticsearch/users_roles
    /etc/init.d/elasticsearch
    /etc/sysconfig/elasticsearch
    /usr/lib/sysctl.d/elasticsearch.conf
    /usr/lib/systemd/system/elasticsearch.service
    [root@elk yum.repos.d]# vim /etc/elasticsearch/elasticsearch.yml    # left unchanged
    [root@elk yum.repos.d]# systemctl daemon-reload
    [root@elk yum.repos.d]# systemctl enable elasticsearch
    Created symlink from /etc/systemd/system/multi-user.target.wants/elasticsearch.service to /usr/lib/systemd/system/elasticsearch.service.
    [root@elk yum.repos.d]# systemctl start elasticsearch.service
    [root@elk yum.repos.d]#
    [root@elk yum.repos.d]# systemctl status elasticsearch
    ● elasticsearch.service - Elasticsearch
       Loaded: loaded (/usr/lib/systemd/system/elasticsearch.service; enabled; vendor preset: disabled)
       Active: active (running) since Tue 2019-12-03 13:58:12 CST; 2s ago
         Docs: http://www.elastic.co
     Main PID: 177424 (java)
        Tasks: 64
       Memory: 1.1G
       CGroup: /system.slice/elasticsearch.service
               ├─177424 /bin/java -Xms1g -Xmx1g -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Des.networkaddress.cache.ttl=60 -Des.network...
               └─177650 /usr/share/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller
    Dec 03 13:58:12 elk systemd[1]: Started Elasticsearch.
    [root@elk yum.repos.d]#
    [root@elk yum.repos.d]# ps -ef | grep java    # check the Elasticsearch process
    elastic+   5323      1 18 16:27 ?        00:00:11 /bin/java -Xms1g -Xmx1g -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Djava.io.tmpdir=/tmp/elasticsearch-7380998951032776048 -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/var/lib/elasticsearch -XX:ErrorFile=/var/log/elasticsearch/hs_err_pid%p.log -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintTenuringDistribution -XX:+PrintGCApplicationStoppedTime -Xloggc:/var/log/elasticsearch/gc.log -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=32 -XX:GCLogFileSize=64m -Des.path.home=/usr/share/elasticsearch -Des.path.conf=/etc/elasticsearch -Des.distribution.flavor=default -Des.distribution.type=rpm -cp /usr/share/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch -p /var/run/elasticsearch/elasticsearch.pid --quiet
    root       5775  17109  0 16:28 pts/0    00:00:00 grep --color=auto java
    [root@elk yum.repos.d]#

Configure Kibana and start the service. Edit these settings in /etc/kibana/kibana.yml:

    [root@elk yum.repos.d]# vim /etc/kibana/kibana.yml
    server.port: 5601                                  # listening port
    server.host: "0.0.0.0"                             # accept connections from any address
    elasticsearch.hosts: ["http://localhost:9200"]
    [root@elk yum.repos.d]# grep "^[a-z]" /etc/kibana/kibana.yml
    server.port: 5601
    server.host: "0.0.0.0"
    elasticsearch.hosts: ["http://localhost:9200"]
    [root@elk yum.repos.d]# systemctl start kibana
    [root@elk yum.repos.d]# systemctl status kibana
    ● kibana.service - Kibana
       Loaded: loaded (/etc/systemd/system/kibana.service; disabled; vendor preset: disabled)
       Active: active (running) since Tue 2019-12-03 14:03:20 CST; 11s ago
     Main PID: 180943 (node)
        Tasks: 11
       Memory: 233.8M
       CGroup: /system.slice/kibana.service
               └─180943 /usr/share/kibana/bin/../node/bin/node --no-warnings /usr/share/kibana/bin/../src/cli -c /etc/kibana/kibana.yml
    Dec 03 14:03:20 elk systemd[1]: Started Kibana.
    [root@elk yum.repos.d]# systemctl enable kibana
    Created symlink from /etc/systemd/system/multi-user.target.wants/kibana.service to /etc/systemd/system/kibana.service.
    [root@elk yum.repos.d]#
    [root@elk yum.repos.d]# ps -ef | grep kibana
    kibana     2286      1 19 16:22 ?        00:01:22 /usr/share/kibana/bin/../node/bin/node --no-warnings --max-http-header-size=65536 /usr/share/kibana/bin/../src/cli -c /etc/kibana/kibana.yml
    root       6213  17109  4 16:29 pts/0    00:00:00 grep --color=auto kibana
    [root@elk yum.repos.d]#
    [root@elk yum.repos.d]# netstat -anptu | grep 5601
    tcp        0      0 0.0.0.0:5601            0.0.0.0:*               LISTEN      2286/node
    [root@elk yum.repos.d]#

Then open http://10.192.27.111:5601/ in a browser.

Configure Logstash and start the service:

    [root@elk conf.d]# cat logstash-sample.conf
    # Sample Logstash configuration for creating a simple
    # Beats -> Logstash -> Elasticsearch pipeline.
    input {
      beats {
        port => 5044
      }
    }
    filter {
    }
    output {
      elasticsearch {
        hosts => ["http://127.0.0.1:9200"]
        index => "k8s-log-%{+YYYY.MM.dd}"    # index name
      }
      stdout { codec => rubydebug }          # print events to the console for testing; optional
    }
    [root@elk conf.d]#
    [root@elk conf.d]# systemctl start logstash
    [root@elk conf.d]# systemctl enable logstash
    Created symlink from /etc/systemd/system/multi-user.target.wants/logstash.service to /etc/systemd/system/logstash.service.
    [root@elk conf.d]# systemctl status logstash
    ● logstash.service - logstash
       Loaded: loaded (/etc/systemd/system/logstash.service; enabled; vendor preset: disabled)
       Active: active (running) since Tue 2019-12-03 19:54:07 CST; 14h ago
     Main PID: 17739 (java)
       CGroup: /system.slice/logstash.service
               └─17739 /bin/java -Xms1g -Xmx1g -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Djava.awt.headless=true -Dfi...
    Dec 03 19:54:30 elk logstash[17739]: [2019-12-03T19:54:30,007][INFO ][logstash.outputs.elasticsearch] ES Output version determined {:es_version=>6}
    Dec 03 19:54:30 elk logstash[17739]: [2019-12-03T19:54:30,008][WARN ][logstash.outputs.elasticsearch] Detected a 6.x and above cluster: the `type` event field won't be us..._version=>6}
    Dec 03 19:54:30 elk logstash[17739]: [2019-12-03T19:54:30,013][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch"....0.1:9200"]}
    Dec 03 19:54:30 elk logstash[17739]: [2019-12-03T19:54:30,017][INFO ][logstash.outputs.elasticsearch] Using mapping template from {:path=>nil}
    Dec 03 19:54:30 elk logstash[17739]: [2019-12-03T19:54:30,020][INFO ][logstash.outputs.elasticsearch] Attempting to install template {:manage_template=>{"template"=>"logs...atch"=>"mess
    Dec 03 19:54:30 elk logstash[17739]: [2019-12-03T19:54:30,407][INFO ][logstash.inputs.beats ] Beats inputs: Starting input listener {:address=>"0.0.0.0:5044"}
    Dec 03 19:54:30 elk logstash[17739]: [2019-12-03T19:54:30,439][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#<Thread:0...16777 run>"}
    Dec 03 19:54:30 elk logstash[17739]: [2019-12-03T19:54:30,523][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}
    Dec 03 19:54:30 elk logstash[17739]: [2019-12-03T19:54:30,540][INFO ][org.logstash.beats.Server] Starting server on port: 5044
    Dec 03 19:54:30 elk logstash[17739]: [2019-12-03T19:54:30,773][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
    Hint: Some lines were ellipsized, use -l to show in full.
    [root@elk conf.d]#
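
The `index => "k8s-log-%{+YYYY.MM.dd}"` setting in the Logstash output creates one index per day, and Logstash formats the date from the event's `@timestamp`, which is in UTC. The sketch below only illustrates that naming rule; `daily_index_name` is a hypothetical helper, and it assumes the timestamp is passed as an ISO 8601 UTC string.

```shell
#!/bin/sh
# Hypothetical helper: build the daily index name that a pattern such as
# "k8s-log-%{+YYYY.MM.dd}" yields for a given UTC timestamp.
# Assumes $2 looks like 2019-12-03T11:54:30Z (ISO 8601, UTC).
daily_index_name() {
    prefix=$1
    ts=$2
    # keep the YYYY-MM-DD part and turn the dashes into dots
    day=$(printf '%s' "$ts" | cut -c1-10 | tr '-' '.')
    printf '%s-%s\n' "$prefix" "$day"
}

# 19:54:30 CST on Dec 3 is 11:54:30 UTC on Dec 3
daily_index_name k8s-log 2019-12-03T11:54:30Z
# prints: k8s-log-2019.12.03
```

Because the date is taken in UTC, an event logged at 07:00 CST on Dec 4 (23:00 UTC on Dec 3) still lands in the `k8s-log-2019.12.03` index.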

Step 2: deploy a Filebeat DaemonSet that collects the node logs

    [root@master01 log]# cat k8s-logs.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: k8s-logs-filebeat-config
      namespace: kube-system
    data:
      filebeat.yml: |-
        # Filebeat configuration file
        filebeat.prospectors:
          - type: log
            paths:
              - /messages
            fields:                      # marker fields; logstash-to-es.conf routes on them
              app: k8s
              type: module
            fields_under_root: true      # promote the fields above to top level so they are visible in Kibana
        output.logstash:
          hosts: ['10.192.27.111:5044']  # where Filebeat ships the logs
    ---
    apiVersion: apps/v1
    kind: DaemonSet                      # runs one collection agent on every node
    metadata:
      name: k8s-logs
      namespace: kube-system
    spec:
      selector:
        matchLabels:
          project: k8s
          app: filebeat
      template:
        metadata:
          labels:
            project: k8s
            app: filebeat
        spec:
          containers:
          - name: filebeat
            image: 10.192.27.111/library/filebeat:6.4.2
            args: ["-c", "/etc/filebeat.yml", "-e"]
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
              limits:
                cpu: 500m
                memory: 500Mi
            securityContext:
              runAsUser: 0
            volumeMounts:
            - name: filebeat-config
              mountPath: /etc/filebeat.yml   # mount the configuration file
              subPath: filebeat.yml
            - name: k8s-logs
              mountPath: /messages           # mount the node's log file
          volumes:
          - name: k8s-logs
            hostPath:
              path: /var/log/messages
              type: File
          - name: filebeat-config
            configMap:
              name: k8s-logs-filebeat-config
    [root@master01 log]#
    [root@master01 log]# kubectl apply -f k8s-logs.yaml
    configmap/k8s-logs-filebeat-config configured
    daemonset.apps/k8s-logs unchanged
    [root@master01 log]# vim k8s-logs.yaml
    [root@master01 log]# kubectl get all -n kube-system
    NAME                                        READY   STATUS    RESTARTS   AGE
    pod/coredns-5c5d76fdbb-lnhfq                1/1     Running   0          6d19h
    pod/k8s-logs-c5kng                          1/1     Running   0          18h
    pod/k8s-logs-rk7zz                          1/1     Running   0          18h
    pod/kubernetes-dashboard-587699746d-4njgl   1/1     Running   0          7d
    NAME                           TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)         AGE
    service/kube-dns               ClusterIP   10.0.0.2     <none>        53/UDP,53/TCP   6d19h
    service/kubernetes-dashboard   NodePort    10.0.0.153   <none>        443:30001/TCP   7d
    NAME                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
    daemonset.apps/k8s-logs   2         2         2       2            2           <none>          18h
    NAME                                   READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/coredns                1/1     1            1           6d19h
    deployment.apps/kubernetes-dashboard   1/1     1            1           7d
    NAME                                              DESIRED   CURRENT   READY   AGE
    replicaset.apps/coredns-5c5d76fdbb                1         1         1       6d19h
    replicaset.apps/kubernetes-dashboard-587699746d   1         1         1       7d
    [root@master01 log]#
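
Since the DaemonSet is supposed to run one Filebeat agent per node, a quick sanity check is to compare the DESIRED and READY columns of `kubectl get ds`. The following is only a sketch: `daemonset_ready` is a made-up helper that reads the table from standard input and assumes the default column order (NAME, DESIRED, CURRENT, READY, ...).

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get ds` output on stdin and succeed
# only if READY equals DESIRED on every data row.
# Assumes the default columns: NAME DESIRED CURRENT READY ...
daemonset_ready() {
    awk 'BEGIN { bad = 0 }
         NR > 1 && $2 != $4 { bad = 1 }   # skip the header, compare DESIRED with READY
         END { exit bad }'
}

# Intended use on the master node (not run here):
#   kubectl get ds k8s-logs -n kube-system | daemonset_ready && echo "all agents ready"
```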

Step 3: deploy Filebeat sidecars that collect the pod logs

Modify the Logstash configuration and restart the service

    [root@elk conf.d]# ls
    logstash-sample.conf.bak  logstash-to-es.conf
    [root@elk conf.d]# cat logstash-to-es.conf
    input {
      beats {
        port => 5044
      }
    }
    filter {
    }
    output {
      if [app] == "www" {
        if [type] == "nginx-access" {
          elasticsearch {
            hosts => ["http://127.0.0.1:9200"]
            index => "nginx-access-%{+YYYY.MM.dd}"
          }
        }
        else if [type] == "nginx-error" {
          elasticsearch {
            hosts => ["http://127.0.0.1:9200"]
            index => "nginx-error-%{+YYYY.MM.dd}"
          }
        }
        else if [type] == "tomcat-catalina" {
          elasticsearch {
            hosts => ["http://127.0.0.1:9200"]
            index => "tomcat-catalina-%{+YYYY.MM.dd}"
          }
        }
      }
      else if [app] == "k8s" {
        if [type] == "module" {
          elasticsearch {
            hosts => ["http://127.0.0.1:9200"]
            index => "k8s-log-%{+YYYY.MM.dd}"
          }
        }
      }
    }
    [root@elk conf.d]#
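
The output section of logstash-to-es.conf routes each event by the `app` and `type` fields that Filebeat attaches. To make the mapping easier to check by hand, here is a small shell sketch of the same decision table; `route_index` is a hypothetical function written for this note, not something Logstash provides, and the date is passed in already formatted as YYYY.MM.dd.

```shell
#!/bin/sh
# Hypothetical helper mirroring the conditionals in logstash-to-es.conf:
# given the app and type fields plus a YYYY.MM.dd date, print the target
# index, or return 1 when no output branch matches (such events are not
# written to Elasticsearch at all).
route_index() {
    app=$1
    type=$2
    day=$3
    case "$app/$type" in
        www/nginx-access|www/nginx-error|www/tomcat-catalina)
            printf '%s-%s\n' "$type" "$day" ;;
        k8s/module)
            printf 'k8s-log-%s\n' "$day" ;;
        *)
            return 1 ;;
    esac
}

route_index www nginx-error 2019.12.03   # prints: nginx-error-2019.12.03
route_index k8s module 2019.12.03        # prints: k8s-log-2019.12.03
```

One consequence worth keeping in mind: a Filebeat input whose `fields` do not match any branch produces events that reach Logstash but never show up in an index.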

1. Collect the nginx logs

    [root@master01 log]# cat ../project/java-demo/namespace.yaml
    apiVersion: v1
    kind: Namespace
    metadata:
      name: test
    [root@master01 log]#

    [root@master01 log]# cat filebeat-nginx-configmap.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: filebeat-nginx-config
      namespace: test
    data:
      filebeat.yml: |-
        filebeat.prospectors:
          - type: log
            paths:
              - /usr/local/nginx/logs/access.log
            # tags: ["access"]
            fields:                      # marker fields; logstash-to-es.conf routes on them
              app: www
              type: nginx-access
            fields_under_root: true      # promote the fields above to top level so they are visible in Kibana
          - type: log
            paths:
              - /usr/local/nginx/logs/error.log
            # tags: ["error"]
            fields:
              app: www
              type: nginx-error
            fields_under_root: true
        output.logstash:
          hosts: ['10.192.27.111:5044']
    [root@master01 log]#

    [root@master01 log]# cat nginx-deployment.yaml
    apiVersion: apps/v1beta1    # removed in Kubernetes 1.16; use apps/v1 on newer clusters
    kind: Deployment
    metadata:
      name: php-demo
      namespace: test
    spec:
      replicas: 3
      selector:
        matchLabels:
          project: www
          app: php-demo
      template:
        metadata:
          labels:
            project: www
            app: php-demo
        spec:
          # imagePullSecrets:
          # - name: registry-pull-secret
          containers:
          - name: nginx
            image: 10.192.27.111/library/php-demo:latest
            imagePullPolicy: Always
            ports:
            - containerPort: 80
              name: web
              protocol: TCP
            resources:
              requests:
                cpu: 0.5
                memory: 256Mi
              limits:
                cpu: 1
                memory: 1Gi
            livenessProbe:
              httpGet:
                path: /status.php
                port: 80
              initialDelaySeconds: 6
              timeoutSeconds: 20
            volumeMounts:
            - name: nginx-logs               # shared with the filebeat sidecar below
              mountPath: /usr/local/nginx/logs
          - name: filebeat
            image: 10.192.27.111/library/filebeat:6.4.2
            args: ["-c", "/etc/filebeat.yml", "-e"]
            resources:
              limits:
                memory: 500Mi
              requests:
                cpu: 100m
                memory: 100Mi
            securityContext:
              runAsUser: 0
            volumeMounts:
            - name: filebeat-config
              mountPath: /etc/filebeat.yml
              subPath: filebeat.yml
            - name: nginx-logs
              mountPath: /usr/local/nginx/logs
          volumes:
          - name: nginx-logs
            emptyDir: {}
          - name: filebeat-config
            configMap:
              name: filebeat-nginx-config
    [root@master01 log]#

2. Collect the Tomcat logs

    [root@master01 log]# cat filebeat-tomcat-configmap.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: filebeat-tomcat-config
      namespace: test
    data:
      filebeat.yml: |-
        filebeat.prospectors:
        - type: log
          paths:
            - /usr/local/tomcat/logs/catalina.*
          # tags: ["tomcat"]
          fields:
            app: www
            type: tomcat-catalina
          fields_under_root: true
          multiline:
            pattern: '^\['     # everything from a line starting with "[" up to the next such line is one event
            negate: true
            match: after
        output.logstash:
          hosts: ['10.192.27.111:5044']
    [root@master01 log]#
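
With `negate: true` and `match: after`, every line that does not match the multiline pattern (a leading `[`) is appended to the most recent line that does, so a stack trace stays attached to its log entry. The awk sketch below imitates that grouping so the effect can be tried on a file before deploying; `join_multiline` is a made-up name, the sample lines are invented, and the ` | ` separator is for display only (Filebeat itself keeps the line breaks inside the event).

```shell
#!/bin/sh
# Hypothetical helper imitating Filebeat multiline grouping with
# pattern '^\[', negate: true, match: after. Reads lines on stdin and
# prints one joined line per event, using " | " as a visible separator.
join_multiline() {
    awk '
        /^\[/ {                         # a line starting with "[" opens a new event
            if (have) print buf
            buf = $0; have = 1
            next
        }
        {                               # any other line continues the current event
            if (have) buf = buf " | " $0
            else { buf = $0; have = 1 }
        }
        END { if (have) print buf }     # flush the last event
    '
}

# Invented sample input: one entry with a two-line trace, then a plain entry.
printf '%s\n' \
    '[2019-12-03 10:00:00] ERROR request failed' \
    'java.lang.IllegalStateException: boom' \
    'at com.example.Demo.run(Demo.java:42)' \
    '[2019-12-03 10:00:01] INFO recovered' | join_multiline
```

The four input lines come out as two events: the error entry with its two trace lines attached, and the INFO entry on its own.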

    [root@master01 log]# cat tomcat-deployment.yaml
    apiVersion: apps/v1beta1    # removed in Kubernetes 1.16; use apps/v1 on newer clusters
    kind: Deployment
    metadata:
      name: tomcat-java-demo
      namespace: test
    spec:
      replicas: 3
      selector:
        matchLabels:
          project: www
          app: java-demo
      template:
        metadata:
          labels:
            project: www
            app: java-demo
        spec:
          # imagePullSecrets:
          # - name: registry-pull-secret
          containers:
          - name: tomcat
            image: 10.192.27.111/library/tomcat-java-demo:latest
            imagePullPolicy: Always
            ports:
            - containerPort: 8080
              name: web
              protocol: TCP
            resources:
              requests:
                cpu: 0.5
                memory: 1Gi
              limits:
                cpu: 1
                memory: 2Gi
            livenessProbe:
              httpGet:
                path: /
                port: 8080
              initialDelaySeconds: 60
              timeoutSeconds: 20
            readinessProbe:
              httpGet:
                path: /
                port: 8080
              initialDelaySeconds: 60
              timeoutSeconds: 20
            volumeMounts:
            - name: tomcat-logs              # shared with the filebeat sidecar below
              mountPath: /usr/local/tomcat/logs
          - name: filebeat
            image: 10.192.27.111/library/filebeat:6.4.2
            args: ["-c", "/etc/filebeat.yml", "-e"]
            resources:
              limits:
                memory: 500Mi
              requests:
                cpu: 100m
                memory: 100Mi
            securityContext:
              runAsUser: 0
            volumeMounts:
            - name: filebeat-config
              mountPath: /etc/filebeat.yml
              subPath: filebeat.yml
            - name: tomcat-logs
              mountPath: /usr/local/tomcat/logs
          volumes:
          - name: tomcat-logs
            emptyDir: {}
          - name: filebeat-config
            configMap:
              name: filebeat-tomcat-config
    [root@master01 log]#

    [root@master01 log]# kubectl get all -n test
    NAME                                    READY   STATUS    RESTARTS   AGE
    pod/php-demo-6c7d9c9495-k29bn           2/2     Running   0          15h
    pod/php-demo-6c7d9c9495-ssfx5           2/2     Running   0          15h
    pod/php-demo-6c7d9c9495-vcw65           2/2     Running   0          15h
    pod/tomcat-java-demo-75c96bcd59-8f75n   2/2     Running   0          15h
    pod/tomcat-java-demo-75c96bcd59-lbm4s   2/2     Running   0          15h
    pod/tomcat-java-demo-75c96bcd59-qxhrf   2/2     Running   0          15h
    NAME                               READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/php-demo           3/3     3            3           15h
    deployment.apps/tomcat-java-demo   3/3     3            3           15h
    NAME                                          DESIRED   CURRENT   READY   AGE
    replicaset.apps/php-demo-6c7d9c9495           3         3         3       15h
    replicaset.apps/tomcat-java-demo-75c96bcd59   3         3         3       15h
    [root@master01 log]#

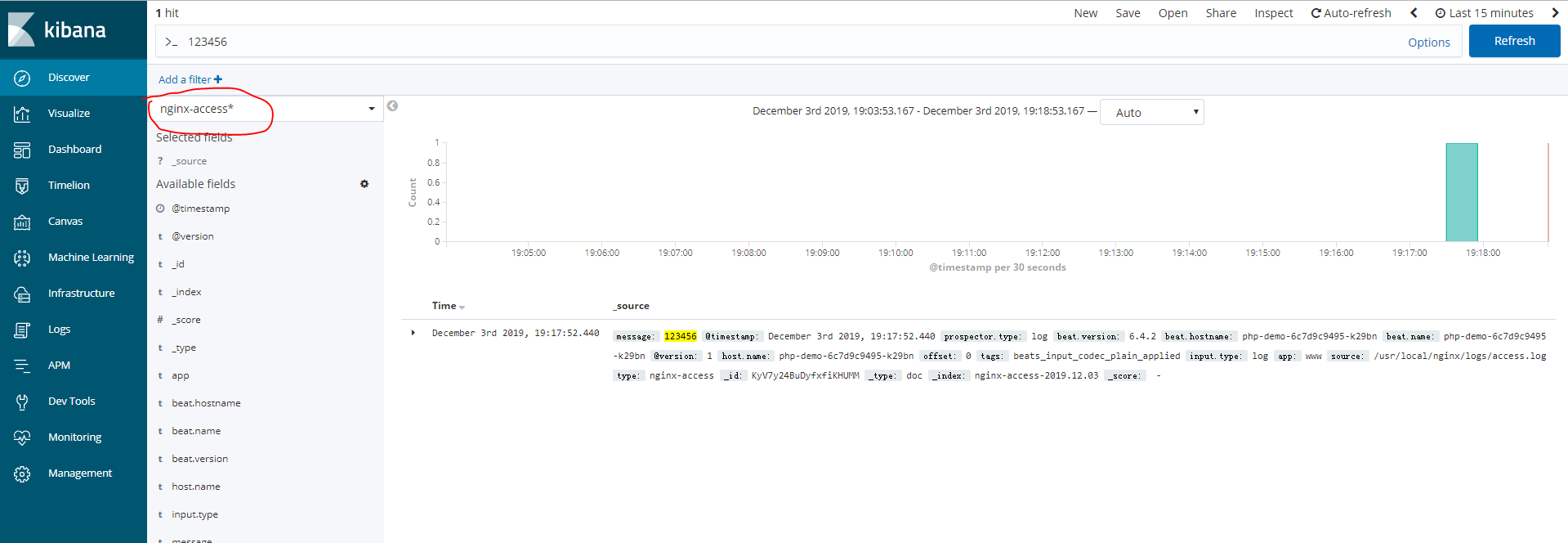

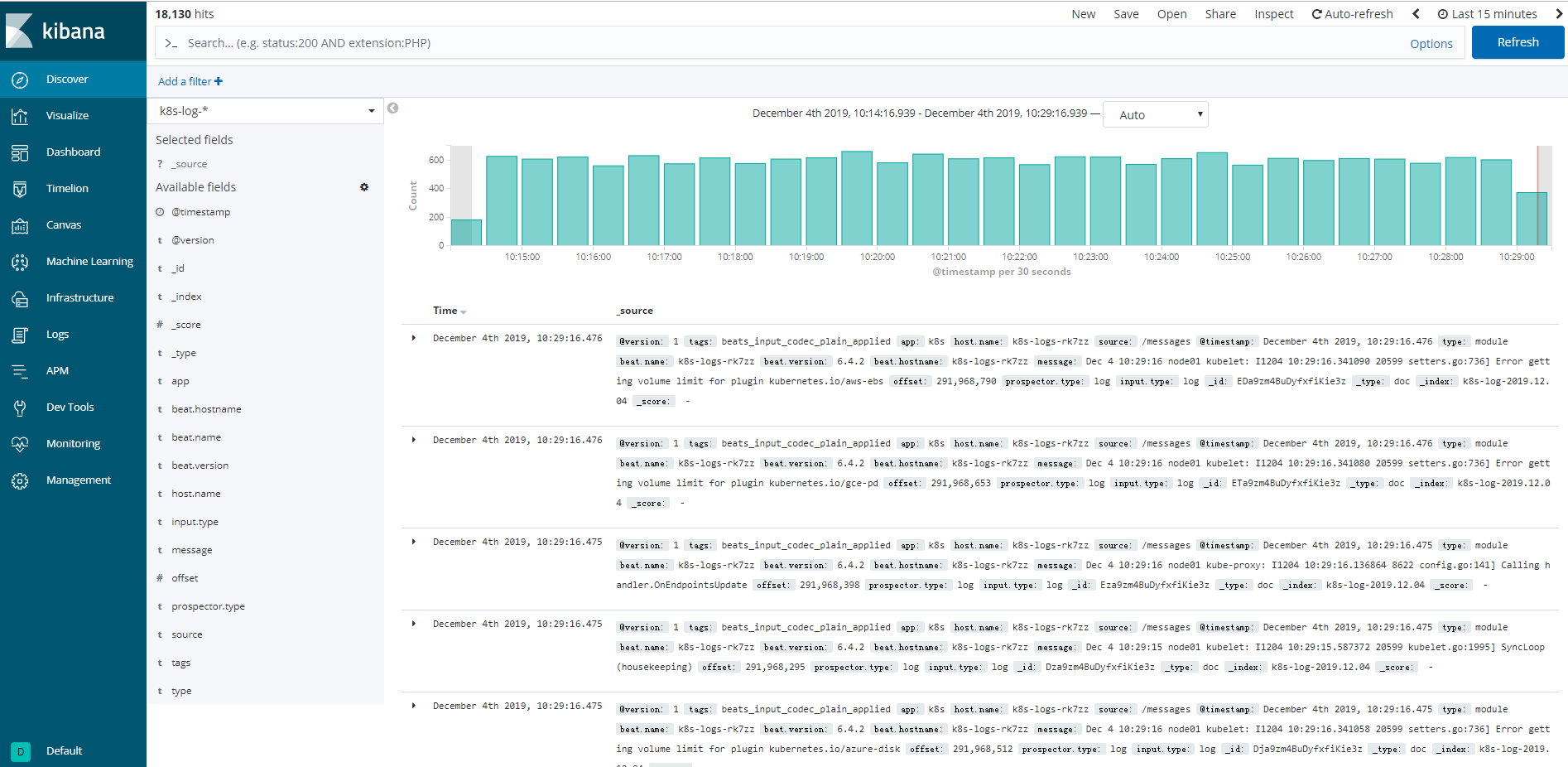

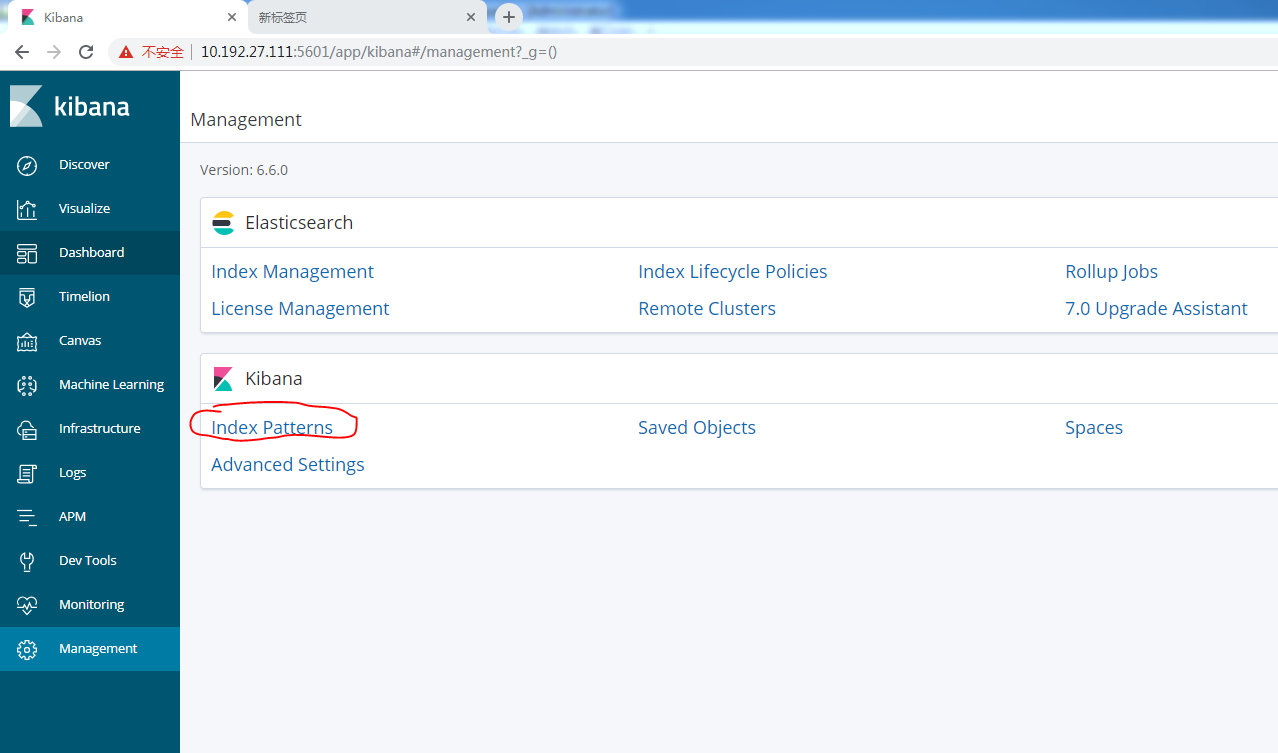

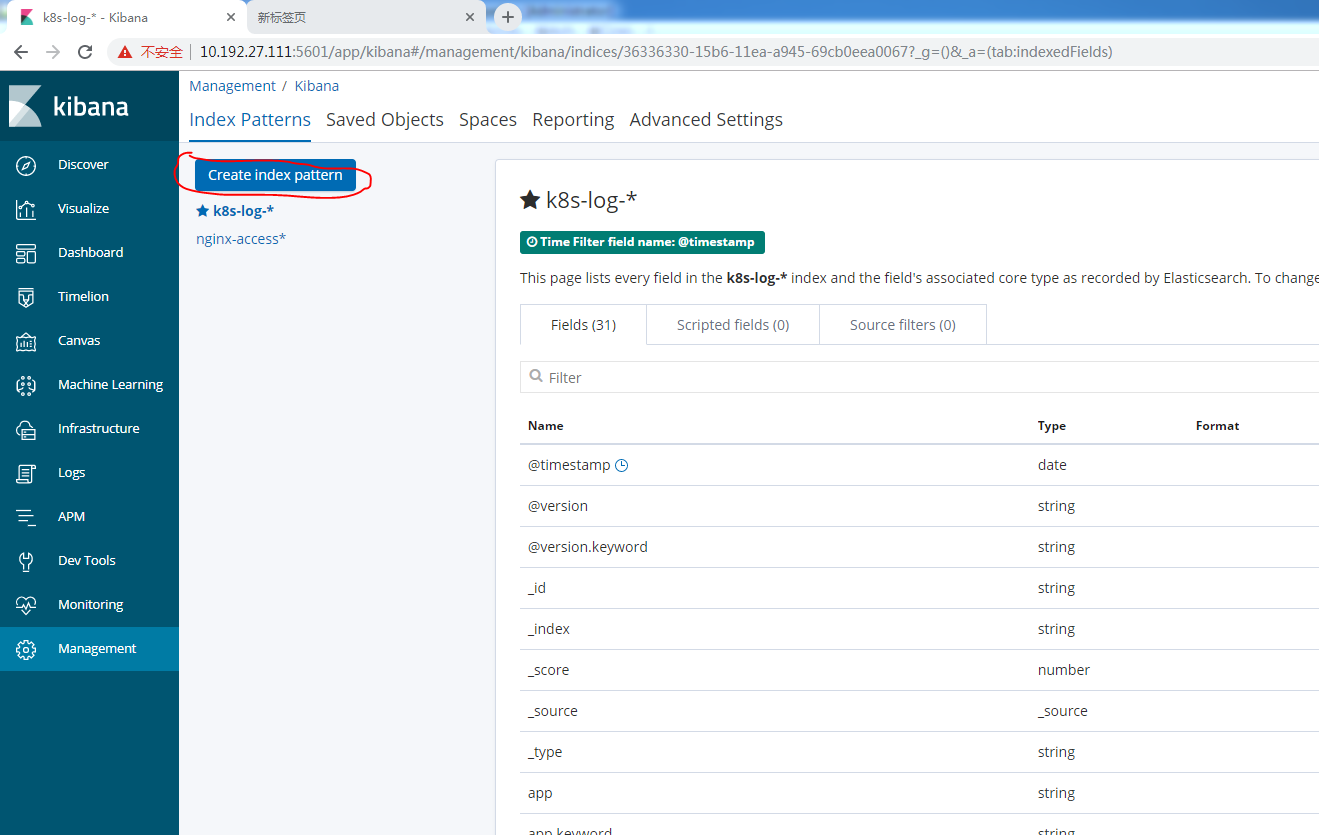

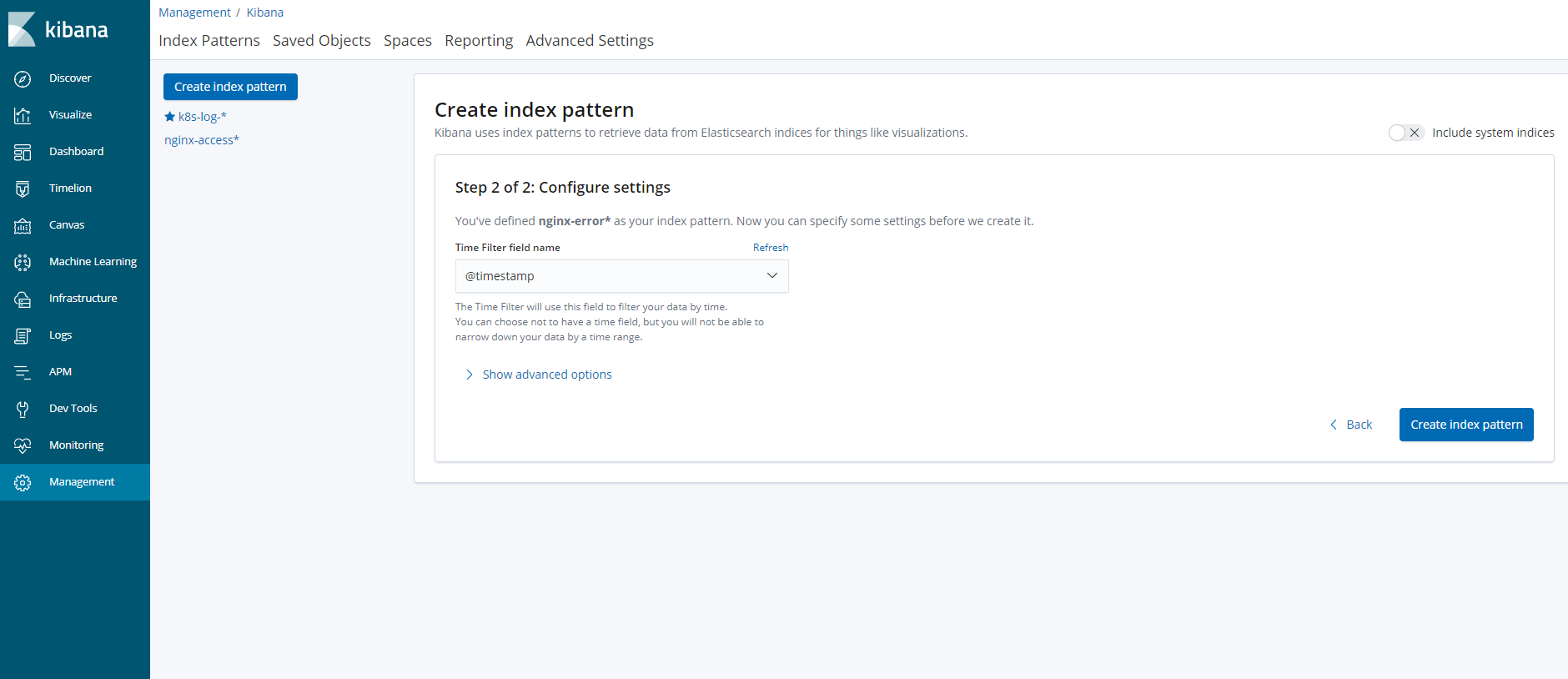

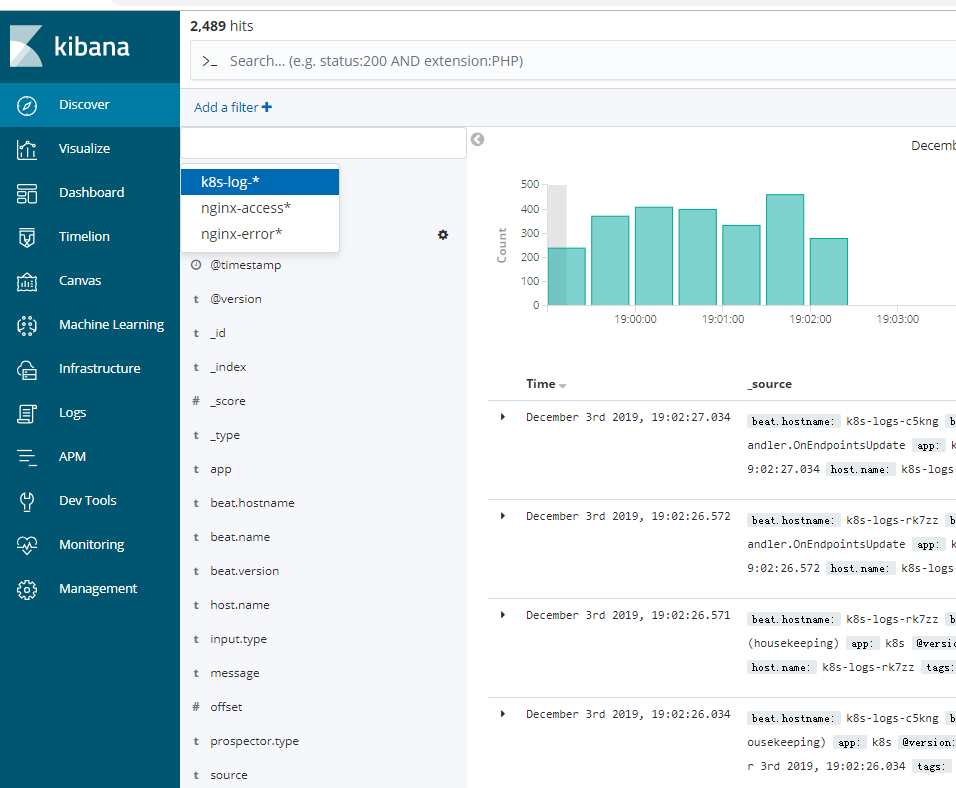

Open Kibana at http://10.192.27.111:5601/

    [root@master01 log]# kubectl exec -it tomcat-java-demo-75c96bcd59-8f75n bash -n test
    Defaulting container name to tomcat.
    Use 'kubectl describe pod/tomcat-java-demo-75c96bcd59-8f75n -n test' to see all of the containers in this pod.
    [root@tomcat-java-demo-75c96bcd59-8f75n tomcat]# ls
    BUILDING.txt  CONTRIBUTING.md  LICENSE  NOTICE  README.md  RELEASE-NOTES  RUNNING.txt  bin  conf  lib  logs  temp  webapps  work
    [root@tomcat-java-demo-75c96bcd59-8f75n tomcat]# tailf /usr/local/tomcat/
    BUILDING.txt     LICENSE          README.md        RUNNING.txt      conf/            logs/            webapps/
    CONTRIBUTING.md  NOTICE           RELEASE-NOTES    bin/             lib/             temp/            work/
    [root@tomcat-java-demo-75c96bcd59-8f75n tomcat]# tailf /usr/local/tomcat/logs/
    catalina.2019-12-03.log  host-manager.2019-12-03.log  localhost.2019-12-03.log  localhost_access_log.2019-12-03.txt  manager.2019-12-03.log
    [root@tomcat-java-demo-75c96bcd59-8f75n tomcat]# echo '123456' >> /usr/local/tomcat/logs/catalina.2019-12-03.log
    [root@tomcat-java-demo-75c96bcd59-8f75n tomcat]# echo '123456' >> /usr/local/tomcat/logs/catalina.2019-12-03.log
    [root@tomcat-java-demo-75c96bcd59-8f75n tomcat]# exit