1. The first program: print hello world, using one block that contains 5 threads

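    #include <stdio.h>
    #include "cuda_runtime.h"

    // Kernel: every thread in the launch prints one line.
    __global__ void hello(void)
    {
        printf("hello world from GPU!\n");
    }

    int main()
    {
        printf("hello world from CPU!\n");
        // Launch 1 block of 5 threads, so the GPU message is printed 5 times.
        hello<<<1, 5>>>();
        // Reset the CUDA device and release the resources held by this program.
        cudaDeviceReset();
        return 0;
    }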

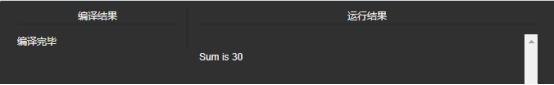
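All five threads run the same kernel, so the five GPU lines in the output are identical. A minimal sketch of telling the threads apart uses the built-in `threadIdx.x`. The names `hello_indexed` and `launch_hello` are hypothetical and not part of the original program.

```cuda
#include <stdio.h>
#include <cuda_runtime.h>

// Each thread prints its own index within the block.
__global__ void hello_indexed(void)
{
    printf("hello world from GPU thread %d!\n", threadIdx.x);
}

// Hypothetical helper: launches one block of `threads` threads and
// returns true if both the launch and the kernel execution succeeded.
bool launch_hello(int threads)
{
    hello_indexed<<<1, threads>>>();
    // cudaGetLastError reports problems with the launch itself.
    cudaError_t launch_err = cudaGetLastError();
    // cudaDeviceSynchronize waits for the kernel to finish.
    cudaError_t sync_err = cudaDeviceSynchronize();
    return launch_err == cudaSuccess && sync_err == cudaSuccess;
}
```

Calling `launch_hello(5)` from `main` reproduces the launch configuration used above, with one distinct index per line. The order in which the lines appear is not something to rely on.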

2. Passing arguments to a kernel

    #include <stdio.h>
    #include "cuda_runtime.h"
    #include "device_launch_parameters.h"

    // Kernel arguments are passed by value, just like ordinary function arguments.
    __global__ void add(int i, int j)
    {
        int count;
        count = i + j;
        printf("Sum is %d\n", count);
    }

    int main()
    {
        // 1 block, 1 thread; the arguments follow the launch configuration.
        add<<<1, 1>>>(10, 20);
        cudaDeviceReset();
        return 0;
    }

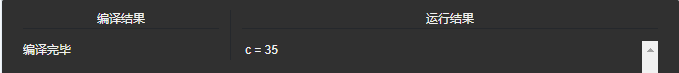

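3. Passing data in and out. Input data normally has to be copied from host memory to device (GPU) memory, and the result then has to be copied back from device memory to the host.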

    #include <stdio.h>
    #include <stdlib.h>
    #include "cuda_runtime.h"
    #include "device_launch_parameters.h"

    // Note: despite its name, this kernel computes the sum a + b.
    __global__ void decrease(int a, int b, int *c)
    {
        *c = a + b;
    }

    int main()
    {
        int *c = 0;
        int *dev_c = 0;
        // Allocate memory on the CPU (host)
        c = (int*)malloc(sizeof(int));
        // Allocate memory on the GPU (device)
        cudaMalloc((void**)&dev_c, sizeof(int));
        // Launch the kernel
        decrease<<<1, 1>>>(15, 20, dev_c);
        // Wait until all threads on the device have finished
        cudaDeviceSynchronize();
        // Copy the result from the device to the host
        cudaMemcpy(c, dev_c, sizeof(int), cudaMemcpyDeviceToHost);
        // Print the result
        printf("c = %d\n", *c);
        // Free the memory
        cudaFree(dev_c);
        free(c);
        return 0;
    }

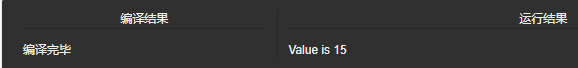
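The program above ignores the return values of `cudaMalloc` and `cudaMemcpy`, so a failure would only show up as a wrong value of `c`. A minimal sketch of the same allocate, launch, copy-back sequence with each step checked might look like this. `add_kernel` and `add_on_gpu` are hypothetical names introduced only for illustration.

```cuda
#include <stdio.h>
#include <cuda_runtime.h>

__global__ void add_kernel(int a, int b, int *c)
{
    *c = a + b;
}

// Hypothetical helper: computes a + b on the GPU and stores it in *result.
// Returns the first CUDA error encountered, or cudaSuccess.
cudaError_t add_on_gpu(int a, int b, int *result)
{
    int *dev_c = NULL;
    cudaError_t err = cudaMalloc((void**)&dev_c, sizeof(int));
    if (err != cudaSuccess)
        return err;

    add_kernel<<<1, 1>>>(a, b, dev_c);
    err = cudaGetLastError();              // errors from the launch itself
    if (err == cudaSuccess)
        err = cudaDeviceSynchronize();     // errors raised while the kernel ran
    if (err == cudaSuccess)
        err = cudaMemcpy(result, dev_c, sizeof(int), cudaMemcpyDeviceToHost);

    cudaFree(dev_c);                       // released on every path after allocation
    return err;
}
```

A caller can turn a failure into a readable message with `cudaGetErrorString(err)`.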

4. Passing all values as pointers

    #include <stdio.h>
    #include "cuda_runtime.h"
    #include "device_launch_parameters.h"

    // Note: despite its name, this kernel computes the difference *a - *b.
    __global__ void addCuda(int* a, int* b, int* c)
    {
        *c = *a - *b;
    }

    void addWithCuda(int *c, int *a, int *b)
    {
        int *dev_c = 0;
        int *dev_a = 0;
        int *dev_b = 0;

        // Allocate device memory
        cudaMalloc((void**)&dev_c, sizeof(int));
        cudaMalloc((void**)&dev_a, sizeof(int));
        cudaMalloc((void**)&dev_b, sizeof(int));

        // Copy the inputs from the host to the device
        cudaMemcpy(dev_a, a, sizeof(int), cudaMemcpyHostToDevice);
        cudaMemcpy(dev_b, b, sizeof(int), cudaMemcpyHostToDevice);

        // Launch the kernel
        addCuda<<<1, 1>>>(dev_a, dev_b, dev_c);
        cudaDeviceSynchronize();

        // Copy the result back to the host
        cudaMemcpy(c, dev_c, sizeof(int), cudaMemcpyDeviceToHost);

        cudaFree(dev_c);
        cudaFree(dev_a);
        cudaFree(dev_b);
    }

    int main()
    {
        int a, b, c;
        a = 30;
        b = 15;
        c = 10;
        // Pass the addresses of the variables
        addWithCuda(&c, &a, &b);
        // Reset the CUDA device and release the resources held by this program
        cudaDeviceReset();
        printf("Value is %d\n", c);

        return 0;
    }

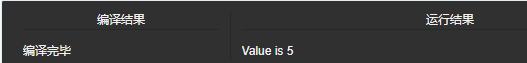

5. Passing all values as pointers, with the host variables allocated on the heap

    #include <stdio.h>
    #include <stdlib.h>
    #include "cuda_runtime.h"
    #include "device_launch_parameters.h"

    __global__ void deCuda(int* a, int* b, int* c)
    {
        *c = *a - *b;
    }

    int main()
    {
        int *a, *b, *c;
        a = (int*)malloc(sizeof(int));
        b = (int*)malloc(sizeof(int));
        c = (int*)malloc(sizeof(int));
        *a = 10;
        *b = 5;
        *c = 0;
        int *dev_c = 0;
        int *dev_a = 0;
        int *dev_b = 0;
        // Allocate device memory (video memory) and run the CUDA kernel
        cudaMalloc((void**)&dev_c, sizeof(int));
        cudaMalloc((void**)&dev_a, sizeof(int));
        cudaMalloc((void**)&dev_b, sizeof(int));

        cudaMemcpy(dev_a, a, sizeof(int), cudaMemcpyHostToDevice);
        cudaMemcpy(dev_b, b, sizeof(int), cudaMemcpyHostToDevice);

        deCuda<<<1, 1>>>(dev_a, dev_b, dev_c);
        cudaMemcpy(c, dev_c, sizeof(int), cudaMemcpyDeviceToHost);
        printf("Value is %d\n", *c);

        cudaFree(dev_c);
        cudaFree(dev_a);
        cudaFree(dev_b);
        free(a);
        free(b);
        free(c);
        // Reset the CUDA device and release the resources held by this program
        cudaDeviceReset();
        return 0;
    }

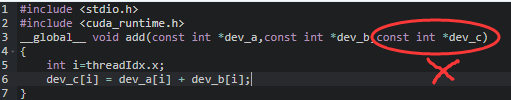

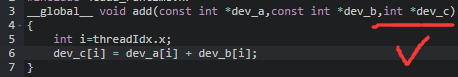

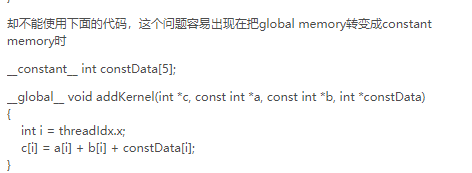
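Item 4 calls `cudaDeviceSynchronize()` before copying the result back, while item 5 does not. Both work, because a plain `cudaMemcpy` is issued to the same default stream as the kernel and blocks the host until the copy is done, so it cannot start before the kernel has finished. The sketch below relies on that ordering. `sub_kernel` and `subtract_on_gpu` are hypothetical names, the single three-element buffer is just one way to cut down on allocations, and error checking is left out for brevity.

```cuda
#include <cuda_runtime.h>

__global__ void sub_kernel(const int *a, const int *b, int *c)
{
    *c = *a - *b;
}

// Hypothetical helper: returns a - b computed on the GPU.
// Without error checking, a CUDA failure simply leaves the result at 0.
int subtract_on_gpu(int a, int b)
{
    int host[3] = { a, b, 0 };   // host[0] = a, host[1] = b, host[2] = result
    int *dev = NULL;

    cudaMalloc((void**)&dev, sizeof(host));
    // Copy only the two inputs to the device.
    cudaMemcpy(dev, host, 2 * sizeof(int), cudaMemcpyHostToDevice);

    sub_kernel<<<1, 1>>>(dev, dev + 1, dev + 2);

    // No explicit cudaDeviceSynchronize: this blocking copy is ordered
    // after the kernel in the default stream.
    cudaMemcpy(&host[2], dev + 2, sizeof(int), cudaMemcpyDeviceToHost);

    cudaFree(dev);
    return host[2];
}
```

An explicit `cudaDeviceSynchronize()` is still useful when the host needs to wait for a kernel without copying anything back, for example to flush device `printf` output or to time the kernel.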

6. Vector addition, using one block that contains 512 threads

    #include <stdio.h>
    #include <cuda_runtime.h>

    // Each thread adds one pair of elements, indexed by its thread ID.
    __global__ void add(int *dev_a, int *dev_b, int *dev_c)
    {
        int i = threadIdx.x;
        dev_c[i] = dev_a[i] + dev_b[i];
    }

    int main()
    {
        int host_a[512], host_b[512], host_c[512];
        for (int i = 0; i < 512; i++)
        {
            host_a[i] = i;
            host_b[i] = i << 1;   // i * 2
        }
        // cudaError_t holds the status of each CUDA call; start from cudaSuccess
        cudaError_t err = cudaSuccess;
        int *dev_a = NULL, *dev_b = NULL, *dev_c = NULL;
        // Check every allocation, not just the last one
        err = cudaMalloc((void**)&dev_a, sizeof(int) * 512);
        if (err == cudaSuccess)
            err = cudaMalloc((void**)&dev_b, sizeof(int) * 512);
        if (err == cudaSuccess)
            err = cudaMalloc((void**)&dev_c, sizeof(int) * 512);

        if (err != cudaSuccess)
        {
            printf("cudaMalloc on the GPU failed: %s\n", cudaGetErrorString(err));
            cudaFree(dev_c);
            cudaFree(dev_b);
            cudaFree(dev_a);
            return 1;
        }

        printf("SUCCESS\n");
        // Copy the inputs from the host to the device
        cudaMemcpy(dev_a, host_a, sizeof(host_a), cudaMemcpyHostToDevice);
        cudaMemcpy(dev_b, host_b, sizeof(host_b), cudaMemcpyHostToDevice);

        // Launch the kernel: 1 block of 512 threads
        add<<<1, 512>>>(dev_a, dev_b, dev_c);
        // Copy the result back from the device to the host
        cudaMemcpy(host_c, dev_c, sizeof(host_c), cudaMemcpyDeviceToHost);
        for (int i = 0; i < 512; i++)
        {
            printf("host_a[%d] + host_b[%d] = %d + %d = %d\n", i, i, host_a[i], host_b[i], host_c[i]);
        }

        // Free the device memory
        cudaFree(dev_c);
        cudaFree(dev_b);
        cudaFree(dev_a);

        return 0;
    }
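The kernel above indexes with `threadIdx.x` alone, so it only covers a single block, and a block on current NVIDIA GPUs holds at most 1024 threads. A common way to handle longer vectors is to launch several blocks and compute a global index from `blockIdx.x`, `blockDim.x` and `threadIdx.x`. The following is a sketch under stated assumptions rather than part of the original program: `add_n` and `vector_add` are hypothetical names, the block size of 256 is an arbitrary choice, and `n` is assumed to be greater than 0.

```cuda
#include <cuda_runtime.h>

// Each thread handles one element; the bounds check protects the last,
// possibly partially filled, block.
__global__ void add_n(const int *a, const int *b, int *c, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n)
        c[i] = a[i] + b[i];
}

// Hypothetical helper: adds two host vectors of length n (n > 0) on the GPU.
// Returns true if every CUDA call succeeded.
bool vector_add(const int *host_a, const int *host_b, int *host_c, int n)
{
    size_t bytes = (size_t)n * sizeof(int);
    int *dev_a = NULL, *dev_b = NULL, *dev_c = NULL;

    // && short-circuits, so the first failing call stops the chain.
    bool ok = cudaMalloc((void**)&dev_a, bytes) == cudaSuccess
           && cudaMalloc((void**)&dev_b, bytes) == cudaSuccess
           && cudaMalloc((void**)&dev_c, bytes) == cudaSuccess
           && cudaMemcpy(dev_a, host_a, bytes, cudaMemcpyHostToDevice) == cudaSuccess
           && cudaMemcpy(dev_b, host_b, bytes, cudaMemcpyHostToDevice) == cudaSuccess;

    if (ok)
    {
        const int threads = 256;                          // threads per block
        const int blocks = (n + threads - 1) / threads;   // round up
        add_n<<<blocks, threads>>>(dev_a, dev_b, dev_c, n);
        ok = cudaGetLastError() == cudaSuccess
          && cudaMemcpy(host_c, dev_c, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;
    }

    // cudaFree accepts a null pointer, so this is safe on every path.
    cudaFree(dev_a);
    cudaFree(dev_b);
    cudaFree(dev_c);
    return ok;
}
```

With `n = 512` this launches two blocks of 256 threads and produces the same sums as the single-block version.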