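Given a linear regression model $y_i = \beta_0 + \beta_1 x_{i1} + \cdots + \beta_p x_{ip} + \varepsilon_i$

and a dataset $(x_{i1}, x_{i2}, \ldots, x_{ip}, y_i)$, $i = 1, \ldots, n$, containing $n$ observations. The $\beta$s are the coefficients and $\varepsilon$ is the error term.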

$\bar{y}$ denotes the (sample) mean of $y$, $y_i - \bar{y}$ is the deviation (note: not the variance), and $\hat{y}_i$ denotes the predicted value of $y_i$.

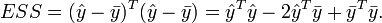

The total sum of squares (TSS) equals the explained sum of squares (ESS) plus the residual sum of squares (RSS), which corresponds to:

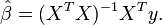
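A quick numerical check of the decomposition, as a minimal sketch assuming NumPy and a made-up toy dataset (the data and variable names are illustrative, not from the original). It fits OLS with an intercept and computes $\mathrm{TSS} = \sum_i (y_i - \bar y)^2$, $\mathrm{ESS} = \sum_i (\hat y_i - \bar y)^2$ and $\mathrm{RSS} = \sum_i (y_i - \hat y_i)^2$ directly.

```python
import numpy as np

# Hypothetical toy data: n = 6 observations, p = 2 predictors (not from the original text).
x = np.array([[1.0, 2.0],
              [2.0, 1.0],
              [3.0, 4.0],
              [4.0, 3.0],
              [5.0, 6.0],
              [6.0, 5.0]])
y = np.array([3.1, 3.9, 7.2, 7.8, 11.1, 11.9])

# Design matrix with a leading column of ones for the intercept beta_0.
X = np.column_stack([np.ones(len(y)), x])

# Least-squares coefficients (lstsq avoids forming an explicit inverse).
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
y_hat = X @ beta_hat
y_bar = y.mean()

tss = np.sum((y - y_bar) ** 2)      # total sum of squares
ess = np.sum((y_hat - y_bar) ** 2)  # explained sum of squares
rss = np.sum((y - y_hat) ** 2)      # residual sum of squares

print(tss, ess + rss)  # equal up to floating-point rounding
```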

Application to Ordinary Least Squares (OLS)

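The OLS estimate of $\beta$, where $X$ is the $n \times (p+1)$ design matrix whose first column is all ones: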

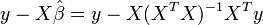
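One common way to compute $\hat\beta = (X^\top X)^{-1} X^\top \mathbf{y}$ in practice, sketched with NumPy under the assumption that $X^\top X$ is invertible: solve the normal equations $X^\top X \beta = X^\top \mathbf{y}$ instead of forming the inverse explicitly. `ols_normal_equations` is a hypothetical helper name, the data are synthetic, and `np.linalg.lstsq` is used only as a cross-check.

```python
import numpy as np

def ols_normal_equations(X, y):
    """Hypothetical helper: solve (X^T X) beta = X^T y, i.e.
    beta_hat = (X^T X)^{-1} X^T y, without explicitly inverting X^T X."""
    return np.linalg.solve(X.T @ X, X.T @ y)

# Synthetic data: intercept column plus two random predictors, noise-free for clarity.
rng = np.random.default_rng(0)
n = 20
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])
true_beta = np.array([1.0, 2.0, -0.5])
y = X @ true_beta

beta_hat = ols_normal_equations(X, y)
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)  # independent cross-check
print(beta_hat)  # recovers true_beta (up to rounding) because y has no noise
```

For ill-conditioned $X$, a QR- or SVD-based solver such as `lstsq` is usually the safer choice; the normal-equation form is shown because it mirrors the formula.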

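The residual vector is $\hat{\mathbf{e}} = \mathbf{y} - X\hat\beta = \mathbf{y} - X(X^\top X)^{-1}X^\top\mathbf{y}$, so

$$\mathrm{RSS} = \hat{\mathbf{e}}^\top\hat{\mathbf{e}} = \mathbf{y}^\top\mathbf{y} - \mathbf{y}^\top X(X^\top X)^{-1}X^\top\mathbf{y}.$$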
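The two expressions for RSS can be compared numerically. This sketch (NumPy, random synthetic data, illustrative variable names) builds the projection ("hat") matrix $H = X(X^\top X)^{-1}X^\top$. Because $I - H$ is symmetric and idempotent, $\hat{\mathbf{e}}^\top\hat{\mathbf{e}} = \mathbf{y}^\top(I - H)\mathbf{y} = \mathbf{y}^\top\mathbf{y} - \mathbf{y}^\top X(X^\top X)^{-1}X^\top\mathbf{y}$.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 15
X = np.column_stack([np.ones(n), rng.normal(size=(n, 3))])  # synthetic design matrix
y = rng.normal(size=n)                                      # synthetic response

# Hat matrix H = X (X^T X)^{-1} X^T, built with solve instead of an explicit inverse.
H = X @ np.linalg.solve(X.T @ X, X.T)
e_hat = y - H @ y                      # residual vector e_hat = (I - H) y

rss_direct = e_hat @ e_hat             # e_hat^T e_hat
rss_formula = y @ y - y @ H @ y        # y^T y - y^T X (X^T X)^{-1} X^T y
print(rss_direct, rss_formula)         # agree up to rounding
```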

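Let $\bar{\mathbf{y}}$ denote the vector whose elements are all equal to the mean of $y$. Then

$$\mathrm{TSS} = (\mathbf{y} - \bar{\mathbf{y}})^\top(\mathbf{y} - \bar{\mathbf{y}}) = \mathbf{y}^\top\mathbf{y} - 2\mathbf{y}^\top\bar{\mathbf{y}} + \bar{\mathbf{y}}^\top\bar{\mathbf{y}}.$$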
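A tiny check, with made-up numbers and NumPy, that the quadratic form for TSS (with $\bar{\mathbf{y}}$ the constant vector of means) matches its expansion $\mathbf{y}^\top\mathbf{y} - 2\mathbf{y}^\top\bar{\mathbf{y}} + \bar{\mathbf{y}}^\top\bar{\mathbf{y}}$. Since $\mathbf{y}^\top\bar{\mathbf{y}} = \bar{\mathbf{y}}^\top\bar{\mathbf{y}} = n\bar y^2$, it also reduces to the familiar shortcut $\mathbf{y}^\top\mathbf{y} - n\bar y^2$.

```python
import numpy as np

y = np.array([2.0, 4.0, 4.0, 5.0, 7.0, 9.0])   # made-up observations
y_bar_vec = np.full_like(y, y.mean())          # vector whose elements all equal mean(y)

d = y - y_bar_vec
tss_quadratic = d @ d                                                      # (y - y_bar)^T (y - y_bar)
tss_expanded = y @ y - 2 * (y @ y_bar_vec) + y_bar_vec @ y_bar_vec         # expanded form
tss_shortcut = y @ y - len(y) * y.mean() ** 2  # uses y^T y_bar = y_bar^T y_bar = n * mean^2
```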

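Let $\hat{\mathbf{y}} = X\hat\beta$. Then

$$\mathrm{ESS} = (\hat{\mathbf{y}} - \bar{\mathbf{y}})^\top(\hat{\mathbf{y}} - \bar{\mathbf{y}}) = \hat{\mathbf{y}}^\top\hat{\mathbf{y}} - 2\hat{\mathbf{y}}^\top\bar{\mathbf{y}} + \bar{\mathbf{y}}^\top\bar{\mathbf{y}},$$

where $\hat{\mathbf{y}}^\top\hat{\mathbf{y}} = \mathbf{y}^\top X(X^\top X)^{-1}X^\top\mathbf{y}$.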
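A sketch (NumPy, synthetic data, illustrative names) checking the ESS expansion and the simplification $\hat{\mathbf{y}}^\top\hat{\mathbf{y}} = \mathbf{y}^\top X(X^\top X)^{-1}X^\top\mathbf{y}$, where $\hat{\mathbf{y}} = X\hat\beta$ and $\bar{\mathbf{y}}$ is the constant vector of means.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 12
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])  # synthetic design matrix
y = rng.normal(size=n)                                      # synthetic response

beta_hat = np.linalg.solve(X.T @ X, X.T @ y)   # OLS estimate via normal equations
y_hat = X @ beta_hat
y_bar_vec = np.full(n, y.mean())

ess = (y_hat - y_bar_vec) @ (y_hat - y_bar_vec)
ess_expanded = y_hat @ y_hat - 2 * (y_hat @ y_bar_vec) + y_bar_vec @ y_bar_vec

# Simplification used in the derivation: y_hat^T y_hat = y^T X (X^T X)^{-1} X^T y
yhat_sq = y_hat @ y_hat
yhat_sq_formula = y @ X @ np.linalg.solve(X.T @ X, X.T @ y)
```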

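Substituting these expressions into $\mathrm{TSS} - \mathrm{ESS} - \mathrm{RSS}$ shows that TSS = ESS + RSS if and only if $\mathbf{y}^\top\bar{\mathbf{y}} = \hat{\mathbf{y}}^\top\bar{\mathbf{y}}$, that is (for $\bar{y} \neq 0$), if and only if the sum of the residuals $\sum_{i=1}^{n}(y_i - \hat{y}_i)$ is zero.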
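The "if and only if" step can be written out explicitly. A short worked derivation, with $\bar{\mathbf{y}}$ the constant vector of means, using the expansions of TSS and ESS and the identity $\mathrm{RSS} = \mathbf{y}^\top\mathbf{y} - \hat{\mathbf{y}}^\top\hat{\mathbf{y}}$ (which follows from $\hat{\mathbf{y}}^\top\hat{\mathbf{y}} = \mathbf{y}^\top X(X^\top X)^{-1}X^\top\mathbf{y}$):

$$
\begin{aligned}
\mathrm{TSS} - \mathrm{ESS} - \mathrm{RSS}
&= \left(\mathbf{y}^\top\mathbf{y} - 2\mathbf{y}^\top\bar{\mathbf{y}} + \bar{\mathbf{y}}^\top\bar{\mathbf{y}}\right)
 - \left(\hat{\mathbf{y}}^\top\hat{\mathbf{y}} - 2\hat{\mathbf{y}}^\top\bar{\mathbf{y}} + \bar{\mathbf{y}}^\top\bar{\mathbf{y}}\right)
 - \left(\mathbf{y}^\top\mathbf{y} - \hat{\mathbf{y}}^\top\hat{\mathbf{y}}\right) \\
&= 2\left(\hat{\mathbf{y}} - \mathbf{y}\right)^\top\bar{\mathbf{y}}
 = -2\,\bar{y}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right).
\end{aligned}
$$

So the difference vanishes exactly when $\mathbf{y}^\top\bar{\mathbf{y}} = \hat{\mathbf{y}}^\top\bar{\mathbf{y}}$; when $\bar{y} \neq 0$ this is the same as the residuals summing to zero.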

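(This holds because

$$X^\top\hat{\mathbf{e}} = X^\top\left[I - X(X^\top X)^{-1}X^\top\right]\mathbf{y} = X^\top\mathbf{y} - X^\top X(X^\top X)^{-1}X^\top\mathbf{y} = X^\top\mathbf{y} - X^\top\mathbf{y} = \mathbf{0}.$$

Since the first column of $X$ is all ones, the first element of $X^\top\hat{\mathbf{e}}$ is $\sum_{i=1}^{n}\hat{e}_i$, which therefore equals 0. Hence the condition above holds, and TSS = ESS + RSS.)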
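The role of the column of ones can be seen by fitting the same data with and without an intercept. This is a minimal sketch assuming NumPy; the data and the helper `sums_of_squares` are hypothetical. With an intercept, $X^\top\hat{\mathbf{e}} \approx \mathbf{0}$ and the residuals sum to (numerically) zero; without one, the residual sum is generally nonzero and the gap $\mathrm{TSS} - (\mathrm{ESS} + \mathrm{RSS})$ equals $-2\bar{y}\sum_i \hat{e}_i$.

```python
import numpy as np

def sums_of_squares(X, y):
    """Hypothetical helper: fit OLS on design matrix X, return (TSS, ESS, RSS, residuals)."""
    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    y_hat = X @ beta_hat
    e_hat = y - y_hat
    y_bar = y.mean()
    tss = np.sum((y - y_bar) ** 2)
    ess = np.sum((y_hat - y_bar) ** 2)   # ESS is measured around the mean of y
    rss = e_hat @ e_hat
    return tss, ess, rss, e_hat

# Hypothetical toy data (not from the original text).
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([5.0, 6.0, 8.0, 9.0, 12.0])

X_with_intercept = np.column_stack([np.ones_like(x), x])  # first column all ones
X_no_intercept = x.reshape(-1, 1)                         # no column of ones

tss1, ess1, rss1, e1 = sums_of_squares(X_with_intercept, y)
tss2, ess2, rss2, e2 = sums_of_squares(X_no_intercept, y)

print(X_with_intercept.T @ e1)   # close to [0, 0]; first entry is sum(e1)
print(e2.sum())                  # clearly nonzero without an intercept
print(tss1 - (ess1 + rss1))      # close to 0 up to rounding
print(tss2 - (ess2 + rss2))      # nonzero; equals -2 * mean(y) * sum(e2)
```

With these numbers the no-intercept fit gives ESS + RSS larger than TSS, so the decomposition (and the usual reading of $R^2$) breaks down once the column of ones is dropped.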

Mean squared error (MSE)