First of all, everything here is of course covered in the official documentation:

RNN: https://pytorch.org/docs/stable/generated/torch.nn.RNN.html

RNNCell: https://pytorch.org/docs/stable/generated/torch.nn.RNNCell.html

LSTM: https://pytorch.org/docs/stable/generated/torch.nn.LSTM.html

LSTMCell: https://pytorch.org/docs/stable/generated/torch.nn.LSTMCell.html

GRU: https://pytorch.org/docs/stable/generated/torch.nn.GRU.html

GRUCell: https://pytorch.org/docs/stable/generated/torch.nn.GRUCell.html

What follows is just my own set of notes.

LSTM and LSTMCell are used as the examples.

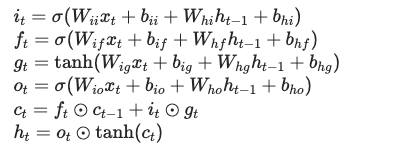

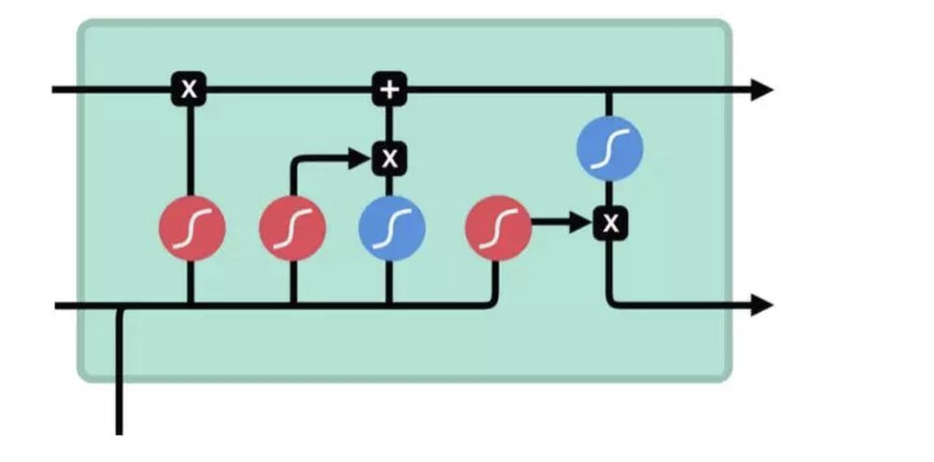

Structure of the LSTM

LSTM: dimensions of the definition, inputs, outputs, and weights

LSTM parameters:

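- input_size: number of features in the input x

- hidden_size: number of features in the hidden state h

- num_layers: number of stacked layers, default 1

- batch_first: if True, input and output tensors are (batch, seq, feature); otherwise (seq, batch, feature). Default False

- bidirectional: default False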

Inputs:

- input: tensor of shape (L, N, H_in) when batch_first=False, otherwise (N, L, H_in)

- h_0: tensor of shape (D*num_layers, N, H_out); defaults to zeros if (h_0, c_0) is not provided

- c_0: tensor of shape (D*num_layers, N, H_cell); defaults to zeros if (h_0, c_0) is not provided

where:

N = batch size

L = sequence length

D = 2 if bidirectional=True otherwise 1

H_in = input_size

H_cell = hidden_size

H_out = proj_size if proj_size>0 otherwise hidden_size (in practice usually just hidden_size)

Output:

- output: tensor of shape (L, N, D*H_out) when batch_first=False. It is a sequence of length L, [h_1[-1], h_2[-1], ..., h_L[-1]], i.e. the hidden state of the last layer at every time step

- h_n: tensor of shape (D*num_layers, N, H_out)

- c_n: tensor of shape (D*num_layers, N, H_cell)
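The shape rules above can be checked directly. The following is a minimal sketch; the concrete sizes (N=3, L=5, and so on) and the proj_size value of 8 are arbitrary choices for illustration, and proj_size assumes a PyTorch release that supports it.

```python
import torch
from torch import nn

# Illustrative sizes only; they are not prescribed by the notes above.
N, L, H_in, hidden, num_layers = 3, 5, 10, 20, 2

for bidirectional in (False, True):
    D = 2 if bidirectional else 1
    lstm = nn.LSTM(H_in, hidden, num_layers, bidirectional=bidirectional)
    x = torch.randn(L, N, H_in)        # batch_first=False layout: (L, N, H_in)
    output, (h_n, c_n) = lstm(x)       # h_0 and c_0 default to zeros
    assert output.shape == (L, N, D * hidden)
    assert h_n.shape == (D * num_layers, N, hidden)
    assert c_n.shape == (D * num_layers, N, hidden)

# batch_first=True only changes the layout of input and output;
# h_n and c_n keep the (D*num_layers, N, H) layout.
lstm_bf = nn.LSTM(H_in, hidden, num_layers, batch_first=True)
out_bf, (h_bf, c_bf) = lstm_bf(torch.randn(N, L, H_in))
assert out_bf.shape == (N, L, hidden)
assert h_bf.shape == (num_layers, N, hidden)

# With proj_size > 0, H_out becomes proj_size while H_cell stays hidden_size.
lstm_proj = nn.LSTM(H_in, hidden, num_layers, proj_size=8)
out_p, (h_p, c_p) = lstm_proj(torch.randn(L, N, H_in))
assert out_p.shape == (L, N, 8)
assert h_p.shape == (num_layers, N, 8)
assert c_p.shape == (num_layers, N, hidden)
```

The proj_size case is the only one where H_out and H_cell differ, which is why the two symbols are kept separate in the shape definitions.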

Variables:

This seems to have changed in newer versions:

- all_weights
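Since the documented variables may differ between versions, one way to see what an installed PyTorch actually exposes is to list the module's named parameters. This is a rough sketch; the sizes are arbitrary, and the names in the comments are what recent releases report for a two-layer unidirectional LSTM without projection.

```python
import torch
from torch import nn

lstm = nn.LSTM(input_size=10, hidden_size=20, num_layers=2)

# Map each parameter name to its shape.
shapes = {name: tuple(p.shape) for name, p in lstm.named_parameters()}
for name, shape in shapes.items():
    print(name, shape)

# Layer 0 consumes the raw input, so weight_ih_l0 has input_size columns.
# Layer 1 consumes layer 0's hidden state, so weight_ih_l1 has hidden_size columns.
assert shapes["weight_ih_l0"] == (4 * 20, 10)
assert shapes["weight_hh_l0"] == (4 * 20, 20)
assert shapes["weight_ih_l1"] == (4 * 20, 20)
assert shapes["bias_ih_l0"] == (4 * 20,)
```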

Examples:

    >>> import torch
    >>> from torch import nn
    >>> rnn = nn.LSTM(10, 20, 2)                  # (input_size, hidden_size, num_layers)
    >>> input = torch.randn(5, 3, 10)             # (time_steps, batch, input_size)
    >>> h0 = torch.randn(2, 3, 20)                # (num_layers, batch, hidden_size)
    >>> c0 = torch.randn(2, 3, 20)                # same shape as h0
    >>> output, (hn, cn) = rnn(input, (h0, c0))   # output: (time_steps, batch, hidden_size)
    >>> # output[-1] equals hn[-1]: the last layer's hidden state at the final time step
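For a unidirectional LSTM, the last time step of output should coincide with the last layer of h_n. The bidirectional case is less obvious, so here is a small sketch that checks both. The sizes are arbitrary, and the bidirectional indexing assumes the documented h_n layout in which each layer's forward state is followed by its backward state.

```python
import torch
from torch import nn

torch.manual_seed(0)
H = 20
x = torch.randn(5, 3, 10)  # (L, N, H_in)

# Unidirectional: output[-1] is the last layer's hidden state at the final step.
uni = nn.LSTM(10, H, num_layers=2)
out, (h_n, c_n) = uni(x)
uni_match = torch.allclose(out[-1], h_n[-1])

# Bidirectional: output concatenates forward and backward features.
# The forward direction finishes at the last time step,
# the backward direction finishes at time step 0.
bi = nn.LSTM(10, H, num_layers=2, bidirectional=True)
out_bi, (h_bi, _) = bi(x)
fwd_match = torch.allclose(out_bi[-1, :, :H], h_bi[-2])  # last layer, forward
bwd_match = torch.allclose(out_bi[0, :, H:], h_bi[-1])   # last layer, backward

assert uni_match and fwd_match and bwd_match
```

In other words, taking output[-1] as "the final state" is only safe in the unidirectional case; with bidirectional=True, h_n is the more reliable source.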

LSTM Cell

An LSTMCell is a single unit of an LSTM: it computes one time step of one layer, and an LSTM is built from many such cells.

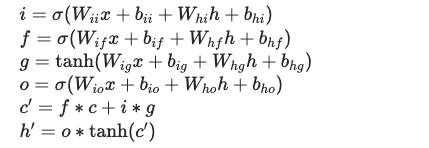

Structure

Compared with LSTM, the time index t is gone, because a cell processes only a single time step.

Parameters:

- Only input_size and hidden_size; there is no num_layers

Inputs:

- input: (batch, input_size)

- h_0: (batch, hidden_size)

- c_0: (batch, hidden_size)

Outputs:

- h_1: (batch, hidden_size)

- c_1: (batch, hidden_size)

Variables:

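- weight_ih: input-hidden weights, of shape (4*hidden_size, input_size). The weight left-multiplies the input (W*input), and the four gate matrices W are stacked along the first dimension, hence 4*hidden_size rows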

- weight_hh: hidden-hidden weights, of shape (4*hidden_size, hidden_size)

- bias_ih: input-hidden bias, of shape (4*hidden_size)

- bias_hh: hidden-hidden bias, of shape (4*hidden_size)
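To make the 4*hidden_size stacking concrete, the cell's forward pass can be reproduced by hand from these four tensors. This is a sketch under the assumption that the gates are stacked in the order input, forget, cell (g), output, which is the order the PyTorch documentation gives for these weights; the sizes are arbitrary.

```python
import torch
from torch import nn

torch.manual_seed(0)
cell = nn.LSTMCell(10, 20)   # (input_size, hidden_size)
x = torch.randn(3, 10)       # (batch, input_size)
h0 = torch.randn(3, 20)      # (batch, hidden_size)
c0 = torch.randn(3, 20)

with torch.no_grad():
    # Stacked pre-activations for all four gates: (batch, 4*hidden_size).
    gates = x @ cell.weight_ih.T + cell.bias_ih + h0 @ cell.weight_hh.T + cell.bias_hh
    # Split into the four gate blocks, each of shape (batch, hidden_size).
    i, f, g, o = gates.chunk(4, dim=1)
    i, f, o = torch.sigmoid(i), torch.sigmoid(f), torch.sigmoid(o)
    g = torch.tanh(g)
    c1_manual = f * c0 + i * g
    h1_manual = o * torch.tanh(c1_manual)

    # Reference result from the module itself.
    h1, c1 = cell(x, (h0, c0))

h_ok = torch.allclose(h1, h1_manual, atol=1e-5)
c_ok = torch.allclose(c1, c1_manual, atol=1e-5)
assert h_ok and c_ok
```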

Example:

    >>> import torch
    >>> from torch import nn
    >>> rnn = nn.LSTMCell(10, 20)            # (input_size, hidden_size)
    >>> input = torch.randn(2, 3, 10)        # (time_steps, batch, input_size)
    >>> hx = torch.randn(3, 20)              # (batch, hidden_size)
    >>> cx = torch.randn(3, 20)
    >>> output = []
    >>> for i in range(2):                   # one cell call per time step
    ...     hx, cx = rnn(input[i], (hx, cx))
    ...     output.append(hx)
    >>> output = torch.stack(output, dim=0)  # (time_steps, batch, hidden_size)
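The relationship between the two modules can be verified numerically: a single-layer nn.LSTM and an nn.LSTMCell stepped over time should agree once they share the same weights. The sketch below assumes the layer-0 parameter names weight_ih_l0, weight_hh_l0, bias_ih_l0, and bias_hh_l0 used by recent PyTorch releases; the sizes are arbitrary.

```python
import torch
from torch import nn

torch.manual_seed(0)
lstm = nn.LSTM(10, 20, num_layers=1)
cell = nn.LSTMCell(10, 20)

# Copy the LSTM's layer-0 parameters into the cell so both share weights.
with torch.no_grad():
    cell.weight_ih.copy_(lstm.weight_ih_l0)
    cell.weight_hh.copy_(lstm.weight_hh_l0)
    cell.bias_ih.copy_(lstm.bias_ih_l0)
    cell.bias_hh.copy_(lstm.bias_hh_l0)

x = torch.randn(5, 3, 10)    # (time_steps, batch, input_size)
h = torch.zeros(3, 20)       # matches the LSTM's default zero initial state
c = torch.zeros(3, 20)

with torch.no_grad():
    ref_out, (ref_h, ref_c) = lstm(x)
    steps = []
    for t in range(x.size(0)):
        h, c = cell(x[t], (h, c))   # advance one time step
        steps.append(h)
    out = torch.stack(steps, dim=0)  # (time_steps, batch, hidden_size)

out_ok = torch.allclose(out, ref_out, atol=1e-5)
state_ok = torch.allclose(h, ref_h[0], atol=1e-5) and torch.allclose(c, ref_c[0], atol=1e-5)
assert out_ok and state_ok
```

The manual loop is mainly useful when each step needs custom logic, such as feeding a prediction back as the next input; otherwise nn.LSTM handles the whole sequence in one call.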