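Preface

A while ago I wrote a simple crawler in Python to scrape housing price information from the Shanghai Lianjia site. I have since tidied up the code and am recording it here.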

Preparation

- Install Scrapy
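Scrapy is usually installed with pip (pip install scrapy). As a quick sanity check, the sketch below reports whether the package is visible to the current interpreter. It assumes Python 3.8 or newer for `importlib.metadata`, and the helper name `installed_version` is made up for this illustration.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version of `package`, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version("scrapy")
    print("Scrapy version:", version if version else "not installed")
```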

Create a new Scrapy project

For example, the command scrapy startproject Lianjia creates a Scrapy project named Lianjia:

    $ scrapy startproject Lianjia
    New Scrapy project 'Lianjia', using template directory '/usr/local/anaconda3/lib/python3.6/site-packages/scrapy/templates/project', created in:
        /Users/lestat/PyProjects/Lianjia

    You can start your first spider with:
        cd Lianjia
        scrapy genspider example example.com

After the command finishes, Scrapy creates the Lianjia folder and its related files. The directory structure under the Lianjia folder is as follows:

    .
    ├── Lianjia                 # Python module; all of the code goes here
    │   ├── __init__.py
    │   ├── __pycache__
    │   ├── items.py            # Item definitions
    │   ├── middlewares.py
    │   ├── pipelines.py        # pipeline definitions
    │   ├── settings.py         # project settings
    │   └── spiders             # all spiders go in this folder
    │       ├── __init__.py
    │       └── __pycache__
    └── scrapy.cfg              # deployment configuration

    4 directories, 7 files
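Project-wide options live in settings.py. As a rough sketch, these are a few standard Scrapy settings that would suit a slow, polite crawl like this one. The values are assumptions chosen for illustration, not the settings used in the original project.

```python
# Lianjia/Lianjia/settings.py (excerpt; values are illustrative assumptions)

BOT_NAME = "Lianjia"

# Sent with every request unless a request sets its own header
USER_AGENT = "Mozilla/5.0"

# Seconds to wait between consecutive requests to the same site
DOWNLOAD_DELAY = 30

# At most one request in flight per domain
CONCURRENT_REQUESTS_PER_DOMAIN = 1

# Write exported feeds (e.g. CSV) as UTF-8 so Chinese text stays readable
FEED_EXPORT_ENCODING = "utf-8"
```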

Define a Spider

The following spider scrapes the information we need from the Lianjia search result pages:

    # Lianjia/Lianjia/spiders/summ_info.py
    # -*- coding: utf-8 -*-
    import scrapy

    # Usage:
    # scrapy runspider spiders/summ_info.py -a query=ershoufang/ie2y4l2l3a3a4p5 -o ./data/result4.csv


    class LianjiaSpider(scrapy.Spider):
        name = "fetchSummInfo"
        allowed_domains = ['sh.lianjia.com']
        headers = {'user-agent': "Mozilla/5.0"}
        # Throttle the crawl: wait roughly 30 seconds between downloads
        # without blocking Scrapy's event loop (time.sleep would block it)
        custom_settings = {'DOWNLOAD_DELAY': 30}
        # Example values for the `query` argument
        query_prefix = "li143685059s100021904/ie2y4l2l3a3a4p5"
        query_prefix2 = "ershoufang/huangpu/ie2y4l2l3a3a4p5"

        def __init__(self, query='', **kwargs):
            super().__init__(**kwargs)
            self.query = query
            self.base_url = "https://sh.lianjia.com"
            self.curr_page_no = 1

        def page_url(self, page_no):
            # Result pages are addressed as <base_url>/<query>pg<page_no>/
            return "{}/{}pg{}/".format(self.base_url, self.query, page_no)

        def start_requests(self):
            yield scrapy.Request(url=self.page_url(self.curr_page_no),
                                 callback=self.parse, headers=self.headers)

        def parse(self, response):
            houseList = response.xpath('//ul[@class="sellListContent"]/li')
            if len(houseList) == 0:
                # Empty page: stop paginating so the spider finishes normally
                return

            # Fields: ['title','houseInfo1','houseInfo2','positionInfo1','positionInfo2','followInfo','totalPrice','unitPrice']
            for house in houseList:
                item = {
                    'title': house.xpath('.//div[@class="title"]/a/text()').extract(),
                    'houseInfo1': house.xpath('.//div[@class="houseInfo"]/a/text()').extract(),
                    'houseInfo2': house.xpath('.//div[@class="houseInfo"]/text()').extract(),
                    'positionInfo1': house.xpath('.//div[@class="positionInfo"]/a/text()').extract(),
                    'positionInfo2': house.xpath('.//div[@class="positionInfo"]/text()').extract(),
                    'followInfo': house.xpath('.//div[@class="followInfo"]/text()').extract(),
                    'totalPrice': house.xpath('.//div[@class="totalPrice"]/span/text()').extract(),
                    'unitPrice': house.xpath('.//div[@class="unitPrice"]/@data-price').extract()
                }
                yield item

            # Queue the next result page
            self.curr_page_no += 1
            yield scrapy.Request(url=self.page_url(self.curr_page_no),
                                 callback=self.parse, headers=self.headers)

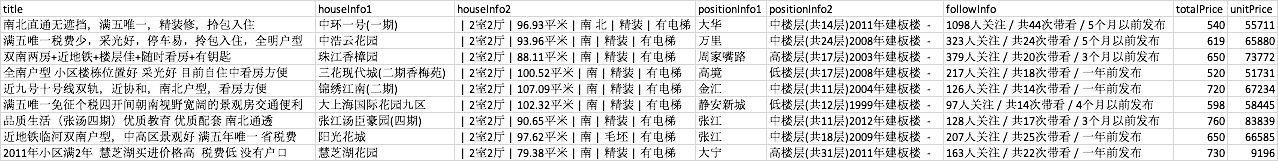
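Because every field is collected with `.extract()`, each value in a yielded item is a list of strings, often with stray whitespace. One possible way to tidy this up is an item pipeline in pipelines.py. The sketch below is an assumption about how that could look (the class name `CleanFieldsPipeline` and the `" | "` separator are invented here); it is not part of the original project.

```python
class CleanFieldsPipeline:
    """Collapse each list-valued field into one whitespace-trimmed string."""

    separator = " | "  # hypothetical choice; any delimiter would do

    def process_item(self, item, spider):
        for key in list(item):
            value = item[key]
            if isinstance(value, (list, tuple)):
                # Drop empty fragments, trim the rest, then join them
                parts = [text.strip() for text in value if text and text.strip()]
                item[key] = self.separator.join(parts)
        return item
```

To try it, the pipeline would be enabled in settings.py with `ITEM_PIPELINES = {"Lianjia.pipelines.CleanFieldsPipeline": 300}`.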

Export the scraped data

The simplest way to save the spider's output is the -o option, as follows:

    $ scrapy runspider spiders/summ_info.py -a query=ershoufang/ie2y4l2l3a3a4p5 -o ./data/result.csv

The first rows of the result can be inspected with:

    $ head ./data/result.csv
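The same check can be done from Python. The sketch below reads the first rows of the exported file with the standard `csv` module. The function name `preview_csv` is made up for this example, and it assumes the file is UTF-8 with a header row, which is what Scrapy's CSV exporter writes by default.

```python
import csv
from itertools import islice


def preview_csv(path, n=5):
    """Return the first `n` data rows of a CSV file as dictionaries."""
    with open(path, newline="", encoding="utf-8") as f:
        # DictReader uses the header row as the keys of each row
        return list(islice(csv.DictReader(f), n))
```

Calling `preview_csv("./data/result.csv")` returns a list of dictionaries keyed by the column names, such as `title`, `totalPrice`, and `unitPrice`.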

Save the data to a database (MongoDB)

[TO-DO]
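One common way to do this is an item pipeline. Here is a minimal sketch modeled on the MongoDB example in the Scrapy documentation. It assumes the `pymongo` package and a reachable MongoDB server; the setting names `MONGO_URI` and `MONGO_DATABASE`, their default values, and the collection name `houses` are illustrative choices rather than anything specified in this post.

```python
class MongoPipeline:
    """Store every scraped item as one document in a MongoDB collection."""

    collection_name = "houses"  # hypothetical collection name

    def __init__(self, mongo_uri, mongo_db):
        self.mongo_uri = mongo_uri
        self.mongo_db = mongo_db
        self.client = None
        self.collection = None

    @classmethod
    def from_crawler(cls, crawler):
        # MONGO_URI and MONGO_DATABASE are custom settings assumed for this sketch
        return cls(
            mongo_uri=crawler.settings.get("MONGO_URI", "mongodb://localhost:27017"),
            mongo_db=crawler.settings.get("MONGO_DATABASE", "lianjia"),
        )

    def open_spider(self, spider):
        import pymongo  # imported here so the module loads even without pymongo

        self.client = pymongo.MongoClient(self.mongo_uri)
        self.collection = self.client[self.mongo_db][self.collection_name]

    def close_spider(self, spider):
        if self.client is not None:
            self.client.close()

    def process_item(self, item, spider):
        # Insert a copy so the item passed to later pipelines is left untouched
        self.collection.insert_one(dict(item))
        return item
```

If this class were placed in pipelines.py, it would be switched on with `ITEM_PIPELINES = {"Lianjia.pipelines.MongoPipeline": 400}` in settings.py.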