1. Prerequisites

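Based on Python 3.6. Required packages: selenium, xlwt, pandas. The code below also imports bs4 (BeautifulSoup) and writes the merged file through pandas with the xlsxwriter engine, so those two need to be installed as well.

You need to download the chromedriver that matches the version of your own Chrome browser.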

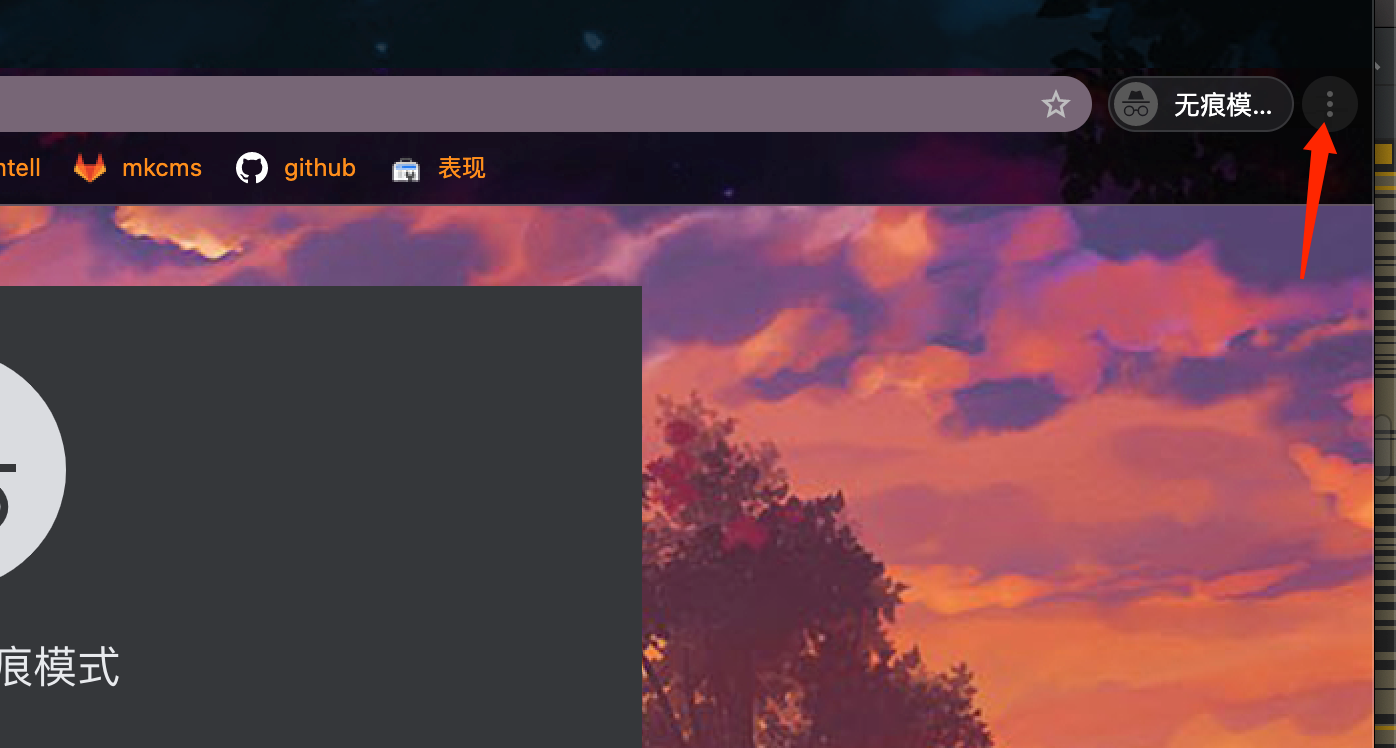

Check the Chrome version number

Click Help > About Google Chrome

Then download the matching chromedriver: https://chromedriver.chromium.org/downloads
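A mismatch between the browser and the driver is easy to overlook, so a small check can help. The sketch below compares only the major version numbers, which is an assumption about what counts as "matching". The helper names `major_version` and `versions_match` and the sample version strings are made up for illustration and are not part of the original script.

```python
import re


def major_version(version_text):
    """Return the first major version number found in a version string."""
    match = re.search(r'(\d+)\.\d+', version_text)
    if match is None:
        raise ValueError('no version number in {0!r}'.format(version_text))
    return int(match.group(1))


def versions_match(chrome_text, driver_text):
    """Assumption: browser and driver are compatible when the major versions agree."""
    return major_version(chrome_text) == major_version(driver_text)


# Illustrative strings only; paste in what your own browser and driver report.
chrome_text = 'Google Chrome 83.0.4103.61'
driver_text = 'ChromeDriver 83.0.4103.39'
print(versions_match(chrome_text, driver_text))
```

If the check fails, download a different chromedriver build from the page linked above before running the crawler.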

2. Code

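#Set the initial storage directory

#1. Initialize the folders

#2. Set the links to crawl

#3. Crawl the links

#4. Parse the 1688 list page

#5. Persist the results to a spreadsheet

#6. Mark the links that have already been crawled

#7. Merge everything into one spreadsheet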
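Steps 3 and 6 together make the crawl resumable: a link is fetched only if it is not yet in the record file, and it is appended to that file once it has been handled. The sketch below shows that pattern in isolation, with no browser involved. The names `load_done`, `mark_done` and `crawl_pending` are hypothetical, and `fetch` stands in for whatever does the real download and parsing.

```python
import os
import tempfile


def load_done(record_path):
    """Read previously recorded URLs, one per line; empty set if the file is missing."""
    if not os.path.exists(record_path):
        return set()
    with open(record_path, 'r', encoding='utf-8') as f:
        return {line.rstrip('\n') for line in f if line.strip()}


def mark_done(record_path, url):
    """Append one URL to the record file."""
    with open(record_path, 'a', encoding='utf-8') as f:
        f.write(url + '\n')


def crawl_pending(urls, record_path, fetch):
    """Call fetch(url) for every URL not yet recorded, then record it."""
    done = load_done(record_path)
    fetched = []
    for url in urls:
        if url in done:
            continue  # already crawled in an earlier run
        fetch(url)
        mark_done(record_path, url)
        done.add(url)
        fetched.append(url)
    return fetched


# Demo with placeholder "URLs" and a fetch function that does nothing.
with tempfile.TemporaryDirectory() as tmp:
    record = os.path.join(tmp, 'record.txt')
    pages = ['page-1', 'page-2']
    first_run = crawl_pending(pages, record, fetch=lambda url: None)
    second_run = crawl_pending(pages, record, fetch=lambda url: None)
    print(first_run)
    print(second_run)
```

Recording a link only after it has been exported means an interrupted run repeats at most the page it was working on.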

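The code is as follows. When you use it, remember to move all the functions above the main block; I put the main block first only to make it easier to read.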

    import os, xlwt, time
    import pandas as pd
    from bs4 import BeautifulSoup
    from selenium import webdriver
    from datetime import datetime


    # Path to chromedriver; remember to change it to your own
    CHROME_DRIVER_PATH = '/Users/huangmengfeng/PycharmProjects/2020_study_plan/chromedriver'


    # This block is shown first for readability; move it below the function definitions before running.
    if __name__ == '__main__':
        # Set the initial storage directory; change it to your own. Only the base directory
        # needs to be set, the subdirectories are created automatically, and the output file goes into OUT
        base_dir_0 = '/Users/huangmengfeng/Desktop/1688_SCRIPY'
        site_name_0 = 's.1688.com'

        # 1. Initialize the folders
        tmp_data_dir_0, out_dir_0, record_path_0 = createDir(base_dir_0, site_name_0)
        done_url = getDoneUrl(record_path_0)
        # 2. Set the links to crawl
        need_url_list = [{'page_url': 'https://s.1688.com/selloffer/offer_search.htm?keywords=%C5%B7%C3%C0%C5%AE%D7%B0&n=y&netType=1%2C11%2C16', 'cate_name': '欧美女装', 'total_num': 50}]
        # 3. Crawl the links
        for need_url in need_url_list:
            for i in range(1, 2):
                now_page_url = "{0}&beginPage={1}#sm-filtbar".format(need_url['page_url'], i)
                # Skip links that have already been crawled
                if now_page_url not in done_url:
                    # 4. Parse the 1688 list page; scroll to trigger lazy loading so all products are present
                    soup_0 = get_dynamic_html2(now_page_url)
                    now_page_info_list = analysis1688Page(soup_0)
                    # 5. Persist the results to a spreadsheet
                    heads_0 = ['shop_name', 'shop_url', 'desc', 'url', 'price']
                    exportToExcel(heads_0, now_page_info_list, tmp_data_dir_0, '{0}_{1}.xlsx'.format(need_url['cate_name'], i))
                    # 6. Mark the link as crawled
                    addDoneUrl(record_path_0, now_page_url)
        # 7. Merge everything into one spreadsheet
        connectToOne(tmp_data_dir_0, out_dir_0, "{0}_{1}.xlsx".format(site_name_0, datetime.strftime(datetime.now(), '%Y%m%d%H%M%S')))


    # Initialize the folders
    def createDir(base_dir, site_name):
        tmp_data_dir_1 = "{0}/{1}".format(base_dir, site_name)
        tmp_data_dir_2 = "{0}/{1}/{2}".format(base_dir, site_name, datetime.strftime(datetime.now(), '%Y%m%d'))
        out_dir = '{0}/OUT'.format(base_dir)
        path_arr = [base_dir, tmp_data_dir_1, tmp_data_dir_2, out_dir]
        for path in path_arr:
            if not os.path.exists(path):
                os.mkdir(path)
        record_path = '{0}/record.txt'.format(tmp_data_dir_2)
        if not os.path.exists(record_path):
            # Create an empty record file
            with open(record_path, 'a+', encoding="utf-8") as f:
                f.write('')
        return tmp_data_dir_2, out_dir, record_path


    # Load a dynamic page
    def get_dynamic_html2(site_url):
        print('Start loading dynamic page', site_url)
        chrome_options = webdriver.ChromeOptions()
        # ban sandbox
        chrome_options.add_argument('--no-sandbox')
        chrome_options.add_argument('--disable-dev-shm-usage')
        # use headless
        # chrome_options.add_argument('--headless')
        chrome_options.add_argument('--disable-gpu')
        chrome_options.add_argument('--ignore-ssl-errors')
        driver = webdriver.Chrome(executable_path=CHROME_DRIVER_PATH, chrome_options=chrome_options)
        # print('dynamic load web is', site_url)
        driver.set_page_load_timeout(100)
        driver.set_window_size(1920, 1080)
        # driver.set_script_timeout(100)
        try:
            driver.get(site_url)
        except Exception as e:
            driver.execute_script('window.stop()')  # stop loading once the timeout is exceeded
            print(e, 'dynamic web load timeout')

        time.sleep(2)

        # Scroll through the page so lazily loaded items are rendered
        fullpage_screenshot(driver, 8000)

        data2 = driver.page_source
        soup2 = BeautifulSoup(data2, 'html.parser')

        try:
            time.sleep(3)
            driver.quit()
        except Exception:
            pass
        return soup2


    # Simulate scrolling
    def fullpage_screenshot(driver, total_height):
        total_width = driver.execute_script("return document.body.offsetWidth")
        # total_height = driver.execute_script("return document.body.parentNode.scrollHeight")
        # total_height = 50000
        viewport_width = driver.execute_script("return document.body.clientWidth")
        viewport_height = driver.execute_script("return window.innerHeight")
        rectangles = []

        # Split the page into viewport-sized rectangles
        i = 0
        while i < total_height:
            ii = 0
            top_height = i + viewport_height

            if top_height > total_height:
                top_height = total_height

            while ii < total_width:
                top_width = ii + viewport_width

                if top_width > total_width:
                    top_width = total_width
                rectangles.append((ii, i, top_width, top_height))

                ii = ii + viewport_width

            i = i + viewport_height

        previous = None
        part = 0

        # Scroll to each rectangle in turn
        for rectangle in rectangles:
            if previous is not None:
                driver.execute_script("window.scrollTo({0}, {1})".format(rectangle[0], rectangle[1]))
                print("Scrolled To ({0},{1})".format(rectangle[0], rectangle[1]))
                time.sleep(0.5)

            file_name = "part_{0}.png".format(part)
            print("Capturing {0} ...".format(file_name))

            # driver.get_screenshot_as_file(file_name)

            if rectangle[1] + viewport_height > total_height:
                offset = (rectangle[0], total_height - viewport_height)
            else:
                offset = (rectangle[0], rectangle[1])

            print("Adding to stitched image with offset ({0}, {1})".format(offset[0], offset[1]))
            part = part + 1
            previous = rectangle
        print("Finishing chrome full page screenshot workaround...")
        return True


    # Parse the 1688 list page
    def analysis1688Page(soup):
        info_tag_list = soup.select('.sm-offer-item')
        now_page_info_list = []
        for info_tag in info_tag_list:
            skc = info_tag.attrs['trace-obj_value']
            a_tag_list = info_tag.select('a')
            price_tag_lsit = info_tag.select('span.sm-offer-priceNum')
            # print(info_tag)
            img_tag = a_tag_list[0].select('img')[0]

            shop_tag = a_tag_list[2]
            desc = img_tag.attrs['alt']
            url = 'https://detail.1688.com/offer/{0}.html'.format(skc)
            shop_name = shop_tag.text
            shop_url = shop_tag.attrs['href']

            price = '无'  # '无' means "none"; used when no price tag is found
            if len(price_tag_lsit) > 0:
                price_tag = price_tag_lsit[0]
                price = price_tag.attrs['title']
            print({'shop_name': shop_name, 'shop_url': shop_url, 'desc': desc, 'url': url, 'price': price})
            now_page_info_list.append(
                {'shop_name': shop_name, 'shop_url': shop_url, 'desc': desc, 'url': url, 'price': price})
        return now_page_info_list


    # Persist the data to a spreadsheet
    def exportToExcel(heads, task_done, path, filename):
        if not os.path.exists(path):
            os.makedirs(path)
        task_xls = xlwt.Workbook(encoding='utf-8')
        task_sheet1 = task_xls.add_sheet('sheet1')
        # Header row
        header_allign = xlwt.Alignment()
        header_allign.horz = xlwt.Alignment.HORZ_CENTER
        header_style = xlwt.XFStyle()
        header_style.alignment = header_allign
        for i in range(len(heads)):
            task_sheet1.col(i).width = 12000
            task_sheet1.write(0, i, heads[i], header_style)
        # Data rows
        for i in range(len(task_done)):
            for j in range(len(heads)):
                task_sheet1.write(i + 1, j, task_done[i][heads[j]])
        print(os.path.join(path, filename))
        task_xls.save(os.path.join(path, filename))
        return filename


    # Get the links that have already been crawled
    def getDoneUrl(path):
        done_url = []
        with open(path, 'r', encoding="utf-8") as f:
            url_list = f.readlines()
            for url in url_list:
                # One URL per line; strip the trailing newline
                done_url.append(url.rstrip('\n'))
            print(done_url)
        return done_url


    # Record a crawled url
    def addDoneUrl(path, content):
        try:
            with open(path, 'a+', encoding="utf-8") as f:
                f.write(content + '\n')
        except Exception as e:
            print(e)


    # Merge all the data
    def connectToOne(dir, to_dir, out_file_name):
        excel_df = pd.DataFrame()
        for file in os.listdir(dir):
            if file.endswith('.xlsx'):
                print("file:", file)
                excel_df = excel_df.append(
                    pd.read_excel(os.path.join(dir, file), dtype={'url': str}, ))
        print('Start merging')
        excel_df['currency'] = '$'
        writer = pd.ExcelWriter(os.path.join(to_dir, out_file_name), engine='xlsxwriter',
                                options={'strings_to_urls': False})

        excel_df.to_excel(writer, index=False)
        writer.close()
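To crawl a different category, the `keywords` value in `page_url` has to be replaced. The sample value `%C5%B7%C3%C0%C5%AE%D7%B0` decodes to 欧美女装 (the `cate_name` used above) when read as GBK rather than UTF-8, so a new keyword presumably has to be encoded the same way. The sketch below builds a list-page URL on that assumption. `build_search_url` is a hypothetical helper, and the other query parameters are simply copied from the sample URL.

```python
from urllib.parse import quote, unquote

# Search endpoint copied from the sample URL in the script above.
SEARCH_BASE = 'https://s.1688.com/selloffer/offer_search.htm'


def build_search_url(keyword, page):
    """Hypothetical helper: build a list-page URL shaped like the sample one.

    Assumption: the site expects the keyword percent-encoded as GBK,
    because that is how the sample URL encodes it.
    """
    encoded = quote(keyword, encoding='gbk')
    return '{0}?keywords={1}&n=y&netType=1%2C11%2C16&beginPage={2}#sm-filtbar'.format(
        SEARCH_BASE, encoded, page)


# Decode the sample keyword, then rebuild the first page URL from it.
sample_keyword = unquote('%C5%B7%C3%C0%C5%AE%D7%B0', encoding='gbk')
print(sample_keyword)
print(build_search_url(sample_keyword, 1))
```

Passing the keyword as plain text and encoding it in one place avoids hand-editing percent-escaped strings in `need_url_list`.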