1. Under the install directory, create one config directory per instance so that several Redis servers can run on each machine.

cd /usr/local/redis

##Create the config directories (-p also creates missing parent directories)

mkdir -p redis_cluster/6370 redis_cluster/6371 redis_cluster/6372 ## on the second machine (192.168.2.24): 6373 6374 6375

2. Copy redis.conf into each of these directories.

cp /usr/local/redis/conf/redis.conf /usr/local/redis/redis_cluster/6370/ ##repeat for every port directory
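Steps 1 and 2 can also be scripted. The following is a minimal Python sketch. It assumes the template sits at `/usr/local/redis/conf/redis.conf`, as in the `cp` command above, and that you pass in the port list for each machine. `prepare_dirs` is a hypothetical helper and is not part of Redis.

```python
from __future__ import annotations

import shutil
from pathlib import Path


def prepare_dirs(base: Path, template: Path, ports: list[int]) -> list[Path]:
    """Create base/redis_cluster/<port>/ and copy the template redis.conf into each.

    Hypothetical helper: equivalent to the mkdir -p and cp commands above.
    """
    copied = []
    for port in ports:
        node_dir = base / "redis_cluster" / str(port)
        node_dir.mkdir(parents=True, exist_ok=True)  # same effect as mkdir -p
        target = node_dir / "redis.conf"
        shutil.copy(template, target)
        copied.append(target)
    return copied
```

On the first machine you would call `prepare_dirs(Path("/usr/local/redis"), Path("/usr/local/redis/conf/redis.conf"), [6370, 6371, 6372])`. On 192.168.2.24, pass `[6373, 6374, 6375]` instead.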

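3. Edit the redis.conf in each port directory. The values below are for 6370; change the port-specific values for the other instances.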

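##Port: 6370, 6371, 6372 (one per instance)

port 6370

##The default is 127.0.0.1. Change it to an IP the other machine can reach (192.168.2.64 here); otherwise the nodes cannot reach each other's ports and the cluster cannot be created

bind 192.168.2.64

##Run Redis in the background

daemonize yes

##pid file, one per instance: redis_6370.pid, redis_6371.pid, redis_6372.pid

pidfile /var/run/redis_6370.pid

##Enable cluster mode (uncomment this line by removing the leading #)

cluster-enabled yes

##Cluster state file, generated automatically on first start, one per instance: nodes_6370.conf, nodes_6371.conf, nodes_6372.conf

cluster-config-file nodes_6370.conf

##Node timeout in milliseconds (default 15000, i.e. 15 seconds); adjust as needed

cluster-node-timeout 15000

##Enable AOF persistence if needed; every write operation is appended to the log

appendonly yes

##RDB file name used for persistence

dbfilename dump-6370.rdb

##Password

requirepass 123456

##Password a replica uses to authenticate with its master; use the same password on every node

masterauth 123456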
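Only the port-dependent values change from one instance to the next. As a result, the directives can be generated rather than edited by hand. The following is a sketch that assumes the same IP, password, and file names as above. `render_conf` is a hypothetical helper.

Note that redis.conf does not accept trailing comments, so none are emitted. When a directive appears more than once in redis.conf, the last occurrence wins, so you could append this text to the end of the copied file.

```python
def render_conf(port: int, bind_ip: str, password: str) -> str:
    """Return the per-instance cluster directives from step 3 (hypothetical helper)."""
    lines = [
        f"port {port}",
        f"bind {bind_ip}",
        "daemonize yes",
        f"pidfile /var/run/redis_{port}.pid",
        "cluster-enabled yes",
        f"cluster-config-file nodes_{port}.conf",
        "cluster-node-timeout 15000",
        "appendonly yes",
        f"dbfilename dump-{port}.rdb",
        f"requirepass {password}",
        f"masterauth {password}",
    ]
    return "\n".join(lines) + "\n"
```

For example, `render_conf(6371, "192.168.2.64", "123456")` yields the block for the second instance on the first machine.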

4. Start all the nodes on both servers.

##First machine (192.168.2.64)

/usr/local/redis/bin/redis-server /usr/local/redis/redis_cluster/6370/redis.conf

/usr/local/redis/bin/redis-server /usr/local/redis/redis_cluster/6371/redis.conf

/usr/local/redis/bin/redis-server /usr/local/redis/redis_cluster/6372/redis.conf

##Second machine (192.168.2.24)

/usr/local/redis/bin/redis-server /usr/local/redis/redis_cluster/6373/redis.conf

/usr/local/redis/bin/redis-server /usr/local/redis/redis_cluster/6374/redis.conf

/usr/local/redis/bin/redis-server /usr/local/redis/redis_cluster/6375/redis.conf
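The commands in step 4 can be generated per machine. The following is a small Python sketch that assumes the binary and directory paths used above; `start_commands` and `start_all` are hypothetical helpers. With `daemonize yes`, the parent `redis-server` process exits as soon as it forks into the background. For that reason, `check=True` alone does not prove the instance is up, and you should still verify it as in step 5.

```python
import subprocess

REDIS_SERVER = "/usr/local/redis/bin/redis-server"   # path from step 4
CLUSTER_DIR = "/usr/local/redis/redis_cluster"       # directory from step 1


def start_commands(ports):
    """Return one argv list per port, mirroring the commands in step 4."""
    return [[REDIS_SERVER, f"{CLUSTER_DIR}/{port}/redis.conf"] for port in ports]


def start_all(ports):
    """Launch every instance; each call returns once redis-server has daemonized."""
    for argv in start_commands(ports):
        subprocess.run(argv, check=True)
```

On 192.168.2.24 this would be `start_all([6373, 6374, 6375])`.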

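5. Check that every instance started successfully. If the configuration is wrong, for example because of a port conflict, the instance fails to start. Because it runs in the background, nothing is printed to the terminal.

The output below shows that besides 6371, port 16371 is also listening. Each cluster node opens a second port, the cluster bus, at its client port + 10000, so open both ports when configuring the firewall.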

[root@ora redis_cluster]# ps -ef | grep redis

root 14963 1 0 13:30 ? 00:00:01 ./bin/redis-server 192.168.2.64:6370 [cluster]

root 14969 1 0 13:30 ? 00:00:01 ./bin/redis-server 192.168.2.64:6371 [cluster]

root 15044 1 0 13:34 ? 00:00:01 ./bin/redis-server 192.168.2.64:6372 [cluster]

root 15514 14554 0 14:04 pts/0 00:00:00 grep redis

[root@ora redis_cluster]# netstat -tnlp| grep redis

tcp 0 0 127.0.0.1:16370 0.0.0.0:* LISTEN 14963/./bin/redis-s

tcp 0 0 192.168.2.64:16370 0.0.0.0:* LISTEN 14963/./bin/redis-s

tcp 0 0 127.0.0.1:16371 0.0.0.0:* LISTEN 14969/./bin/redis-s

tcp 0 0 192.168.2.64:16371 0.0.0.0:* LISTEN 14969/./bin/redis-s

tcp 0 0 127.0.0.1:16372 0.0.0.0:* LISTEN 15044/./bin/redis-s

tcp 0 0 192.168.2.64:16372 0.0.0.0:* LISTEN 15044/./bin/redis-s

tcp 0 0 127.0.0.1:6370 0.0.0.0:* LISTEN 14963/./bin/redis-s

tcp 0 0 192.168.2.64:6370 0.0.0.0:* LISTEN 14963/./bin/redis-s

tcp 0 0 127.0.0.1:6371 0.0.0.0:* LISTEN 14969/./bin/redis-s

tcp 0 0 192.168.2.64:6371 0.0.0.0:* LISTEN 14969/./bin/redis-s

tcp 0 0 127.0.0.1:6372 0.0.0.0:* LISTEN 15044/./bin/redis-s

tcp 0 0 192.168.2.64:6372 0.0.0.0:* LISTEN 15044/./bin/redis-s

The other machine (192.168.2.24):

[root@project-deve redis]# ps -ef |grep redis

root 26281 1 0 14:05 ? 00:00:00 ./bin/redis-server 192.168.2.24:6373 [cluster]

root 26287 1 0 14:05 ? 00:00:00 ./bin/redis-server 192.168.2.24:6374 [cluster]

root 26293 1 0 14:05 ? 00:00:00 ./bin/redis-server 192.168.2.24:6375 [cluster]

root 26299 25341 0 14:05 pts/0 00:00:00 grep --color=auto redis

[root@project-deve redis]# netstat -tnlp | grep redis

tcp 0 0 127.0.0.1:6375 0.0.0.0:* LISTEN 26293/./bin/redis-s

tcp 0 0 192.168.2.24:6375 0.0.0.0:* LISTEN 26293/./bin/redis-s

tcp 0 0 127.0.0.1:16373 0.0.0.0:* LISTEN 26281/./bin/redis-s

tcp 0 0 192.168.2.24:16373 0.0.0.0:* LISTEN 26281/./bin/redis-s

tcp 0 0 127.0.0.1:16374 0.0.0.0:* LISTEN 26287/./bin/redis-s

tcp 0 0 192.168.2.24:16374 0.0.0.0:* LISTEN 26287/./bin/redis-s

tcp 0 0 127.0.0.1:16375 0.0.0.0:* LISTEN 26293/./bin/redis-s

tcp 0 0 192.168.2.24:16375 0.0.0.0:* LISTEN 26293/./bin/redis-s

tcp 0 0 127.0.0.1:6373 0.0.0.0:* LISTEN 26281/./bin/redis-s

tcp 0 0 192.168.2.24:6373 0.0.0.0:* LISTEN 26281/./bin/redis-s

tcp 0 0 127.0.0.1:6374 0.0.0.0:* LISTEN 26287/./bin/redis-s

tcp 0 0 192.168.2.24:6374 0.0.0.0:* LISTEN 26287/./bin/redis-s

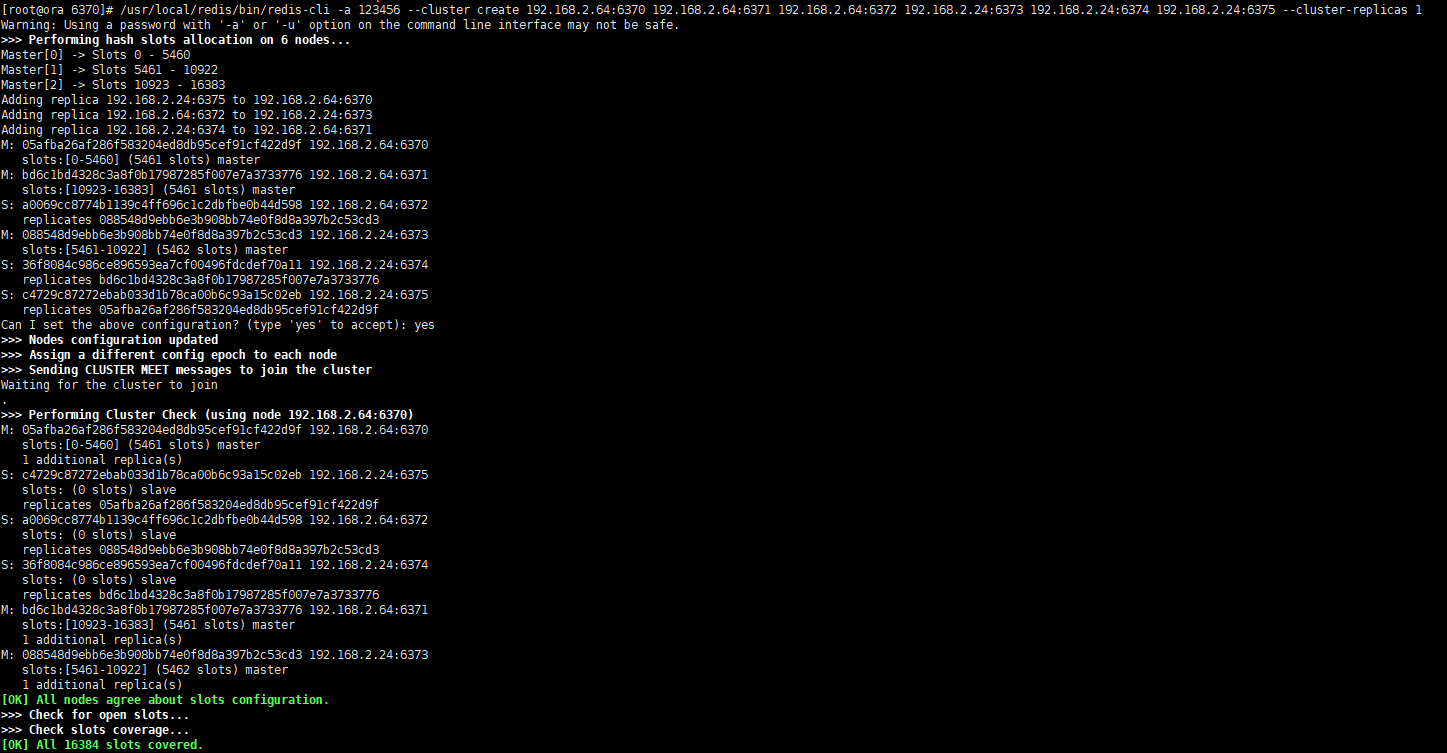
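The two checks from step 5 can be expressed in a few lines of Python: which ports the firewall must allow, and whether a port is actually accepting connections. This is a sketch that assumes the default cluster bus offset of +10000 seen in the `netstat` output above. `ports_to_open` and `is_listening` are hypothetical helpers; the probe only tests TCP reachability and does not speak the Redis protocol.

```python
import socket

BUS_PORT_OFFSET = 10000  # default: cluster bus port = client port + 10000


def ports_to_open(client_ports):
    """Client ports plus their cluster bus ports, e.g. 6371 -> 6371 and 16371."""
    return sorted(set(client_ports) | {p + BUS_PORT_OFFSET for p in client_ports})


def is_listening(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For the first machine, `ports_to_open([6370, 6371, 6372])` gives `[6370, 6371, 6372, 16370, 16371, 16372]`. Running `is_listening("192.168.2.24", 16373)` from 192.168.2.64 checks that the firewall lets the bus traffic through.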

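6. Create the cluster. Since Redis 5.0, cluster management is built into redis-cli (`redis-cli --cluster`), so Ruby no longer needs to be installed. Versions before 5.0 use the Ruby script redis-trib.rb instead, and its command syntax differs.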

##If a password is set, add -a 123456

/usr/local/redis/bin/redis-cli -a 123456 --cluster create 192.168.2.64:6370 192.168.2.64:6371 192.168.2.64:6372 192.168.2.24:6373 192.168.2.24:6374 192.168.2.24:6375 --cluster-replicas 1

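#Note: --cluster-replicas 1 gives every master one replica, so the six nodes listed here become three masters and three replicas. redis-cli does not simply make the first three nodes masters; it spreads the masters across hosts. That is why the `cluster nodes` output in step 8 shows 6370, 6373 and 6371 as masters, with 6372, 6374 and 6375 as replicas.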
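The master choice seen in step 8 can be reproduced by interleaving the nodes by host and taking the first N. The following is a simplified model reconstructed from that output, not redis-cli's actual source. It covers only which nodes become masters; redis-cli additionally reshuffles replicas to keep them off their master's host, and that step is not modeled. `pick_masters` is a hypothetical helper.

```python
def pick_masters(nodes, replicas):
    """Interleave ip:port addresses by host, then take the first N as masters.

    Simplified model (assumption): returns (masters, remaining_nodes).
    """
    by_host = {}  # dict keeps first-seen host order
    for addr in nodes:
        host = addr.rsplit(":", 1)[0]
        by_host.setdefault(host, []).append(addr)
    interleaved = []
    while any(by_host.values()):
        for queue in by_host.values():
            if queue:
                interleaved.append(queue.pop(0))
    n_masters = len(nodes) // (replicas + 1)
    return interleaved[:n_masters], interleaved[n_masters:]
```

With the six addresses from the `create` command and `replicas=1`, the masters come out as 192.168.2.64:6370, 192.168.2.24:6373 and 192.168.2.64:6371, matching the slot owners in step 8.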

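##In production, never put all masters on the same host. A replica can only be promoted with votes from a majority of the masters. If more than half of the masters go down at once (for example, the host loses power), no election can succeed, so the cluster enters the fail state and becomes unavailable whether or not replicas exist.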
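The majority rule above can be checked against a placement before going to production. This sketch assumes that only masters serving slots count toward the majority and that a whole host fails at once. `majority_survives` is a hypothetical helper.

```python
from collections import Counter


def majority_survives(master_hosts, failed_host):
    """True if the masters left after failed_host goes down are still a majority.

    master_hosts maps each slot-serving master to its host. Promoting a replica
    needs votes from more than half of these masters.
    """
    total = len(master_hosts)
    lost = Counter(master_hosts.values())[failed_host]
    return total - lost > total // 2
```

Apply it to the layout redis-cli actually produced here: masters 6370 and 6371 on 192.168.2.64, and 6373 on 192.168.2.24. Losing 192.168.2.64 takes out two of the three masters, so `majority_survives(..., "192.168.2.64")` returns `False`, while losing 192.168.2.24 is survivable.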

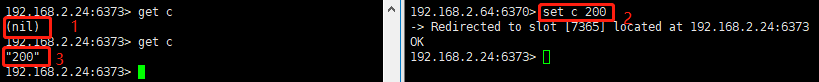

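7. Output like the screenshot above means the Redis cluster has been created. Next, log in to the cluster. "Logging in to the cluster" simply means connecting to any node with the -c flag.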

## -h ip, -p port, -a password. -c connects in cluster mode and follows redirects to other nodes; without it you are talking to a single node only

/usr/local/redis/bin/redis-cli -h 192.168.2.24 -p 6373 -a 123456 -c

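While testing, I noticed an interesting behavior. Writing c=200 on 6370 reported that the key belongs to slot 7365, which is served by the 6373 instance on the .24 server. The client switched to that node automatically, and reading the key back there returned the correct value.

This is how Redis Cluster shards data without a central coordinator:

- Every key hashes to one of 16384 slots.
- Each slot is owned by exactly one master; 5461-10922 belongs to 6373 here.
- With -c, redis-cli follows the MOVED redirect to the owning node.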
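The slot number can be computed locally. Redis Cluster maps a key to CRC16(key) mod 16384, using the CRC16-CCITT (XModem) variant. The following is a minimal Python sketch that handles plain keys only. It ignores `{...}` hash tags, which change which part of the key is hashed.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16-CCITT (XModem): polynomial 0x1021, initial value 0, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Hash slot of a key without {hash tags}: CRC16(key) mod 16384."""
    return crc16_xmodem(key.encode()) % 16384
```

`key_slot("c")` gives 7365, the slot reported above. That slot falls in 6373's range 5461-10922, which is why the client was redirected there.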

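8. Simulate a master failure. In the output below, 6373 (master) and 6372 (its replica) form a master/replica pair. The 192.168.2.34 nodes were added to the cluster later and do not affect this demonstration.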

192.168.2.64:6370> cluster nodes

c4729c87272ebab033d1b78ca00b6c93a15c02eb 192.168.2.24:6375@16375 slave 05afba26af286f583204ed8db95cef91cf422d9f 0 1634021664306 1 connected

05afba26af286f583204ed8db95cef91cf422d9f 192.168.2.64:6370@16370 myself,master - 0 1634021655000 1 connected 0-5460

36f8084c986ce896593ea7cf00496fdcdef70a11 192.168.2.24:6374@16374 slave bd6c1bd4328c3a8f0b17987285f007e7a3733776 0 1634021662301 2 connected

bd6c1bd4328c3a8f0b17987285f007e7a3733776 192.168.2.64:6371@16371 master - 0 1634021663304 2 connected 10923-16383

2f899821dbfe2e2d5aa2c22e9e023eb9a9f127f3 192.168.2.34:6378@16378 master - 0 1634021659000 8 connected

a0069cc8774b1139c4ff696c1c2dbfbe0b44d598 192.168.2.64:6372@16372 slave 088548d9ebb6e3b908bb74e0f8d8a397b2c53cd3 0 1634021661297 4 connected

0936b30e022b7fad6ddb3ce6aee1b96b8c1d1c6b 192.168.2.34:6376@16376 slave bd6c1bd4328c3a8f0b17987285f007e7a3733776 0 1634021665309 2 connected

088548d9ebb6e3b908bb74e0f8d8a397b2c53cd3 192.168.2.24:6373@16373 master - 0 1634021663000 4 connected 5461-10922

e954db69f71beb9426e01325064de520d156a67a 192.168.2.34:6377@16377 master - 0 1634021661000 0 connected
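Each `cluster nodes` line has the fields id, ip:port@bus-port, flags, master id (`-` for a master), ping-sent, pong-received, config epoch, link state, and then the slot ranges. The sketch below turns that text into a master-to-replicas map, which makes pairs such as 6373/6372 easy to see. `parse_cluster_nodes` and `replicas_of` are hypothetical helpers; the sketch assumes the address format shown above.

```python
def parse_cluster_nodes(text):
    """Parse `cluster nodes` output, one node per line, into dicts."""
    nodes = []
    for line in text.strip().splitlines():
        fields = line.split()
        if len(fields) < 8:
            continue  # skip blank or truncated lines
        node_id, addr, flags, master_id = fields[:4]
        nodes.append({
            "id": node_id,
            "addr": addr.split("@")[0],   # drop the @cluster-bus-port suffix
            "flags": flags.split(","),    # e.g. ["myself", "master"]
            "master": None if master_id == "-" else master_id,
            "link": fields[7],            # connected / disconnected
            "slots": fields[8:],          # e.g. ["5461-10922"]
        })
    return nodes


def replicas_of(nodes):
    """Map each master's address to the addresses of its replicas."""
    addr_by_id = {n["id"]: n["addr"] for n in nodes}
    result = {n["addr"]: [] for n in nodes if "master" in n["flags"]}
    for n in nodes:
        if n["master"] is not None:
            master_addr = addr_by_id.get(n["master"], n["master"])
            result.setdefault(master_addr, []).append(n["addr"])
    return result
```

Feeding it the output above maps 192.168.2.24:6373 to `["192.168.2.64:6372"]`.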

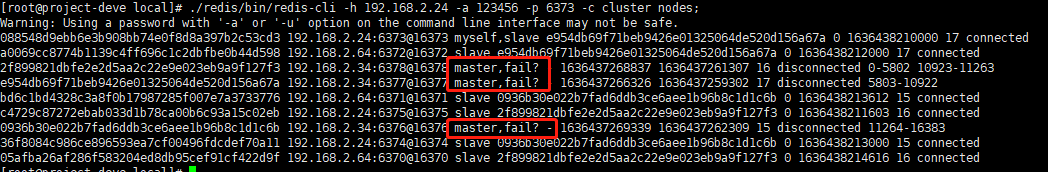

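9. Stop 6373 and check the cluster state again. 6373 is now marked master,fail, and 6372 has been promoted to master by an automatic election and has taken over slots 5461-10922.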

192.168.2.64:6370> cluster nodes

c4729c87272ebab033d1b78ca00b6c93a15c02eb 192.168.2.24:6375@16375 slave 05afba26af286f583204ed8db95cef91cf422d9f 0 1634022069602 1 connected

05afba26af286f583204ed8db95cef91cf422d9f 192.168.2.64:6370@16370 myself,master - 0 1634022067000 1 connected 0-5460

36f8084c986ce896593ea7cf00496fdcdef70a11 192.168.2.24:6374@16374 slave bd6c1bd4328c3a8f0b17987285f007e7a3733776 0 1634022065000 2 connected

bd6c1bd4328c3a8f0b17987285f007e7a3733776 192.168.2.64:6371@16371 master - 0 1634022067591 2 connected 10923-16383

2f899821dbfe2e2d5aa2c22e9e023eb9a9f127f3 192.168.2.34:6378@16378 master - 0 1634022069602 8 connected

a0069cc8774b1139c4ff696c1c2dbfbe0b44d598 192.168.2.64:6372@16372 master - 0 1634022065582 9 connected 5461-10922

0936b30e022b7fad6ddb3ce6aee1b96b8c1d1c6b 192.168.2.34:6376@16376 slave a0069cc8774b1139c4ff696c1c2dbfbe0b44d598 0 1634022068596 9 connected

088548d9ebb6e3b908bb74e0f8d8a397b2c53cd3 192.168.2.24:6373@16373 master,fail - 1634021993275 1634021987000 4 disconnected

e954db69f71beb9426e01325064de520d156a67a 192.168.2.34:6377@16377 master - 0 1634022066587 0 connected

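10. Restart 6373. It rejoins the cluster as a replica of 6372.

In other words, when a master goes down, its replica becomes the new master and keeps serving requests. If the old master comes back, it becomes a replica of the new master.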

192.168.2.64:6370> cluster nodes

c4729c87272ebab033d1b78ca00b6c93a15c02eb 192.168.2.24:6375@16375 slave 05afba26af286f583204ed8db95cef91cf422d9f 0 1634022235270 1 connected

05afba26af286f583204ed8db95cef91cf422d9f 192.168.2.64:6370@16370 myself,master - 0 1634022232000 1 connected 0-5460

36f8084c986ce896593ea7cf00496fdcdef70a11 192.168.2.24:6374@16374 slave bd6c1bd4328c3a8f0b17987285f007e7a3733776 0 1634022232261 2 connected

bd6c1bd4328c3a8f0b17987285f007e7a3733776 192.168.2.64:6371@16371 master - 0 1634022233264 2 connected 10923-16383

2f899821dbfe2e2d5aa2c22e9e023eb9a9f127f3 192.168.2.34:6378@16378 master - 0 1634022230000 8 connected

a0069cc8774b1139c4ff696c1c2dbfbe0b44d598 192.168.2.64:6372@16372 master - 0 1634022230255 9 connected 5461-10922

0936b30e022b7fad6ddb3ce6aee1b96b8c1d1c6b 192.168.2.34:6376@16376 slave a0069cc8774b1139c4ff696c1c2dbfbe0b44d598 0 1634022232762 9 connected

088548d9ebb6e3b908bb74e0f8d8a397b2c53cd3 192.168.2.24:6373@16373 slave a0069cc8774b1139c4ff696c1c2dbfbe0b44d598 0 1634022235771 9 connected

e954db69f71beb9426e01325064de520d156a67a 192.168.2.34:6377@16377 master - 0 1634022234266 0 connected
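The role changes in steps 9 and 10 can be modeled as a simple mapping update. This is a deliberately simplified sketch. It promotes the first replica found, whereas real Redis lets replicas with the most up-to-date replication offset try first. It also records the failed node as a replica of the new master, which is what happens once that node restarts. `fail_over` is a hypothetical helper.

```python
def fail_over(roles, failed):
    """Simplified model of steps 9-10.

    roles maps node -> the master it replicates, or None if it is a master.
    Returns (new_roles, promoted_node).
    """
    replicas = [node for node, master in roles.items() if master == failed]
    if not replicas:
        raise ValueError(f"{failed} has no replica; its slots stay uncovered")
    promoted = replicas[0]  # real Redis ranks replicas by replication offset
    new_roles = {}
    for node, master in roles.items():
        if node == promoted:
            new_roles[node] = None          # the replica becomes a master
        elif node == failed or master == failed:
            new_roles[node] = promoted      # old master (after restart) and siblings follow it
        else:
            new_roles[node] = master
    return new_roles, promoted
```

Starting from the original layout and failing `"6373"`, the model promotes `"6372"` and makes `"6373"` its replica, as in the output above.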

## If a master holds no slots (none in the 0-16383 range), its replica will not be promoted when it goes down; this still needs to be verified.

## Slots are assigned only to masters. Replicas are standbys that take over a master's slots when it goes down.
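The three ranges in the output above (0-5460, 5461-10922, 10923-16383) come from splitting 16384 slots evenly across three masters. The sketch below reproduces that split and checks that every slot is owned. With the default `cluster-require-full-coverage yes`, the cluster stops serving queries when any slot is left uncovered.

This is an assumption-level sketch of an even split that happens to match this output, not necessarily redis-cli's exact code. `split_slots` and `fully_covered` are hypothetical helpers.

```python
TOTAL_SLOTS = 16384


def split_slots(n_masters):
    """Divide the 16384 slots into contiguous (first, last) ranges, one per master."""
    per_node = TOTAL_SLOTS / n_masters
    ranges, first, cursor = [], 0, 0.0
    for i in range(n_masters):
        if i == n_masters - 1:
            last = TOTAL_SLOTS - 1  # the last master takes whatever is left
        else:
            last = round(cursor + per_node - 1)
        ranges.append((first, last))
        first = last + 1
        cursor += per_node
    return ranges


def fully_covered(ranges):
    """True if the master ranges together cover slots 0..16383."""
    covered = set()
    for first, last in ranges:
        covered.update(range(first, last + 1))
    return len(covered) == TOTAL_SLOTS
```

`split_slots(3)` returns `[(0, 5460), (5461, 10922), (10923, 16383)]`. Dropping one master's range, as would happen if a master with no replica went down, makes `fully_covered` return `False`.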