爬取思路

- 用到的第三方库文件

lxml,requests,fake_agent - 用fake_agent里的UserAgent修饰爬虫

- 用requests进行基本的请求

- 用lxml进行html的分析

- 用xpath进行网页元素的选择

爬取的一些问题

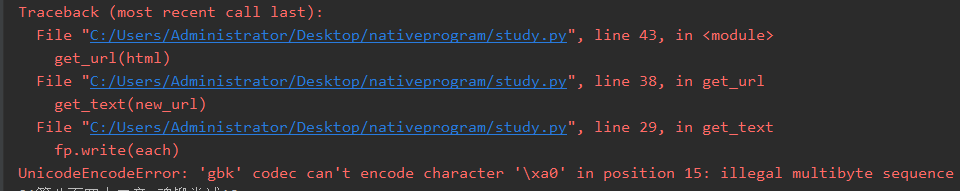

1.编码问题这两个编码无法转换成utf-8

- UnicodeEncodeError: ‘gbk’ codec can’t encode character ‘xa0’ in position 15: illegal multibyte sequence

- UnicodeEncodeError: ‘gbk’ codec can’t encode character ‘xufeff’ in position 15: illegal multibyte sequence

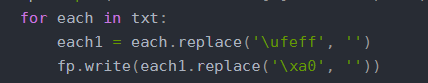

- 解决:将这两个提前换成空字符

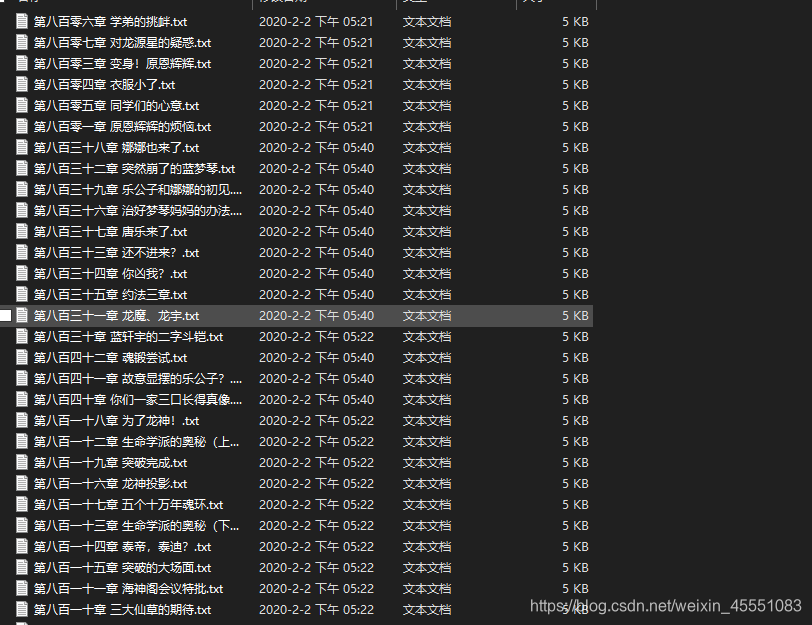

2.要提前建好一个txts的文件夹

全部源码

from lxml import etree

import requests

from fake_useragent import UserAgent

url1 = 'https://www.ibiquge.net/66_66791/'

url2 = 'https://www.ibiquge.net'

# 爬取HTML的函数

def get_html(url):

ua = UserAgent()

kv = {'user-agent': ua.random}

re = requests.get(url, headers=kv)

re.encoding = 'utf-8'

htm1 = re.text

return htm1

# 根据url获得文章并保存的函数

def get_text(url):

html = get_html(url)

selector = etree.HTML(html)

title = selector.xpath('//*[@id="main"]/div/div/div[2]/h1/text()')

txt = selector.xpath('//*[@id="content"]/text()')

print(title)

fp = open('txts\' + title[0] + '.txt', 'w')

for each in txt:

each1 = each.replace('ufeff', '')

fp.write(each1.replace('xa0', ''))

fp.close()

def get_url(html):

selector = etree.HTML(html)

url_list = selector.xpath('//*[@id="list"]/dl/dd/a/@href')

for url in url_list:

new_url = url2 + url

get_text(new_url)

if __name__ == '__main__':

html = get_html(url1)

get_url(html)

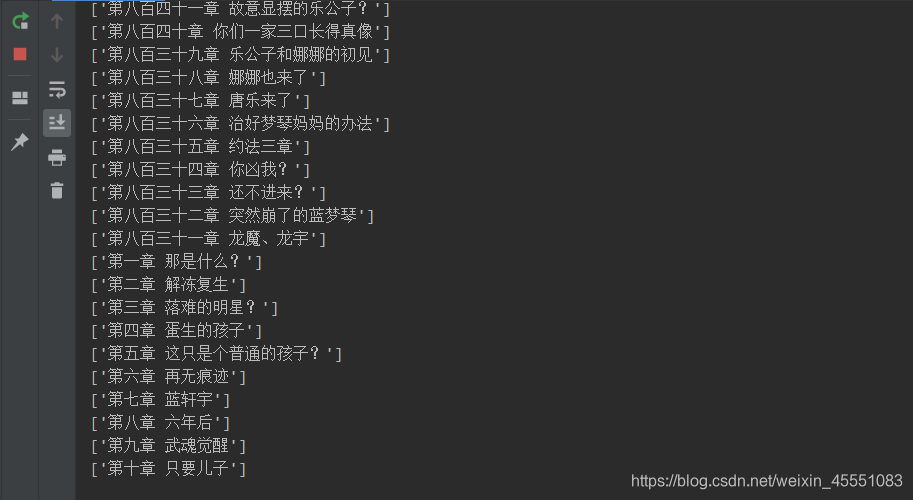

爬取过程

爬取结果

如有侵权,联系删除