1: Source code demo

package com.kpwong.consumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import java.util.Arrays;

import java.util.Properties;

public class MyConsumer {

public static void main(String[] args) {

Properties prop = new Properties();

prop.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,"hadoop202:9092");

//Automatically commit offsets

prop.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG,true);

prop.put(ConsumerConfig.AUTO_COMMIT_INTERVAL_MS_CONFIG,"1000");

prop.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringDeserializer");

prop.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringDeserializer");

//Consumer group

prop.put(ConsumerConfig.GROUP_ID_CONFIG,"bigdata");

//Create the consumer

KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(prop);

//Subscribe to the topic

consumer.subscribe(Arrays.asList("two"));

while (true)

{

//Fetch records (poll(long) is deprecated since Kafka 2.0; newer clients use poll(java.time.Duration))

ConsumerRecords<String, String> records = consumer.poll(100);

//Iterate over the records

for (ConsumerRecord<String, String> record : records) {

System.out.println("consumer : "+" partition:"+record.partition()+" key :"+record.key()+"------value :"+record.value());

}

}

// consumer.close();

}

}
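The `ConsumerConfig` constants used in the demo are plain string keys, so the same settings can also be written with literal keys, for example when they come from a `.properties` file. The following is a minimal sketch that uses only `java.util.Properties`; the class name `ConsumerProps` and the method `build` are hypothetical, and the host and group values are the ones from the demo.

```java
import java.util.Properties;

public class ConsumerProps {

    // Builds the same settings as the demo, using the literal keys
    // that the ConsumerConfig constants stand for.
    public static Properties build(String bootstrapServers, String groupId) {
        Properties prop = new Properties();
        prop.put("bootstrap.servers", bootstrapServers);  // BOOTSTRAP_SERVERS_CONFIG
        prop.put("enable.auto.commit", "true");           // ENABLE_AUTO_COMMIT_CONFIG
        prop.put("auto.commit.interval.ms", "1000");      // AUTO_COMMIT_INTERVAL_MS_CONFIG
        prop.put("key.deserializer",                      // KEY_DESERIALIZER_CLASS_CONFIG
                "org.apache.kafka.common.serialization.StringDeserializer");
        prop.put("value.deserializer",                    // VALUE_DESERIALIZER_CLASS_CONFIG
                "org.apache.kafka.common.serialization.StringDeserializer");
        prop.put("group.id", groupId);                    // GROUP_ID_CONFIG
        return prop;
    }

    public static void main(String[] args) {
        Properties prop = build("hadoop202:9092", "bigdata");
        // Properties does not guarantee iteration order, so the lines may print in any order.
        prop.forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```

The resulting `Properties` object can be passed to `new KafkaConsumer<String, String>(prop)` in the same way as in the demo.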

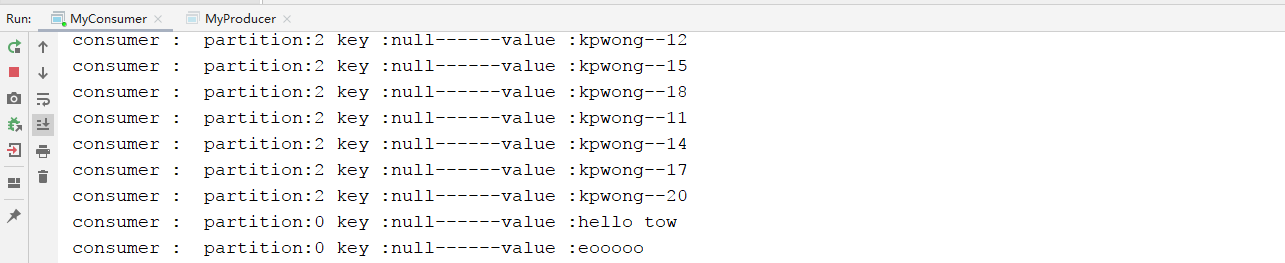

2: Run result:

3: Reproducing the command-line --from-beginning behavior

//--from-beginning

prop.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG,"earliest");

//Consumer group

prop.put(ConsumerConfig.GROUP_ID_CONFIG,"bigdata2");

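Note: the group name must be changed for this to take effect. auto.offset.reset is only consulted when the group has no committed offset for a partition (or the committed offset is out of range), and the group bigdata will already have committed offsets if the demo in step 1 was run.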
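Why a new group name is needed can be sketched with a simplified model of how a consumer chooses its starting offset for one partition. This is an illustration only, not Kafka's actual implementation: the class `OffsetResetSketch`, the method `startOffset`, and the offset values are hypothetical, and the `none` policy and out-of-range handling are left out.

```java
import java.util.HashMap;
import java.util.Map;

public class OffsetResetSketch {

    // Simplified model: a committed offset always wins; auto.offset.reset
    // is only used when the group has no committed offset.
    public static long startOffset(Long committed, String autoOffsetReset,
                                   long logStart, long logEnd) {
        if (committed != null) {
            return committed;          // resume where the group left off
        }
        if ("earliest".equals(autoOffsetReset)) {
            return logStart;           // read from the beginning of the log
        }
        return logEnd;                 // "latest": only new records
    }

    public static void main(String[] args) {
        // Hypothetical state: group "bigdata" has already committed offset 42,
        // group "bigdata2" has never consumed this partition.
        Map<String, Long> committedByGroup = new HashMap<>();
        committedByGroup.put("bigdata", 42L);
        long logStart = 0L;
        long logEnd = 42L;

        // Old group: "earliest" is ignored because a committed offset exists.
        System.out.println(startOffset(committedByGroup.get("bigdata"), "earliest", logStart, logEnd));  // prints 42
        // New group: no committed offset, so "earliest" applies.
        System.out.println(startOffset(committedByGroup.get("bigdata2"), "earliest", logStart, logEnd)); // prints 0
    }
}
```

In this model, setting `earliest` on the existing group changes nothing, while the same setting on the fresh group `bigdata2` starts from the beginning of the log, which matches the --from-beginning behavior.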

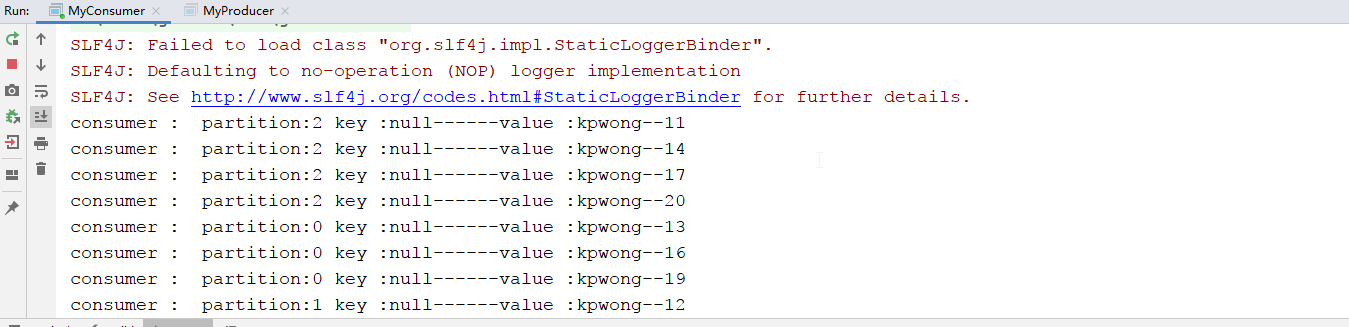

Result: