A Spark client submits a job to YARN; the job fails in the background and the application reports FinalStatus: UNDEFINED.

./spark-submit --class org.apache.spark.examples.SparkPi --conf spark.eventLog.dir=hdfs://jenkintest/tmp/spark01 --master yarn --deploy-mode client --driver-memory 1g --principal sparkclient01 --keytab $SPARK_HOME/sparkclient01.keytab --executor-memory 1g --executor-cores 1 $SPARK_HOME/examples/jars/spark-examples*.jar 10

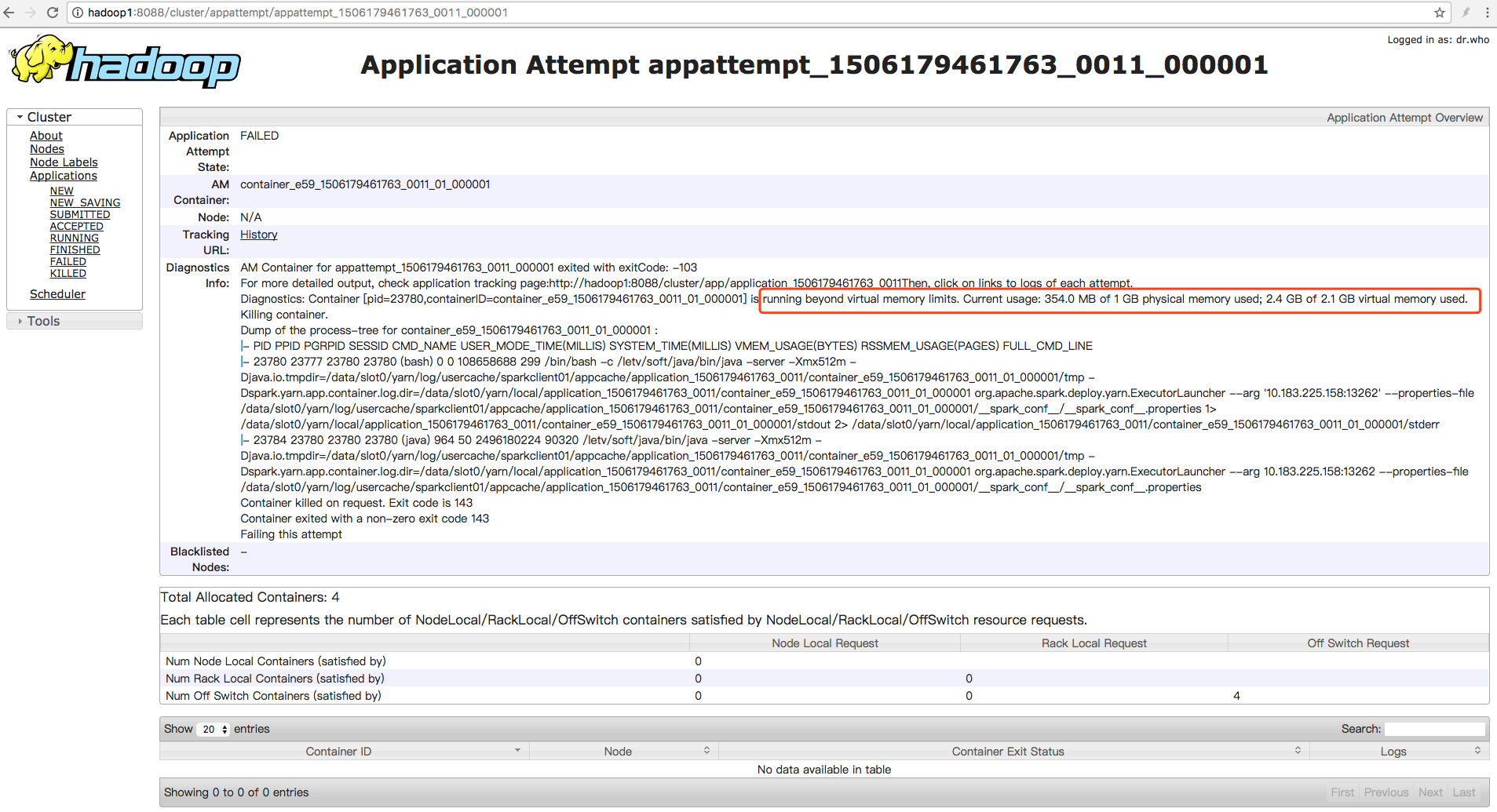

The error is as follows:

AM Container for appattempt_1506179461763_0011_000001 exited with exitCode: -103
For more detailed output, check application tracking page:http://hadoop1:8088/cluster/app/application_1506179461763_0011 Then, click on links to logs of each attempt.
Diagnostics: Container [pid=23780,containerID=container_e59_1506179461763_0011_01_000001] is running beyond virtual memory limits. Current usage: 354.0 MB of 1 GB physical memory used; 2.4 GB of 2.1 GB virtual memory used. Killing container.
Dump of the process-tree for container_e59_1506179461763_0011_01_000001 :
|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE
|- 23780 23777 23780 23780 (bash) 0 0 108658688 299 /bin/bash -c /xxx/soft/java/bin/java -server -Xmx512m -Djava.io.tmpdir=/data/slot0/yarn/log/usercache/sparkclient01/appcache/application_1506179461763_0011/container_e59_1506179461763_0011_01_000001/tmp -Dspark.yarn.app.container.log.dir=/data/slot0/yarn/local/application_1506179461763_0011/container_e59_1506179461763_0011_01_000001 org.apache.spark.deploy.yarn.ExecutorLauncher --arg '10.183.225.158:13262' --properties-file /data/slot0/yarn/log/usercache/sparkclient01/appcache/application_1506179461763_0011/container_e59_1506179461763_0011_01_000001/__spark_conf__/__spark_conf__.properties 1> /data/slot0/yarn/local/application_1506179461763_0011/container_e59_1506179461763_0011_01_000001/stdout 2> /data/slot0/yarn/local/application_1506179461763_0011/container_e59_1506179461763_0011_01_000001/stderr
|- 23784 23780 23780 23780 (java) 964 50 2496180224 90320 /xxx/soft/java/bin/java -server -Xmx512m -Djava.io.tmpdir=/data/slot0/yarn/log/usercache/sparkclient01/appcache/application_1506179461763_0011/container_e59_1506179461763_0011_01_000001/tmp -Dspark.yarn.app.container.log.dir=/data/slot0/yarn/local/application_1506179461763_0011/container_e59_1506179461763_0011_01_000001 org.apache.spark.deploy.yarn.ExecutorLauncher --arg 10.183.225.158:13262 --properties-file /data/slot0/yarn/log/usercache/sparkclient01/appcache/application_1506179461763_0011/container_e59_1506179461763_0011_01_000001/__spark_conf__/__spark_conf__.properties
Container killed on request. Exit code is 143
Container exited with a non-zero exit code 143
Failing this attempt

The cause is that the virtual memory used by the container (2.4 GB) exceeded the default limit (2.1 GB).

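Virtual memory limit formula: physical memory allocated to the container * yarn.nodemanager.vmem-pmem-ratio = virtual memory limit. The AM container here was allocated 1 GB, which equals the default yarn.scheduler.minimum-allocation-mb, so the limit works out to 1 GB * 2.1 = 2.1 GB.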

yarn.scheduler.minimum-allocation-mb: defaults to 1024 MB (1 GB)

yarn.nodemanager.vmem-pmem-ratio: defaults to 2.1

If the virtual memory a container uses exceeds this computed value, the NodeManager is triggered to kill it ("Killing container").

Here 2.4 GB was used, which exceeds the default computed limit of 2.1 GB.
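The arithmetic behind the kill decision can be sketched as below. This is only an illustration, assuming the limit is the container's physical allocation multiplied by the ratio; the function names `vmem_limit_mb` and `exceeds_vmem_limit` are hypothetical helpers, and the byte counts are copied from the VMEM_USAGE(BYTES) column of the process-tree dump above.

```python
# Values taken from the container diagnostics in the error message.
CONTAINER_PMEM_MB = 1024   # "1 GB physical memory" allocated to the AM container
VMEM_PMEM_RATIO = 2.1      # default yarn.nodemanager.vmem-pmem-ratio

# VMEM_USAGE(BYTES) of the two processes in the dump: bash and java.
PROCESS_VMEM_BYTES = [108658688, 2496180224]

MB = 1024 * 1024


def vmem_limit_mb(container_pmem_mb, ratio):
    """Virtual memory limit of a container in MB (hypothetical helper)."""
    return container_pmem_mb * ratio


def exceeds_vmem_limit(usage_bytes, container_pmem_mb, ratio):
    """True if the summed virtual memory of the process tree is over the limit."""
    used_mb = sum(usage_bytes) / MB
    return used_mb > vmem_limit_mb(container_pmem_mb, ratio)


limit_mb = vmem_limit_mb(CONTAINER_PMEM_MB, VMEM_PMEM_RATIO)
used_mb = sum(PROCESS_VMEM_BYTES) / MB

print(f"limit: {limit_mb / 1024:.1f} GB, used: {used_mb / 1024:.1f} GB")
# prints "limit: 2.1 GB, used: 2.4 GB"
print("exceeds limit:", exceeds_vmem_limit(PROCESS_VMEM_BYTES, CONTAINER_PMEM_MB, VMEM_PMEM_RATIO))
# prints "exceeds limit: True"
```

Almost all of the 2.4 GB comes from the java process, even though its heap is capped at 512 MB by -Xmx512m; virtual memory counts reserved address space, not just the heap in use.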

Modify the configuration as follows:

<!-- YARN resource allocation -->
<property>
  <name>yarn.scheduler.maximum-allocation-mb</name>
  <value>9216</value>
  <description>Maximum memory available to each task, in MB; default 8192 MB</description>
</property>
<property>
  <name>yarn.scheduler.minimum-allocation-mb</name>
  <value>4000</value>
  <description>Minimum memory available to each task, in MB</description>
</property>
<property>
  <name>yarn.nodemanager.vmem-pmem-ratio</name>
  <value>4.1</value>
</property>

Alternatively, the virtual memory check can be turned off (not recommended) by setting yarn.nodemanager.vmem-check-enabled to false.
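To sanity-check such a change before restarting anything, the properties can be read back and the resulting limit recomputed. The sketch below is a minimal example, not part of any Hadoop tooling: it parses an inline copy of the three properties (wrapped in a `<configuration>` root, as in yarn-site.xml) with Python's standard library, and `read_properties` and `vmem_limit_gb` are hypothetical helper names. It assumes, as before, that the limit is the container's physical allocation times the ratio.

```python
import xml.etree.ElementTree as ET

# Inline copy of the modified properties, wrapped in the usual root element.
YARN_SITE_FRAGMENT = """
<configuration>
  <property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>9216</value>
  </property>
  <property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>4000</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-pmem-ratio</name>
    <value>4.1</value>
  </property>
</configuration>
"""


def read_properties(xml_text):
    """Return {name: value} for every <property> element (hypothetical helper)."""
    root = ET.fromstring(xml_text.strip())
    return {
        prop.findtext("name").strip(): prop.findtext("value").strip()
        for prop in root.iter("property")
    }


def vmem_limit_gb(container_pmem_mb, ratio):
    """Virtual memory limit in GB for a container of the given size."""
    return container_pmem_mb * ratio / 1024


props = read_properties(YARN_SITE_FRAGMENT)
ratio = float(props["yarn.nodemanager.vmem-pmem-ratio"])
min_mb = int(props["yarn.scheduler.minimum-allocation-mb"])
max_mb = int(props["yarn.scheduler.maximum-allocation-mb"])

# The minimum allocation must not exceed the maximum allocation.
print("min <= max:", min_mb <= max_mb)

# Limit for a 1 GB container (the size seen in the logs) under the new ratio.
print(f"1 GB container limit: {vmem_limit_gb(1024, ratio):.1f} GB")
```

With a ratio of 4.1, a 1 GB container gets a 4.1 GB virtual memory limit, which leaves room for the 2.4 GB observed in the failing attempt.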

After applying the configuration and restarting the NodeManager, the job ran with status SUCCESS. The NodeManager log prints:

INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Memory usage of ProcessTree 23278 for container-id container_e59_1506179461763_0013_01_000001: 268.5 MB of 1 GB physical memory used; 2.4 GB of 4.1 GB virtual memory used
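When watching many containers, it can help to pull the numbers out of these monitor lines automatically. The following is a rough sketch that assumes the log line keeps exactly the wording shown above; the regular expression and the `parse_memory_usage` helper are illustrative, not part of Hadoop.

```python
import re

LOG_LINE = (
    "Memory usage of ProcessTree 23278 for container-id "
    "container_e59_1506179461763_0013_01_000001: "
    "268.5 MB of 1 GB physical memory used; "
    "2.4 GB of 4.1 GB virtual memory used"
)

# One "<number> <unit>" size, e.g. "268.5 MB" or "1 GB".
SIZE = r"([\d.]+) ([KMGT]?B)"
PATTERN = re.compile(
    rf"{SIZE} of {SIZE} physical memory used; {SIZE} of {SIZE} virtual memory used"
)

# Conversion factors from each unit to MB.
UNIT_TO_MB = {"B": 1 / 1024**2, "KB": 1 / 1024, "MB": 1, "GB": 1024, "TB": 1024**2}


def parse_memory_usage(line):
    """Return usage and limits in MB, or None if the line does not match."""
    match = PATTERN.search(line)
    if match is None:
        return None
    g = match.groups()
    keys = ["pmem_used_mb", "pmem_limit_mb", "vmem_used_mb", "vmem_limit_mb"]
    # Groups come in (value, unit) pairs, in the same order as the keys.
    return {
        key: float(g[2 * i]) * UNIT_TO_MB[g[2 * i + 1]]
        for i, key in enumerate(keys)
    }


usage = parse_memory_usage(LOG_LINE)
print(usage)
print("within vmem limit:", usage["vmem_used_mb"] <= usage["vmem_limit_mb"])
```

The ratio of the parsed virtual limit to the physical limit is 4.1, matching the new yarn.nodemanager.vmem-pmem-ratio, while the container itself is still 1 GB.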