一, MHA 介绍

MHA(Master High Availability)目前在MySQL高可用方面是一个相对成熟的解决方案,它由日本DeNA公司youshimaton(现就职于Facebook公司)开发,是一套优秀的作为MySQL高可用性环境下故障切换和主从提升的高可用软件。在MySQL故障切换过程中,MHA能做到在0~30秒之内自动完成数据库的故障切换操作,并且在进行故障切换的过程中,MHA能在最大程度上保证数据的一致性,以达到真正意义上的高可用。

该软件由两部分组成:MHA Manager(管理节点)和MHA Node(数据节点)。MHA Manager可以单独部署在一台独立的机器上管理多个master-slave集群,也可以部署在一台slave节点上。MHA Node运行在每台MySQL服务器上,MHA Manager会定时探测集群中的master节点,当master出现故障时,它可以自动将最新数据的slave提升为新的master,然后将所有其他的slave重新指向新的master。整个故障转移过程对应用程序完全透明。

在MHA自动故障切换过程中,MHA试图从宕机的主服务器上保存二进制日志,最大程度的保证数据的不丢失,但这并不总是可行的。例如,如果主服务器硬件故障或无法通过ssh访问,MHA没法保存二进制日志,只进行故障转移而丢失了最新的数据。使用MySQL 5.5的半同步复制,可以大大降低数据丢失的风险。MHA可以与半同步复制结合起来。如果只有一个slave已经收到了最新的二进制日志,MHA可以将最新的二进制日志应用于其他所有的slave服务器上,因此可以保证所有节点的数据一致性。

目前MHA主要支持一主多从的架构,要搭建MHA,要求一个复制集群中必须最少有三台数据库服务器,一主二从,即一台充当master,一台充当备用master,另外一台充当从库,因为至少需要三台服务器,出于机器成本的考虑,淘宝也在该基础上进行了改造,目前淘宝TMHA已经支持一主一从。

二, MHA搭建前

MHA搭建前必须要完成一主多从的主从复制项目:

环境:

|

db01 |

主 | 10.0.0.51 |

| db02 | 从 | 10.0.0.52 |

| db03 | 从 | 10.0.0.53 |

三台节点配置:

vim /etc/my.cnf

[mysqld] basedir=/application/mysql datadir=/application/mysql/data socket=/tmp/mysql.sock log-error=/var/log/mysql.log log_bin=/data/mysql/mysql-bin binlog_format=row skip-name-resolve server-id=51 gtid-mode=on enforce-gtid-consistency=true log-slave-updates=1 [client] socket=/tmp/mysql.sock slave1:10.0.0.52 vim /etc/my.cnf [mysqld] basedir=/application/mysql datadir=/application/mysql/data socket=/tmp/mysql.sock log-error=/var/log/mysql.log log_bin=/data/mysql/mysql-bin binlog_format=row skip-name-resolve server-id=52 gtid-mode=on enforce-gtid-consistency=true log-slave-updates=1 [client] socket=/tmp/mysql.sock slave2:10.0.0.53 vim /etc/my.cnf [mysqld] basedir=/application/mysql datadir=/application/mysql/data socket=/tmp/mysql.sock log-error=/var/log/mysql.log log_bin=/data/mysql/mysql-bin binlog_format=row skip-name-resolve server-id=53 gtid-mode=on enforce-gtid-consistency=true log-slave-updates=1 [client] socket=/tmp/mysql.sock 3.重新初始化三台机器数据 /application/mysql/scripts/mysql_install_db --basedir=/application/mysql/ --datadir=/application/mysql/data --user=mysql 4. 分别启动三台数据库服务器 /etc/init.d/mysqld start

三. MHA部署开始

构建GTID:

51节点: grant replication slave on *.* to repl@'10.0.0.%' identified by '123'; 5253节点: change master to master_host='10.0.0.51',master_user='repl',master_password='123' ,MASTER_AUTO_POSITION=1; start slave;

密钥乱发:

主节点配值:

rm -rf /root/.ssh ssh-keygen cd /root/.ssh mv id_rsa.pub authorized_keys scp -r /root/.ssh 10.0.0.52 scp -r /root/.ssh 10.0.0.53

各个节点密钥完成无密码两两互联

51节点: ssh 10.0.0.51 date ssh 10.0.0.52 date ssh 10.0.0.53 date 52节点: ssh 10.0.0.51 date ssh 10.0.0.52 date ssh 10.0.0.53 date 53节点: ssh 10.0.0.51 date ssh 10.0.0.52 date ssh 10.0.0.53 date

数据库配置:

51节点 52节点 53节点配置 set global relay_log_purge = 0; 52节点 53节点配置 set global read_only=1;

服务器配置

ln -s /application/mysql/bin/mysqlbinlog /usr/bin/mysqlbinlog ln -s /application/mysql/bin/mysql /usr/bin/mysql

软件下载:

安装依赖包:

yum install perl-DBD-MySQL -y

安装node

所有节点

rpm -ivh mha4mysql-node-0.57-0.el7.noarch.rpm

主库创建MHA用户

grant all privileges on *.* to mha@'10.0.0.%' identified by 'mha';

53节点配置MHA

yum install -y perl-Config-Tiny epel-release perl-Log-Dispatch perl-Parallel-ForkManager perl-Time-HiRes

rpm -ivh mha4mysql-manager-0.57-0.el7.noarch.rpm

53节点配置文件配置

#创建配置文件目录 mkdir -p /etc/mha #创建日志目录 mkdir -p /var/log/mha/app1 #编辑mha配置文件

vim /etc/mha/app1.cnf [server default] manager_log=/var/log/mha/app1/manager manager_workdir=/var/log/mha/app1 master_binlog_dir=/data/mysql/ user=mha password=mha ping_interval=2 repl_password=123 repl_user=repl ssh_user=root [server1] hostname=10.0.0.51 port=3306 [server2] hostname=10.0.0.52 port=3306 [server3] hostname=10.0.0.53 port=3306

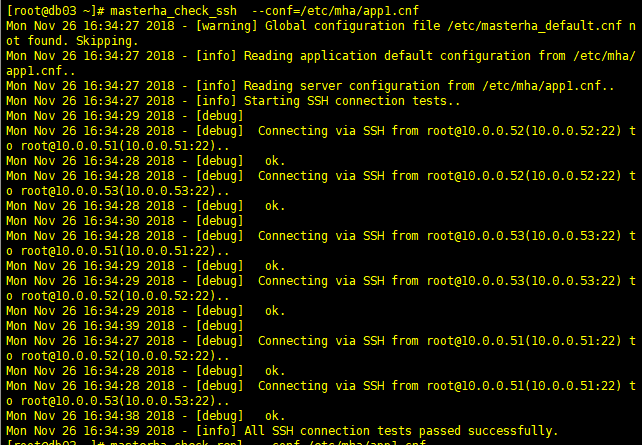

MHA 检查:

masterha_check_ssh --conf=/etc/mha/app1.cnf

全部ok为正常

主从状态:

masterha_check_repl --conf=/etc/mha/app1.cnf

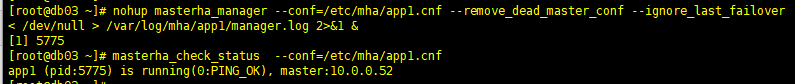

启动MHA

nohup masterha_manager --conf=/etc/mha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/mha/app1/manager.log 2>&1 &

四, 故障演练

某一天主库宕机了

pkill mysqld

同时MHA架构日志:

1 Mon Nov 26 17:31:10 2018 - [warning] Got error on MySQL select ping: 2006 (MySQL server has gone away) 2 Mon Nov 26 17:31:10 2018 - [info] Executing SSH check script: exit 0 3 Mon Nov 26 17:31:12 2018 - [warning] Got error on MySQL connect: 2003 (Can't connect to MySQL server on '10.0.0.51' (111)) 4 Mon Nov 26 17:31:12 2018 - [warning] Connection failed 2 time(s).. 5 Mon Nov 26 17:31:14 2018 - [warning] Got error on MySQL connect: 2003 (Can't connect to MySQL server on '10.0.0.51' (111)) 6 Mon Nov 26 17:31:14 2018 - [warning] Connection failed 3 time(s).. 7 Mon Nov 26 17:31:15 2018 - [warning] HealthCheck: Got timeout on checking SSH connection to 10.0.0.51! at /usr/share/perl5/vendor_perl/MHA/HealthCheck.pm line 342. 8 Mon Nov 26 17:31:16 2018 - [warning] Got error on MySQL connect: 2003 (Can't connect to MySQL server on '10.0.0.51' (111)) 9 Mon Nov 26 17:31:16 2018 - [warning] Connection failed 4 time(s).. 10 Mon Nov 26 17:31:16 2018 - [warning] Master is not reachable from health checker! 11 Mon Nov 26 17:31:16 2018 - [warning] Master 10.0.0.51(10.0.0.51:3306) is not reachable! 12 Mon Nov 26 17:31:16 2018 - [warning] SSH is NOT reachable. 13 Mon Nov 26 17:31:16 2018 - [info] Connecting to a master server failed. Reading configuration file /etc/masterha_default.cnf and /etc/mha/app1.cnf again, and trying to connect to all servers to check server status.. 14 Mon Nov 26 17:31:16 2018 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping. 15 Mon Nov 26 17:31:16 2018 - [info] Reading application default configuration from /etc/mha/app1.cnf.. 16 Mon Nov 26 17:31:16 2018 - [info] Reading server configuration from /etc/mha/app1.cnf.. 17 Mon Nov 26 17:31:17 2018 - [info] GTID failover mode = 1 18 Mon Nov 26 17:31:17 2018 - [info] Dead Servers: 19 Mon Nov 26 17:31:17 2018 - [info] 10.0.0.51(10.0.0.51:3306) 20 Mon Nov 26 17:31:17 2018 - [info] Alive Servers: 21 Mon Nov 26 17:31:17 2018 - [info] 10.0.0.52(10.0.0.52:3306) 22 Mon Nov 26 17:31:17 2018 - [info] 10.0.0.53(10.0.0.53:3306) 23 Mon Nov 26 17:31:17 2018 - [info] Alive Slaves: 24 Mon Nov 26 17:31:17 2018 - [info] 10.0.0.52(10.0.0.52:3306) Version=5.6.38-log (oldestmajor version between slaves) log-bin:enabled 25 Mon Nov 26 17:31:17 2018 - [info] GTID ON 26 Mon Nov 26 17:31:17 2018 - [info] Replicating from 10.0.0.51(10.0.0.51:3306) 27 Mon Nov 26 17:31:17 2018 - [info] 10.0.0.53(10.0.0.53:3306) Version=5.6.38-log (oldestmajor version between slaves) log-bin:enabled 28 Mon Nov 26 17:31:17 2018 - [info] GTID ON 29 Mon Nov 26 17:31:17 2018 - [info] Replicating from 10.0.0.51(10.0.0.51:3306) 30 Mon Nov 26 17:31:17 2018 - [info] Checking slave configurations.. 31 Mon Nov 26 17:31:17 2018 - [info] Checking replication filtering settings.. 32 Mon Nov 26 17:31:17 2018 - [info] Replication filtering check ok. 33 Mon Nov 26 17:31:17 2018 - [info] Master is down! 34 Mon Nov 26 17:31:17 2018 - [info] Terminating monitoring script. 35 Mon Nov 26 17:31:17 2018 - [info] Got exit code 20 (Master dead). 36 Mon Nov 26 17:31:17 2018 - [info] MHA::MasterFailover version 0.57. 37 Mon Nov 26 17:31:17 2018 - [info] Starting master failover. 38 Mon Nov 26 17:31:17 2018 - [info] 39 Mon Nov 26 17:31:17 2018 - [info] * Phase 1: Configuration Check Phase.. 40 Mon Nov 26 17:31:17 2018 - [info] 41 Mon Nov 26 17:31:18 2018 - [info] GTID failover mode = 1 42 Mon Nov 26 17:31:18 2018 - [info] Dead Servers: 43 Mon Nov 26 17:31:18 2018 - [info] 10.0.0.51(10.0.0.51:3306) 44 Mon Nov 26 17:31:18 2018 - [info] Checking master reachability via MySQL(double check)... 45 Mon Nov 26 17:31:18 2018 - [info] ok. 46 Mon Nov 26 17:31:18 2018 - [info] Alive Servers: 47 Mon Nov 26 17:31:18 2018 - [info] 10.0.0.52(10.0.0.52:3306) 48 Mon Nov 26 17:31:18 2018 - [info] 10.0.0.53(10.0.0.53:3306) 49 Mon Nov 26 17:31:18 2018 - [info] Alive Slaves: 50 Mon Nov 26 17:31:18 2018 - [info] 10.0.0.52(10.0.0.52:3306) Version=5.6.38-log (oldestmajor version between slaves) log-bin:enabled 51 Mon Nov 26 17:31:18 2018 - [info] GTID ON 52 Mon Nov 26 17:31:18 2018 - [info] Replicating from 10.0.0.51(10.0.0.51:3306) 53 Mon Nov 26 17:31:18 2018 - [info] 10.0.0.53(10.0.0.53:3306) Version=5.6.38-log (oldestmajor version between slaves) log-bin:enabled 54 Mon Nov 26 17:31:18 2018 - [info] GTID ON 55 Mon Nov 26 17:31:18 2018 - [info] Replicating from 10.0.0.51(10.0.0.51:3306) 56 Mon Nov 26 17:31:18 2018 - [info] Starting GTID based failover. 57 Mon Nov 26 17:31:18 2018 - [info] 58 Mon Nov 26 17:31:18 2018 - [info] ** Phase 1: Configuration Check Phase completed. 59 Mon Nov 26 17:31:18 2018 - [info] 60 Mon Nov 26 17:31:18 2018 - [info] * Phase 2: Dead Master Shutdown Phase.. 61 Mon Nov 26 17:31:18 2018 - [info] 62 Mon Nov 26 17:31:18 2018 - [info] Forcing shutdown so that applications never connect to the current master.. 63 Mon Nov 26 17:31:18 2018 - [warning] master_ip_failover_script is not set. Skipping invalidating dead master IP address. 64 Mon Nov 26 17:31:18 2018 - [warning] shutdown_script is not set. Skipping explicit shutting down of the dead master. 65 Mon Nov 26 17:31:19 2018 - [info] * Phase 2: Dead Master Shutdown Phase completed. 66 Mon Nov 26 17:31:19 2018 - [info] 67 Mon Nov 26 17:31:19 2018 - [info] * Phase 3: Master Recovery Phase.. 68 Mon Nov 26 17:31:19 2018 - [info] 69 Mon Nov 26 17:31:19 2018 - [info] * Phase 3.1: Getting Latest Slaves Phase.. 70 Mon Nov 26 17:31:19 2018 - [info] 71 Mon Nov 26 17:31:19 2018 - [info] The latest binary log file/position on all slaves is mysql-bin.000003:792 72 Mon Nov 26 17:31:19 2018 - [info] Retrieved Gtid Set: b54af3fb-f133-11e8-825d-00505628e54f:1-3 73 Mon Nov 26 17:31:19 2018 - [info] Latest slaves (Slaves that received relay log files to the latest): 74 Mon Nov 26 17:31:19 2018 - [info] 10.0.0.52(10.0.0.52:3306) Version=5.6.38-log (oldestmajor version between slaves) log-bin:enabled 75 Mon Nov 26 17:31:19 2018 - [info] GTID ON 76 Mon Nov 26 17:31:19 2018 - [info] Replicating from 10.0.0.51(10.0.0.51:3306) 77 Mon Nov 26 17:31:19 2018 - [info] 10.0.0.53(10.0.0.53:3306) Version=5.6.38-log (oldestmajor version between slaves) log-bin:enabled 78 Mon Nov 26 17:31:19 2018 - [info] GTID ON 79 Mon Nov 26 17:31:19 2018 - [info] Replicating from 10.0.0.51(10.0.0.51:3306) 80 Mon Nov 26 17:31:19 2018 - [info] The oldest binary log file/position on all slaves is mysql-bin.000003:792 81 Mon Nov 26 17:31:19 2018 - [info] Retrieved Gtid Set: b54af3fb-f133-11e8-825d-00505628e54f:1-3 82 Mon Nov 26 17:31:19 2018 - [info] Oldest slaves: 83 Mon Nov 26 17:31:19 2018 - [info] 10.0.0.52(10.0.0.52:3306) Version=5.6.38-log (oldestmajor version between slaves) log-bin:enabled 84 Mon Nov 26 17:31:19 2018 - [info] GTID ON 85 Mon Nov 26 17:31:19 2018 - [info] Replicating from 10.0.0.51(10.0.0.51:3306) 86 Mon Nov 26 17:31:19 2018 - [info] 10.0.0.53(10.0.0.53:3306) Version=5.6.38-log (oldestmajor version between slaves) log-bin:enabled 87 Mon Nov 26 17:31:19 2018 - [info] GTID ON 88 Mon Nov 26 17:31:19 2018 - [info] Replicating from 10.0.0.51(10.0.0.51:3306) 89 Mon Nov 26 17:31:19 2018 - [info] 90 Mon Nov 26 17:31:19 2018 - [info] * Phase 3.3: Determining New Master Phase.. 91 Mon Nov 26 17:31:19 2018 - [info] 92 Mon Nov 26 17:31:19 2018 - [info] Searching new master from slaves.. 93 Mon Nov 26 17:31:19 2018 - [info] Candidate masters from the configuration file: 94 Mon Nov 26 17:31:19 2018 - [info] Non-candidate masters: 95 Mon Nov 26 17:31:19 2018 - [info] New master is 10.0.0.52(10.0.0.52:3306) 96 Mon Nov 26 17:31:19 2018 - [info] Starting master failover.. 97 Mon Nov 26 17:31:19 2018 - [info] 98 From: 99 10.0.0.51(10.0.0.51:3306) (current master) 100 +--10.0.0.52(10.0.0.52:3306) 101 +--10.0.0.53(10.0.0.53:3306) 102 103 To: 104 10.0.0.52(10.0.0.52:3306) (new master) 105 +--10.0.0.53(10.0.0.53:3306) 106 Mon Nov 26 17:31:19 2018 - [info] 107 Mon Nov 26 17:31:19 2018 - [info] * Phase 3.3: New Master Recovery Phase.. 108 Mon Nov 26 17:31:19 2018 - [info] 109 Mon Nov 26 17:31:19 2018 - [info] Waiting all logs to be applied.. 110 Mon Nov 26 17:31:19 2018 - [info] done. 111 Mon Nov 26 17:31:19 2018 - [info] Getting new master's binlog name and position.. 112 Mon Nov 26 17:31:19 2018 - [info] mysql-bin.000003:792 113 Mon Nov 26 17:31:19 2018 - [info] All other slaves should start replication from here. Statement should be: CHANGE MASTER TO MASTER_HOST='10.0.0.52', MASTER_PORT=3306, MASTER_AUTO_POSITION=1, MASTER_USER='repl', MASTER_PASSWORD='xxx'; 114 Mon Nov 26 17:31:19 2018 - [info] Master Recovery succeeded. File:Pos:Exec_Gtid_Set: mysql-bin.000003, 792, b54af3fb-f133-11e8-825d-00505628e54f:1-3 115 Mon Nov 26 17:31:19 2018 - [warning] master_ip_failover_script is not set. Skipping taking over new master IP address. 116 Mon Nov 26 17:31:19 2018 - [info] Setting read_only=0 on 10.0.0.52(10.0.0.52:3306).. 117 Mon Nov 26 17:31:19 2018 - [info] ok. 118 Mon Nov 26 17:31:19 2018 - [info] ** Finished master recovery successfully. 119 Mon Nov 26 17:31:19 2018 - [info] * Phase 3: Master Recovery Phase completed. 120 Mon Nov 26 17:31:19 2018 - [info] 121 Mon Nov 26 17:31:19 2018 - [info] * Phase 4: Slaves Recovery Phase.. 122 Mon Nov 26 17:31:19 2018 - [info] 123 Mon Nov 26 17:31:19 2018 - [info] 124 Mon Nov 26 17:31:19 2018 - [info] * Phase 4.1: Starting Slaves in parallel.. 125 Mon Nov 26 17:31:19 2018 - [info] 126 Mon Nov 26 17:31:19 2018 - [info] -- Slave recovery on host 10.0.0.53(10.0.0.53:3306) started, pid: 5710. Check tmp log /var/log/mha/app1/10.0.0.53_3306_20181126173117.log if it takes time.. 127 Mon Nov 26 17:31:21 2018 - [info] 128 Mon Nov 26 17:31:21 2018 - [info] Log messages from 10.0.0.53 ... 129 Mon Nov 26 17:31:21 2018 - [info] 130 Mon Nov 26 17:31:19 2018 - [info] Resetting slave 10.0.0.53(10.0.0.53:3306) and startingreplication from the new master 10.0.0.52(10.0.0.52:3306).. 131 Mon Nov 26 17:31:19 2018 - [info] Executed CHANGE MASTER. 132 Mon Nov 26 17:31:21 2018 - [info] Slave started. 133 Mon Nov 26 17:31:21 2018 - [info] gtid_wait(b54af3fb-f133-11e8-825d-00505628e54f:1-3) completed on 10.0.0.53(10.0.0.53:3306). Executed 0 events. 134 Mon Nov 26 17:31:21 2018 - [info] End of log messages from 10.0.0.53. 135 Mon Nov 26 17:31:21 2018 - [info] -- Slave on host 10.0.0.53(10.0.0.53:3306) started. 136 Mon Nov 26 17:31:21 2018 - [info] All new slave servers recovered successfully. 137 Mon Nov 26 17:31:21 2018 - [info] 138 Mon Nov 26 17:31:21 2018 - [info] * Phase 5: New master cleanup phase.. 139 Mon Nov 26 17:31:21 2018 - [info] 140 Mon Nov 26 17:31:21 2018 - [info] Resetting slave info on the new master.. 141 Mon Nov 26 17:31:21 2018 - [info] 10.0.0.52: Resetting slave info succeeded. 142 Mon Nov 26 17:31:21 2018 - [info] Master failover to 10.0.0.52(10.0.0.52:3306) completed successfully. 143 Mon Nov 26 17:31:21 2018 - [info] Deleted server1 entry from /etc/mha/app1.cnf . 144 Mon Nov 26 17:31:21 2018 - [info] 145 146 ----- Failover Report ----- 147 148 app1: MySQL Master failover 10.0.0.51(10.0.0.51:3306) to 10.0.0.52(10.0.0.52:3306) succeeded 149 150 Master 10.0.0.51(10.0.0.51:3306) is down! 151 152 Check MHA Manager logs at db03:/var/log/mha/app1/manager for details. 153 154 Started automated(non-interactive) failover. 155 Selected 10.0.0.52(10.0.0.52:3306) as a new master. 156 10.0.0.52(10.0.0.52:3306): OK: Applying all logs succeeded. 157 10.0.0.53(10.0.0.53:3306): OK: Slave started, replicating from 10.0.0.52(10.0.0.52:3306) 158 10.0.0.52(10.0.0.52:3306): Resetting slave info succeeded. 159 Master failover to 10.0.0.52(10.0.0.52:3306) completed successfully.

可以发现主库实现漂移自动转到53节点

恢复机器:

再MHA的日志这里可以看见他说明了要恢复服务器的内容

而且

vim /etc/mha/app1.cnf

配置文件中不需要的服务器自动删除

重新启动服务器的话需要把配置文件重新配置

1 [server default] 2 manager_log=/var/log/mha/app1/manager 3 manager_workdir=/var/log/mha/app1 4 master_binlog_dir=/data/mysql/ 5 password=mha 6 ping_interval=2 7 repl_password=123 8 repl_user=repl 9 ssh_user=root 10 user=mha 11 12 [server1] 13 hostname=10.0.0.51 14 port=3306 15 16 17 [server2] 18 hostname=10.0.0.52 19 port=3306 20 21 [server3] 22 hostname=10.0.0.53 23 port=3306

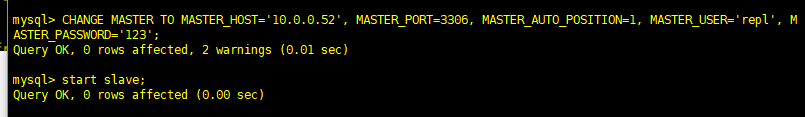

再宕机的服务器重新配置slave

CHANGE MASTER TO MASTER_HOST='10.0.0.52', MASTER_PORT=3306, MASTER_AUTO_POSITION=1, MASTER_USER='repl', MASTER_PASSWORD='123';

start slave;

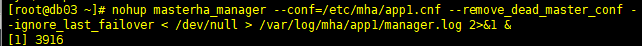

MHA重新启动

重新检测:

[root@db03 ~]# masterha_check_ssh --conf=/etc/mha/app1.cnf Mon Nov 26 17:54:15 2018 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping. Mon Nov 26 17:54:15 2018 - [info] Reading application default configuration from /etc/mha/app1.cnf.. Mon Nov 26 17:54:15 2018 - [info] Reading server configuration from /etc/mha/app1.cnf.. Mon Nov 26 17:54:15 2018 - [info] Starting SSH connection tests.. Mon Nov 26 17:54:16 2018 - [debug] Mon Nov 26 17:54:15 2018 - [debug] Connecting via SSH from root@10.0.0.51(10.0.0.51:22) to root@10.0.0.52(10.0.0.52:22).. Mon Nov 26 17:54:15 2018 - [debug] ok. Mon Nov 26 17:54:15 2018 - [debug] Connecting via SSH from root@10.0.0.51(10.0.0.51:22) to root@10.0.0.53(10.0.0.53:22).. Mon Nov 26 17:54:16 2018 - [debug] ok. Mon Nov 26 17:54:16 2018 - [debug] Mon Nov 26 17:54:15 2018 - [debug] Connecting via SSH from root@10.0.0.52(10.0.0.52:22) to root@10.0.0.51(10.0.0.51:22).. Mon Nov 26 17:54:16 2018 - [debug] ok. Mon Nov 26 17:54:16 2018 - [debug] Connecting via SSH from root@10.0.0.52(10.0.0.52:22) to root@10.0.0.53(10.0.0.53:22).. Mon Nov 26 17:54:16 2018 - [debug] ok. Mon Nov 26 17:54:17 2018 - [debug] Mon Nov 26 17:54:16 2018 - [debug] Connecting via SSH from root@10.0.0.53(10.0.0.53:22) to root@10.0.0.51(10.0.0.51:22).. Mon Nov 26 17:54:16 2018 - [debug] ok. Mon Nov 26 17:54:16 2018 - [debug] Connecting via SSH from root@10.0.0.53(10.0.0.53:22) to root@10.0.0.52(10.0.0.52:22).. Mon Nov 26 17:54:17 2018 - [debug] ok. Mon Nov 26 17:54:17 2018 - [info] All SSH connection tests passed successfully. [root@db03 ~]#

nohup masterha_manager --conf=/etc/mha/app1.cnf --remove_dead_master_conf --ignore_last_failover< /dev/null > /var/log/mha/app1/manager.log 2>&1 &